Pantelis Dogoulis

Constraint-Guided Prediction Refinement via Deterministic Diffusion Trajectories

Jun 15, 2025

Abstract:Many real-world machine learning tasks require outputs that satisfy hard constraints, such as physical conservation laws, structured dependencies in graphs, or column-level relationships in tabular data. Existing approaches rely either on domain-specific architectures and losses or on strong assumptions on the constraint space, restricting their applicability to linear or convex constraints. We propose a general-purpose framework for constraint-aware refinement that leverages denoising diffusion implicit models (DDIMs). Starting from a coarse prediction, our method iteratively refines it through a deterministic diffusion trajectory guided by a learned prior and augmented by constraint gradient corrections. The approach accommodates a wide class of non-convex and nonlinear equality constraints and can be applied post hoc to any base model. We demonstrate the method in two representative domains: constrained adversarial attack generation on tabular data with column-level dependencies, and AC power flow prediction under Kirchhoff's laws. Across both settings, our diffusion-guided refinement improves both constraint satisfaction and performance while remaining lightweight and model-agnostic.
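As an illustration of the constraint gradient correction mentioned in the abstract, the following minimal sketch applies gradient steps on the squared residual of one nonlinear equality constraint. It isolates the correction only: the DDIM trajectory and the learned prior are omitted. The constraint `total = price * quantity`, the function names, the step size, and the starting values are all hypothetical and are not taken from the paper.

```python
def constraint_residual(x):
    """Residual c(x) of the hypothetical column constraint price * quantity = total."""
    price, quantity, total = x
    return price * quantity - total


def constraint_gradient(x):
    """Gradient of c(x) with respect to (price, quantity, total)."""
    price, quantity, _ = x
    return [quantity, price, -1.0]


def correct_towards_constraint(x, step_size=0.05, num_steps=100):
    """Gradient descent on 0.5 * c(x)**2, pulling x towards the set c(x) = 0."""
    x = list(x)
    for _ in range(num_steps):
        residual = constraint_residual(x)
        gradient = constraint_gradient(x)
        # d/dx [0.5 * c(x)^2] = c(x) * grad c(x)
        x = [xi - step_size * residual * gi for xi, gi in zip(x, gradient)]
    return x


# Hypothetical coarse prediction that violates the constraint (2 * 3 != 5.5).
coarse = [2.0, 3.0, 5.5]
refined = correct_towards_constraint(coarse)
initial_violation = abs(constraint_residual(coarse))
final_violation = abs(constraint_residual(refined))
```

In a full refinement loop, a correction of this kind would presumably be interleaved with the deterministic denoising updates rather than run on its own.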

KCLNet: Physics-Informed Power Flow Prediction via Constraints Projections

Jun 15, 2025

Abstract:In the modern context of power systems, rapid, scalable, and physically plausible power flow predictions are essential for ensuring the grid's safe and efficient operation. While traditional numerical methods have proven robust, they require extensive computation to maintain physical fidelity under dynamic or contingency conditions. In contrast, recent advancements in artificial intelligence (AI) have significantly improved computational speed; however, they often fail to enforce fundamental physical laws during real-world contingencies, resulting in physically implausible predictions. In this work, we introduce KCLNet, a physics-informed graph neural network that incorporates Kirchhoff's Current Law as a hard constraint via hyperplane projections. KCLNet attains competitive prediction accuracy while ensuring zero KCL violations, thereby delivering reliable and physically consistent power flow predictions critical to secure the operation of modern smart grids.
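To make the idea of a hyperplane projection concrete, here is a minimal sketch of the standard orthogonal projection onto a single hyperplane, used to enforce Kirchhoff's Current Law at one bus (the currents entering the bus sum to zero). It is not KCLNet's implementation: the function name, the single-bus setup, and the numeric values are assumptions made for illustration.

```python
def project_onto_hyperplane(x, a, b=0.0):
    """Orthogonal projection of x onto the hyperplane {z : a . z = b}."""
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq == 0.0:
        raise ValueError("the normal vector a must be non-zero")
    # Signed violation of the constraint at x.
    residual = sum(ai * xi for ai, xi in zip(a, x)) - b
    scale = residual / norm_sq
    # Move x along the normal direction until the constraint holds.
    return [xi - scale * ai for ai, xi in zip(a, x)]


# Hypothetical predicted currents entering one bus; they sum to 0.3, violating KCL.
predicted = [1.2, -0.5, -0.4]
# KCL at this bus: 1 * i_1 + 1 * i_2 + 1 * i_3 = 0.
normal = [1.0, 1.0, 1.0]
corrected = project_onto_hyperplane(predicted, normal)
kcl_residual = sum(corrected)
```

With an all-ones normal vector and `b = 0`, the projection reduces to subtracting the mean violation from every current, which is the smallest change in the Euclidean sense that restores the balance.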

Robustness Analysis of AI Models in Critical Energy Systems

Jun 20, 2024

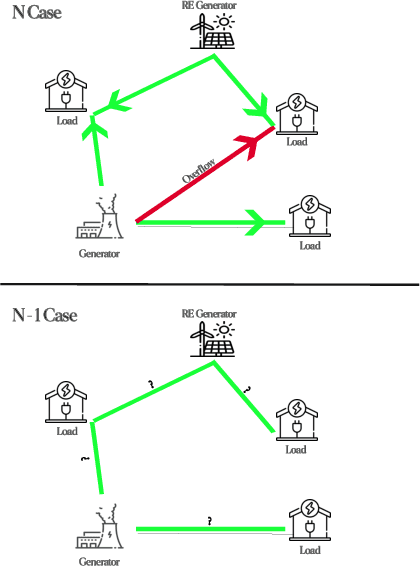

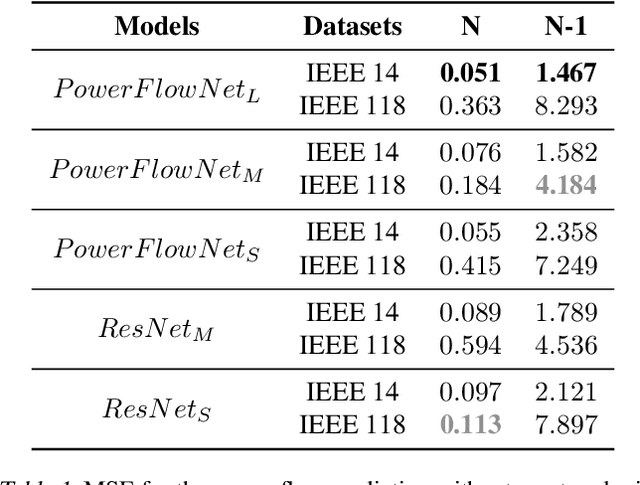

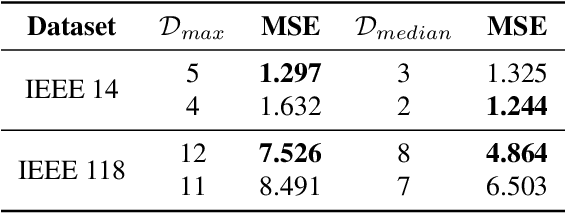

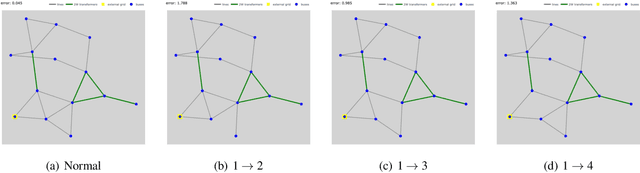

Abstract:This paper analyzes the robustness of state-of-the-art AI-based models for power grid operations under the $N-1$ security criterion. While these models perform well in regular grid settings, our results highlight a significant loss in accuracy following the disconnection of a line. Using graph theory-based analysis, we demonstrate the impact of node connectivity on this loss. Our findings emphasize the need for practical scenario considerations in developing AI methodologies for critical infrastructure.
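The $N-1$ setting can be sketched as follows: remove each line in turn and check whether the remaining grid is still connected. This is only an illustration of enumerating single-line outages on a graph, not the paper's analysis. The four-bus grid and all function names are hypothetical.

```python
from collections import deque


def is_connected(nodes, edges):
    """Breadth-first search check that every node is reachable from the first one."""
    adjacency = {node: set() for node in nodes}
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)
    start = next(iter(nodes))
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in adjacency[current] - seen:
            seen.add(neighbor)
            queue.append(neighbor)
    return len(seen) == len(nodes)


def n_minus_1_outages(nodes, lines):
    """Map each single-line outage to whether the grid stays connected without it."""
    report = {}
    for outage in lines:
        remaining = [line for line in lines if line != outage]
        report[outage] = is_connected(nodes, remaining)
    return report


# Hypothetical grid: a triangle A-B-C with bus D attached to C by a single line.
buses = ["A", "B", "C", "D"]
lines = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "D")]
report = n_minus_1_outages(buses, lines)
```

In this toy grid, losing any line of the triangle leaves all buses connected, while losing the line to the degree-one bus `D` islands it, a simple instance of how node connectivity shapes the effect of an outage.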

Improving Synthetically Generated Image Detection in Cross-Concept Settings

Apr 24, 2023

Abstract:New advancements for the detection of synthetic images are critical for fighting disinformation, as the capabilities of generative AI models continuously evolve and can lead to hyper-realistic synthetic imagery at unprecedented scale and speed. In this paper, we focus on the challenge of generalizing across different concept classes, e.g., when training a detector on human faces and testing on synthetic animal images - highlighting the ineffectiveness of existing approaches that randomly sample generated images to train their models. By contrast, we propose an approach based on the premise that the robustness of the detector can be enhanced by training it on realistic synthetic images that are selected based on their quality scores according to a probabilistic quality estimation model. We demonstrate the effectiveness of the proposed approach by conducting experiments with generated images from two seminal architectures, StyleGAN2 and Latent Diffusion, and using three different concepts for each, so as to measure the cross-concept generalization ability. Our results show that our quality-based sampling method leads to higher detection performance for nearly all concepts, improving the overall effectiveness of the synthetic image detectors.

A convolutional neural network of low complexity for tumor anomaly detection

Jan 25, 2023

Abstract:The automated detection of cancerous tumors has attracted interest mainly during the last decade, due to the necessity of early and efficient diagnosis that will lead to the most effective possible treatment of the impending risk. Several machine learning and artificial intelligence methodologies have been employed, aiming to provide trustworthy supporting tools that will contribute efficiently to this effort. In this article, we present a low-complexity convolutional neural network architecture for tumor classification enhanced by a robust image augmentation methodology. The effectiveness of the presented deep learning model has been investigated based on 3 datasets containing brain, kidney and lung images, showing remarkable diagnostic efficiency with classification accuracies of 99.33%, 100% and 99.7% for the 3 datasets respectively. The impact of the augmentation preprocessing step has also been extensively examined using 4 evaluation measures. The proposed low-complexity scheme, in contrast to other models in the literature, renders our model quite robust to cases of overfitting that typically accompany small datasets frequently encountered in medical classification challenges. Finally, the model can be easily re-trained in case additional volume images are included, as its simple architecture does not impose a significant computational burden.
