Pablo Munoz

SuperSAM: Crafting a SAM Supernetwork via Structured Pruning and Unstructured Parameter Prioritization

Jan 15, 2025

Abstract: Neural Architecture Search (NAS) is a powerful approach to automating the design of efficient neural architectures. In contrast to traditional NAS methods, recently proposed one-shot NAS methods perform the search more efficiently. One-shot NAS works by generating a single weight-sharing supernetwork that acts as a search space (container) of subnetworks. Despite its achievements, designing the one-shot search space remains a major challenge. In this work, we propose a search space design strategy for Vision Transformer (ViT)-based architectures. In particular, we convert the Segment Anything Model (SAM) into a weight-sharing supernetwork called SuperSAM. Our approach automates the search space design via layer-wise structured pruning and parameter prioritization. While the structured pruning probabilistically removes certain transformer layers, parameter prioritization reorders the weights of the MLP blocks in the remaining layers and slices them. We train supernetworks on several datasets using the sandwich rule. For deployment, we enhance subnetwork discovery by using a program autotuner to identify efficient subnetworks within the search space. The resulting subnetworks are 30-70% smaller than the original pre-trained SAM ViT-B, yet outperform the pre-trained model. Our work introduces a new and effective method for ViT NAS search-space design.
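The weight reordering and slicing of MLP blocks mentioned in the abstract can be illustrated with a small sketch. This is not the SuperSAM implementation: the L1-norm importance score and the helper names `prioritize` and `slice_mlp` are assumptions made for illustration. It shows that reordering hidden units consistently leaves the full MLP's output unchanged, so that narrower subnetworks can then be taken as leading slices.

```python
# Sketch only: the L1-norm importance score and these helper names are
# assumptions, not the scoring rule or API used by SuperSAM.

def relu(x):
    return x if x > 0.0 else 0.0

def mlp_forward(w1, b1, w2, b2, x):
    """Two-layer MLP, y = W2 * relu(W1 * x + b1) + b2, on plain lists."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(w2, b2)]

def prioritize(w1, b1, w2):
    """Reorder hidden units so the highest-scoring ones come first.

    The score is the L1 norm of each unit's incoming weights (assumed).
    Rows of W1, entries of b1, and columns of W2 are permuted together,
    so the full network computes the same function as before.
    """
    scores = [sum(abs(w) for w in row) for row in w1]
    order = sorted(range(len(w1)), key=lambda i: scores[i], reverse=True)
    w1_sorted = [w1[i] for i in order]
    b1_sorted = [b1[i] for i in order]
    w2_sorted = [[row[i] for i in order] for row in w2]
    return w1_sorted, b1_sorted, w2_sorted

def slice_mlp(w1, b1, w2, width):
    """Keep only the first `width` hidden units (a narrower subnetwork)."""
    return w1[:width], b1[:width], [row[:width] for row in w2]

# Toy MLP: 2 inputs, 3 hidden units, 1 output.
w1 = [[0.1, -0.2], [1.5, 2.0], [-0.7, 0.3]]
b1 = [0.0, 0.1, -0.1]
w2 = [[1.0, -1.0, 0.5]]
b2 = [0.2]
x = [1.0, 2.0]

w1_s, b1_s, w2_s = prioritize(w1, b1, w2)
full_before = mlp_forward(w1, b1, w2, b2, x)
full_after = mlp_forward(w1_s, b1_s, w2_s, b2, x)

# A subnetwork that keeps the two highest-priority hidden units.
w1_h, b1_h, w2_h = slice_mlp(w1_s, b1_s, w2_s, width=2)
sub_out = mlp_forward(w1_h, b1_h, w2_h, b2, x)
```

Because the ordering is fixed once, every subnetwork width corresponds to a prefix of the same weight tensors, which is what makes weight sharing across subnetworks straightforward in this sketch.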

LEFL: Low Entropy Client Sampling in Federated Learning

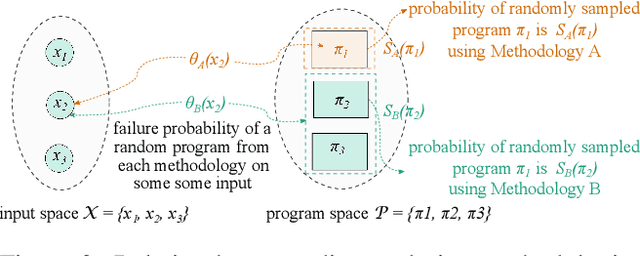

Dec 29, 2023

Abstract: Federated learning (FL) is a machine learning paradigm where multiple clients collaborate to optimize a single global model using their private data. The global model is maintained by a central server that orchestrates the FL training process through a series of training rounds. In each round, the server samples clients from a client pool before sending them its latest global model parameters for further optimization. Naive sampling strategies implement random client sampling and, for privacy reasons, fail to factor in client data distributions. Hence, we propose an alternative sampling strategy that performs a one-time clustering of clients based on their model's learned high-level features while respecting data privacy. This enables the server to perform stratified client sampling across clusters in every round. We show that the datasets of clients sampled with this approach yield a low relative entropy with respect to the global data distribution. Consequently, FL training becomes less noisy, and the convergence of the global model improves significantly, by as much as 7.4% in some experiments. Furthermore, the approach significantly reduces the number of communication rounds required to achieve a target accuracy.
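As a rough illustration of stratified client sampling and the relative-entropy measure described above, consider the following sketch. It is not the LEFL code: the cluster assignments are taken as given (the paper derives them from learned features), and the proportional-quota rule and all function names are assumptions.

```python
import math
import random
from collections import Counter, defaultdict

def stratified_sample(cluster_of, num_clients, rng):
    """Pick clients cluster by cluster, roughly in proportion to cluster size.

    Quotas are rounded, so the total may differ slightly from num_clients.
    """
    clusters = defaultdict(list)
    for client, cluster in cluster_of.items():
        clusters[cluster].append(client)
    total = len(cluster_of)
    chosen = []
    for cluster in sorted(clusters):
        members = sorted(clusters[cluster])
        quota = max(1, round(num_clients * len(members) / total))
        chosen.extend(rng.sample(members, min(quota, len(members))))
    return chosen

def label_distribution(clients, labels_of, num_classes):
    """Empirical label distribution over the pooled data of `clients`."""
    counts = Counter(label for c in clients for label in labels_of[c])
    n = sum(counts.values())
    return [counts[k] / n for k in range(num_classes)]

def relative_entropy(p, q, eps=1e-12):
    """KL(p || q) in nats; eps guards against division by zero."""
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)

# Toy setup (hypothetical): two clusters with opposite label skew.
cluster_of = {0: 0, 1: 0, 2: 1, 3: 1}
labels_of = {0: [0, 0], 1: [0, 0], 2: [1, 1], 3: [1, 1]}

rng = random.Random(0)
chosen = stratified_sample(cluster_of, num_clients=2, rng=rng)
global_dist = label_distribution(list(cluster_of), labels_of, num_classes=2)
sampled_dist = label_distribution(chosen, labels_of, num_classes=2)
kl = relative_entropy(sampled_dist, global_dist)
```

In this toy case, one client is drawn from each cluster, so the sampled label distribution matches the global one and the relative entropy is zero. Purely random sampling could instead draw both clients from the same cluster.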

Exploring Resiliency to Natural Image Corruptions in Deep Learning using Design Diversity

Mar 15, 2023

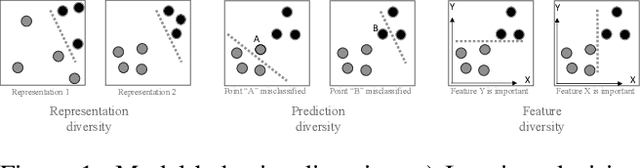

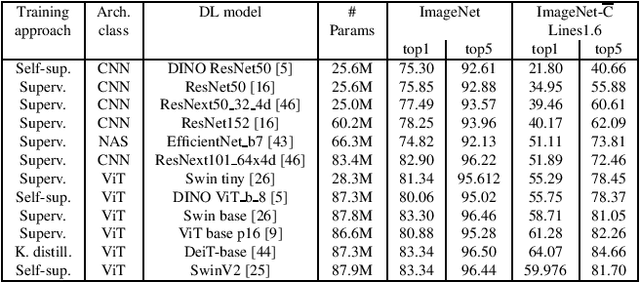

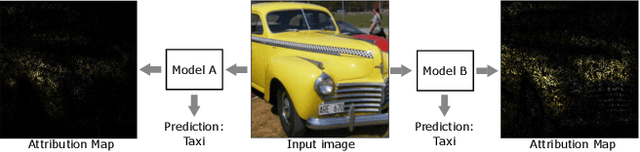

Abstract: In this paper, we investigate the relationship between diversity metrics, accuracy, and resiliency to natural image corruptions of Deep Learning (DL) image classifier ensembles. We investigate the potential of an attribution-based diversity metric to improve the known accuracy-diversity trade-off of the typical prediction-based diversity. Our motivation is based on analytical studies of design diversity, which have shown that common failure modes can be reduced if diversity of design choices is achieved. Using ResNet50 as a comparison baseline, we evaluate the resiliency of multiple individual DL model architectures against dataset distribution shifts corresponding to natural image corruptions. We compare ensembles created with diverse model architectures trained either independently or through a Neural Architecture Search technique and evaluate the correlation of prediction-based and attribution-based diversity to the final ensemble accuracy. We evaluate a set of diversity enforcement heuristics based on negative correlation learning to assess the final ensemble resilience to natural image corruptions and inspect the resulting prediction, activation, and attribution diversity. Our key observations are: 1) model architecture is more important for resiliency than model size or model accuracy, 2) attribution-based diversity is less negatively correlated to the ensemble accuracy than prediction-based diversity, 3) a balanced loss function of individual and ensemble accuracy creates more resilient ensembles for natural image corruptions, 4) architecture diversity produces more diversity in all explored diversity metrics: predictions, attributions, and activations.
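The abstract does not define its prediction-based diversity metric. As one common choice, and purely as an assumption, the sketch below uses mean pairwise disagreement between ensemble members, alongside a simple majority vote for the ensemble prediction. The function names and toy predictions are illustrative.

```python
from collections import Counter
from itertools import combinations

def disagreement(preds_a, preds_b):
    """Fraction of samples on which two models predict different labels."""
    return sum(a != b for a, b in zip(preds_a, preds_b)) / len(preds_a)

def ensemble_diversity(all_preds):
    """Mean pairwise disagreement over all pairs of ensemble members."""
    pairs = list(combinations(all_preds, 2))
    return sum(disagreement(a, b) for a, b in pairs) / len(pairs)

def majority_vote(all_preds):
    """Per-sample most frequent label across ensemble members."""
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*all_preds)]

# Toy predictions from three hypothetical models on four samples.
all_preds = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [1, 1, 1, 0],
]
labels = [0, 1, 1, 0]

diversity = ensemble_diversity(all_preds)
ensemble_preds = majority_vote(all_preds)
accuracy = sum(p == y for p, y in zip(ensemble_preds, labels)) / len(labels)
```

Here the members disagree on some samples, yet the majority vote recovers every label, which is the kind of error decorrelation that design diversity aims for.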

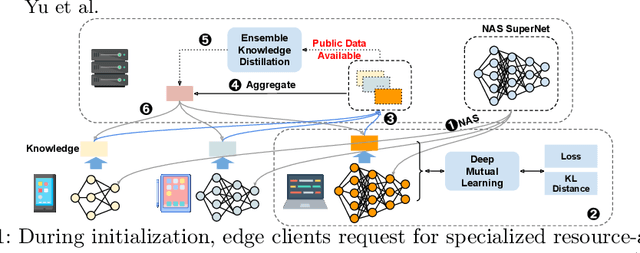

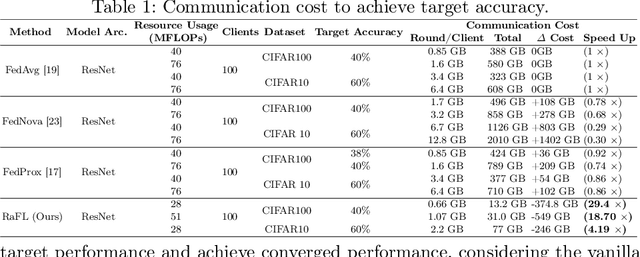

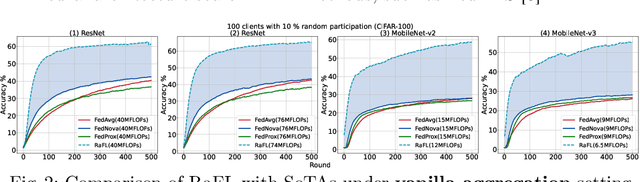

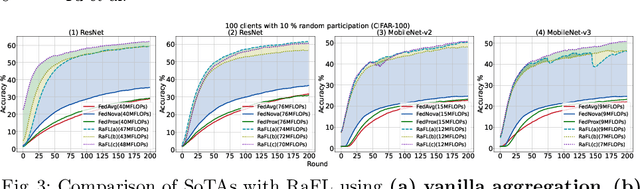

Resource-Aware Heterogeneous Federated Learning using Neural Architecture Search

Nov 09, 2022

Abstract: Federated Learning (FL) is extensively used to train AI/ML models in distributed and privacy-preserving settings. Participant edge devices in FL systems typically contain non-independent and identically distributed (Non-IID) private data and unevenly distributed computational resources. Preserving user data privacy while optimizing AI/ML models in a heterogeneous federated network requires us to address data heterogeneity and system/resource heterogeneity. Hence, we propose Resource-aware Federated Learning (RaFL) to address these challenges. RaFL allocates resource-aware models to edge devices using Neural Architecture Search (NAS) and allows heterogeneous model architecture deployment by knowledge extraction and fusion. Integrating NAS into FL enables on-demand customized model deployment for resource-diverse edge devices. Furthermore, we propose a multi-model architecture fusion scheme allowing the aggregation of the distributed learning results. Results demonstrate RaFL's superior resource efficiency compared to SoTA.
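The idea of allocating resource-aware models to devices can be sketched as a simple selection step. This is not RaFL's NAS procedure: the candidate list, the parameter-count budget, the validation-accuracy field, and the `allocate` helper are all hypothetical and stand in for whatever a search would produce.

```python
def allocate(candidates, budgets):
    """Give each device the most accurate candidate that fits its budget.

    `candidates` holds dicts with a size in millions of parameters and an
    estimated validation accuracy; `budgets` maps device name to the largest
    size it can host. A device with no feasible candidate maps to None.
    """
    allocation = {}
    for device, budget in budgets.items():
        feasible = [c for c in candidates if c["params_m"] <= budget]
        if feasible:
            best = max(feasible, key=lambda c: c["val_acc"])
            allocation[device] = best["name"]
        else:
            allocation[device] = None
    return allocation

# Hypothetical candidate architectures and device budgets.
candidates = [
    {"name": "small", "params_m": 1.0, "val_acc": 0.80},
    {"name": "medium", "params_m": 5.0, "val_acc": 0.88},
    {"name": "large", "params_m": 20.0, "val_acc": 0.92},
]
budgets = {"phone": 6.0, "sensor": 0.5, "server": 50.0}

allocation = allocate(candidates, budgets)
```

Devices end up with different architectures, which is why a scheme for fusing knowledge across heterogeneous models is needed before their results can be aggregated.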
