Pablo Aragón

Characterizing Knowledge Manipulation in a Russian Wikipedia Fork

Apr 14, 2025

Abstract: Wikipedia is powered by MediaWiki, free and open-source software that also serves as the infrastructure for many other wiki-based online encyclopedias. These include the recently launched website Ruwiki, which has copied and modified the original Russian Wikipedia content to conform to Russian law. To identify practices and narratives that could be associated with different forms of knowledge manipulation, this article presents an in-depth analysis of this Russian Wikipedia fork. We propose a methodology to characterize the main changes with respect to the original version. The foundation of this study is a comprehensive comparative analysis of more than 1.9M articles from Russian Wikipedia and its fork. Using meta-information and geographical, temporal, categorical, and textual features, we explore the changes made by Ruwiki editors. Furthermore, we present a classification of the main topics of knowledge manipulation in this fork, including a numerical estimation of their scope. This research not only sheds light on significant changes within Ruwiki, but also provides a methodology that could be applied to analyze other Wikipedia forks and similar collaborative projects.

Language-Agnostic Modeling of Source Reliability on Wikipedia

Oct 24, 2024

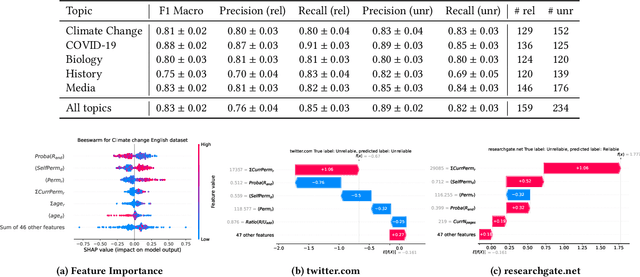

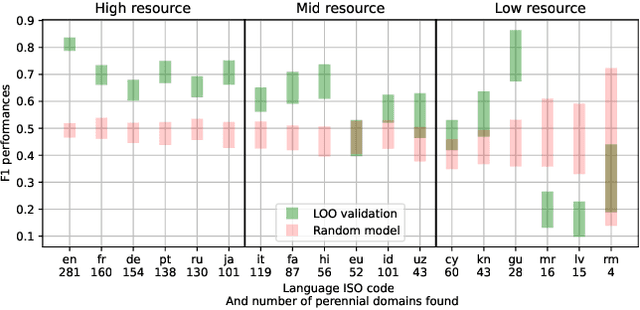

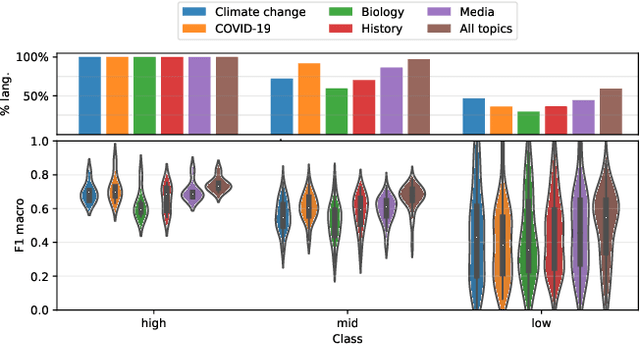

Abstract: Over the last few years, content verification through reliable sources has become a fundamental need to combat disinformation. Here, we present a language-agnostic model designed to assess the reliability of sources across multiple language editions of Wikipedia. Utilizing editorial activity data, the model evaluates source reliability across articles on topics of varying controversiality, such as Climate Change, COVID-19, History, Media, and Biology. Crafting features that express domain usage across articles, the model effectively predicts source reliability, achieving an F1 Macro score of approximately 0.80 for English and other high-resource languages. For mid-resource languages, we achieve 0.65, while the performance for low-resource languages varies; in all cases, the time the domain remains present in the articles (which we dub permanence) is one of the most predictive features. We highlight the challenge of maintaining consistent model performance across languages of varying resource levels and demonstrate that adapting models from higher-resource languages can improve performance. This work contributes not only to Wikipedia's efforts in ensuring content verifiability but also to ensuring reliability across diverse user-generated content in various language communities.
