Osher Azulay

Embodiment-Agnostic Navigation Policy Trained with Visual Demonstrations

Dec 28, 2024

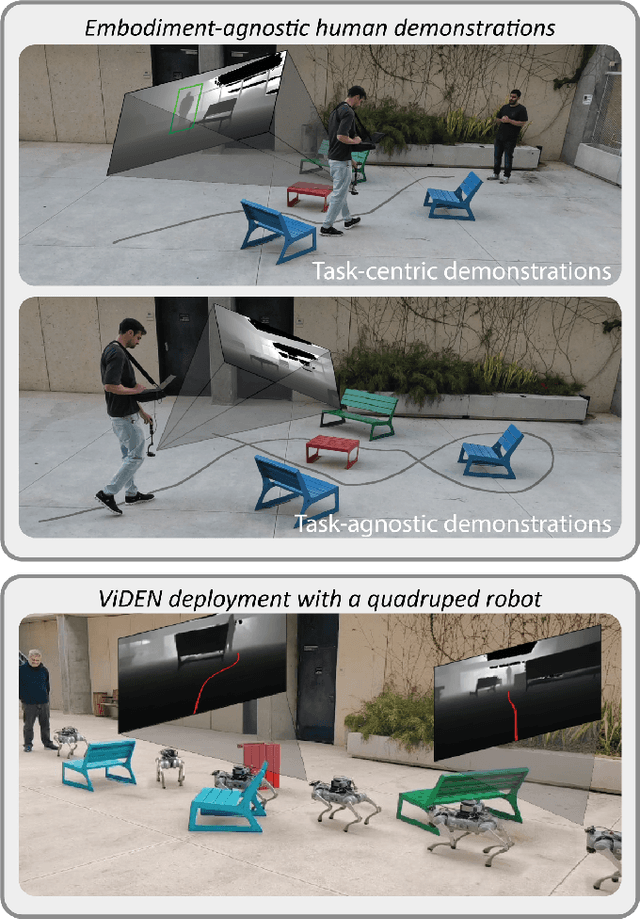

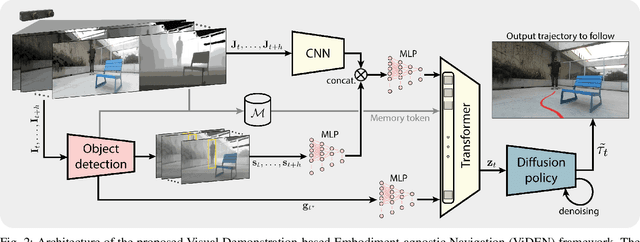

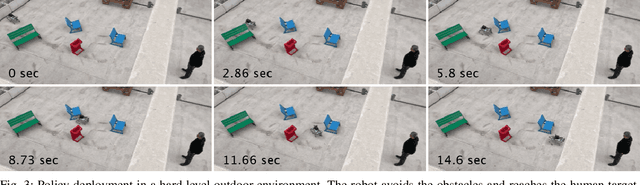

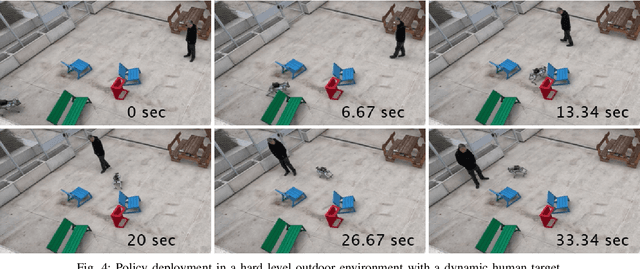

Abstract: Learning to navigate in unstructured environments is a challenging task for robots. While reinforcement learning can be effective, it often requires extensive data collection and can pose risks. Learning from expert demonstrations, on the other hand, offers a more efficient approach. However, many existing methods rely on specific robot embodiments and pre-specified target images, and require large datasets. We propose the Visual Demonstration-based Embodiment-agnostic Navigation (ViDEN) framework, which leverages visual demonstrations to train embodiment-agnostic navigation policies. ViDEN utilizes depth images to reduce input dimensionality and relies on relative target positions, making it more adaptable to diverse environments. By training a diffusion-based policy on task-centric and embodiment-agnostic demonstrations, ViDEN can generate collision-free and adaptive trajectories in real-time. Our experiments on human reaching and tracking demonstrate that ViDEN outperforms existing methods, requiring only a small amount of data and achieving superior performance in various indoor and outdoor navigation scenarios. Project website: https://nimicurtis.github.io/ViDEN/.

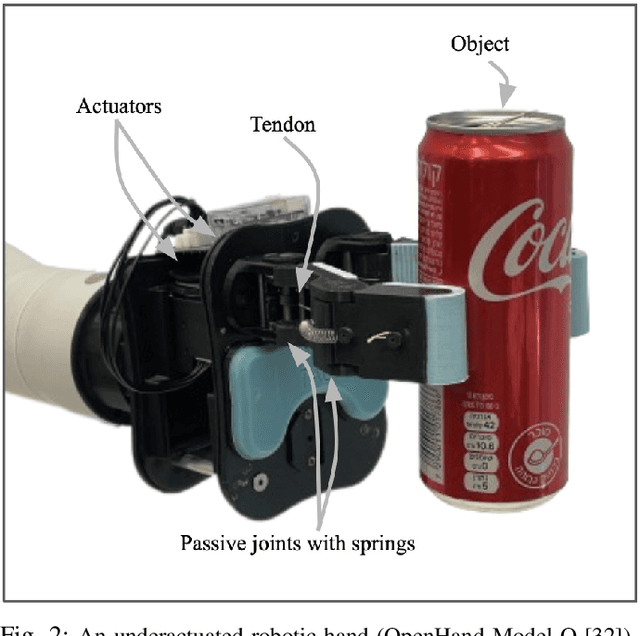

Visuotactile-Based Learning for Insertion with Compliant Hands

Nov 10, 2024

Abstract: Compared to rigid hands, underactuated compliant hands offer greater adaptability to object shapes, provide stable grasps, and are often more cost-effective. However, they introduce uncertainties in hand-object interactions due to their inherent compliance and their lack of the precise finger proprioception found in rigid hands. These limitations become particularly significant when performing contact-rich tasks like insertion. To address these challenges, additional sensing modalities are required to enable robust insertion capabilities. This letter explores the essential sensing requirements for successful insertion tasks with compliant hands, focusing on the role of visuotactile perception. We propose a simulation-based multimodal policy learning framework that leverages all-around tactile sensing and an extrinsic depth camera. A transformer-based policy, trained through a teacher-student distillation process, is successfully transferred to a real-world robotic system without further training. Our results emphasize the crucial role of tactile sensing in conjunction with visual perception for accurate object-socket pose estimation, successful sim-to-real transfer and robust task execution.

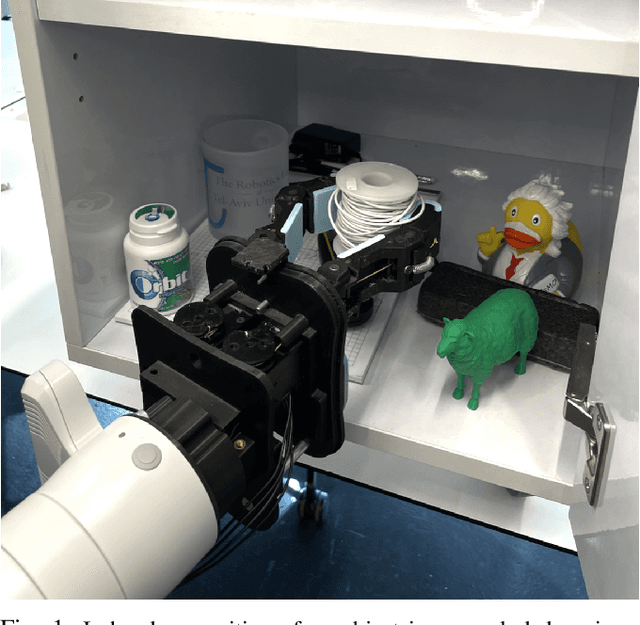

Kinesthetic-based In-Hand Object Recognition with an Underactuated Robotic Hand

Jan 30, 2024

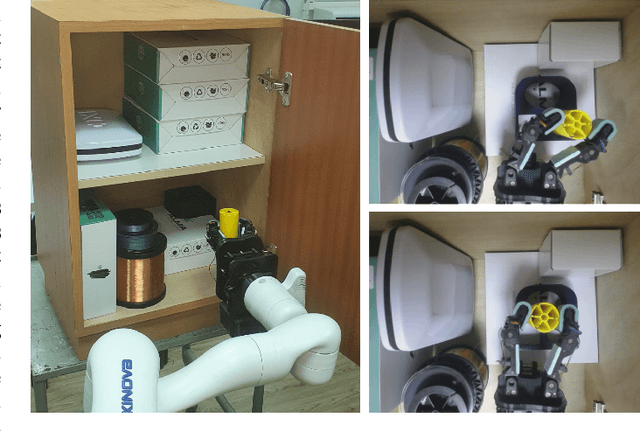

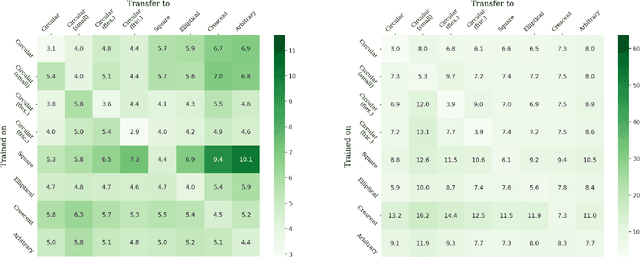

Abstract: Tendon-based underactuated hands are intended to be simple, compliant and affordable. Often, they are 3D printed and do not include tactile sensors. Hence, performing in-hand object recognition with direct touch sensing is not feasible. Adding tactile sensors can complicate the hardware and introduce extra costs to the robotic hand. Also, the common approach of visual perception may not be available due to occlusions. In this paper, we explore whether kinesthetic haptics can provide indirect information regarding the geometry of a grasped object during in-hand manipulation with an underactuated hand. By solely sensing actuator positions and torques over a period of time during motion, we show that a classifier can recognize an object from a set of trained ones with a high success rate of almost 95%. In addition, the implementation of a real-time majority vote during manipulation further improves recognition. Furthermore, a trained classifier is shown to successfully distinguish between shape categories rather than just specific objects.

Survey of Learning Approaches for Robotic In-Hand Manipulation

Jan 15, 2024

Abstract: Human dexterity is an invaluable capability for precise manipulation of objects in complex tasks. The capability of robots to similarly grasp and perform in-hand manipulation of objects is critical for their use in the ever-changing human environment, and for their ability to replace manpower. In recent decades, significant effort has been invested in enabling in-hand manipulation capabilities in robotic systems. Initial robotic manipulators followed carefully programmed paths, while later attempts provided a solution based on analytical modeling of motion and contact. However, these have failed to provide practical solutions due to their inability to cope with complex environments and uncertainties. Therefore, the effort has shifted to learning-based approaches where data is collected from the real world or through a simulation, during repeated attempts to complete various tasks. The vast majority of learning approaches have focused either on learning data-based models that describe the system to some extent or on Reinforcement Learning (RL). RL, in particular, has seen growing interest due to its remarkable ability to generate solutions to problems with minimal human guidance. In this survey paper, we track the developments of learning approaches for in-hand manipulation and explore the challenges and opportunities. This survey is designed both as an introduction for novices in the field, with a glossary of terms, and as a guide to novel advances for advanced practitioners.

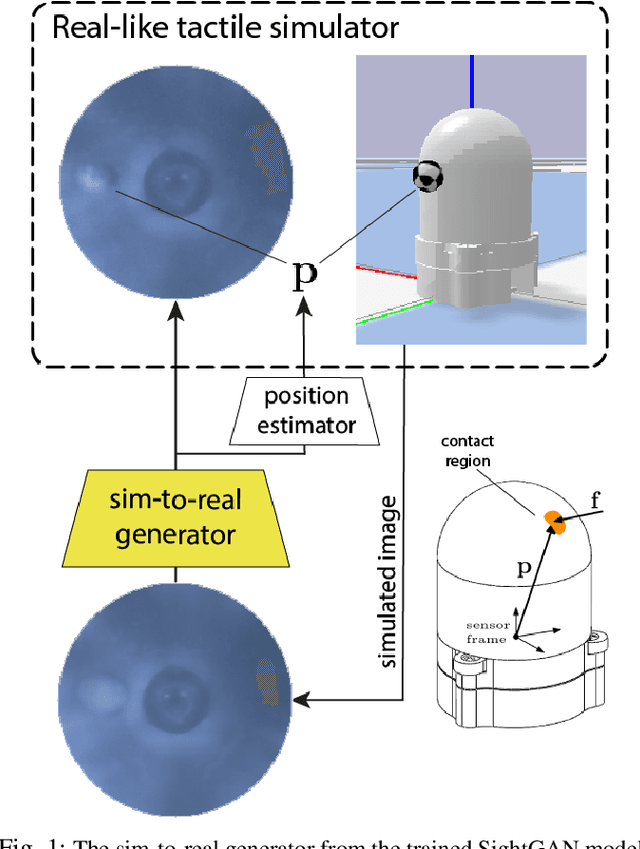

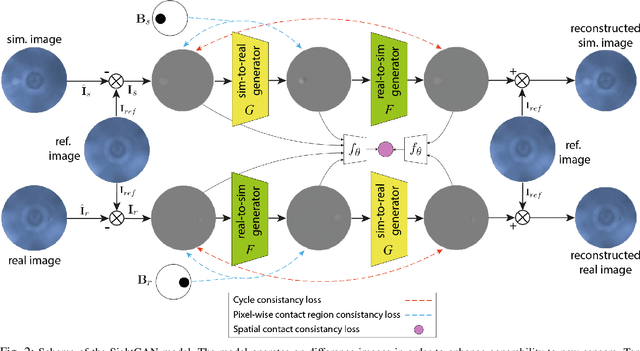

Augmenting Tactile Simulators with Real-like and Zero-Shot Capabilities

Sep 19, 2023

Abstract: Simulating tactile perception could potentially leverage the learning capabilities of robotic systems in manipulation tasks. However, the reality gap of simulators for high-resolution tactile sensors remains large. Models trained on simulated data often fail in zero-shot inference and require fine-tuning with real data. In addition, work on high-resolution sensors commonly focuses on ones with flat surfaces, while 3D round sensors are essential for dexterous manipulation. In this paper, we propose a bi-directional Generative Adversarial Network (GAN) termed SightGAN. SightGAN relies on the early CycleGAN while including two additional loss components aimed at accurately reconstructing background and contact patterns, including small contact traces. The proposed SightGAN learns real-to-sim and sim-to-real processes over difference images. It is shown to generate real-like synthetic images while maintaining accurate contact positioning. The generated images can be used to train zero-shot models for newly fabricated sensors. Consequently, the resulting sim-to-real generator could be built on top of the tactile simulator to provide a real-world framework. Potentially, the framework can be used to train, for instance, reinforcement learning policies for manipulation tasks. The proposed model is verified in extensive experiments with test data collected from real sensors and is also shown to maintain embedded force information within the tactile images.

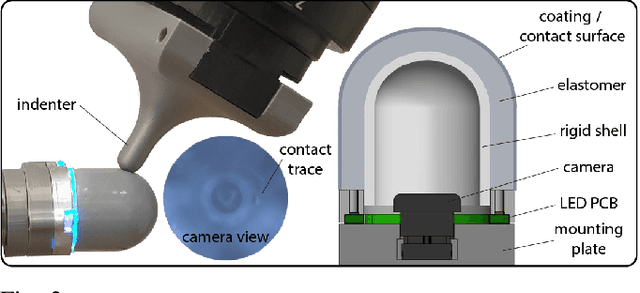

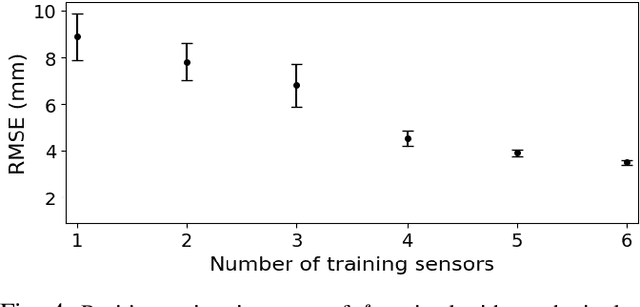

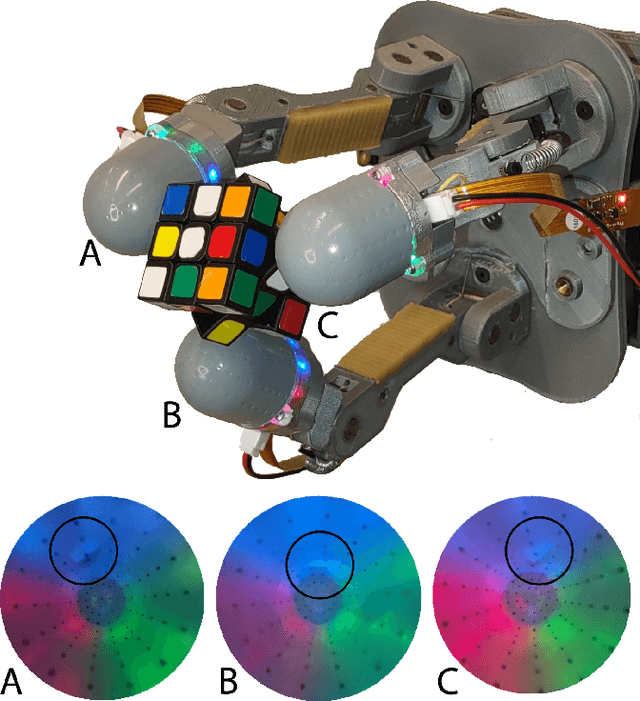

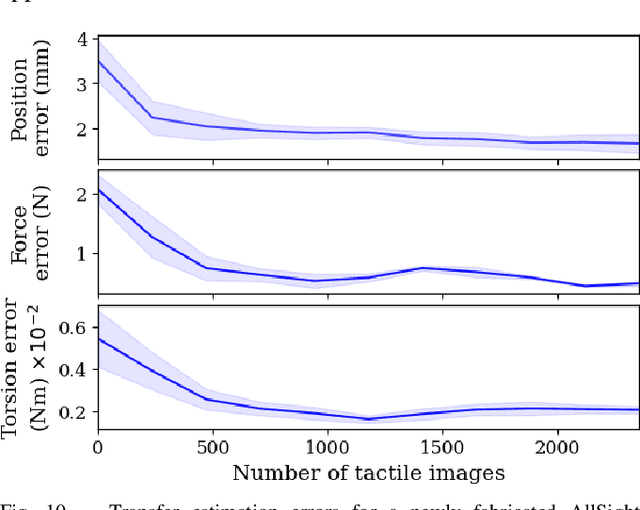

AllSight: A Low-Cost and High-Resolution Round Tactile Sensor with Zero-Shot Learning Capability

Jul 06, 2023

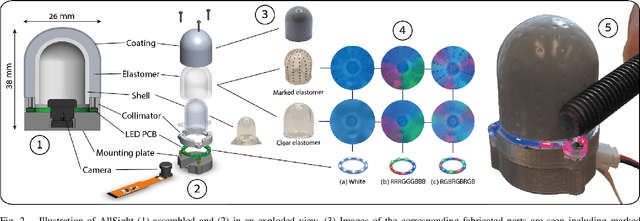

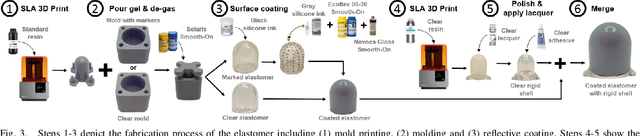

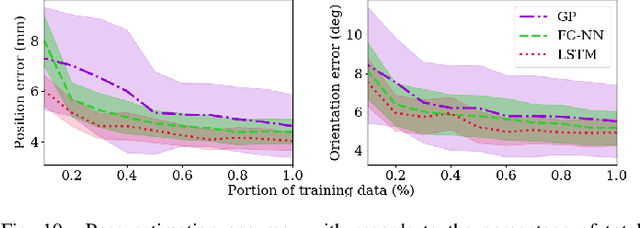

Abstract: Tactile sensing is a necessary capability for a robotic hand to perform fine manipulations and interact with the environment. Optical sensors are a promising solution for high-resolution contact estimation. Nevertheless, they are usually not easy to fabricate and require individual calibration in order to acquire sufficient accuracy. In this letter, we propose AllSight, an optical tactile sensor with a round 3D structure potentially designed for robotic in-hand manipulation tasks. AllSight is mostly 3D printed, making it low-cost, modular and durable, and it is the size of a human thumb while offering a large contact surface. We show the ability of AllSight to learn and estimate a full contact state, i.e., contact position, forces and torsion. In addition, an experimental benchmark comparing various configurations of illumination and contact elastomers is provided. Furthermore, the robust design of AllSight provides it with a unique zero-shot capability such that a practitioner can fabricate the open-source design and have a ready-to-use state estimation model. A set of experiments demonstrates the accurate state estimation performance of AllSight.

Learning Haptic-based Object Pose Estimation for In-hand Manipulation with Underactuated Robotic Hands

Jul 06, 2022

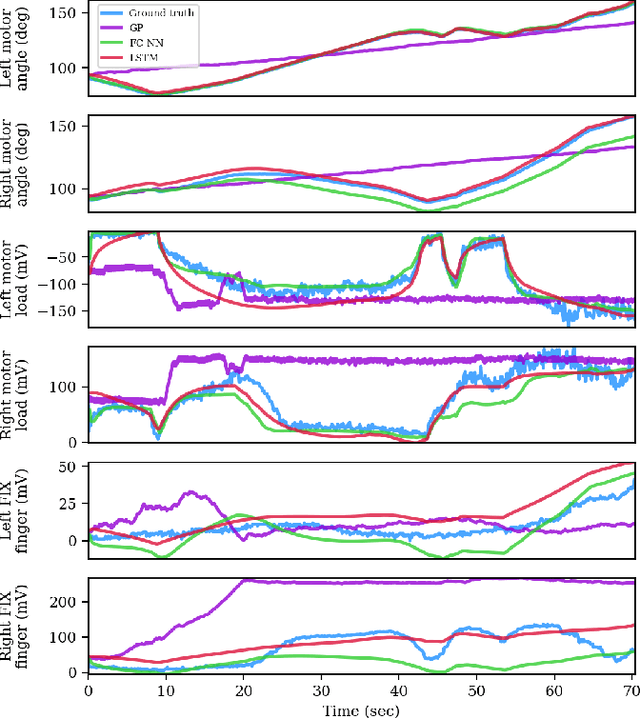

Abstract: Unlike traditional robotic hands, underactuated compliant hands are challenging to model due to inherent uncertainties. Consequently, pose estimation of a grasped object is usually performed based on visual perception. However, visual perception of the hand and object can be limited in occluded or partly-occluded environments. In this paper, we aim to explore the use of haptics, i.e., kinesthetic and tactile sensing, for pose estimation and in-hand manipulation with underactuated hands. Such a haptic approach would help in occluded environments where line-of-sight is not always available. We put an emphasis on identifying the feature state representation of the system that does not include vision and can be obtained with simple and low-cost hardware. For tactile sensing, therefore, we propose a low-cost and flexible sensor that is mostly 3D printed along with the finger-tip and can provide implicit contact information. Taking a two-finger underactuated hand as a test-case, we analyze the contribution of kinesthetic and tactile features along with various regression models to the accuracy of the predictions. Furthermore, we propose a Model Predictive Control (MPC) approach which utilizes the pose estimation to manipulate objects to desired states solely based on haptics. We have conducted a series of experiments that validate the ability to estimate poses of various objects with different geometry, stiffness and texture, and show manipulation to goals in the workspace with relatively high accuracy.
