Orrin Devinsky

The Temporal Structure of Language Processing in the Human Brain Corresponds to The Layered Hierarchy of Deep Language Models

Oct 11, 2023

Abstract: Deep Language Models (DLMs) provide a novel computational paradigm for understanding the mechanisms of natural language processing in the human brain. Unlike traditional psycholinguistic models, DLMs use layered sequences of continuous numerical vectors to represent words and context, allowing a plethora of emerging applications such as human-like text generation. In this paper we show evidence that the layered hierarchy of DLMs may be used to model the temporal dynamics of language comprehension in the brain by demonstrating a strong correlation between DLM layer depth and the time at which layers are most predictive of the human brain. Our ability to temporally resolve individual layers benefits from our use of electrocorticography (ECoG) data, which has a much higher temporal resolution than noninvasive methods like fMRI. Using ECoG, we record neural activity from participants listening to a 30-minute narrative while also feeding the same narrative to a high-performing DLM (GPT2-XL). We then extract contextual embeddings from the different layers of the DLM and use linear encoding models to predict neural activity. We first focus on the Inferior Frontal Gyrus (IFG, or Broca's area) and then extend our model to track the increasing temporal receptive window along the linguistic processing hierarchy from auditory to syntactic and semantic areas. Our results reveal a connection between human language processing and DLMs, with the DLM's layer-by-layer accumulation of contextual information mirroring the timing of neural activity in high-order language areas.
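
As a rough illustration of what a lag-resolved linear encoding model can look like, the sketch below fits a cross-validated ridge regression from word embeddings to a neural signal sampled at several lags around word onset, then reports the lag at which held-out prediction is best. This is not the authors' pipeline: the data are synthetic, and the names `ridge_fit`, `encoding_by_lag`, the ridge penalty, the fold count, and the lag grid are all assumptions made for the example.

```python
import numpy as np


def ridge_fit(X, Y, alpha):
    """Closed-form ridge regression weights for centered X and Y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ Y)


def encoding_by_lag(X, Y, alpha=1.0, n_folds=5):
    """Held-out correlation between predicted and actual signal at each lag.

    X: (n_words, dim) embeddings from one model layer (synthetic here).
    Y: (n_words, n_lags) neural signal per word at each lag (synthetic here).
    """
    n = X.shape[0]
    pred = np.zeros(Y.shape, dtype=np.float64)
    for test_idx in np.array_split(np.arange(n), n_folds):
        train = np.ones(n, dtype=bool)
        train[test_idx] = False
        # Center using training-fold statistics only.
        mu_x = X[train].mean(axis=0)
        mu_y = Y[train].mean(axis=0)
        W = ridge_fit(X[train] - mu_x, Y[train] - mu_y, alpha)
        pred[test_idx] = (X[test_idx] - mu_x) @ W + mu_y
    # Pearson correlation per lag, computed over all held-out predictions.
    pc = pred - pred.mean(axis=0)
    yc = Y - Y.mean(axis=0)
    denom = np.sqrt((pc ** 2).sum(axis=0) * (yc ** 2).sum(axis=0))
    return (pc * yc).sum(axis=0) / denom


# Synthetic stand-in data: the signal depends on the embeddings most
# strongly at one lag (true_peak_ms), with additive Gaussian noise.
rng = np.random.default_rng(0)
n_words, dim = 1000, 20
lags_ms = np.arange(-400, 401, 100)
true_peak_ms = 200

X = rng.standard_normal((n_words, dim))
w = rng.standard_normal(dim)
gain = np.exp(-0.5 * ((lags_ms - true_peak_ms) / 100.0) ** 2)
Y = np.outer(X @ w, gain) + rng.standard_normal((n_words, lags_ms.size))

corr = encoding_by_lag(X, Y)
peak_lag_ms = int(lags_ms[np.argmax(corr)])
print("held-out correlation per lag:", np.round(corr, 3))
print("lag of peak encoding performance (ms):", peak_lag_ms)
```

Repeating this fit for the embeddings of each layer and comparing the resulting peak lags against layer depth is one way to pose the layer-versus-time question the abstract describes.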

Localized Motion Artifact Reduction on Brain MRI Using Deep Learning with Effective Data Augmentation Techniques

Jul 10, 2020

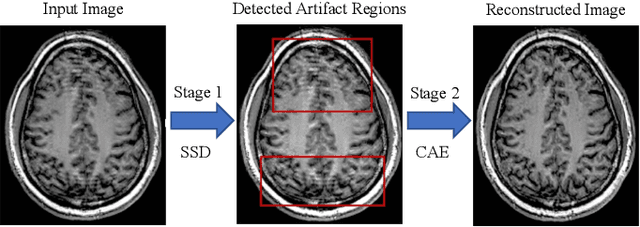

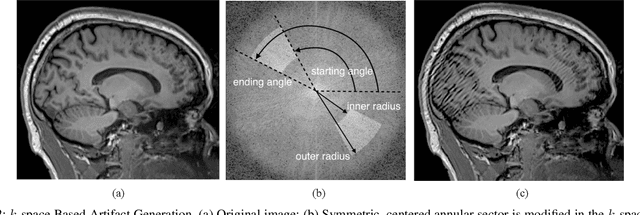

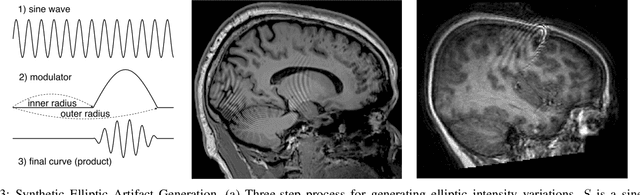

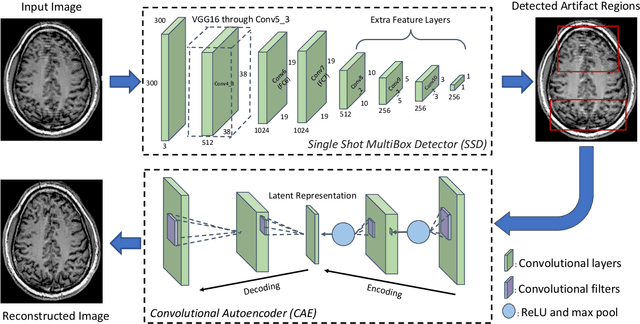

Abstract: In-scanner motion degrades the quality of magnetic resonance imaging (MRI), thereby reducing its utility in the detection of clinically relevant abnormalities. We introduce a deep learning-based MRI artifact reduction model (DMAR) to localize and correct head motion artifacts in brain MRI scans. Our approach integrates the latest advances in object detection and noise reduction in Computer Vision. Specifically, DMAR employs a two-stage approach: in the first, degraded regions are detected using the Single Shot Multibox Detector (SSD), and in the second, the artifacts within the found regions are reduced using a convolutional autoencoder (CAE). We further introduce a set of novel data augmentation techniques to address the high dimensionality of MRI images and the scarcity of available data. As a result, our model was trained on a large synthetic dataset of 217,000 images generated from six whole-brain T1-weighted MRI scans obtained from three subjects. DMAR produces convincing visual results when applied to both synthetic test images and 55 real-world motion-affected slices from 18 subjects from the multi-center Autism Brain Imaging Data Exchange study. Quantitatively, depending on the level of degradation, our model achieves a 14.3%-25.6% reduction in RMSE and a 1.38-2.68 dB gain in PSNR on a 5000-sample set of synthetic images. For real-world scans where the ground truth is unavailable, our model produces a 3.65% reduction in regional standard deviations of image intensity.
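
For readers unfamiliar with the two metrics quoted above, the following minimal sketch computes RMSE and PSNR for a pair of images. It uses the standard definitions and assumes intensities scaled to the range [0, 1]; the helper names `rmse` and `psnr`, the `data_range` parameter, and the synthetic arrays are assumptions for illustration and do not come from the paper.

```python
import numpy as np


def rmse(reference, estimate):
    """Root-mean-square error between two images of the same shape."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    return float(np.sqrt(np.mean((reference - estimate) ** 2)))


def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum intensity span."""
    err = rmse(reference, estimate)
    if err == 0.0:
        return float("inf")
    return float(20.0 * np.log10(data_range / err))


# Synthetic stand-ins: a "clean" image, a degraded copy, and a hypothetical
# corrected copy in which the added error has been halved.
rng = np.random.default_rng(0)
clean = rng.random((64, 64))
noise = 0.05 * rng.standard_normal((64, 64))
degraded = clean + noise
corrected = clean + 0.5 * noise

rmse_reduction = 1.0 - rmse(clean, corrected) / rmse(clean, degraded)
psnr_gain = psnr(clean, corrected) - psnr(clean, degraded)
print(f"RMSE reduction: {100 * rmse_reduction:.1f}%")
print(f"PSNR gain: {psnr_gain:.2f} dB")
```

Because PSNR is a logarithm of the inverse error, a fractional RMSE reduction r on a single image corresponds to a PSNR gain of -20 log10(1 - r) dB. Averages over a test set need not follow this identity exactly.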

Multi-task Learning with Weak Class Labels: Leveraging iEEG to Detect Cortical Lesions in Cryptogenic Epilepsy

Jul 30, 2016

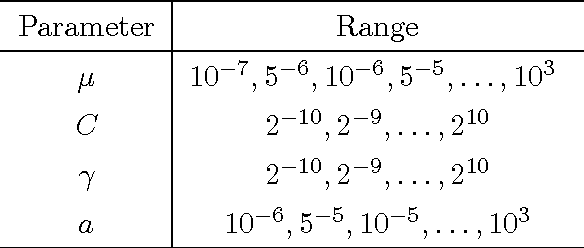

Abstract: Multi-task learning (MTL) is useful for domains in which data originates from multiple sources that are individually under-sampled. MTL methods are able to learn classification models that have higher performance as compared to learning a single model by aggregating all the data together or learning a separate model for each data source. The performance of these methods relies on label accuracy. We address the problem of simultaneously learning multiple classifiers in the MTL framework when the training data has imprecise labels. We assume that there is an additional source of information that provides a score for each instance which reflects the certainty about its label. Modeling this score as being generated by an underlying ranking function, we augment the MTL framework with an added layer of supervision. This results in new MTL methods that are able to learn accurate classifiers while preserving the domain structure provided through the rank information. We apply these methods to the task of detecting abnormal cortical regions in the MRIs of patients suffering from focal epilepsy whose MRIs were read as normal by expert neuroradiologists. In addition to the noisy labels provided by the results of surgical resection, we employ the results of an invasive intracranial-EEG exam as an additional source of label information. Our proposed methods are able to successfully detect abnormal regions for all patients in our dataset and achieve a higher performance as compared to baseline methods.
