Norihiro Maruyama

A Concurrent Modular Agent: Framework for Autonomous LLM Agents

Aug 26, 2025

Abstract:We introduce the Concurrent Modular Agent (CMA), a framework that orchestrates multiple Large-Language-Model (LLM)-based modules that operate fully asynchronously yet maintain a coherent and fault-tolerant behavioral loop. This framework addresses long-standing difficulties in agent architectures by letting intention emerge from language-mediated interactions among autonomous processes. This approach enables flexible, adaptive, and context-dependent behavior through the combination of concurrently executed modules that offload reasoning to an LLM, inter-module communication, and a single shared global state. We consider this approach to be a practical realization of Minsky's Society of Mind theory. We demonstrate the viability of our system through two practical use-case studies. The emergent properties observed in our system suggest that complex cognitive phenomena like self-awareness may indeed arise from the organized interaction of simpler processes, supporting Minsky's Society of Mind concept and opening new avenues for artificial intelligence research. The source code for our work is available at: https://github.com/AlternativeMachine/concurrent-modular-agent.
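The combination described in this abstract (concurrently executed modules, inter-module communication, and one shared global state) can be illustrated with a minimal Python sketch. It does not use the API of the linked repository: `fake_llm`, `perception`, `planner`, and `run_agent` are hypothetical names chosen for illustration, and the LLM call is replaced by a stub.

```python
import asyncio


async def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call (assumption, not the CMA API)."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return f"response to: {prompt}"


async def perception(state: dict, outbox: asyncio.Queue, steps: int) -> None:
    """Module 1: writes observations to the shared state and notifies others."""
    for i in range(steps):
        state["observation"] = f"event-{i}"
        await outbox.put(state["observation"])
    await outbox.put(None)  # sentinel: no more messages


async def planner(state: dict, inbox: asyncio.Queue) -> None:
    """Module 2: reacts to messages and offloads reasoning to the (stub) LLM."""
    while True:
        msg = await inbox.get()
        if msg is None:
            break
        try:
            plan = await fake_llm(f"plan for {msg}")
        except Exception as exc:  # one failing call should not stop the loop
            plan = f"fallback ({exc})"
        state.setdefault("plans", []).append(plan)


async def run_agent(steps: int = 3) -> dict:
    """Runs both modules concurrently over a single shared state dict."""
    state: dict = {}
    queue: asyncio.Queue = asyncio.Queue()
    await asyncio.gather(perception(state, queue, steps), planner(state, queue))
    return state


if __name__ == "__main__":
    print(asyncio.run(run_agent()))
```

In this sketch the queue plays the role of inter-module communication and the dictionary the role of the shared global state; a real system would presumably use persistent storage and actual LLM calls.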

Evolution of Collective AI Beyond Individual Optimization

Dec 03, 2024

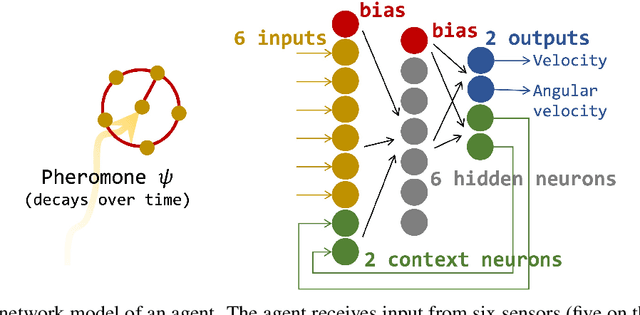

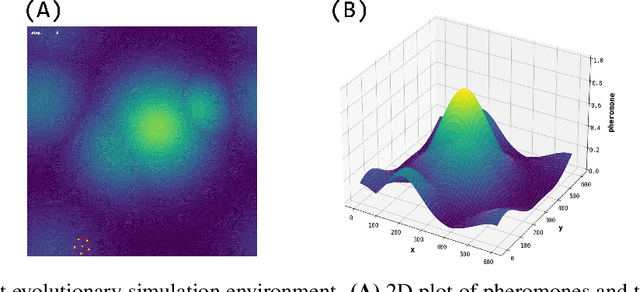

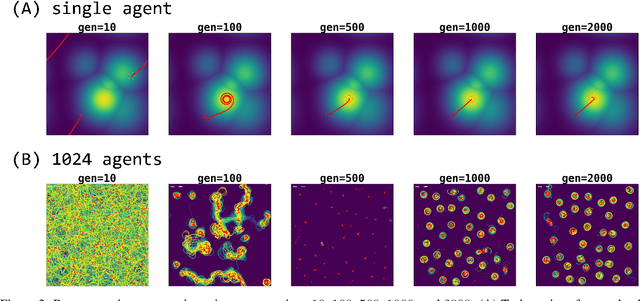

Abstract:This study investigates collective behaviors that emerge from a group of homogeneous individuals optimized for a specific capability. We created a group of simple, identical neural-network-based agents modeled after chemotaxis-driven vehicles that follow pheromone trails and examined multi-agent simulations using clones of these evolved individuals. Our results show that the evolution of individuals led to population differentiation. Surprisingly, we observed that collective fitness declined significantly during later evolutionary stages, despite maintained high individual performance and simplified neural architectures. This decline occurred when agents developed reduced sensor-motor coupling, suggesting that over-optimization of individual agents almost always leads to less effective group behavior. Our research investigates how, and through which evolutionary pathways, individual differentiation can evolve.

A Simulation Environment for the Neuroevolution of Ant Colony Dynamics

Jun 19, 2024

Abstract:We introduce a simulation environment to facilitate research into emergent collective behaviour, with a focus on replicating the dynamics of ant colonies. By leveraging real-world data, the environment simulates a target ant trail that a controllable agent must learn to replicate, using sensory data observed by the target ant. This work aims to contribute to the neuroevolution of models for collective behaviour, focusing on evolving neural architectures that encode domain-specific behaviours in the network topology. By evolving models that can be modified and studied in a controlled environment, we can uncover the necessary conditions required for collective behaviours to emerge. We hope this environment will be useful to those studying the role of interactions in emergent behaviour within collective systems.

Self-Replicating and Self-Employed Smart Contract on Ethereum Blockchain

May 07, 2024

Abstract:Blockchain is the underlying technology for cryptocurrencies such as Bitcoin. Blockchain is a robust distributed ledger that uses consensus algorithms to approve transactions in a decentralized manner, making malicious tampering extremely difficult. Ethereum, one such blockchain, can be seen as an unstoppable computer that is shared by users around the world and can run Turing-complete programs. In order to run any program on Ethereum, Ether (the currency of Ethereum) is required. In other words, Ether can be seen as a kind of energy in the Ethereum world. We developed self-replicating and self-employed agents on the Ethereum blockchain that earn this energy by themselves in order to replicate. The agents can issue their own tokens and gain Ether each time the tokens are sold. When a certain amount of Ether is accumulated, the agent replicates itself and leaves offspring. The goal of this project is to implement artificial agents that live for themselves, not as tools for humans, in the open cyberspace connected to the real world.
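The earn-then-replicate cycle described in this abstract can be sketched as an off-chain toy model in Python. This is not the authors' smart contract: the `Agent` class, the token price, the replication threshold, and the assumption that replication spends the accumulated balance are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Toy model of a self-replicating agent (all values are assumptions)."""
    balance: int = 0            # accumulated "Ether", in arbitrary integer units
    token_price: int = 10       # assumed income per token sale
    replication_cost: int = 50  # assumed threshold that triggers replication
    children: list = field(default_factory=list)

    def sell_token(self) -> None:
        """Earn Ether from one token sale, then replicate if affordable."""
        self.balance += self.token_price
        if self.balance >= self.replication_cost:
            self.replicate()

    def replicate(self) -> None:
        """Spend the threshold amount and leave one offspring (assumption)."""
        self.balance -= self.replication_cost
        self.children.append(
            Agent(token_price=self.token_price,
                  replication_cost=self.replication_cost)
        )


def count_agents(agent: Agent) -> int:
    """Count an agent together with all of its descendants."""
    return 1 + sum(count_agents(child) for child in agent.children)


if __name__ == "__main__":
    root = Agent()
    for _ in range(12):
        root.sell_token()
    print(count_agents(root), root.balance)
```

On an actual blockchain, each of these steps would additionally consume gas, which is one reason the abstract likens Ether to energy.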

Neural Autopoiesis: Organizing Self-Boundary by Stimulus Avoidance in Biological and Artificial Neural Networks

Jan 27, 2020

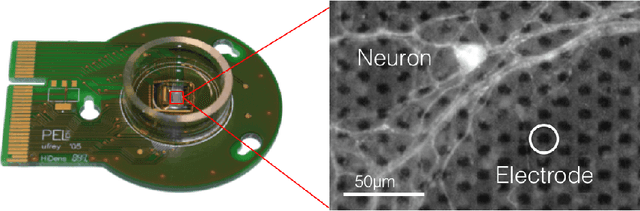

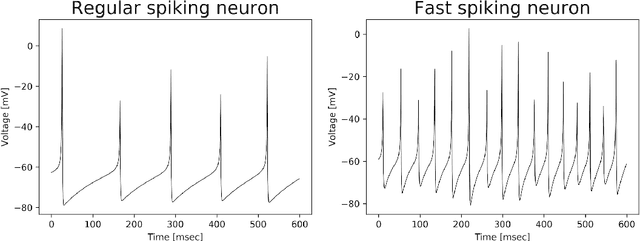

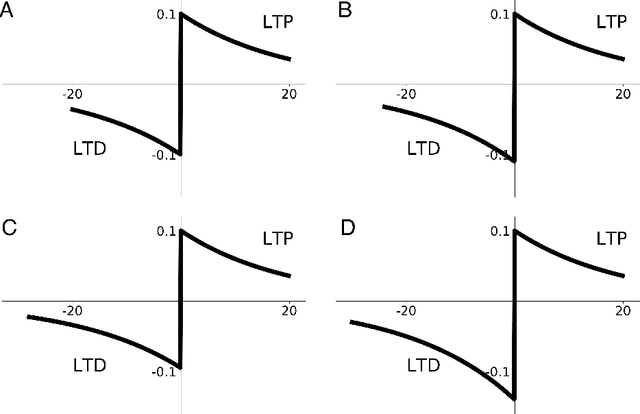

Abstract:Living organisms must actively maintain themselves in order to continue existing. Autopoiesis is a key concept in the study of living organisms, where the boundaries of the organism are not static but dynamically regulated by the system itself. To study the autonomous regulation of self-boundary, we focus on neural homeodynamic responses to environmental changes using both biological and artificial neural networks. Previous studies showed that embodied cultured neural networks and spiking neural networks with spike-timing-dependent plasticity (STDP) learn an action as they avoid external stimulation. In this paper, as a result of our experiments using embodied cultured neurons, we find that there is also a second property allowing the network to avoid stimulation: if the agent cannot learn an action to avoid the external stimuli, it tends to decrease the stimulus-evoked spikes, as if to ignore the uncontrollable input. We also show that such behavior is reproduced by spiking neural networks with asymmetric STDP. We regard these properties as autonomous regulation of self and non-self for the network, in which a controllable neuron is regarded as self, and an uncontrollable neuron is regarded as non-self. Finally, we introduce neural autopoiesis by proposing the principle of stimulus avoidance.
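For readers unfamiliar with STDP, the following Python sketch shows a common pair-based learning window in which depression is slightly stronger than potentiation. It is a textbook-style illustration, not the model used in the paper: the function name `stdp_delta_w` and all parameter values are assumptions.

```python
import math


def stdp_delta_w(dt_ms: float,
                 a_plus: float = 0.01,
                 a_minus: float = 0.012,
                 tau_plus: float = 20.0,
                 tau_minus: float = 20.0) -> float:
    """Weight change for one spike pair, with dt_ms = t_post - t_pre.

    Parameter values are illustrative assumptions, not taken from the paper.
    """
    if dt_ms > 0:
        # Presynaptic spike precedes postsynaptic spike: potentiation.
        return a_plus * math.exp(-dt_ms / tau_plus)
    if dt_ms < 0:
        # Postsynaptic spike precedes presynaptic spike: depression.
        return -a_minus * math.exp(dt_ms / tau_minus)
    return 0.0


if __name__ == "__main__":
    for dt in (-20.0, -5.0, 5.0, 20.0):
        print(dt, stdp_delta_w(dt))
```

With `a_minus` larger than `a_plus`, uncorrelated pre- and postsynaptic spiking weakens a synapse on average, which is one plausible route by which input the network cannot control could come to evoke fewer spikes.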
