Lana Sinapayen

Self-Replication, Spontaneous Mutations, and Exponential Genetic Drift in Neural Cellular Automata

May 22, 2023

Abstract:This paper reports on patterns exhibiting self-replication with spontaneous, inheritable mutations and exponential genetic drift in Neural Cellular Automata. Despite the models not being explicitly trained for mutation or inheritability, the descendant patterns exponentially drift away from ancestral patterns, even when the automaton is deterministic. While this is far from being the first instance of evolutionary dynamics in a cellular automaton, it is the first to do so by exploiting the power and convenience of Neural Cellular Automata, arguably increasing the space of variations and the opportunity for Open Ended Evolution.

Hybrid Life: Integrating Biological, Artificial, and Cognitive Systems

Dec 01, 2022

Abstract:Artificial life is a research field studying what processes and properties define life, based on a multidisciplinary approach spanning the physical, natural and computational sciences. Artificial life aims to foster a comprehensive study of life beyond "life as we know it" and towards "life as it could be", with theoretical, synthetic and empirical models of the fundamental properties of living systems. While still a relatively young field, artificial life has flourished as an environment for researchers with different backgrounds, welcoming ideas and contributions from a wide range of subjects. Hybrid Life is an attempt to bring attention to some of the most recent developments within the artificial life community, rooted in more traditional artificial life studies but looking at new challenges emerging from interactions with other fields. In particular, Hybrid Life focuses on three complementary themes: 1) theories of systems and agents, 2) hybrid augmentation, with augmented architectures combining living and artificial systems, and 3) hybrid interactions among artificial and biological systems. After discussing some of the major sources of inspiration for these themes, we will focus on an overview of the works that appeared in Hybrid Life special sessions, hosted by the annual Artificial Life Conference between 2018 and 2022.

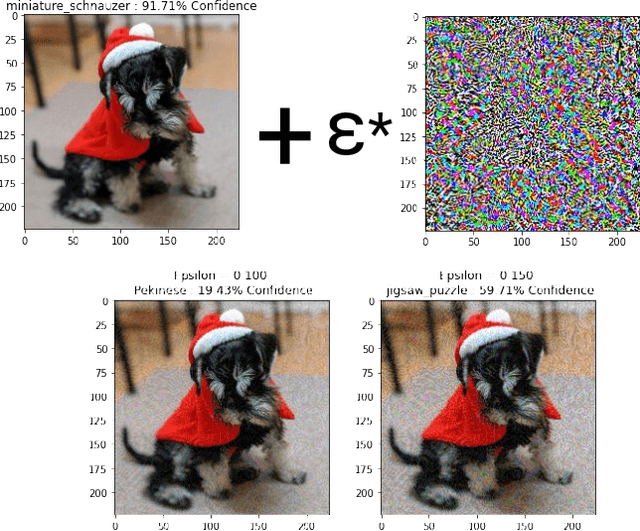

Evolutionary Generation of Visual Motion Illusions

Dec 25, 2021

Abstract:Why do we sometimes perceive static images as if they were moving? Visual motion illusions enjoy a sustained popularity, yet there is no definitive answer to the question of why they work. We present a generative model, the Evolutionary Illusion GENerator (EIGen), that creates new visual motion illusions. The structure of EIGen supports the hypothesis that illusory motion might be the result of perceiving the brain's own predictions rather than perceiving raw visual input from the eyes. The scientific motivation of this paper is to demonstrate that the perception of illusory motion could be a side effect of the predictive abilities of the brain. The philosophical motivation of this paper is to call attention to the untapped potential of "motivated failures", ways for artificial systems to fail as biological systems fail, as a worthy outlet for Artificial Intelligence and Artificial Life research.

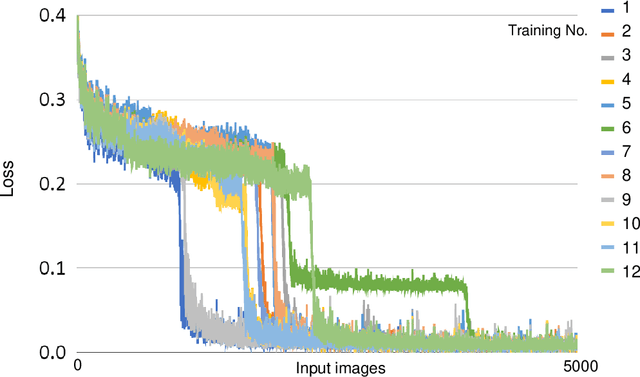

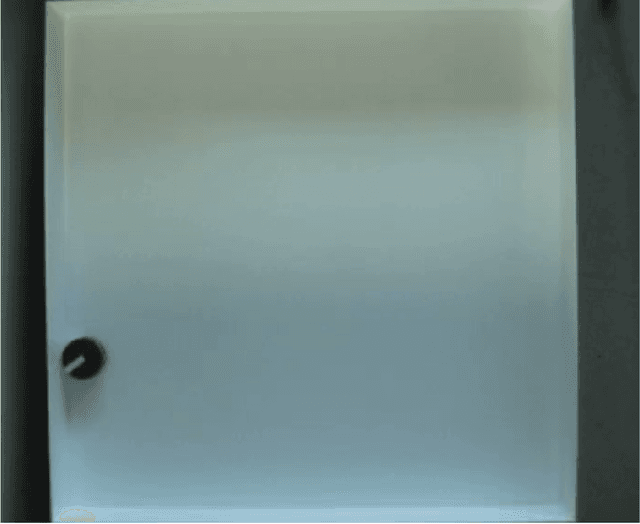

Impact of GPU uncertainty on the training of predictive deep neural networks

Sep 25, 2021

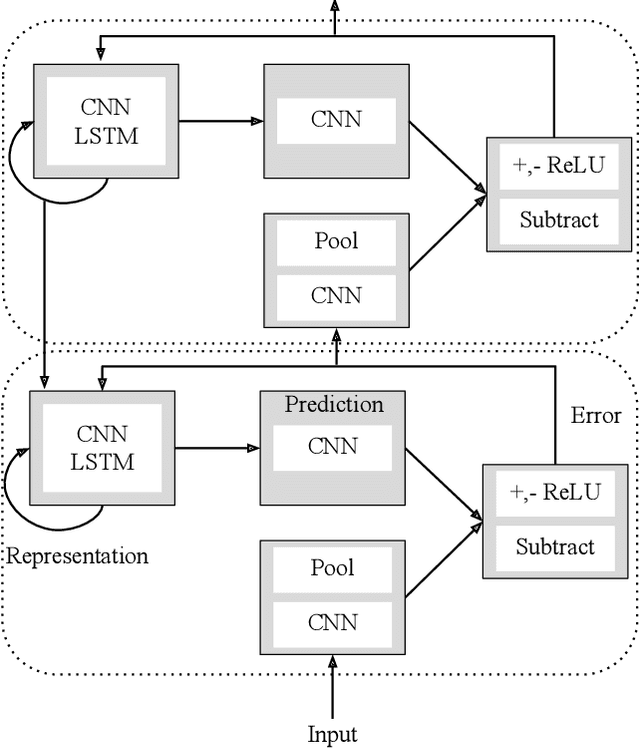

Abstract:[retracted] We found out that the difference was dependent on the Chainer library, and does not replicate with another library (PyTorch), which indicates that the results are probably due to a bug in Chainer, rather than being hardware-dependent.

Old abstract:Deep neural networks often present uncertainties such as hardware- and software-derived noise and randomness. We studied the effects of such uncertainty on learning outcomes, with a particular focus on the function of graphics processing units (GPUs), and found that GPU-induced uncertainty increased learning accuracy of a certain deep neural network. When training a predictive deep neural network using only the CPU without the GPU, the learning error is higher than when training the same number of epochs using the GPU, suggesting that the GPU plays a different role in the learning process than just increasing the computational speed. Because this effect cannot be observed in learning by a simple autoencoder, it could be a phenomenon specific to certain types of neural networks. GPU-specific computational processing is more indeterminate than that by CPUs, and hardware-derived uncertainties, which are often considered obstacles that need to be eliminated, might, in some cases, be successfully incorporated into the training of deep neural networks. Moreover, such uncertainties might be interesting phenomena to consider in brain-related computational processing, which comprises a large mass of uncertain signals.

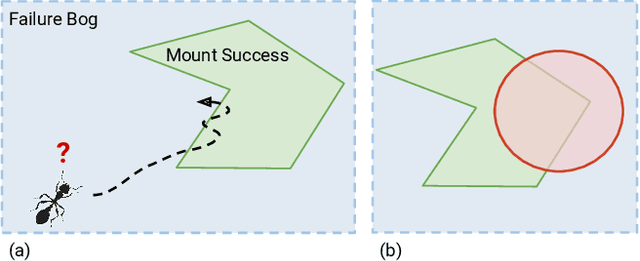

Perspective: Purposeful Failure in Artificial Life and Artificial Intelligence

Feb 24, 2021

Abstract:Complex systems fail. I argue that failures can be a blueprint characterizing living organisms and biological intelligence, a control mechanism to increase complexity in evolutionary simulations, and an alternative to classical fitness optimization. Imitating biological successes in Artificial Life and Artificial Intelligence can be misleading; imitating failures offers a path towards understanding and emulating life in artificial systems.

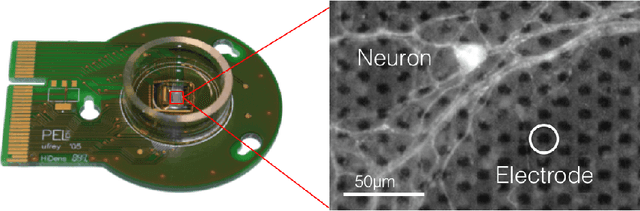

Neural Autopoiesis: Organizing Self-Boundary by Stimulus Avoidance in Biological and Artificial Neural Networks

Jan 27, 2020

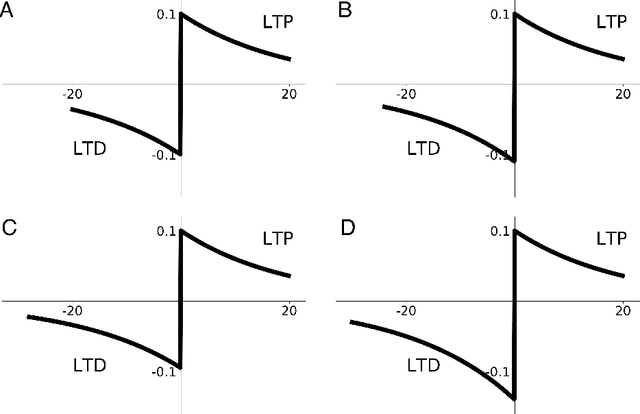

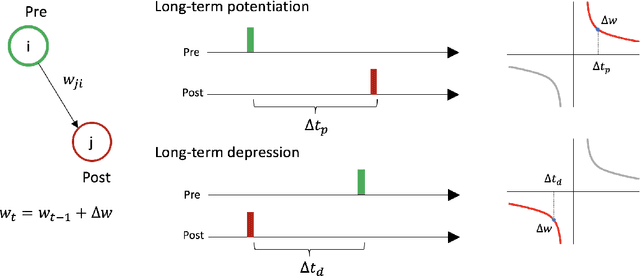

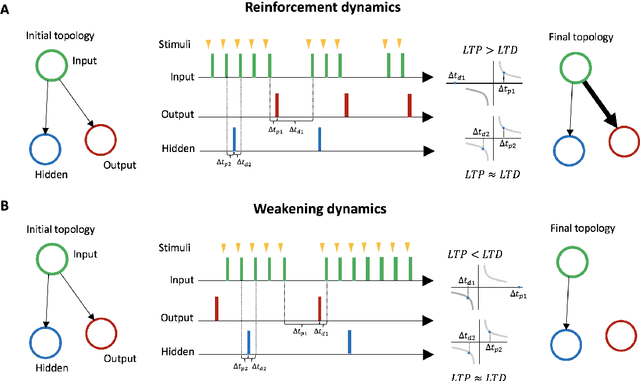

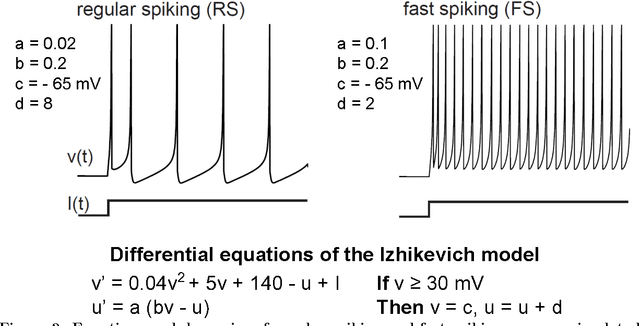

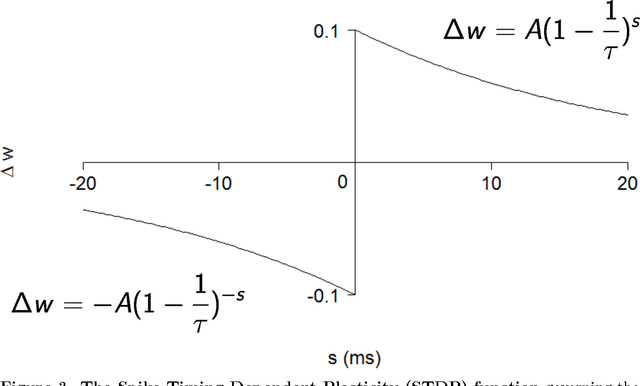

Abstract:Living organisms must actively maintain themselves in order to continue existing. Autopoiesis is a key concept in the study of living organisms, where the boundaries of the organism are not static but dynamically regulated by the system itself. To study the autonomous regulation of self-boundary, we focus on neural homeodynamic responses to environmental changes using both biological and artificial neural networks. Previous studies showed that embodied cultured neural networks and spiking neural networks with spike-timing dependent plasticity (STDP) learn an action as they avoid stimulation from outside. In this paper, as a result of our experiments using embodied cultured neurons, we find that there is also a second property allowing the network to avoid stimulation: if the agent cannot learn an action to avoid the external stimuli, it tends to decrease the stimulus-evoked spikes, as if to ignore the uncontrollable input. We also show that such behavior is reproduced by spiking neural networks with asymmetric STDP. We consider these properties to be autonomous regulation of self and non-self for the network, in which a controllable neuron is regarded as self, and an uncontrollable neuron is regarded as non-self. Finally, we introduce neural autopoiesis by proposing the principle of stimulus avoidance.

Predictive Coding as Stimulus Avoidance in Spiking Neural Networks

Nov 21, 2019

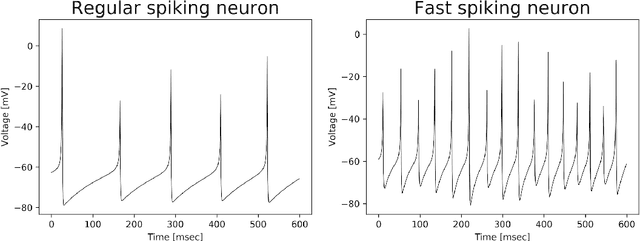

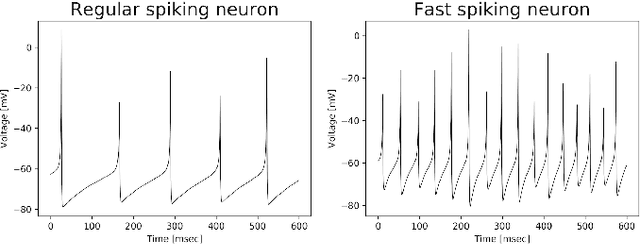

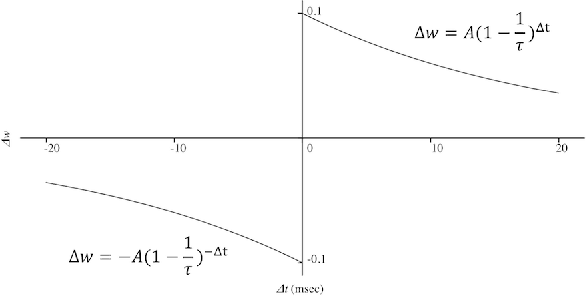

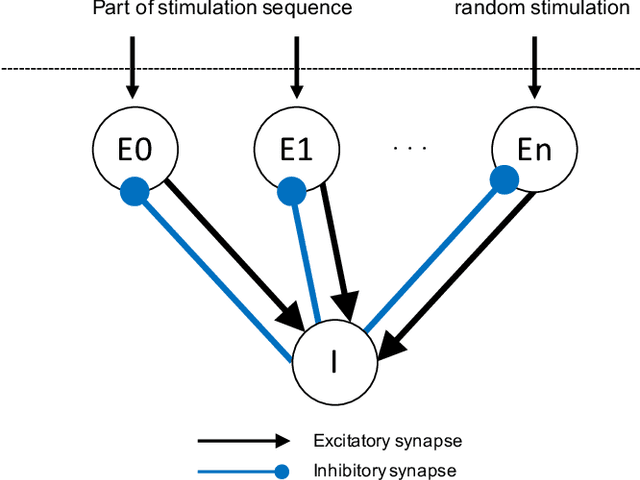

Abstract:Predictive coding can be regarded as a function which reduces the error between an input signal and a top-down prediction. If reducing the error is equivalent to reducing the influence of stimuli from the environment, predictive coding can be regarded as stimulation avoidance by prediction. Our previous studies showed that action and selection for stimulation avoidance emerge in spiking neural networks through spike-timing dependent plasticity (STDP). In this study, we demonstrate that spiking neural networks with random structure spontaneously learn to predict temporal sequences of stimuli based solely on STDP.

DNN Architecture for High Performance Prediction on Natural Videos Loses Submodule's Ability to Learn Discrete-World Dataset

Apr 16, 2019

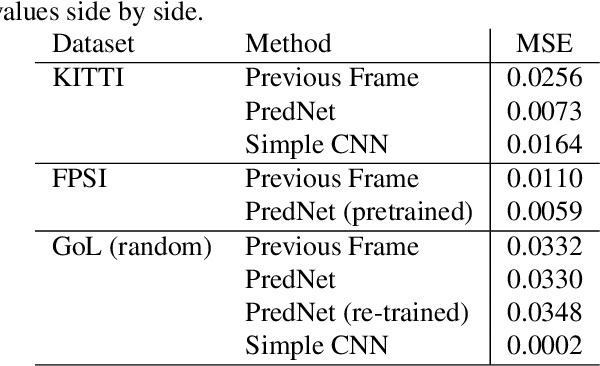

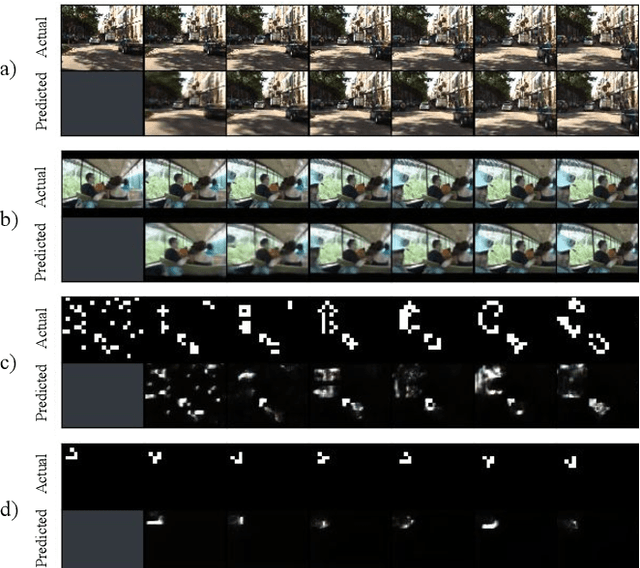

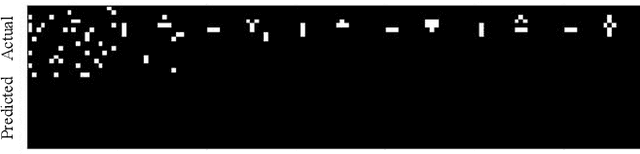

Abstract:Is cognition a collection of loosely connected functions tuned to different tasks, or can there be a general learning algorithm? If such a hypothetical general algorithm did exist, tuned to our world, could it adapt seamlessly to a world with different laws of nature? We consider the theory that predictive coding is such a general rule, and falsify it for one specific neural architecture known for high-performance predictions on natural videos and replication of human visual illusions: PredNet. Our results show that PredNet's high performance generalizes without retraining on a completely different natural video dataset. Yet PredNet cannot be trained to reach even mediocre accuracy on an artificial video dataset created with the rules of the Game of Life (GoL). We also find that a submodule of PredNet, a Convolutional Neural Network trained alone, reaches perfect accuracy on the GoL while being mediocre for natural videos, showing that PredNet's architecture itself is responsible for both the high performance on natural videos and the loss of performance on the GoL. Just as humans cannot predict the dynamics of the GoL, our results suggest that there might be a trade-off between high performance on sensory inputs with different sets of rules.

Reactive, Proactive, and Inductive Agents: An evolutionary path for biological and artificial spiking networks

Feb 18, 2019

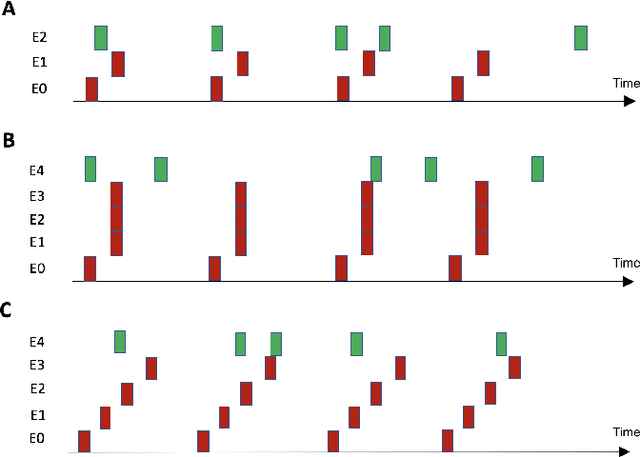

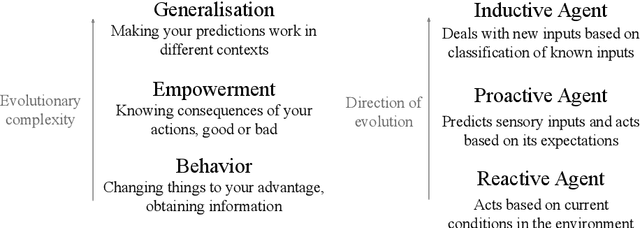

Abstract:Complex environments provide structured yet variable sensory inputs. To best exploit information from these environments, organisms must evolve the ability to correctly anticipate consequences of unknown stimuli, and act on these predictions. We propose an evolutionary path for neural networks, leading an organism from reactive behavior to simple proactive behavior and from simple proactive behavior to induction-based behavior. Through in-vitro and in-silico experiments, we define the minimal conditions necessary in a network with spike-timing dependent plasticity for the organism to go from reactive to proactive behavior. Our results support the existence of small evolutionary steps and four necessary conditions allowing embodied neural networks to evolve predictive and inductive abilities from an initial reactive strategy. We extend these conditions to more general structures.

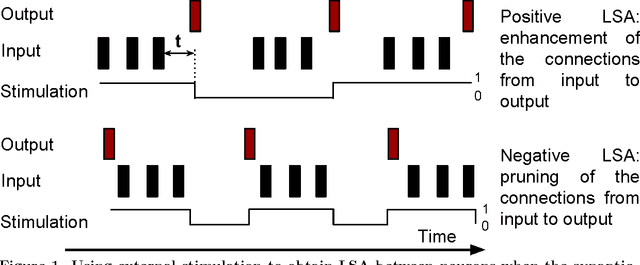

Learning by Stimulation Avoidance: A Principle to Control Spiking Neural Networks Dynamics

Sep 25, 2016

Abstract:Learning based on networks of real neurons, and by extension biologically inspired models of neural networks, has yet to find general learning rules leading to widespread applications. In this paper, we argue for the existence of a principle allowing to steer the dynamics of a biologically inspired neural network. Using carefully timed external stimulation, the network can be driven towards a desired dynamical state. We term this principle "Learning by Stimulation Avoidance" (LSA). We demonstrate through simulation that the minimal sufficient conditions leading to LSA in artificial networks are also sufficient to reproduce learning results similar to those obtained in biological neurons by Shahaf and Marom [1]. We examine the mechanism's basic dynamics in a reduced network, and demonstrate how it scales up to a network of 100 neurons. We show that LSA has a higher explanatory power than existing hypotheses about the response of biological neural networks to external stimulation, and can be used as a learning rule for an embodied application: learning of wall avoidance by a simulated robot. The surge in popularity of artificial neural networks is mostly directed to disembodied models of neurons with biologically irrelevant dynamics: to the authors' knowledge, this is the first work demonstrating sensory-motor learning with random spiking networks through pure Hebbian learning.
