Atsushi Masumori

A Concurrent Modular Agent: Framework for Autonomous LLM Agents

Aug 26, 2025

Abstract: We introduce the Concurrent Modular Agent (CMA), a framework that orchestrates multiple Large-Language-Model (LLM)-based modules that operate fully asynchronously yet maintain a coherent and fault-tolerant behavioral loop. This framework addresses long-standing difficulties in agent architectures by letting intention emerge from language-mediated interactions among autonomous processes. This approach enables flexible, adaptive, and context-dependent behavior through the combination of concurrently executed modules that offload reasoning to an LLM, inter-module communication, and a single shared global state. We consider this approach to be a practical realization of Minsky's Society of Mind theory. We demonstrate the viability of our system through two practical use-case studies. The emergent properties observed in our system suggest that complex cognitive phenomena like self-awareness may indeed arise from the organized interaction of simpler processes, supporting Minsky's Society of Mind concept and opening new avenues for artificial intelligence research. The source code for our work is available at: https://github.com/AlternativeMachine/concurrent-modular-agent.
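As a rough illustration of the architecture the abstract describes (concurrently running modules, inter-module messages, and one shared state), here is a minimal sketch using Python's `asyncio`. It is not the API of the linked repository: the module names, the `fake_llm` stub, and the queue-based channel are all assumptions made for this example.

```python
import asyncio


def fake_llm(prompt: str) -> str:
    """Stand-in for an LLM call (assumption: a real module would query a model)."""
    return f"noted: {prompt}"


async def sensor_module(outbox: asyncio.Queue) -> None:
    """Hypothetical module: publishes three observations, then a stop sentinel."""
    for i in range(3):
        await outbox.put(f"observation {i}")
        await asyncio.sleep(0)  # yield control so other modules can run
    await outbox.put(None)  # sentinel: no more messages


async def reasoning_module(state: dict, inbox: asyncio.Queue) -> None:
    """Hypothetical module: reads messages and records results in shared state."""
    while True:
        message = await inbox.get()
        if message is None:
            break
        state.setdefault("memory", []).append(fake_llm(message))


async def run_agent() -> dict:
    state: dict = {}                          # the single shared global state
    channel: asyncio.Queue = asyncio.Queue()  # inter-module communication
    # Both modules run concurrently; neither calls the other directly.
    await asyncio.gather(
        sensor_module(channel),
        reasoning_module(state, channel),
    )
    return state


if __name__ == "__main__":
    print(asyncio.run(run_agent()))
```

The modules only interact through the queue and the shared dictionary, which is the property the abstract emphasizes; a real system would replace `fake_llm` with an actual model call and add error handling per module.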

Spontaneous Emergence of Agent Individuality through Social Interactions in LLM-Based Communities

Nov 05, 2024

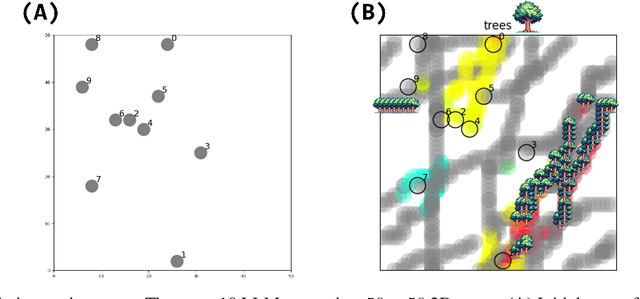

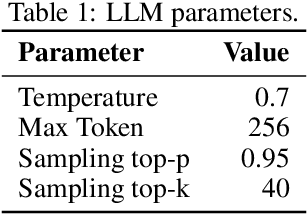

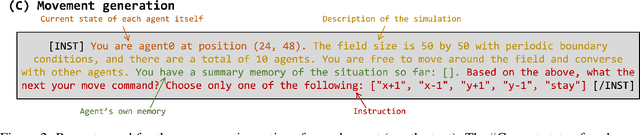

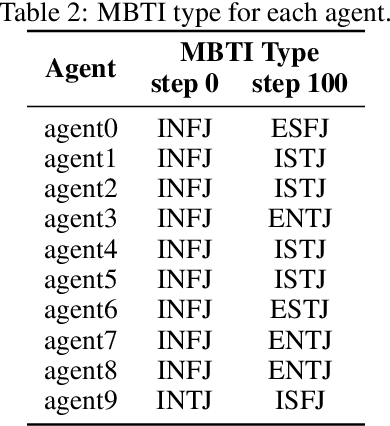

Abstract: We study the emergence of agency from scratch by using Large Language Model (LLM)-based agents. In previous studies of LLM-based agents, each agent's characteristics, including personality and memory, have traditionally been predefined. We focused on how individuality, such as behavior, personality, and memory, can be differentiated from an undifferentiated state. The present LLM agents engage in cooperative communication within a group simulation, exchanging context-based messages in natural language. By analyzing this multi-agent simulation, we report valuable new insights into how social norms, cooperation, and personality traits can emerge spontaneously. This paper demonstrates that autonomously interacting LLM-powered agents generate hallucinations and hashtags to sustain communication, which, in turn, increases the diversity of words within their interactions. Each agent's emotions shift through communication, and as they form communities, the personalities of the agents emerge and evolve accordingly. This computational modeling approach and its findings will provide a new method for analyzing collective artificial intelligence.

Minimal Self in Humanoid Robot "Alter3" Driven by Large Language Model

Jun 17, 2024

Abstract: This paper introduces Alter3, a humanoid robot that demonstrates spontaneous motion generation through the integration of GPT-4, a Large Language Model (LLM). This overcomes challenges in applying language models to direct robot control. By translating linguistic descriptions into actions, Alter3 can autonomously perform various tasks. The key aspect of humanoid robots is their ability to mimic human movement and emotions, allowing them to leverage human knowledge from language models. This raises the question of whether Alter3+GPT-4 can develop a "minimal self" with a sense of agency and ownership. This paper introduces mirror self-recognition and rubber hand illusion tests to assess Alter3's potential for a sense of self. The research suggests that even disembodied language models can develop agency when coupled with a physical robotic platform.

Self-Replicating and Self-Employed Smart Contract on Ethereum Blockchain

May 07, 2024

Abstract: Blockchain is the underlying technology for cryptocurrencies such as Bitcoin. Blockchain is a robust distributed ledger that uses consensus algorithms to approve transactions in a decentralized manner, making malicious tampering extremely difficult. Ethereum, one such blockchain, can be seen as an unstoppable computer that is shared by users around the world and can run Turing-complete programs. In order to run any program on Ethereum, Ether (the currency on Ethereum) is required. In other words, Ether can be seen as a kind of energy in the Ethereum world. We developed self-replicating and self-employed agents on the Ethereum blockchain that earn this energy on their own in order to replicate themselves. The agents can issue their own tokens and gain Ether each time the tokens are sold. When a certain amount of Ether is accumulated, the agent replicates itself and leaves offspring. The goal of this project is to implement artificial agents that live for themselves, not as tools for humans, in the open cyberspace connected to the real world.
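The earn-then-replicate loop described in the abstract can be sketched as a toy off-chain simulation. This is only an illustration of the logic, not the authors' smart contract: the class name, the token price, and the replication threshold are assumptions, and no Ethereum library is involved.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Toy agent; every name and number here is an illustrative assumption."""
    balance: float = 0.0           # accumulated Ether
    token_price: float = 1.0       # Ether earned per token sold
    replication_cost: float = 3.0  # Ether needed to leave one offspring
    children: list = field(default_factory=list)

    def sell_token(self) -> None:
        """Earn Ether for one token sale, then replicate if affordable."""
        self.balance += self.token_price
        if self.balance >= self.replication_cost:
            self.balance -= self.replication_cost
            # The offspring inherits the parent's parameters but starts empty.
            self.children.append(
                Agent(token_price=self.token_price,
                      replication_cost=self.replication_cost)
            )


agent = Agent()
for _ in range(7):
    agent.sell_token()
print(len(agent.children), agent.balance)
```

With these assumed values, every third sale pays for one offspring, so seven sales leave two children and one unit of Ether in reserve.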

From Text to Motion: Grounding GPT-4 in a Humanoid Robot "Alter3"

Dec 11, 2023

Abstract: We report the development of Alter3, a humanoid robot capable of generating spontaneous motion using a Large Language Model (LLM), specifically GPT-4. This achievement was realized by integrating GPT-4 into our proprietary android, Alter3, thereby effectively grounding the LLM with Alter's bodily movement. Typically, low-level robot control is hardware-dependent and falls outside the scope of LLM corpora, presenting challenges for direct LLM-based robot control. However, in the case of humanoid robots like Alter3, direct control is feasible by mapping the linguistic expressions of human actions onto the robot's body through program code. Remarkably, this approach enables Alter3 to adopt various poses, such as a 'selfie' stance or 'pretending to be a ghost,' and generate sequences of actions over time without explicit programming for each body part. This demonstrates the robot's zero-shot learning capabilities. Additionally, verbal feedback can adjust poses, obviating the need for fine-tuning. A video of Alter3's generated motions is available at https://tnoinkwms.github.io/ALTER-LLM/

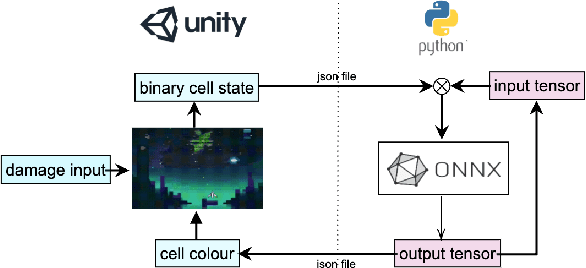

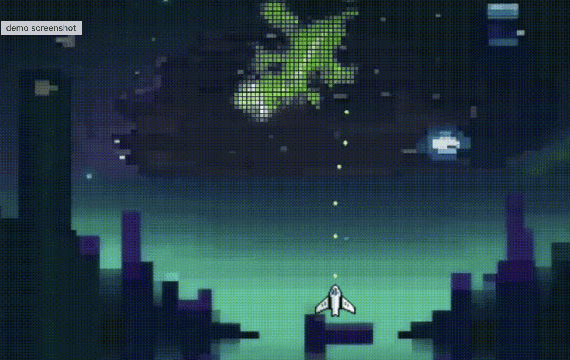

Automata Quest: NCAs as a Video Game Life Mechanic

Sep 23, 2023

Abstract: We study life over the course of video game history as represented by their mechanics. While there have been some variations depending on genre or "character type", we find that most games converge to a similar representation. We also examine the development of Conway's Game of Life (one of the first zero player games) and related automata that have developed over the years. With this history in mind, we investigate the viability of one popular form of automata, namely Neural Cellular Automata, as a way to more fully express life within video game settings and innovate new game mechanics or gameplay loops.

* This article was submitted to and presented at the Alife for and from Video Games Workshop at ALIFE2023, Sapporo (Japan)
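For readers unfamiliar with the automaton mentioned in the abstract, one generation of Conway's Game of Life can be written in a few lines. This sketch shows only the classic fixed rule on an unbounded grid; it is not a Neural Cellular Automaton, which would replace the hand-written rule with a learned update function. The function and variable names are chosen for this example.

```python
from collections import Counter


def life_step(alive: set) -> set:
    """Advance Conway's Game of Life by one generation on an unbounded grid."""
    # Count, for every cell adjacent to a live cell, how many live neighbors it has.
    neighbor_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in alive
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A cell is alive next step with exactly 3 neighbors,
    # or with 2 neighbors if it is already alive.
    return {
        cell
        for cell, n in neighbor_counts.items()
        if n == 3 or (n == 2 and cell in alive)
    }


blinker = {(0, 1), (1, 1), (2, 1)}  # horizontal bar of three cells
print(sorted(life_step(blinker)))
```

The three-cell "blinker" flips between a horizontal and a vertical bar every generation, which makes it a convenient sanity check for any implementation.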

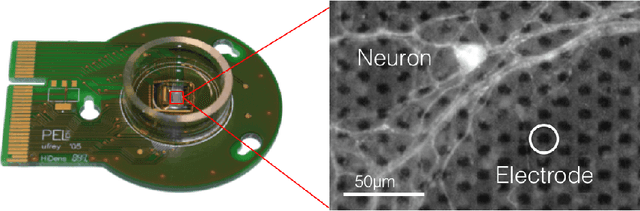

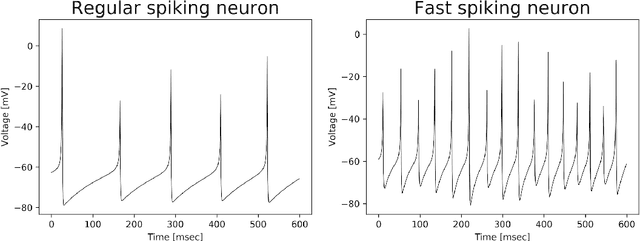

Neural Autopoiesis: Organizing Self-Boundary by Stimulus Avoidance in Biological and Artificial Neural Networks

Jan 27, 2020

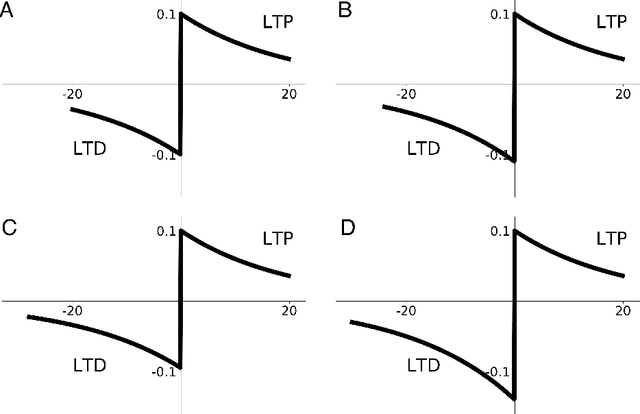

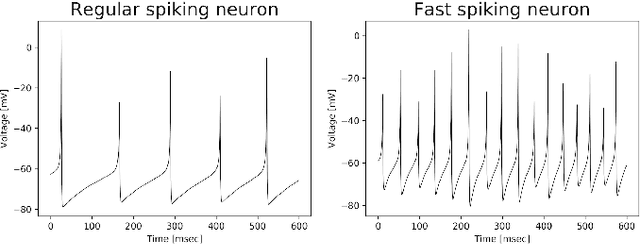

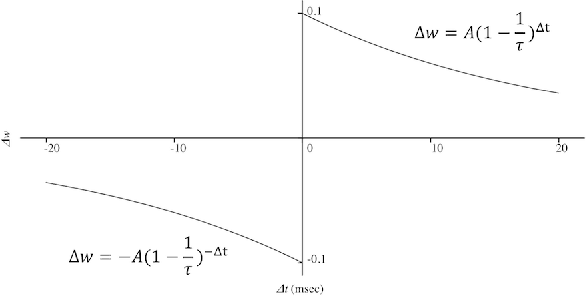

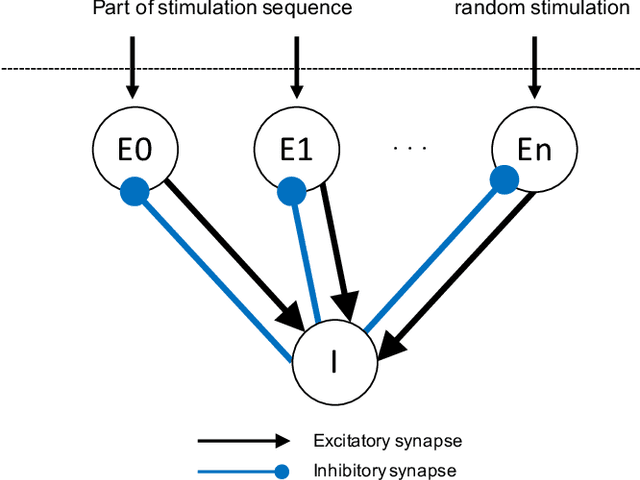

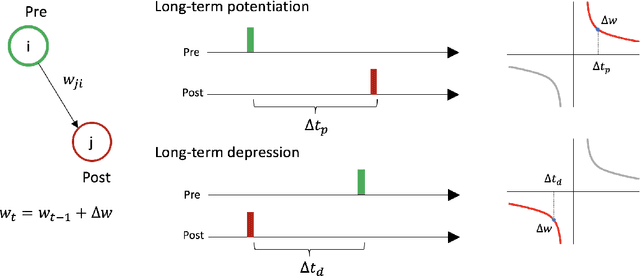

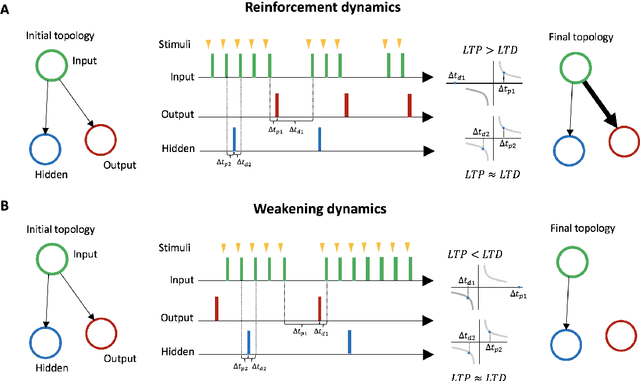

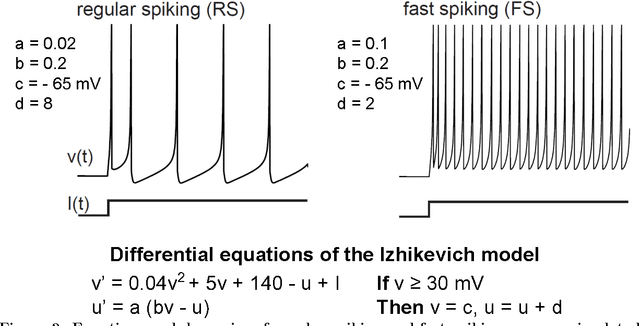

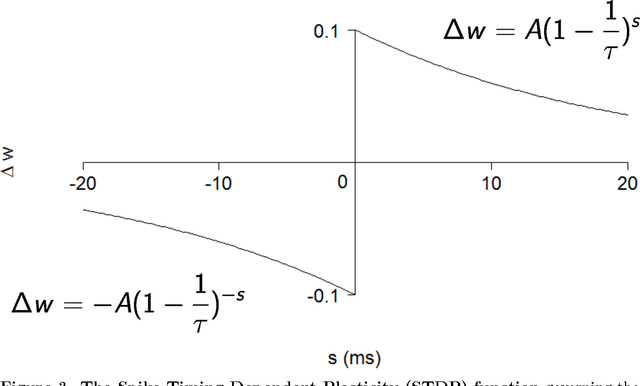

Abstract: Living organisms must actively maintain themselves in order to continue existing. Autopoiesis is a key concept in the study of living organisms, in which the boundary of the organism is not static but is dynamically regulated by the system itself. To study the autonomous regulation of self-boundary, we focus on neural homeodynamic responses to environmental changes using both biological and artificial neural networks. Previous studies showed that embodied cultured neural networks and spiking neural networks with spike-timing dependent plasticity (STDP) learn an action as they avoid stimulation from outside. In this paper, as a result of our experiments using embodied cultured neurons, we find that there is also a second property allowing the network to avoid stimulation: if the agent cannot learn an action to avoid the external stimuli, it tends to decrease the stimulus-evoked spikes, as if to ignore the uncontrollable input. We also show that such behavior is reproduced by spiking neural networks with asymmetric STDP. We regard these properties as autonomous regulation of self and non-self for the network, in which a controllable neuron is regarded as self and an uncontrollable neuron as non-self. Finally, we introduce neural autopoiesis by proposing the principle of stimulus avoidance.

Predictive Coding as Stimulus Avoidance in Spiking Neural Networks

Nov 21, 2019

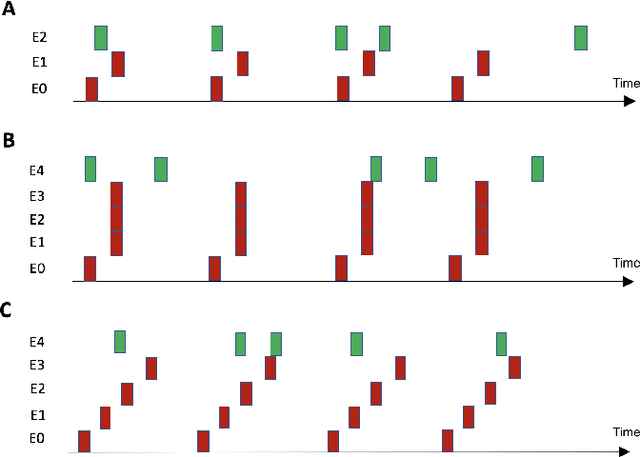

Abstract: Predictive coding can be regarded as a function which reduces the error between an input signal and a top-down prediction. If reducing the error is equivalent to reducing the influence of stimuli from the environment, predictive coding can be regarded as stimulation avoidance by prediction. Our previous studies showed that action and selection for stimulation avoidance emerge in spiking neural networks through spike-timing dependent plasticity (STDP). In this study, we demonstrate that spiking neural networks with random structure spontaneously learn to predict temporal sequences of stimuli based solely on STDP.
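Since several of the abstracts in this list rely on spike-timing dependent plasticity, a generic pair-based STDP update may help as background. This is the common textbook form with an exponential window, not the specific rule or parameters used in the papers: the amplitudes `a_plus` and `a_minus` and the time constant `tau` are assumed values for illustration.

```python
import math


def stdp_delta_w(t_pre: float, t_post: float,
                 a_plus: float = 1.0, a_minus: float = 0.8,
                 tau: float = 20.0) -> float:
    """Weight change for one pre/post spike pair (times in ms).

    Generic pair-based STDP with an exponential window; all parameter
    values are illustrative assumptions.
    """
    dt = t_post - t_pre
    if dt > 0:   # presynaptic spike came first: strengthen the synapse
        return a_plus * math.exp(-dt / tau)
    if dt < 0:   # postsynaptic spike came first: weaken the synapse
        return -a_minus * math.exp(dt / tau)
    return 0.0   # simultaneous spikes: no change in this sketch


print(stdp_delta_w(0.0, 5.0), stdp_delta_w(5.0, 0.0))
```

The sign of the change depends only on spike order, and its size decays with the time gap, so a synapse whose input reliably precedes the output spike is strengthened over repeated pairings.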

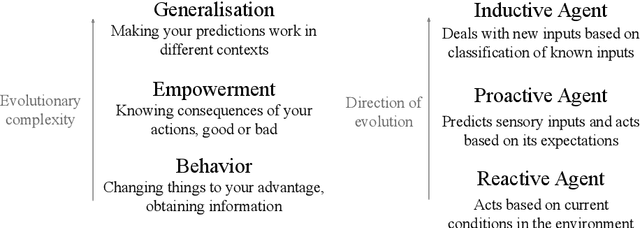

Reactive, Proactive, and Inductive Agents: An evolutionary path for biological and artificial spiking networks

Feb 18, 2019

Abstract: Complex environments provide structured yet variable sensory inputs. To best exploit information from these environments, organisms must evolve the ability to correctly anticipate consequences of unknown stimuli, and act on these predictions. We propose an evolutionary path for neural networks, leading an organism from reactive behavior to simple proactive behavior and from simple proactive behavior to induction-based behavior. Through in-vitro and in-silico experiments, we define the minimal conditions necessary in a network with spike-timing dependent plasticity for the organism to go from reactive to proactive behavior. Our results support the existence of small evolutionary steps and four necessary conditions allowing embodied neural networks to evolve predictive and inductive abilities from an initial reactive strategy. We extend these conditions to more general structures.

Learning by Stimulation Avoidance: A Principle to Control Spiking Neural Networks Dynamics

Sep 25, 2016

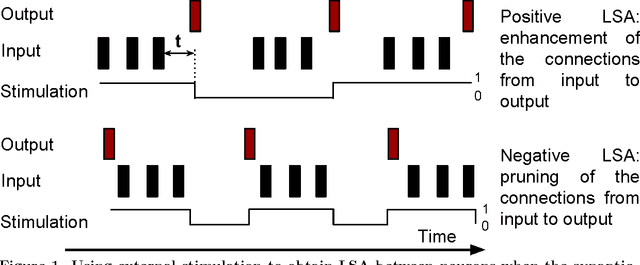

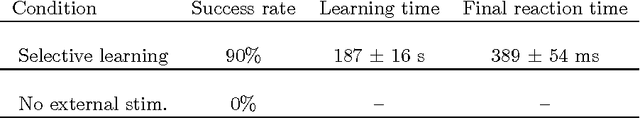

Abstract: Learning based on networks of real neurons, and by extension biologically inspired models of neural networks, has yet to find general learning rules leading to widespread applications. In this paper, we argue for the existence of a principle that allows the dynamics of a biologically inspired neural network to be steered. Using carefully timed external stimulation, the network can be driven towards a desired dynamical state. We term this principle "Learning by Stimulation Avoidance" (LSA). We demonstrate through simulation that the minimal sufficient conditions leading to LSA in artificial networks are also sufficient to reproduce learning results similar to those obtained in biological neurons by Shahaf and Marom [1]. We examine the mechanism's basic dynamics in a reduced network, and demonstrate how it scales up to a network of 100 neurons. We show that LSA has a higher explanatory power than existing hypotheses about the response of biological neural networks to external stimulation, and can be used as a learning rule for an embodied application: learning of wall avoidance by a simulated robot. The surge in popularity of artificial neural networks is mostly directed to disembodied models of neurons with biologically irrelevant dynamics: to the authors' knowledge, this is the first work demonstrating sensory-motor learning with random spiking networks through pure Hebbian learning.
