Eiji Watanabe

Using SlowFast Networks for Near-Miss Incident Analysis in Dashcam Videos

Dec 05, 2024

Abstract: This paper classifies near-miss traffic videos using the SlowFast deep neural network, which mimics the slow and fast visual information processed by two different streams from the M (Magnocellular) and P (Parvocellular) cells of the human brain. The approach significantly improves the accuracy of near-miss traffic video analysis and offers insights into human visual perception in traffic scenarios. Moreover, it contributes to traffic safety enhancements and provides novel perspectives on the potential cognitive errors behind traffic accidents.

* Best Research Paper Award for Asia-Pacific Region, The 30th ITS World Congress 2024

Evolutionary Generation of Visual Motion Illusions

Dec 25, 2021

Abstract: Why do we sometimes perceive static images as if they were moving? Visual motion illusions enjoy sustained popularity, yet there is no definitive answer to the question of why they work. We present a generative model, the Evolutionary Illusion GENerator (EIGen), that creates new visual motion illusions. The structure of EIGen supports the hypothesis that illusory motion might be the result of perceiving the brain's own predictions rather than perceiving raw visual input from the eyes. The scientific motivation of this paper is to demonstrate that the perception of illusory motion could be a side effect of the predictive abilities of the brain. The philosophical motivation of this paper is to call attention to the untapped potential of "motivated failures", ways for artificial systems to fail as biological systems fail, as a worthy outlet for Artificial Intelligence and Artificial Life research.

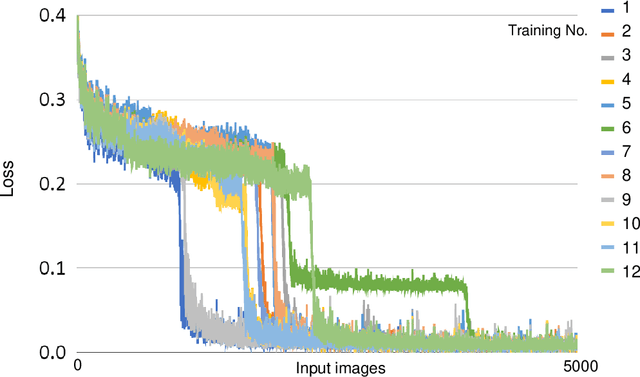

Impact of GPU uncertainty on the training of predictive deep neural networks

Sep 25, 2021

Abstract: [retracted] We found that the difference depended on the Chainer library and does not replicate with another library (PyTorch), which indicates that the results are probably due to a bug in Chainer rather than being hardware-dependent.

Old abstract: Deep neural networks often present uncertainties such as hardware- and software-derived noise and randomness. We studied the effects of such uncertainty on learning outcomes, with a particular focus on the function of graphics processing units (GPUs), and found that GPU-induced uncertainty increased the learning accuracy of a certain deep neural network. When training a predictive deep neural network using only the CPU without the GPU, the learning error is higher than when training for the same number of epochs using the GPU, suggesting that the GPU plays a different role in the learning process than just increasing the computational speed. Because this effect cannot be observed in learning by a simple autoencoder, it could be a phenomenon specific to certain types of neural networks. GPU-specific computational processing is more indeterminate than that of CPUs, and hardware-derived uncertainties, which are often considered obstacles that need to be eliminated, might, in some cases, be successfully incorporated into the training of deep neural networks. Moreover, such uncertainties might be interesting phenomena to consider in brain-related computational processing, which comprises a large mass of uncertain signals.
