Manuel Baltieri

AI in a vat: Fundamental limits of efficient world modelling for agent sandboxing and interpretability

Apr 06, 2025

Abstract: Recent work proposes using world models to generate controlled virtual environments in which AI agents can be tested before deployment to ensure their reliability and safety. However, accurate world models often have high computational demands that can severely restrict the scope and depth of such assessments. Inspired by the classic 'brain in a vat' thought experiment, here we investigate ways of simplifying world models that remain agnostic to the AI agent under evaluation. By following principles from computational mechanics, our approach reveals a fundamental trade-off in world model construction between efficiency and interpretability, demonstrating that no single world model can optimise all desirable characteristics. Building on this trade-off, we identify procedures to build world models that either minimise memory requirements, delineate the boundaries of what is learnable, or allow tracking causes of undesirable outcomes. In doing so, this work establishes fundamental limits in world modelling, leading to actionable guidelines that inform core design choices related to effective agent evaluation.

Disentangled Representations for Causal Cognition

Jun 30, 2024

Abstract: Complex adaptive agents consistently achieve their goals by solving problems that seem to require an understanding of causal information, information pertaining to the causal relationships that exist among elements of combined agent-environment systems. Causal cognition studies and describes the main characteristics of causal learning and reasoning in human and non-human animals, offering a conceptual framework to discuss cognitive performances based on the level of apparent causal understanding of a task. Despite the use of formal intervention-based models of causality, including causal Bayesian networks, psychological and behavioural research on causal cognition does not yet offer a computational account that operationalises how agents acquire a causal understanding of the world. Machine and reinforcement learning research on causality, especially involving disentanglement as a candidate process to build causal representations, represents, on the other hand, a concrete attempt at designing causal artificial agents that can shed light on the inner workings of natural causal cognition. In this work, we connect these two areas of research to build a unifying framework for causal cognition that will offer a computational perspective on studies of animal cognition, and provide insights into the development of new algorithms for causal reinforcement learning in AI.

Hybrid Life: Integrating Biological, Artificial, and Cognitive Systems

Dec 01, 2022

Abstract: Artificial life is a research field studying what processes and properties define life, based on a multidisciplinary approach spanning the physical, natural and computational sciences. Artificial life aims to foster a comprehensive study of life beyond "life as we know it" and towards "life as it could be", with theoretical, synthetic and empirical models of the fundamental properties of living systems. While still a relatively young field, artificial life has flourished as an environment for researchers with different backgrounds, welcoming ideas and contributions from a wide range of subjects. Hybrid Life is an attempt to bring attention to some of the most recent developments within the artificial life community, rooted in more traditional artificial life studies but looking at new challenges emerging from interactions with other fields. In particular, Hybrid Life focuses on three complementary themes: 1) theories of systems and agents, 2) hybrid augmentation, with augmented architectures combining living and artificial systems, and 3) hybrid interactions among artificial and biological systems. After discussing some of the major sources of inspiration for these themes, we will focus on an overview of the works that appeared in Hybrid Life special sessions, hosted by the annual Artificial Life Conference between 2018 and 2022.

Scaling active inference

Nov 24, 2019

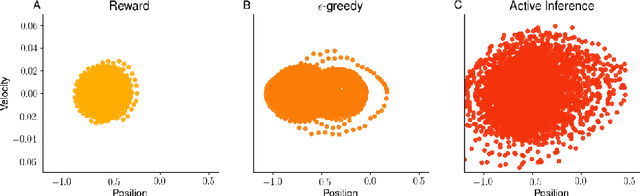

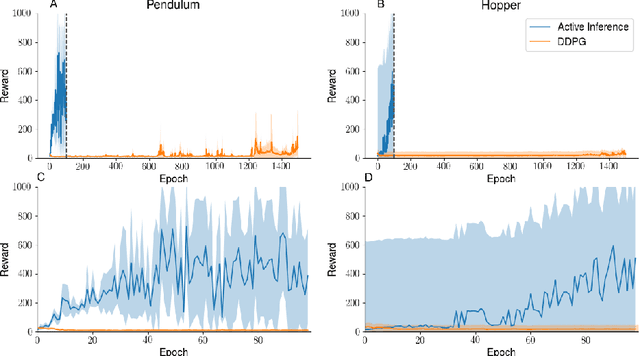

Abstract: In reinforcement learning (RL), agents often operate in partially observed and uncertain environments. Model-based RL suggests that this is best achieved by learning and exploiting a probabilistic model of the world. 'Active inference' is an emerging normative framework in cognitive and computational neuroscience that offers a unifying account of how biological agents achieve this. On this framework, inference, learning and action emerge from a single imperative to maximize the Bayesian evidence for a niched model of the world. However, implementations of this process have thus far been restricted to low-dimensional and idealized situations. Here, we present a working implementation of active inference that applies to high-dimensional tasks, with proof-of-principle results demonstrating efficient exploration and an order of magnitude increase in sample efficiency over strong model-free baselines. Our results demonstrate the feasibility of applying active inference at scale and highlight the operational homologies between active inference and current model-based approaches to RL.

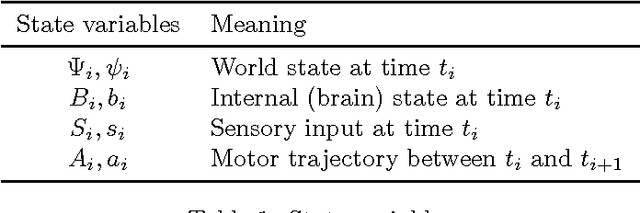

Generative models as parsimonious descriptions of sensorimotor loops

Apr 29, 2019

Abstract: The Bayesian brain hypothesis, predictive processing and variational free energy minimisation are typically used to describe perceptual processes based on accurate generative models of the world. However, generative models need not be veridical representations of the environment. We suggest that they can (and should) be used to describe sensorimotor relationships relevant for behaviour rather than precise accounts of the world.

Nonmodular architectures of cognitive systems based on active inference

Mar 22, 2019

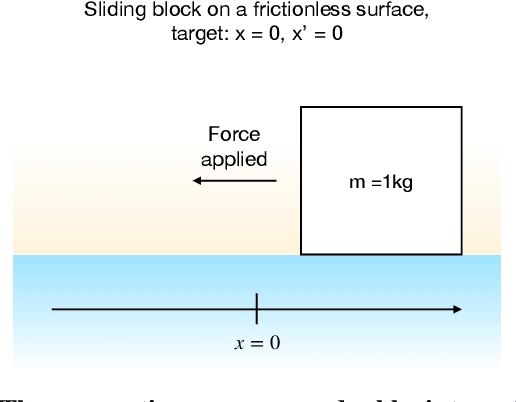

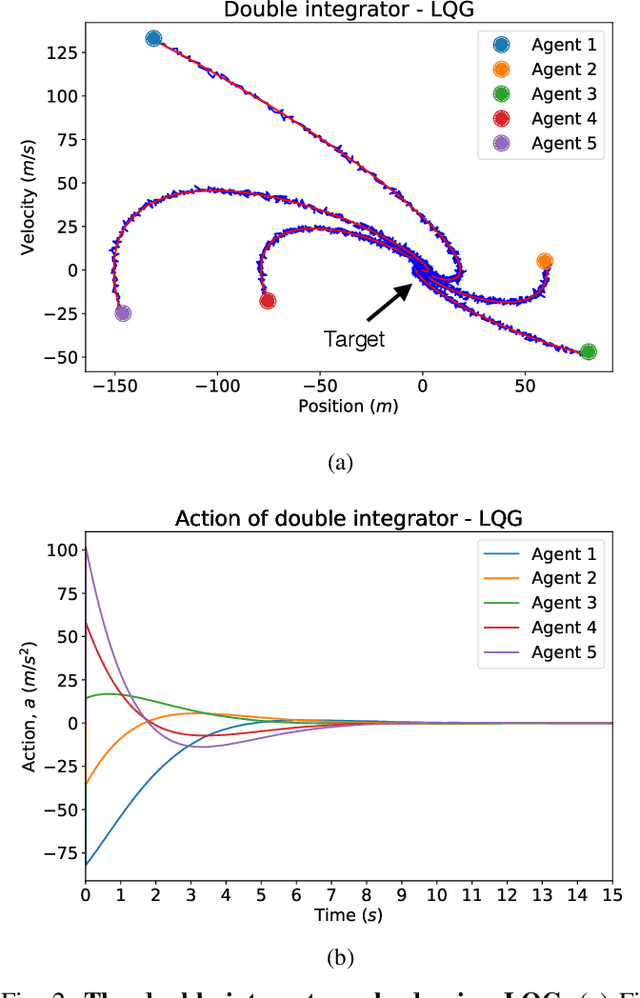

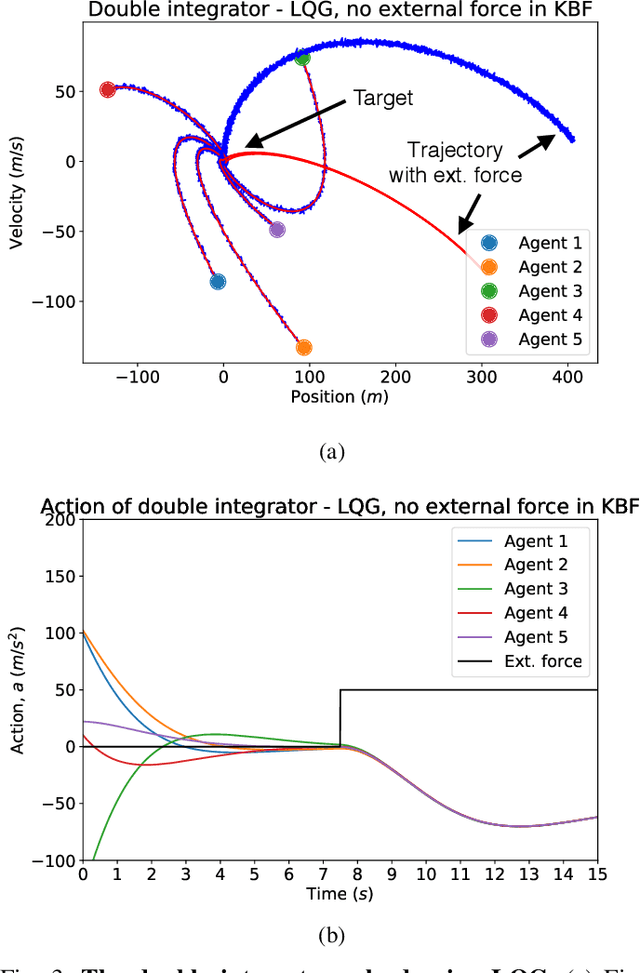

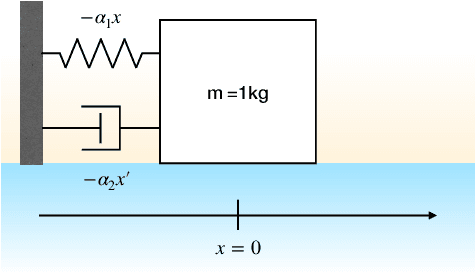

Abstract: In psychology and neuroscience it is common to describe cognitive systems as input/output devices where perceptual and motor functions are implemented in a purely feedforward, open-loop fashion. On this view, perception and action are often seen as encapsulated modules with limited interaction between them. While embodied and enactive approaches to cognitive science have challenged the idealisation of the brain as an input/output device, we argue that even the more recent attempts to model systems using closed-loop architectures still heavily rely on a strong separation between motor and perceptual functions. Previously, we have suggested that the mainstream notion of modularity strongly resonates with the separation principle of control theory. In this work we present a minimal model of a sensorimotor loop implementing an architecture based on the separation principle. We link this to popular formulations of perception and action in the cognitive sciences, and show its limitations when, for instance, external forces are not modelled by an agent. These forces can be seen as variables that an agent cannot directly control, i.e., a perturbation from the environment or an interference caused by other agents. As an alternative approach inspired by embodied cognitive science, we then propose a nonmodular architecture based on the active inference framework. We demonstrate the robustness of this architecture to unknown external inputs and show that the mechanism with which this is achieved in linear models is equivalent to integral control.

A Minimal Active Inference Agent

Mar 13, 2015

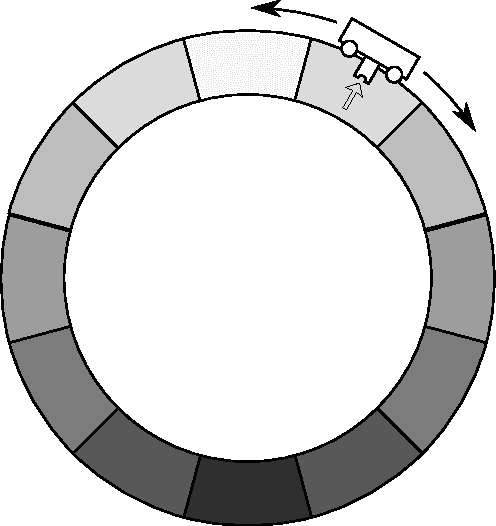

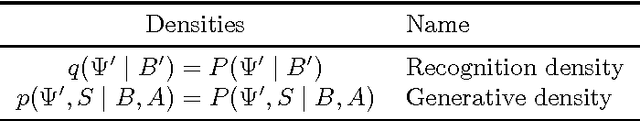

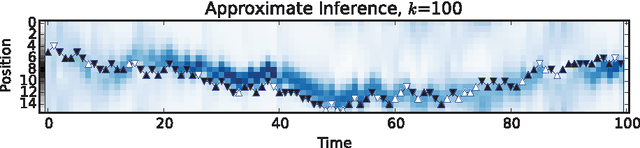

Abstract: Research on the so-called "free-energy principle" (FEP) in cognitive neuroscience is becoming increasingly high-profile. To date, introductions to this theory have proved difficult for many readers to follow, but it depends mainly upon two relatively simple ideas: firstly that normative or teleological values can be expressed as probability distributions (active inference), and secondly that approximate Bayesian reasoning can be effectively performed by gradient descent on model parameters (the free-energy principle). The notion of active inference is of great interest for a number of disciplines including cognitive science and artificial intelligence, as well as cognitive neuroscience, and deserves to be more widely known. This paper attempts to provide an accessible introduction to active inference and informational free-energy, for readers from a range of scientific backgrounds. In this work we introduce an agent-based model with an agent trying to make predictions about its position in a one-dimensional discretized world using methods from the FEP.
