Michael Crosscombe

A Simulation Environment for the Neuroevolution of Ant Colony Dynamics

Jun 19, 2024

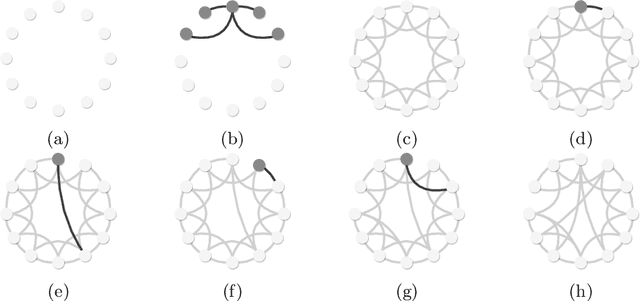

Abstract: We introduce a simulation environment to facilitate research into emergent collective behaviour, with a focus on replicating the dynamics of ant colonies. By leveraging real-world data, the environment simulates a target ant trail that a controllable agent must learn to replicate, using sensory data observed by the target ant. This work aims to contribute to the neuroevolution of models for collective behaviour, focusing on evolving neural architectures that encode domain-specific behaviours in the network topology. By evolving models that can be modified and studied in a controlled environment, we can uncover the conditions necessary for collective behaviours to emerge. We hope this environment will be useful to those studying the role of interactions in emergent behaviour within collective systems.

The Impact of Network Connectivity on Collective Learning

Jun 18, 2021

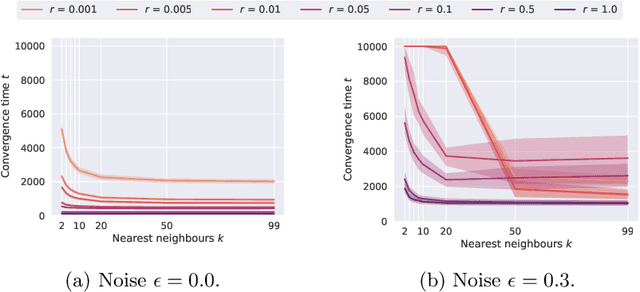

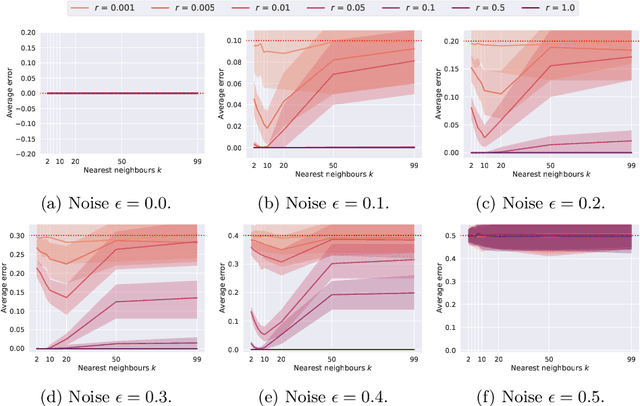

Abstract: In decentralised autonomous systems it is the interactions between individual agents which govern the collective behaviours of the system. These local-level interactions are themselves often governed by an underlying network structure. These networks are particularly important for collective learning and decision-making whereby agents must gather evidence from their environment and propagate this information to other agents in the system. Models for collective behaviours often rely upon the assumption of total connectivity between agents to provide effective information sharing within the system, but this assumption may be ill-advised. In this paper we investigate the impact that the underlying network has on performance in the context of collective learning. Through simulations we study small-world networks with varying levels of connectivity and randomness and conclude that totally connected networks result in higher average error when compared to networks with less connectivity. Furthermore, we show that networks of high regularity outperform networks with increasing levels of random connectivity.
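As a rough illustration of what "varying levels of connectivity and randomness" could mean in practice, the sketch below builds a small-world graph using a Watts-Strogatz-style construction. The abstract does not name the construction used in the paper, so the function `small_world` and its parameters `n`, `k` (connectivity) and `p` (rewiring probability, i.e. randomness) are assumptions made purely for illustration.

```python
import random


def small_world(n, k, p, seed=None):
    """Build an undirected small-world graph as an adjacency dict.

    Assumed construction (Watts-Strogatz style, not confirmed by the paper):
    start from a ring lattice in which each of the n nodes is linked to its
    k nearest neighbours (k even, k < n), then rewire each lattice edge
    with probability p to a uniformly chosen new endpoint.
    """
    if k % 2 != 0 or not 0 < k < n:
        raise ValueError("k must be even and satisfy 0 < k < n")
    rng = random.Random(seed)

    # Regular ring lattice: node i is linked to i+1, ..., i+k/2 (mod n).
    neighbours = {i: set() for i in range(n)}
    for i in range(n):
        for offset in range(1, k // 2 + 1):
            j = (i + offset) % n
            neighbours[i].add(j)
            neighbours[j].add(i)

    # Visit each lattice edge once; with probability p replace it by an
    # edge from i to a node that i is not already connected to.
    for offset in range(1, k // 2 + 1):
        for i in range(n):
            j = (i + offset) % n
            if rng.random() < p:
                candidates = [c for c in range(n)
                              if c != i and c not in neighbours[i]]
                if candidates:
                    new = rng.choice(candidates)
                    neighbours[i].discard(j)
                    neighbours[j].discard(i)
                    neighbours[i].add(new)
                    neighbours[new].add(i)
    return neighbours


regular = small_world(20, 4, 0.0, seed=1)    # p = 0: fully regular lattice
rewired = small_world(20, 4, 0.5, seed=1)    # p = 0.5: half-random links
```

Under this assumed construction, `p = 0` gives a fully regular lattice and larger `p` gives more random connectivity, while `k` close to `n - 1` approaches total connectivity. Rewiring never changes the number of edges, so connectivity and randomness can be varied independently.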

Evidence Propagation and Consensus Formation in Noisy Environments

May 13, 2019

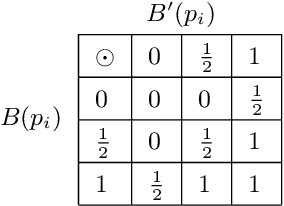

Abstract: We study the effectiveness of consensus formation in multi-agent systems where there is both belief updating based on direct evidence and also belief combination between agents. In particular, we consider the scenario in which a population of agents collaborate on the best-of-n problem where the aim is to reach a consensus about which is the best (alternatively, true) state from amongst a set of states, each with a different quality value (or level of evidence). Agents' beliefs are represented within Dempster-Shafer theory by mass functions and we investigate the macro-level properties of four well-known belief combination operators for this multi-agent consensus formation problem: Dempster's rule, Yager's rule, Dubois & Prade's operator and the averaging operator. The convergence properties of the operators are considered and simulation experiments are conducted for different evidence rates and noise levels. Results show that a combination of updating from direct evidence and belief combination between agents results in better consensus to the best state than does evidence updating alone. We also find that in this framework the operators are robust to noise. Broadly, Dubois & Prade's operator results in better convergence to the best state. Finally, we consider how well the Dempster-Shafer approach to the best-of-n problem scales to large numbers of states.
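To make the first of the four operators concrete, here is a minimal sketch of Dempster's rule of combination for two mass functions. The representation (a `dict` mapping `frozenset` focal sets to masses), the function name `dempster_combine` and the example states `"s1"` and `"s2"` are illustrative assumptions, not taken from the paper.

```python
def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function is a dict mapping a frozenset of states (a focal
    set) to its mass, with masses summing to 1. Mass assigned to pairs of
    focal sets with empty intersection is treated as conflict and removed
    by renormalisation.
    """
    combined = {}
    conflict = 0.0
    for set_a, mass_a in m1.items():
        for set_b, mass_b in m2.items():
            intersection = set_a & set_b
            if intersection:
                combined[intersection] = (
                    combined.get(intersection, 0.0) + mass_a * mass_b
                )
            else:
                conflict += mass_a * mass_b
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {s: m / (1.0 - conflict) for s, m in combined.items()}


# Two agents with partially conflicting beliefs about states "s1" and "s2".
agent_1 = {frozenset({"s1"}): 0.6, frozenset({"s1", "s2"}): 0.4}
agent_2 = {frozenset({"s2"}): 0.5, frozenset({"s1", "s2"}): 0.5}
result = dempster_combine(agent_1, agent_2)
```

In this example the conflicting mass is 0.6 × 0.5 = 0.3, so the remaining products are divided by 0.7. Yager's rule and Dubois & Prade's operator differ from Dempster's rule in how they reassign this conflicting mass rather than normalising it away.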

A Model of Multi-Agent Consensus for Vague and Uncertain Beliefs

Jan 12, 2018

Abstract: Consensus formation is investigated for multi-agent systems in which agents' beliefs are both vague and uncertain. Vagueness is represented by a third truth state meaning "borderline". This is combined with a probabilistic model of uncertainty. A belief combination operator is then proposed which exploits borderline truth values to enable agents with conflicting beliefs to reach a compromise. A number of simulation experiments are carried out in which agents apply this operator in pairwise interactions, under the bounded confidence restriction that the two agents' beliefs must be sufficiently consistent with each other before agreement can be reached. As well as studying the consensus operator in isolation we also investigate scenarios in which agents are influenced either directly or indirectly by the state of the world. For the former we conduct simulations which combine consensus formation with belief updating based on evidence. For the latter we investigate the effect of assuming that the closer an agent's beliefs are to the truth the more visible they are in the consensus building process. In all cases applying the consensus operators results in the population converging to a single shared belief which is both crisp and certain. Furthermore, simulations which combine consensus formation with evidential updating converge faster to a shared opinion which is closer to the actual state of the world than those in which beliefs are only changed as a result of directly receiving new evidence. Finally, if agent interactions are guided by belief quality measured as similarity to the true state of the world, then applying the consensus operator alone results in the population converging to a high quality shared belief.

Exploiting Vagueness for Multi-Agent Consensus

Sep 20, 2016

Abstract: A framework for consensus modelling is introduced using Kleene's three-valued logic as a means to express vagueness in agents' beliefs. Explicitly borderline cases are inherent to propositions involving vague concepts where sentences of a propositional language may be absolutely true, absolutely false or borderline. By exploiting these intermediate truth values, we can allow agents to adopt a more vague interpretation of underlying concepts in order to weaken their beliefs and reduce the levels of inconsistency, so as to achieve consensus. We consider a consensus combination operation which results in agents adopting the borderline truth value as a shared viewpoint if they are in direct conflict. Simulation experiments are presented which show that applying this operator to agents chosen at random (subject to a consistency threshold) from a population, with initially diverse opinions, results in convergence to a smaller set of more precise shared beliefs. Furthermore, if the choice of agents for combination is dependent on the payoff of their beliefs, this acting as a proxy for performance or usefulness, then the system converges to beliefs which, on average, have higher payoff.
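The consensus operation described above can be sketched as follows. The abstract only states that direct conflict (true versus false) yields the borderline value; the remaining cases here (agreement is preserved, and a crisp value wins over a borderline one) are assumptions chosen to be consistent with the reported convergence to more precise beliefs. The integer encoding, the function names and the `inconsistency` measure are likewise hypothetical.

```python
# Assumed encoding of the three truth values.
TRUE, BORDERLINE, FALSE = 1, 0, -1


def combine_values(a, b):
    """Combine two truth values for a single proposition.

    Direct conflict (TRUE vs FALSE) yields BORDERLINE, as the abstract
    states. Assumed for the other cases: agreement is kept, and a crisp
    value paired with BORDERLINE yields the crisp value. With the encoding
    above this is the sum of the two values clipped to [-1, 1].
    """
    return max(FALSE, min(TRUE, a + b))


def consensus(belief_a, belief_b):
    """Apply the operator proposition by proposition to two beliefs."""
    if len(belief_a) != len(belief_b):
        raise ValueError("beliefs must cover the same propositions")
    return tuple(combine_values(a, b) for a, b in zip(belief_a, belief_b))


def inconsistency(belief_a, belief_b):
    """Hypothetical measure: fraction of propositions in direct conflict."""
    conflicts = sum(1 for a, b in zip(belief_a, belief_b) if a * b == -1)
    return conflicts / len(belief_a)


agent_1 = (TRUE, FALSE, BORDERLINE, TRUE)
agent_2 = (FALSE, FALSE, TRUE, BORDERLINE)
shared = consensus(agent_1, agent_2)       # (BORDERLINE, FALSE, TRUE, TRUE)
level = inconsistency(agent_1, agent_2)    # 0.25: one of four in conflict
```

A simulation in the spirit of the abstract would only call `consensus` on a randomly chosen pair when `inconsistency` falls at or below a chosen threshold, with both agents then adopting `shared`.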
