Nitish V. Thakor

Visual Feedback of Pattern Separability Improves Myoelectric Decoding Performance of Upper Limb Prostheses

May 14, 2025

Abstract:State-of-the-art upper limb myoelectric prostheses often use pattern recognition (PR) control systems that translate electromyography (EMG) signals into desired movements. As prosthesis movement complexity increases, users often struggle to produce sufficiently distinct EMG patterns for reliable classification. Existing training typically involves heuristic, trial-and-error user adjustments to static decoder boundaries. Goal: We introduce the Reviewer, a 3D visual interface projecting EMG signals directly into the decoder's classification space, providing intuitive, real-time insight into PR algorithm behavior. This structured feedback reduces cognitive load and fosters mutual, data-driven adaptation between user-generated EMG patterns and decoder boundaries. Methods: A 10-session study with 12 able-bodied participants compared PR performance after motor-based training and updating using the Reviewer versus conventional virtual arm visualization. Performance was assessed using a Fitts' law task that required controlling cursor aperture and orientation. Results: Participants trained with the Reviewer achieved higher completion rates, reduced overshoot, and improved path efficiency and throughput compared to the standard visualization group. Significance: The Reviewer introduces decoder-informed motor training, facilitating immediate and consistent PR-based myoelectric control improvements. By iteratively refining control through real-time feedback, this approach reduces reliance on trial-and-error recalibration, enabling a more adaptive, self-correcting training framework. Conclusion: The 3D visual feedback significantly improves PR control in novice operators through structured training, enabling feedback-driven adaptation and reducing reliance on extensive heuristic adjustments.
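The Fitts' law metrics named in this abstract (throughput and path efficiency) can be illustrated with a minimal sketch. It assumes the common Shannon formulation of the index of difficulty and a straight-line-over-travelled-length definition of path efficiency; the abstract does not state which formulations the study used, and all function names here are hypothetical.

```python
import math

def index_of_difficulty(distance, width):
    """Shannon formulation (an assumption): ID = log2(D / W + 1), in bits."""
    return math.log2(distance / width + 1.0)

def throughput(distance, width, movement_time_s):
    """Throughput in bits per second: index of difficulty over movement time."""
    return index_of_difficulty(distance, width) / movement_time_s

def path_efficiency(path):
    """Straight-line distance from start to end divided by travelled length.

    `path` is a sequence of points (tuples) of equal dimension.
    Returns 0.0 if the cursor never moved.
    """
    travelled = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    straight = math.dist(path[0], path[-1])
    return straight / travelled if travelled > 0 else 0.0

# Example: a target 3 units away with width 1, reached in 2 seconds.
bits = index_of_difficulty(3.0, 1.0)
rate = throughput(3.0, 1.0, 2.0)
# Example: an L-shaped path covering 7 units to end 5 units from the start.
efficiency = path_efficiency([(0.0, 0.0), (3.0, 0.0), (3.0, 4.0)])
```

Under these assumed definitions, a perfectly straight movement has a path efficiency of 1, and any detour or overshoot lowers it.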

Online Adaptation for Myographic Control of Natural Dexterous Hand and Finger Movements

Dec 23, 2024

Abstract:One of the most elusive goals in myographic prosthesis control is the ability to reliably decode continuous positions simultaneously across multiple degrees-of-freedom. Goal: To demonstrate dexterous, natural, biomimetic finger and wrist control of the highly advanced robotic Modular Prosthetic Limb. Methods: We combine sequential temporal regression models and reinforcement learning using myographic signals to produce continuous, simultaneous predictions of 7 finger and wrist degrees-of-freedom for 9 non-amputee human subjects in a minimally-constrained freeform training process. Results: We demonstrate highly dexterous 7 DoF position-based regression for prosthesis control from EMG signals, with significantly lower error rates than traditional approaches (p < 0.001) and nearly zero prediction response time delay (p < 0.001). Model performance can be continuously improved at any time using our freeform reinforcement process. Significance: We have demonstrated the most dexterous, biomimetic, and natural prosthesis control performance ever obtained from the surface EMG signal. Our reinforcement approach allowed us to abandon standard training protocols and simply allow the subject to move in any desired way while our models adapt. Conclusions: This work redefines the state-of-the-art in myographic decoding in terms of the reliability, responsiveness, and movement complexity available from prosthesis control systems. The present-day emergence and convergence of advanced algorithmic methods, experiment protocols, dexterous robotic prostheses, and sensor modalities represents a unique opportunity to finally realize our ultimate goal of achieving fully restorative natural upper-limb function for amputees.

At First Contact: Stiffness Estimation Using Vibrational Information for Prosthetic Grasp Modulation

Nov 27, 2024

Abstract:Stiffness estimation is crucial for delicate object manipulation in robotic and prosthetic hands but remains challenging due to dependence on force and displacement measurement and real-time sensory integration. This study presents a piezoelectric sensing framework for stiffness estimation at first contact during pinch grasps, addressing the limitations of traditional force-based methods. Inspired by human skin, a multimodal tactile sensor that captures vibrational and force data is developed and integrated into a prosthetic hand's fingertip. Machine learning models, including support vector machines and convolutional neural networks, demonstrate that vibrational signals within the critical 15 ms after first contact reliably encode stiffness, achieving classification accuracies up to 98.6% and regression errors as low as 2.39 Shore A on real-world objects of varying stiffness. Inference times of less than 1.5 ms are significantly faster than the average grasp closure time (16.65 ms in our dataset), enabling real-time stiffness estimation before the object is fully grasped. By leveraging the transient asymmetry in grasp dynamics, where one finger contacts the object before the others, this method enables early grasp modulation, enhancing safety and intuitiveness in prosthetic hands while offering broad applications in robotics.

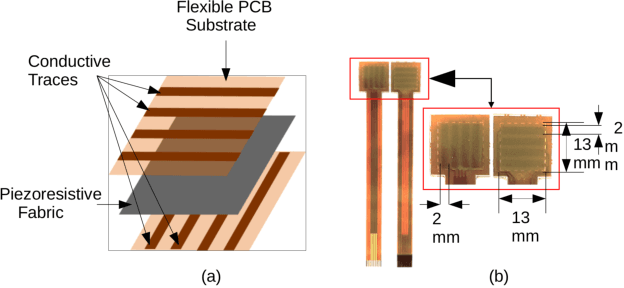

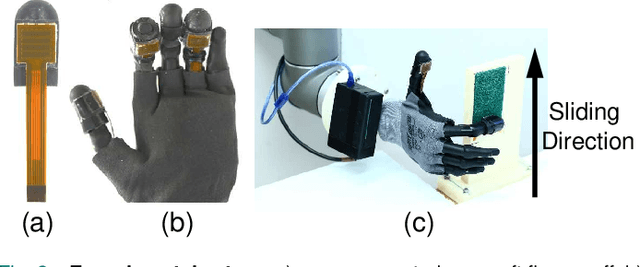

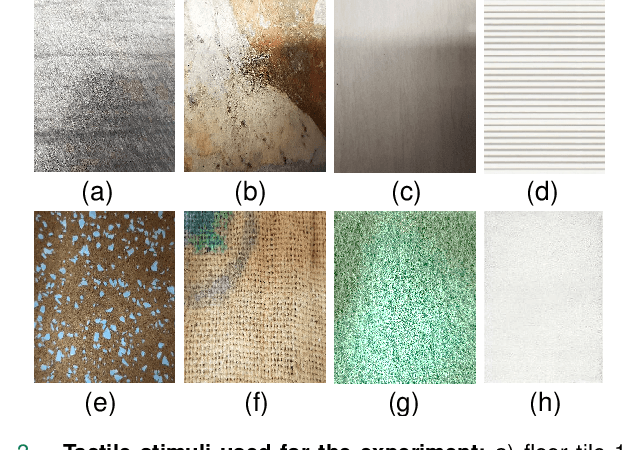

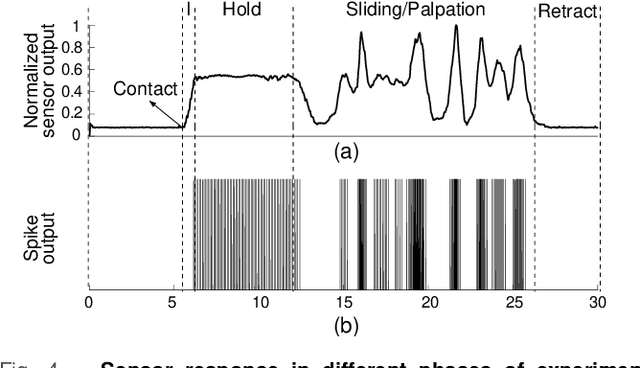

Invariant neuromorphic representations of tactile stimuli improve robustness of a real-time texture classification system

Nov 26, 2024

Abstract:Humans have an exquisite sense of touch which robotic and prosthetic systems aim to recreate. We developed algorithms to create neuron-like (neuromorphic) spiking representations of texture that are invariant to the scanning speed and contact force applied in the sensing process. The spiking representations are based on mimicking activity from mechanoreceptors in human skin and further processing up to the brain. The neuromorphic encoding process transforms analog sensor readings into speed- and force-invariant spiking representations in three sequential stages: the force invariance module (in the analog domain), the spiking activity encoding module (which transforms from the analog to the spiking domain), and the speed invariance module (in the spiking domain). The algorithms were tested on a tactile texture dataset collected in 15 speed-force conditions. An offline texture classification system built on the invariant representations has higher classification accuracy, improved computational efficiency, and increased capability to identify textures explored in novel speed-force conditions. The speed invariance algorithm was adapted to a real-time human-operated texture classification system. Similarly, the invariant representations improved classification accuracy, computational efficiency, and capability to identify textures explored in novel conditions. The invariant representation is even more crucial in this context because human imprecision appears to the classification system as a novel condition. These results demonstrate that invariant neuromorphic representations enable better performing neurorobotic tactile sensing systems. Furthermore, because the neuromorphic representations are based on biological processing, this work can be used in the future as the basis for naturalistic sensory feedback for upper limb amputees.

Spatio-temporal encoding improves neuromorphic tactile texture classification

Oct 27, 2020

Abstract:With growing interest in deploying robots in unstructured environments to work alongside humans, the development of a human-like sense of touch for robots becomes important. In this work, we implement a multi-channel neuromorphic tactile system that encodes contact events as discrete spike events that mimic the behavior of slowly adapting mechanoreceptors. We study the impact of information pooling across artificial mechanoreceptors on classification performance of spatially non-uniform naturalistic textures. We encoded the spatio-temporal activation patterns of mechanoreceptors through a gray-level co-occurrence matrix computed from a time-varying, mean spiking rate-based tactile response volume. We found that this approach greatly improved texture classification in comparison to the use of individual mechanoreceptor responses alone. In addition, the performance was also more robust to changes in sliding velocity. The importance of exploiting precise spatial and temporal correlations between sensory channels is evident from the fact that either removing precise temporal information or altering the spatial structure of the response pattern caused a significant performance drop. This study thus demonstrates the superiority of population coding approaches that can exploit the precise spatio-temporal information encoded in activation patterns of mechanoreceptor populations. It therefore makes an advance toward the development of bio-inspired tactile systems required for realistic touch applications in robotics and prostheses.
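The gray-level co-occurrence matrix mentioned in this abstract can be sketched generically as follows. This is a textbook-style illustration, not the authors' pipeline: it assumes a 2D array of mean spiking rates (for example, channels by time bins), uniform quantization into a small number of gray levels, and a single pixel offset. The function names, the quantization scheme, and the offset are all assumptions made for illustration.

```python
import numpy as np

def quantize(rates, levels):
    """Map a 2D array of rates onto integer gray levels 0..levels-1.

    Uniform min-max quantization is an assumption of this sketch.
    """
    rates = np.asarray(rates, dtype=float)
    lo, hi = rates.min(), rates.max()
    if hi == lo:
        return np.zeros(rates.shape, dtype=int)
    scaled = (rates - lo) / (hi - lo)
    return np.minimum((scaled * levels).astype(int), levels - 1)

def glcm(image, levels, offset=(0, 1)):
    """Normalized co-occurrence matrix of gray-level pairs at a given offset.

    Entry (i, j) is the fraction of pixel pairs where a pixel with level i
    has a neighbour with level j at displacement `offset` (rows, cols).
    """
    dr, dc = offset
    rows, cols = image.shape
    counts = np.zeros((levels, levels), dtype=float)
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r, c], image[r2, c2]] += 1
    total = counts.sum()
    return counts / total if total > 0 else counts

# Hypothetical rate map: 2 channels by 3 time bins.
rate_map = np.array([[0.0, 1.0, 9.0],
                     [8.0, 10.0, 2.0]])
gray = quantize(rate_map, levels=2)
matrix = glcm(gray, levels=2, offset=(0, 1))
```

Texture features such as contrast or homogeneity are typically derived from a matrix of this kind; which features and offsets the study used is not stated in the abstract.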

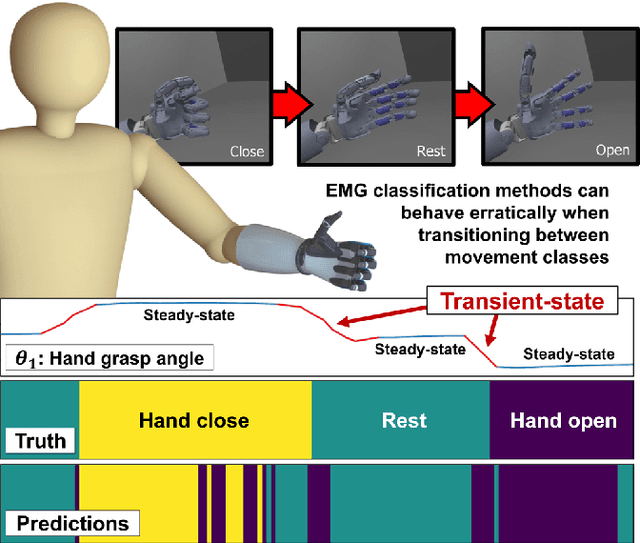

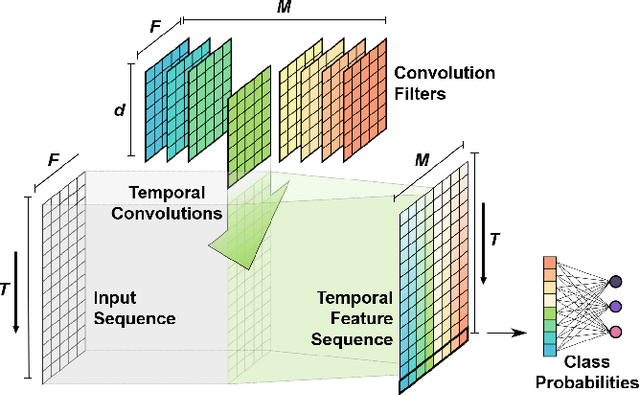

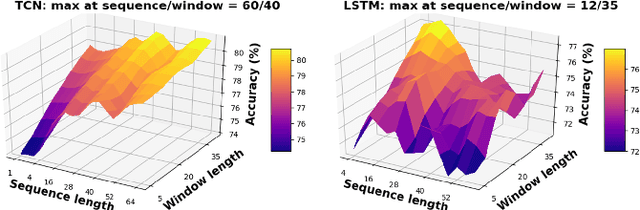

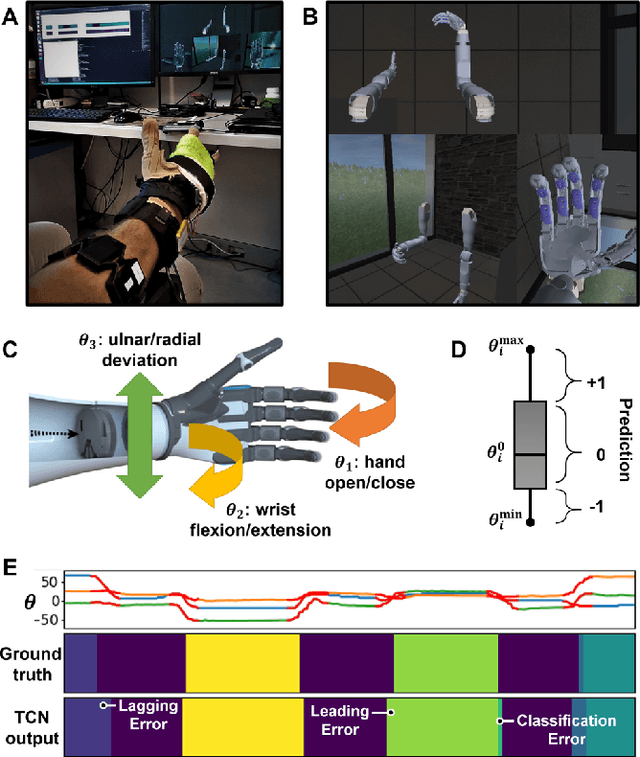

Stable Electromyographic Sequence Prediction During Movement Transitions using Temporal Convolutional Networks

Jan 08, 2019

Abstract:Transient muscle movements influence the temporal structure of myoelectric signal patterns, often leading to unstable prediction behavior from movement-pattern classification methods. We show that temporal convolutional network sequential models leverage the myoelectric signal's history to discover contextual temporal features that aid in correctly predicting movement intentions, especially during interclass transitions. We demonstrate myoelectric classification using temporal convolutional networks to effect 3 simultaneous hand and wrist degrees-of-freedom in an experiment involving nine human subjects. Temporal convolutional networks yield significant (p < 0.001) performance improvements over other state-of-the-art methods in terms of both classification accuracy and stability.
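How a temporal convolutional network draws on signal history can be illustrated with its usual building block, the causal dilated convolution. The sketch below is a generic single-channel version in NumPy; the abstract does not describe the network's kernel sizes, dilations, or layer count, so every value and name here is an assumption for illustration.

```python
import numpy as np

def causal_dilated_conv1d(x, kernel, dilation=1):
    """Compute y[t] = sum_k kernel[k] * x[t - k * dilation].

    Samples before the start of the signal are treated as zero, so each
    output depends only on the current and past inputs (causality).
    """
    x = np.asarray(x, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    pad = (len(kernel) - 1) * dilation
    padded = np.concatenate([np.zeros(pad), x])
    y = np.zeros_like(x)
    for k, w in enumerate(kernel):
        start = pad - k * dilation
        y += w * padded[start:start + len(x)]
    return y

def receptive_field(kernel_size, dilations):
    """Number of past-and-present samples seen by a stack of such layers."""
    return 1 + (kernel_size - 1) * sum(dilations)

# Hypothetical signal and a two-tap kernel with dilation 2.
signal = np.array([1.0, 2.0, 3.0, 4.0])
output = causal_dilated_conv1d(signal, kernel=[1.0, 1.0], dilation=2)
history = receptive_field(kernel_size=2, dilations=[1, 2, 4])
```

Stacking layers with increasing dilation widens the window of history each prediction can use without adding many parameters, which is the general reason this architecture suits sequences with transitions.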
