Anupam K. Gupta

Semi-Supervised Disentanglement of Tactile Contact Geometry from Sliding-Induced Shear

Aug 26, 2022

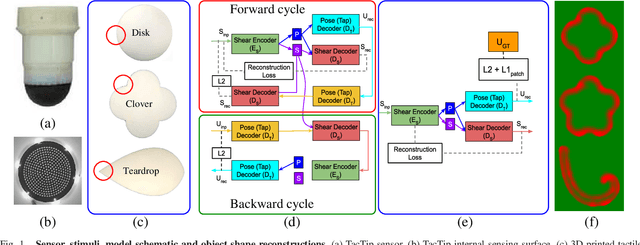

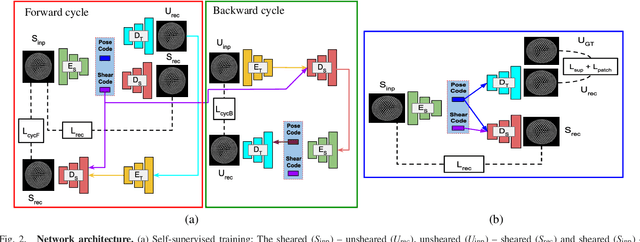

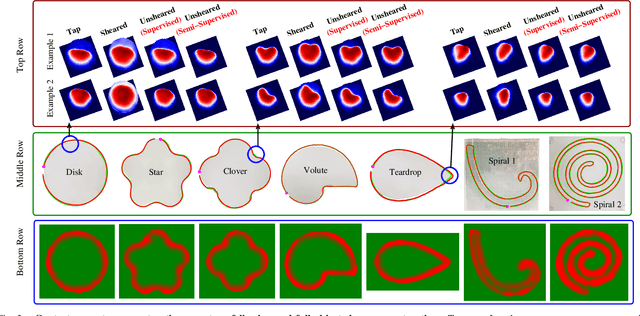

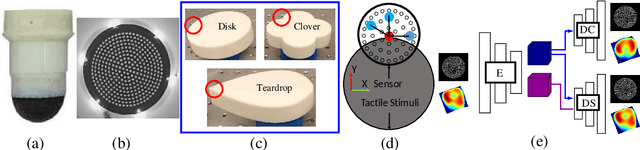

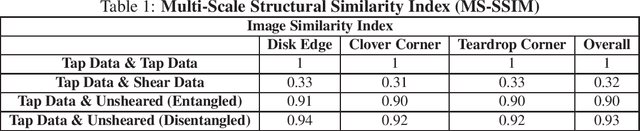

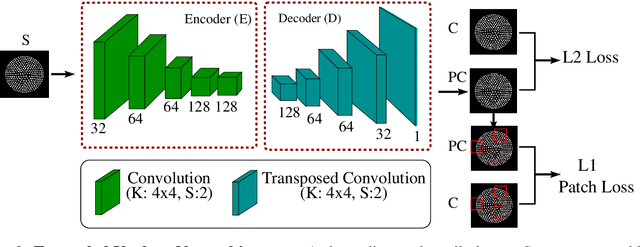

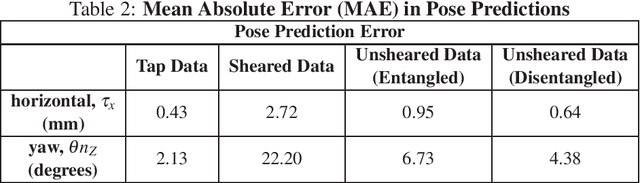

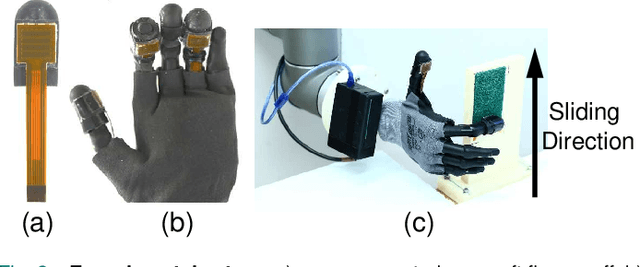

Abstract: The sense of touch is fundamental to human dexterity. When mimicked in robotic touch, particularly with soft optical tactile sensors, it suffers from distortion due to motion-dependent shear. This complicates tactile tasks such as shape reconstruction and exploration that require information about contact geometry. In this work, we pursue a semi-supervised approach to remove shear while preserving contact-only information. We validate our approach by showing that the model-generated unsheared images match their counterparts obtained by vertically tapping onto the object. The model-generated unsheared images give a faithful reconstruction of contact geometry otherwise masked by shear, along with robust estimation of object pose, which is then used for sliding exploration and full reconstruction of several planar shapes. We show that our semi-supervised approach achieves comparable performance to its fully supervised counterpart across all validation tasks with an order of magnitude less supervision. The semi-supervised method is thus more efficient in both computation and labeled samples. We expect it will have broad applicability to a wide range of complex tactile exploration and manipulation tasks performed via a shear-sensitive sense of touch.

Tactile Image-to-Image Disentanglement of Contact Geometry from Motion-Induced Shear

Sep 08, 2021

Abstract: Robotic touch, particularly when using soft optical tactile sensors, suffers from distortion caused by motion-dependent shear. The manner in which the sensor contacts a stimulus is entangled with the tactile information about the geometry of the stimulus. In this work, we propose a supervised convolutional deep neural network model that learns to disentangle, in the latent space, the components of sensor deformations caused by contact geometry from those due to sliding-induced shear. The approach is validated by reconstructing unsheared tactile images from sheared images and showing they match unsheared tactile images collected with no sliding motion. In addition, the unsheared tactile images give a faithful reconstruction of the contact geometry that is not possible from the sheared data, and robust estimation of the contact pose that can be used for servo control sliding around various 2D shapes. Finally, the contact geometry reconstruction in conjunction with servo control sliding was used for faithful full object reconstruction of various 2D shapes. The methods have broad applicability to deep learning models for robots with a shear-sensitive sense of touch.

Spatio-temporal encoding improves neuromorphic tactile texture classification

Oct 27, 2020

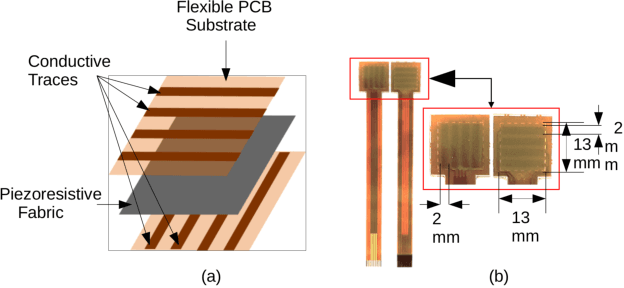

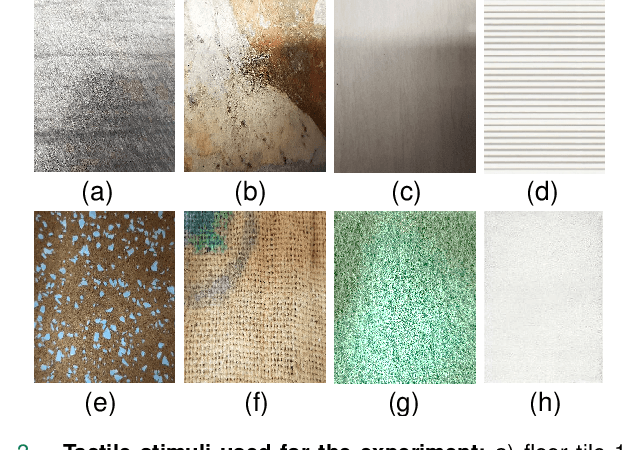

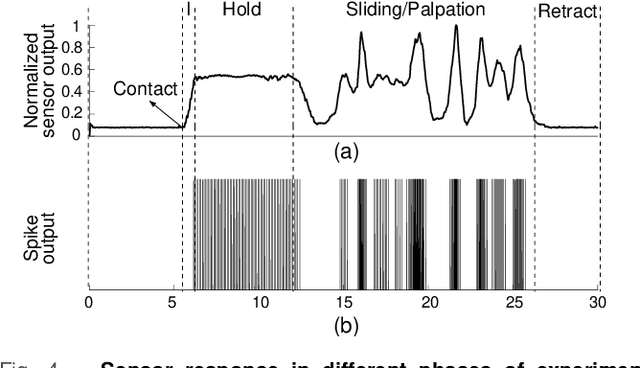

Abstract: With growing interest in deploying robots in unstructured environments to work alongside humans, the development of a human-like sense of touch for robots becomes important. In this work, we implement a multi-channel neuromorphic tactile system that encodes contact events as discrete spike events that mimic the behavior of slowly adapting mechanoreceptors. We study the impact of information pooling across artificial mechanoreceptors on classification performance of spatially non-uniform naturalistic textures. We encoded the spatio-temporal activation patterns of mechanoreceptors through a gray-level co-occurrence matrix computed from a time-varying, mean spiking rate-based tactile response volume. We found that this approach greatly improved texture classification in comparison to the use of individual mechanoreceptor responses alone. In addition, the performance was more robust to changes in sliding velocity. The importance of exploiting precise spatial and temporal correlations between sensory channels is evident from the fact that a significant performance drop was observed when either the precise temporal information was removed or the spatial structure of the response pattern was altered. This study thus demonstrates the superiority of population coding approaches that can exploit the precise spatio-temporal information encoded in activation patterns of mechanoreceptor populations. It therefore makes an advance toward the development of bio-inspired tactile systems required for realistic touch applications in robotics and prostheses.
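As a rough illustration of the gray-level co-occurrence idea mentioned in this abstract, the following is a minimal sketch and not the authors' implementation. It assumes a small 2D array of mean spike rates with rows as sensor channels and columns as time bins; the helper names `quantize`, `glcm`, and `contrast`, the number of gray levels, and the choice of offset are all hypothetical. The abstract does not specify how the response volume, offsets, or derived features were actually defined.

```python
def quantize(rates, levels):
    """Map non-negative mean spike rates to integer gray levels 0..levels-1."""
    peak = max(max(row) for row in rates)
    if peak == 0:
        return [[0 for _ in row] for row in rates]
    return [[min(int(r / peak * levels), levels - 1) for r in row]
            for row in rates]


def glcm(image, levels, offset=(0, 1)):
    """Count how often gray level i co-occurs with gray level j at `offset`.

    offset is (row_step, col_step): (0, 1) pairs each cell with its
    neighbor in the next time bin, (1, 0) with the next channel.
    """
    dr, dc = offset
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    return counts


def contrast(matrix):
    """Contrast feature: co-occurrence-weighted mean of (i - j)^2."""
    total = sum(sum(row) for row in matrix)
    if total == 0:
        return 0.0
    weighted = sum((i - j) ** 2 * v
                   for i, row in enumerate(matrix)
                   for j, v in enumerate(row))
    return weighted / total


# Toy input (made-up values): 3 channels x 4 time bins of mean spike rates.
rates = [[0, 0, 4, 4],
         [0, 0, 4, 4],
         [2, 2, 8, 8]]
image = quantize(rates, levels=4)
temporal = glcm(image, levels=4, offset=(0, 1))
spatial = glcm(image, levels=4, offset=(1, 0))
print(contrast(temporal), contrast(spatial))
```

Computing the matrix for both a temporal and a spatial offset is one simple way to capture correlations along each axis of the response; a classifier would then be trained on features derived from these matrices.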

Investigating Convolutional Neural Networks using Spatial Orderness

Aug 18, 2019

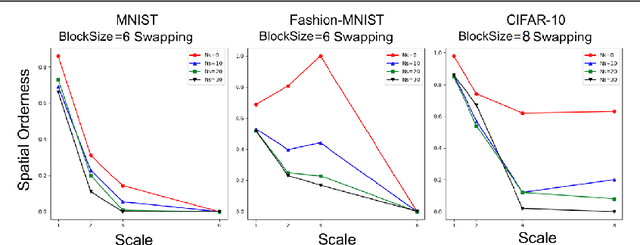

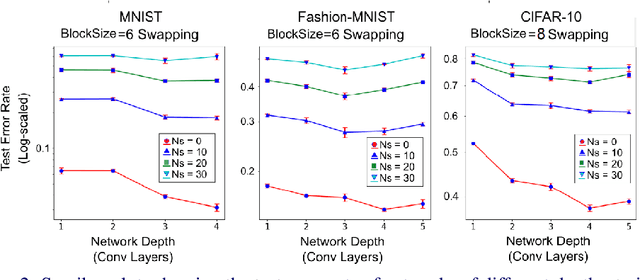

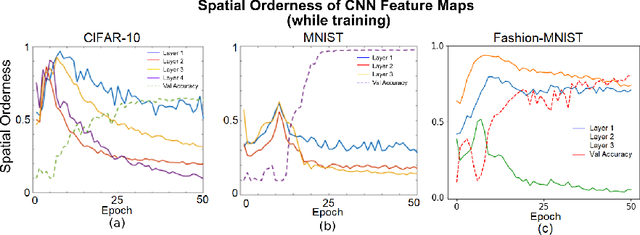

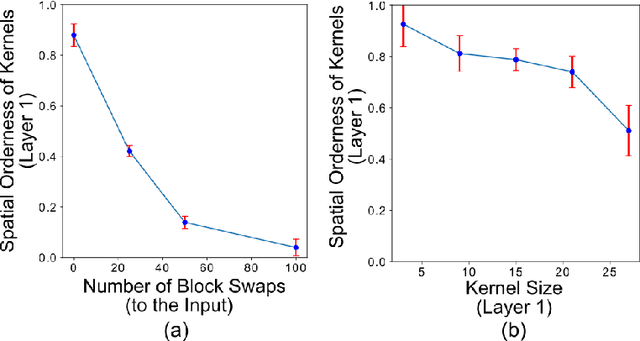

Abstract: Convolutional Neural Networks (CNN) have been pivotal to the success of many state-of-the-art classification problems, in a wide variety of domains (e.g. vision, speech, graphs and medical imaging). A commonality within those domains is the presence of hierarchical, spatially agglomerative local-to-global interactions within the data. For two-dimensional images, such interactions may induce an a priori relationship between the pixel data and the underlying spatial ordering of the pixels. For instance, in natural images, neighboring pixels are more likely to contain similar values than non-neighboring pixels which are further apart. To that end, we propose a statistical metric called spatial orderness, which quantifies the extent to which the input data (2D) obeys the underlying spatial ordering at various scales. In our experiments, we mainly find that adding convolutional layers to a CNN could be counterproductive for data bereft of spatial order at higher scales. We also observe, quite counter-intuitively, that the spatial orderness of CNN feature maps shows a synchronized increase during the initial stages of training, and validation performance only improves after the spatial orderness of feature maps starts decreasing. Lastly, we present a theoretical analysis (and empirical validation) of the spatial orderness of network weights, where we find that using smaller kernel sizes leads to kernels of greater spatial orderness and vice versa.

Scale Steerable Filters for Locally Scale-Invariant Convolutional Neural Networks

Jun 10, 2019

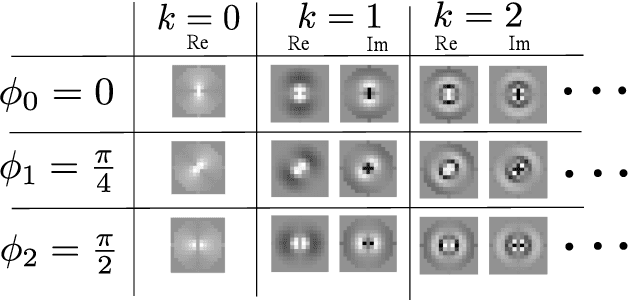

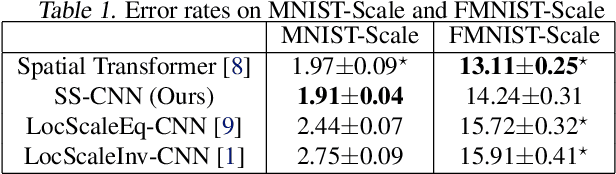

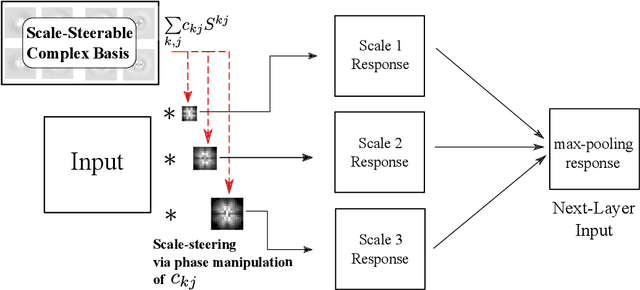

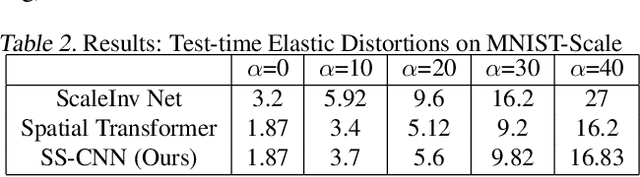

Abstract: Augmenting transformation knowledge onto a convolutional neural network's weights has often yielded significant improvements in performance. For rotational transformation augmentation, an important element of recent approaches has been the use of a steerable basis, i.e. the circular harmonics. Here, we propose a scale-steerable filter basis for the locally scale-invariant CNN, denoted as log-radial harmonics. By replacing the kernels in the locally scale-invariant CNN \cite{lsi_cnn} with scale-steered kernels, significant improvements in performance can be observed on the MNIST-Scale and FMNIST-Scale datasets. Training with a scale-steerable basis results in filters which show meaningful structure, and feature maps which demonstrate visibly higher spatial-structure preservation of the input. Furthermore, the proposed scale-steerable CNN shows on-par generalization to global affine transformation estimation methods such as Spatial Transformers, in response to test-time data distortions.
