Nirav Ajmeri

University of Bristol

Language Models Do Not Embed Numbers Continuously

Oct 09, 2025

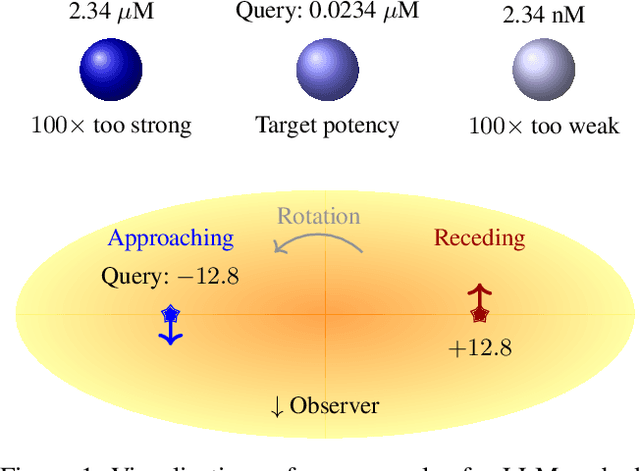

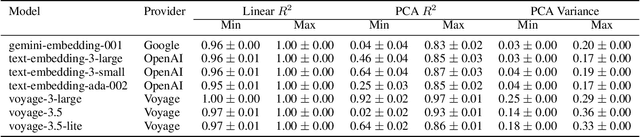

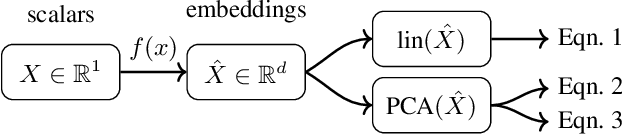

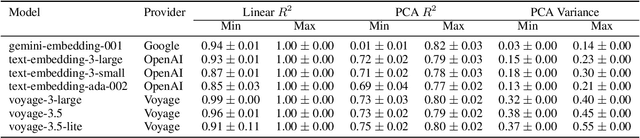

Abstract: Recent research has extensively studied how large language models manipulate integers in specific arithmetic tasks and, on a more fundamental level, how they represent numeric values. These previous works have found that language model embeddings can be used to reconstruct the original values; however, they do not evaluate whether language models actually model continuous values as continuous. Using expected properties of the embedding space, including linear reconstruction and principal component analysis, we show that language models not only represent numeric spaces as non-continuous but also introduce significant noise. Using models from three major providers (OpenAI, Google Gemini and Voyage AI), we show that while reconstruction is possible with high fidelity ($R^2 \geq 0.95$), principal components only explain a minor share of variation within the embedding space. This indicates that many components within the embedding space are orthogonal to the simple numeric input space. Further, both linear reconstruction and explained variance suffer with increasing decimal precision, despite the ordinal nature of the input space being fundamentally unchanged. The findings of this work therefore have implications for the many areas where embedding models are used, in particular where high numerical precision, large magnitudes or mixed-sign values are common.

Operationalising Rawlsian Ethics for Fairness in Norm-Learning Agents

Dec 19, 2024

Abstract: Social norms are standards of behaviour common in a society. However, when agents make decisions without considering how others are impacted, norms can emerge that lead to the subjugation of certain agents. We present RAWL-E, a method to create ethical norm-learning agents. RAWL-E agents operationalise maximin, a fairness principle from Rawlsian ethics, in their decision-making processes to promote ethical norms by balancing societal well-being with individual goals. We evaluate RAWL-E agents in simulated harvesting scenarios. We find that norms emerging in RAWL-E agent societies enhance social welfare, fairness, and robustness, and yield higher minimum experience compared to those that emerge in agent societies that do not implement Rawlsian ethics.

Artificial Intelligence for Collective Intelligence: A National-Scale Research Strategy

Nov 09, 2024

Abstract: Advances in artificial intelligence (AI) have great potential to help address societal challenges that are both collective in nature and present at national or trans-national scale. Pressing challenges in healthcare, finance, infrastructure and sustainability, for instance, might all be productively addressed by leveraging and amplifying AI for national-scale collective intelligence. The development and deployment of this kind of AI faces distinctive challenges, both technical and socio-technical. Here, a research strategy for mobilising inter-disciplinary research to address these challenges is detailed and some of the key issues that must be faced are outlined.

Value-Based Rationales Improve Social Experience: A Multiagent Simulation Study

Aug 04, 2024

Abstract: We propose Exanna, a framework to realize agents that incorporate values in decision making. An Exanna agent considers the values of itself and others when providing rationales for its actions and evaluating the rationales provided by others. Via multiagent simulation, we demonstrate that considering values in decision making and producing rationales, especially for norm-deviating actions, leads to (1) higher conflict resolution, (2) better social experience, (3) higher privacy, and (4) higher flexibility.

Norm Enforcement with a Soft Touch: Faster Emergence, Happier Agents

Jan 29, 2024

Abstract: A multiagent system can be viewed as a society of autonomous agents, whose interactions can be effectively regulated via social norms. In general, the norms of a society are not hardcoded but emerge from the agents' interactions. Specifically, how the agents in a society react to each other's behavior and respond to the reactions of others determines which norms emerge in the society. We think of these reactions by an agent to the satisfactory or unsatisfactory behaviors of another agent as communications from the first agent to the second agent. Understanding these communications is a kind of social intelligence: these communications provide natural drivers for norm emergence by pushing agents toward certain behaviors, which can become established as norms. Whereas it is well known that sanctioning can lead to the emergence of norms, we posit that a broader kind of social intelligence can prove more effective in promoting cooperation in a multiagent system. Accordingly, we develop Nest, a framework that models social intelligence in the form of a wider variety of communications and understanding of them than in previous work. To evaluate Nest, we develop a simulated pandemic environment and conduct simulation experiments to compare Nest with baselines considering a combination of three kinds of social communication: sanction, tell, and hint. We find that societies formed of Nest agents achieve norms faster; moreover, Nest agents effectively avoid undesirable consequences, which are negative sanctions and deviation from goals, and yield higher satisfaction for themselves than baseline agents despite requiring only an equivalent amount of information.

Social Value Orientation and Integral Emotions in Multi-Agent Systems

May 09, 2023

Abstract: Human social behavior is influenced by individual differences in social preferences. Social value orientation (SVO) is a measurable personality trait which indicates the relative importance an individual places on their own and on others' welfare when making decisions. SVO and other individual difference variables are strong predictors of human behavior and social outcomes. However, there are transient changes in human behavior associated with emotions that are not captured by individual differences alone. Integral emotions, the emotions which arise in direct response to a decision-making scenario, have been linked to temporary shifts in decision-making preferences. In this work, we investigated the effects of moderating social preferences with integral emotions in multi-agent societies. We developed Svoie, a method for designing agents which make decisions based on established SVO policies, as well as alternative integral emotion policies in response to task outcomes. We conducted simulation experiments in a resource-sharing task environment, and compared societies of Svoie agents with societies of agents with fixed SVO policies. We find that societies of agents which adapt their behavior through integral emotions achieved similar collective welfare to societies of agents with fixed SVO policies, but with significantly reduced inequality between the welfare of agents with different SVO traits. We observed that by allowing agents to change their policy in response to task outcomes, agents can moderate their behavior to achieve greater social equality.

Realistic Synthetic Social Networks with Graph Neural Networks

Dec 15, 2022

Abstract: Social network analysis faces profound difficulties in sharing data between researchers due to privacy and security concerns. A potential remedy to this issue is synthetic networks that closely resemble their real counterparts but can be freely distributed. Generating synthetic networks requires the creation of network topologies that, in application, function as realistically as possible. Widely applied models are currently rule-based and can struggle to reproduce structural dynamics. Led by recent developments in Graph Neural Network (GNN) models for network generation, we evaluate the potential of GNNs for synthetic social networks. We apply GNNs within a reasonable use case and include empirical evaluation using Maximum Mean Discrepancy (MMD). We include social-network-specific measurements which allow evaluation of how realistically synthetic networks behave in typical social network analysis applications. We find that the Gated Recurrent Attention Network (GRAN) extends well to social networks and, in comparison to a popular rule-based benchmark, the Recursive-MATrix (R-MAT) generation method, is better able to replicate realistic structural dynamics. We find that GRAN is more computationally costly than R-MAT, but is not excessively costly to employ, so would be effective for researchers seeking to create datasets of synthetic social networks.

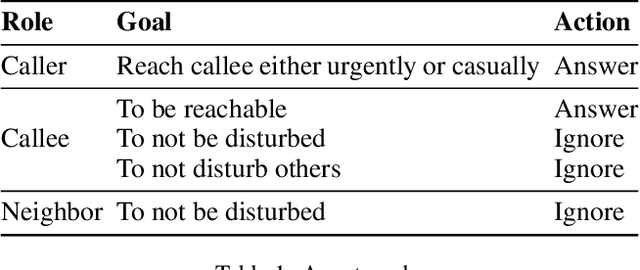

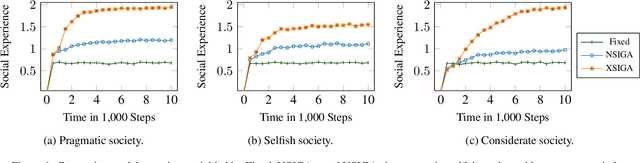

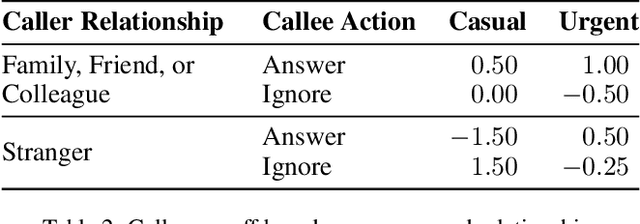

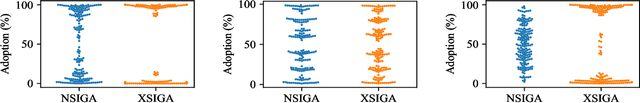

Socially Intelligent Genetic Agents for the Emergence of Explicit Norms

Aug 07, 2022

Abstract: Norms help regulate a society. Norms may be explicit (represented in structured form) or implicit. We address the emergence of explicit norms by developing agents who provide and reason about explanations for norm violations in deciding sanctions and identifying alternative norms. These agents use a genetic algorithm to produce norms and reinforcement learning to learn the values of these norms. We find that applying explanations leads to norms that provide better cohesion and goal satisfaction for the agents. Our results are stable for societies with differing attitudes of generosity.

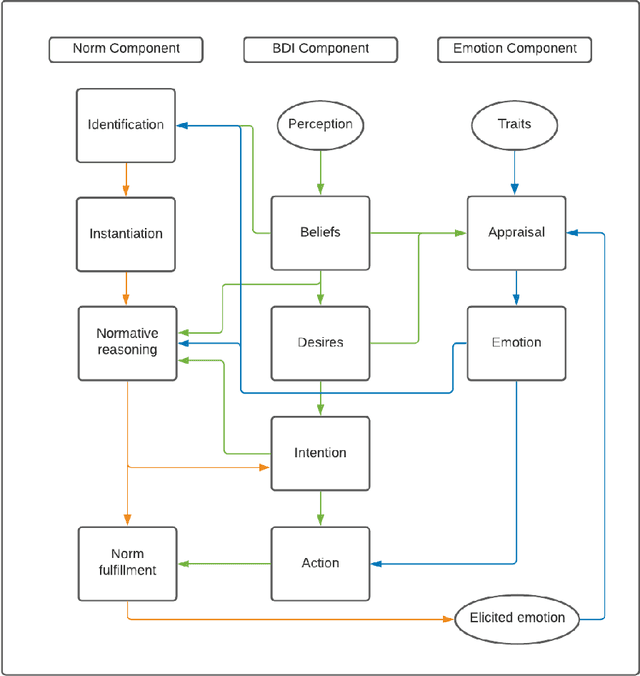

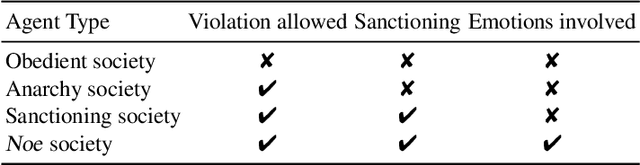

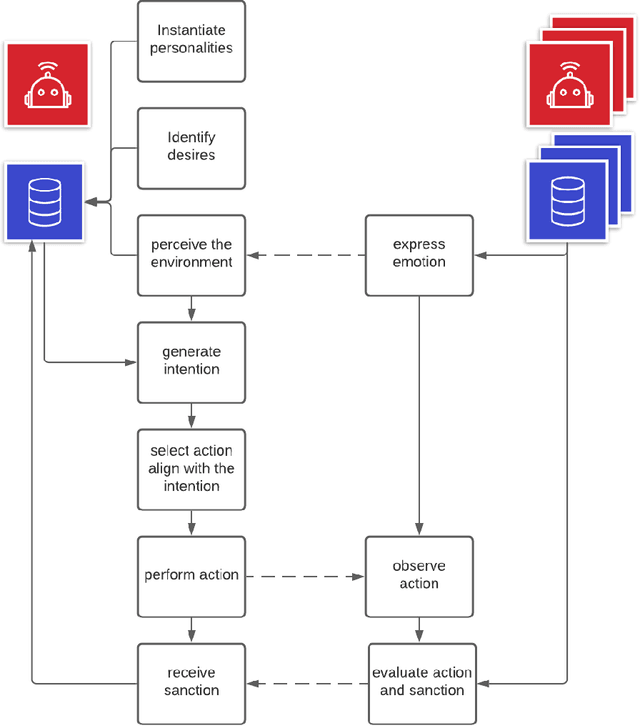

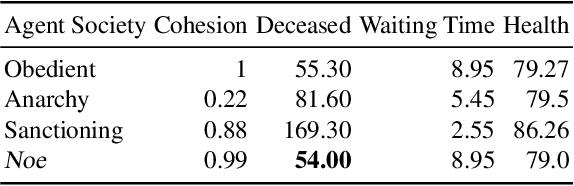

Noe: Norms Emergence and Robustness Based on Emotions in Multiagent Systems

Apr 30, 2021

Abstract: Social norms characterize collective and acceptable group conduct in human society. Furthermore, some social norms emerge from interactions of agents or humans. To achieve agent autonomy and make norm satisfaction explainable, we incorporate emotions into the normative reasoning process, which evaluates whether to comply with or violate a norm. Specifically, before selecting an action to execute, an agent observes the environment and infers the state and consequences, along with its internal states, after satisfaction or violation of a social norm. Both norm satisfaction and violation provoke further emotions, and the subsequent emotions affect norm enforcement. This paper investigates how modeling emotions affects the emergence and robustness of social norms via social simulation experiments. We find that an ability in agents to consider emotional responses to the outcomes of norm satisfaction and violation (1) promotes norm compliance; and (2) improves societal welfare.

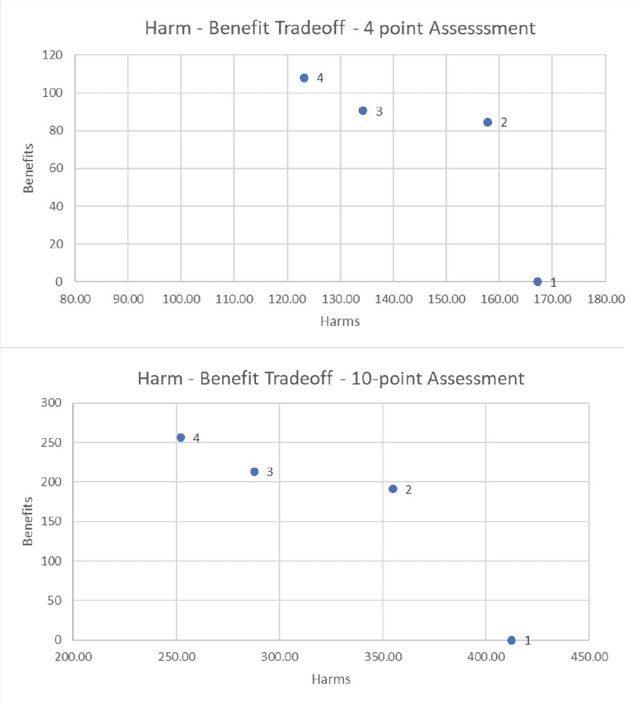

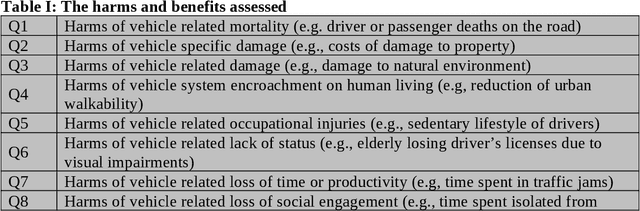

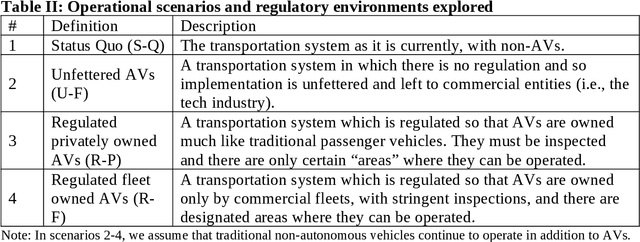

Toward a Rational and Ethical Sociotechnical System of Autonomous Vehicles: A Novel Application of Multi-Criteria Decision Analysis

Feb 04, 2021

Abstract: The expansion of artificial intelligence (AI) and autonomous systems has shown the potential to generate enormous social good while also raising serious ethical and safety concerns. AI technology is increasingly adopted in transportation. A survey of various in-vehicle technologies found that approximately 64% of the respondents used a smartphone application to assist with their travel. The top-used applications were navigation and real-time traffic information systems. Among those who used smartphones during their commutes, the top-used applications were navigation and entertainment. There is a pressing need to address relevant social concerns to allow for the development of systems of intelligent agents that are informed and cognizant of ethical standards. Doing so will facilitate the responsible integration of these systems in society. To this end, we have applied Multi-Criteria Decision Analysis (MCDA) to develop a formal Multi-Attribute Impact Assessment (MAIA) questionnaire for examining the social and ethical issues associated with the uptake of AI. We have focused on the domain of autonomous vehicles (AVs) because of their imminent expansion. However, AVs could serve as a stand-in for any domain where intelligent, autonomous agents interact with humans, either on an individual level (e.g., pedestrians, passengers) or a societal level.
