Nikita Kazeev

for the LHCb Simulation Project

MiAD: Mirage Atom Diffusion for De Novo Crystal Generation

Nov 18, 2025

Abstract:In recent years, diffusion-based models have demonstrated exceptional performance in searching for simultaneously stable, unique, and novel (S.U.N.) crystalline materials. However, most of these models don't have the ability to change the number of atoms in the crystal during the generation process, which limits the variability of model sampling trajectories. In this paper, we demonstrate the severity of this restriction and introduce a simple yet powerful technique, mirage infusion, which enables diffusion models to change the state of the atoms that make up the crystal from existent to non-existent (mirage) and vice versa. We show that this technique improves model quality by up to $\times2.5$ compared to the same model without this modification. The resulting model, Mirage Atom Diffusion (MiAD), is an equivariant joint diffusion model for de novo crystal generation that is capable of altering the number of atoms during the generation process. MiAD achieves an $8.2\%$ S.U.N. rate on the MP-20 dataset, which substantially exceeds existing state-of-the-art approaches. The source code can be found at \href{https://github.com/andrey-okhotin/miad.git}{\texttt{github.com/andrey-okhotin/miad}}.

Wyckoff Transformer: Generation of Symmetric Crystals

Mar 04, 2025Abstract:Symmetry rules that atoms obey when they bond together to form an ordered crystal play a fundamental role in determining their physical, chemical, and electronic properties such as electrical and thermal conductivity, optical and polarization behavior, and mechanical strength. Almost all known crystalline materials have internal symmetry. Consistently generating stable crystal structures is still an open challenge, specifically because such symmetry rules are not accounted for. To address this issue, we propose WyFormer, a generative model for materials conditioned on space group symmetry. We use Wyckoff positions as the basis for an elegant, compressed, and discrete structure representation. To model the distribution, we develop a permutation-invariant autoregressive model based on the Transformer and an absence of positional encoding. WyFormer has a unique and powerful synergy of attributes, proven by extensive experimentation: best-in-class symmetry-conditioned generation, physics-motivated inductive bias, competitive stability of the generated structures, competitive material property prediction quality, and unparalleled inference speed.

The LHCb ultra-fast simulation option, Lamarr: design and validation

Sep 22, 2023Abstract:Detailed detector simulation is the major consumer of CPU resources at LHCb, having used more than 90% of the total computing budget during Run 2 of the Large Hadron Collider at CERN. As data is collected by the upgraded LHCb detector during Run 3 of the LHC, larger requests for simulated data samples are necessary, and will far exceed the pledged resources of the experiment, even with existing fast simulation options. An evolution of technologies and techniques to produce simulated samples is mandatory to meet the upcoming needs of analysis to interpret signal versus background and measure efficiencies. In this context, we propose Lamarr, a Gaudi-based framework designed to offer the fastest solution for the simulation of the LHCb detector. Lamarr consists of a pipeline of modules parameterizing both the detector response and the reconstruction algorithms of the LHCb experiment. Most of the parameterizations are made of Deep Generative Models and Gradient Boosted Decision Trees trained on simulated samples or alternatively, where possible, on real data. Embedding Lamarr in the general LHCb Gauss Simulation framework allows combining its execution with any of the available generators in a seamless way. Lamarr has been validated by comparing key reconstructed quantities with Detailed Simulation. Good agreement of the simulated distributions is obtained with two-order-of-magnitude speed-up of the simulation phase.

Generative models uncertainty estimation

Oct 18, 2022

Abstract:In recent years fully-parametric fast simulation methods based on generative models have been proposed for a variety of high-energy physics detectors. By their nature, the quality of data-driven models degrades in the regions of the phase space where the data are sparse. Since machine-learning models are hard to analyse from the physical principles, the commonly used testing procedures are performed in a data-driven way and can't be reliably used in such regions. In our work we propose three methods to estimate the uncertainty of generative models inside and outside of the training phase space region, along with data-driven calibration techniques. A test of the proposed methods on the LHCb RICH fast simulation is also presented.

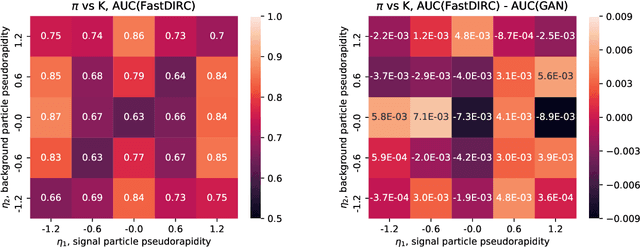

Towards Reliable Neural Generative Modeling of Detectors

Apr 21, 2022

Abstract:The increasing luminosities of future data taking at Large Hadron Collider and next generation collider experiments require an unprecedented amount of simulated events to be produced. Such large scale productions demand a significant amount of valuable computing resources. This brings a demand to use new approaches to event generation and simulation of detector responses. In this paper, we discuss the application of generative adversarial networks (GANs) to the simulation of the LHCb experiment events. We emphasize main pitfalls in the application of GANs and study the systematic effects in detail. The presented results are based on the Geant4 simulation of the LHCb Cherenkov detector.

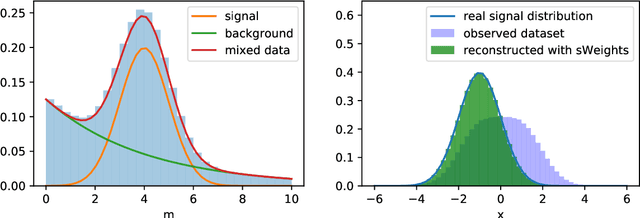

Machine Learning on data with sPlot background subtraction

Jun 14, 2019

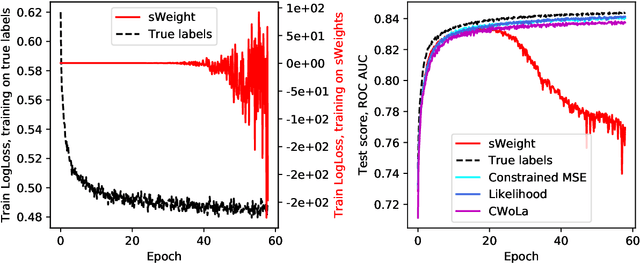

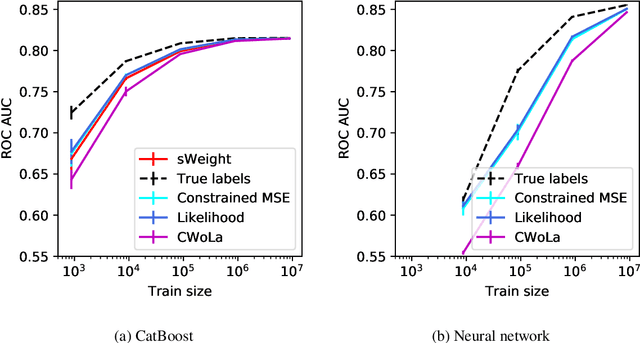

Abstract:Data analysis in high energy physics often deals with data samples consisting of a mixture of signal and background events. The sPlot technique is a common method to subtract the contribution of the background by assigning weights to events. Part of the weights are by design negative. Negative weights lead to the divergence of some machine learning algorithms training due to absence of the lower bound in the loss function. In this paper we propose a mathematically rigorous way to train machine learning algorithms on data samples with background described by sPlot to obtain signal probabilities conditioned on observables, without encountering negative event weight at all. This allows usage of any out-of-the-box machine learning methods on such data.

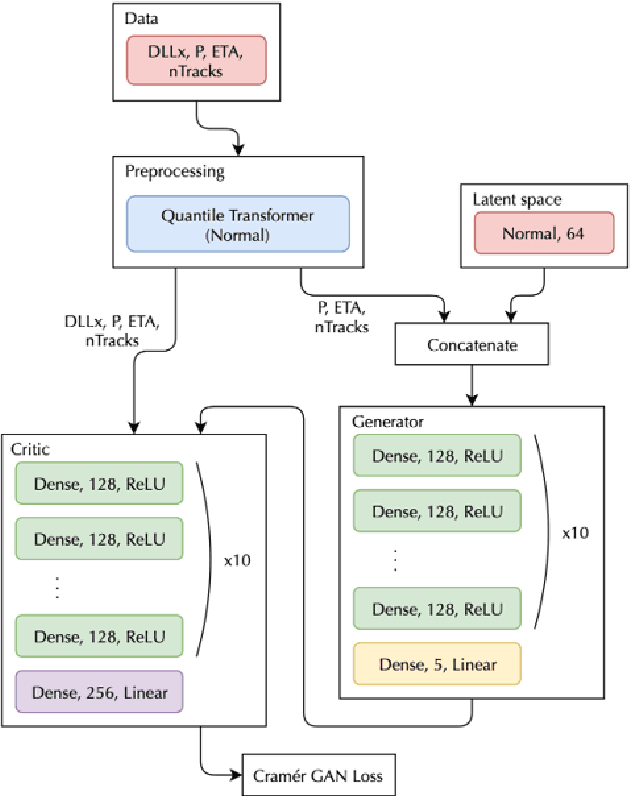

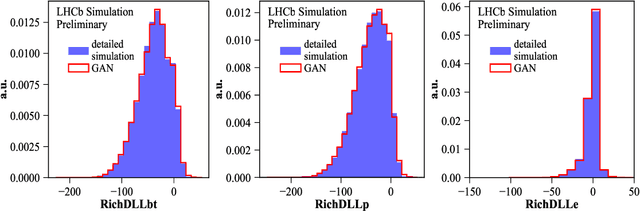

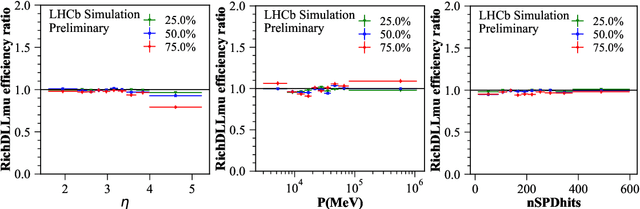

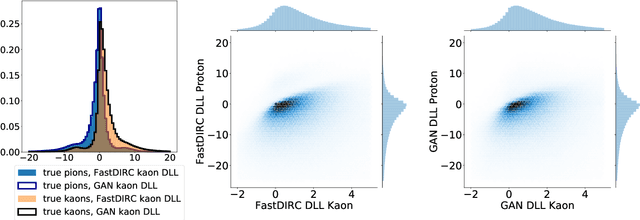

Fast Data-Driven Simulation of Cherenkov Detectors Using Generative Adversarial Networks

May 28, 2019

Abstract:The increasing luminosities of future Large Hadron Collider runs and next generation of collider experiments will require an unprecedented amount of simulated events to be produced. Such large scale productions are extremely demanding in terms of computing resources. Thus new approaches to event generation and simulation of detector responses are needed. In LHCb, the accurate simulation of Cherenkov detectors takes a sizeable fraction of CPU time. An alternative approach is described here, when one generates high-level reconstructed observables using a generative neural network to bypass low level details. This network is trained to reproduce the particle species likelihood function values based on the track kinematic parameters and detector occupancy. The fast simulation is trained using real data samples collected by LHCb during run 2. We demonstrate that this approach provides high-fidelity results.

Cherenkov Detectors Fast Simulation Using Neural Networks

Mar 28, 2019

Abstract:We propose a way to simulate Cherenkov detector response using a generative adversarial neural network to bypass low-level details. This network is trained to reproduce high level features of the simulated detector events based on input observables of incident particles. This allows the dramatic increase of simulation speed. We demonstrate that this approach provides simulation precision which is consistent with the baseline and discuss possible implications of these results.

Space Navigator: a Tool for the Optimization of Collision Avoidance Maneuvers

Feb 06, 2019

Abstract:The number of space objects will grow several times in a few years due to the planned launches of constellations of thousands microsatellites. It leads to a significant increase in the threat of satellite collisions. Spacecraft must undertake collision avoidance maneuvers to mitigate the risk. According to publicly available information, conjunction events are now manually handled by operators on the Earth. The manual maneuver planning requires qualified personnel and will be impractical for constellations of thousands satellites. In this paper we propose a new modular autonomous collision avoidance system called "Space Navigator". It is based on a novel maneuver optimization approach that combines domain knowledge with Reinforcement Learning methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge