Lucio Anderlini

for the LHCb Simulation Project

The AI_INFN Platform: Artificial Intelligence Development in the Cloud

Sep 26, 2025

Abstract: Machine Learning (ML) is driving a revolution in the way scientists design, develop, and deploy data-intensive software. However, the adoption of ML presents new challenges for the computing infrastructure, particularly in terms of provisioning and orchestrating access to hardware accelerators for development, testing, and production. The INFN-funded project AI_INFN (Artificial Intelligence at INFN) aims at fostering the adoption of ML techniques within INFN use cases by providing support on multiple aspects, including the provisioning of AI-tailored computing resources. It leverages cloud-native solutions in the context of INFN Cloud, to share hardware accelerators as effectively as possible, ensuring the diversity of the Institute's research activities is not compromised. In this contribution, we provide an update on the commissioning of a Kubernetes platform designed to ease the development of GPU-powered data analysis workflows and their scalability on heterogeneous distributed computing resources, also using the offloading mechanism with Virtual Kubelet and InterLink API. This setup can manage workflows across different resource providers, including sites of the Worldwide LHC Computing Grid and supercomputers such as CINECA Leonardo, providing a model for use cases requiring dedicated infrastructures for different parts of the workload. Initial test results, emerging case studies, and integration scenarios will be presented with functional tests and benchmarks.

Supporting the development of Machine Learning for fundamental science in a federated Cloud with the AI_INFN platform

Feb 28, 2025

Abstract: Machine Learning (ML) is driving a revolution in the way scientists design, develop, and deploy data-intensive software. However, the adoption of ML presents new challenges for the computing infrastructure, particularly in terms of provisioning and orchestrating access to hardware accelerators for development, testing, and production. The INFN-funded project AI_INFN ("Artificial Intelligence at INFN") aims at fostering the adoption of ML techniques within INFN use cases by providing support on multiple aspects, including the provision of AI-tailored computing resources. It leverages cloud-native solutions in the context of INFN Cloud, to share hardware accelerators as effectively as possible, ensuring the diversity of the Institute's research activities is not compromised. In this contribution, we provide an update on the commissioning of a Kubernetes platform designed to ease the development of GPU-powered data analysis workflows and their scalability on heterogeneous, distributed computing resources, possibly federated as Virtual Kubelets with the interLink provider.

The LHCb ultra-fast simulation option, Lamarr: design and validation

Sep 22, 2023

Abstract: Detailed detector simulation is the major consumer of CPU resources at LHCb, having used more than 90% of the total computing budget during Run 2 of the Large Hadron Collider at CERN. As data is collected by the upgraded LHCb detector during Run 3 of the LHC, larger requests for simulated data samples are necessary, and will far exceed the pledged resources of the experiment, even with existing fast simulation options. An evolution of technologies and techniques to produce simulated samples is mandatory to meet the upcoming needs of analysis to interpret signal versus background and measure efficiencies. In this context, we propose Lamarr, a Gaudi-based framework designed to offer the fastest solution for the simulation of the LHCb detector. Lamarr consists of a pipeline of modules parameterizing both the detector response and the reconstruction algorithms of the LHCb experiment. Most of the parameterizations are made of Deep Generative Models and Gradient Boosted Decision Trees trained on simulated samples or alternatively, where possible, on real data. Embedding Lamarr in the general LHCb Gauss Simulation framework allows combining its execution with any of the available generators in a seamless way. Lamarr has been validated by comparing key reconstructed quantities with Detailed Simulation. Good agreement of the simulated distributions is obtained with a two-order-of-magnitude speed-up of the simulation phase.
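The idea of a pipeline of parameterization modules can be illustrated with a toy sketch. This is not Lamarr code: the function names, the particle dictionary layout, and the numeric values (a flat 90% efficiency and a 0.5% momentum resolution) are hypothetical placeholders standing in for the trained Deep Generative Models and Gradient Boosted Decision Trees mentioned in the abstract.

```python
import random


def acceptance(particles, rng, efficiency=0.9):
    """Keep each particle with a fixed probability (placeholder for a trained efficiency model)."""
    return [p for p in particles if rng.random() < efficiency]


def smear_momentum(particles, rng, rel_resolution=0.005):
    """Attach a 'reconstructed' momentum by Gaussian smearing (placeholder for a generative model)."""
    return [
        {**p, "p_reco": p["p_true"] * rng.gauss(1.0, rel_resolution)}
        for p in particles
    ]


def run_pipeline(particles, modules, rng):
    """Apply each parameterization module in order to the list of particles."""
    for module in modules:
        particles = module(particles, rng)
    return particles


rng = random.Random(42)
# Hypothetical generator-level particles with a true momentum in GeV/c.
generated = [{"p_true": rng.uniform(2.0, 100.0)} for _ in range(1000)]
reconstructed = run_pipeline(generated, [acceptance, smear_momentum], rng)
```

Each module maps generator-level quantities directly to analysis-level quantities, which is why such a chain can skip the detailed transport of particles through the detector.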

Hyperparameter Optimization as a Service on INFN Cloud

Jan 13, 2023

Abstract: The simplest and often most effective way of parallelizing the training of complex machine learning models is to execute several training instances on multiple machines, possibly scanning the hyperparameter space to optimize the underlying statistical model and the learning procedure. Often, such a meta-learning procedure is limited by the ability to securely access a common database organizing the knowledge of the previous and ongoing trials. Exploiting opportunistic GPUs provided in different environments represents a further challenge when designing such optimization campaigns. In this contribution we discuss how a set of REST APIs can be used to access a dedicated service based on INFN Cloud to monitor and possibly coordinate multiple training instances, with gradient-less optimization techniques, via simple HTTP requests. The service, named Hopaas (Hyperparameter OPtimization As A Service), is made of a web interface and sets of APIs implemented with a FastAPI back-end running through Uvicorn and NGINX in a virtual instance of INFN Cloud. The optimization algorithms are currently based on Bayesian techniques as provided by Optuna. A Python front-end is also made available for quick prototyping. We present applications to hyperparameter optimization campaigns performed combining private, INFN Cloud and CINECA resources.
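The coordination pattern described here, where training instances request a set of hyperparameters and later report a score to a shared store, can be sketched as an ask-and-tell loop. The sketch below is not the Hopaas API: the `Study` class, its method names, and the toy loss are hypothetical. It runs in memory instead of over HTTP, and it uses plain random search as a simple gradient-less stand-in for the Bayesian samplers provided by Optuna.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Study:
    """Hypothetical in-memory stand-in for the shared trial database."""
    space: dict  # hyperparameter name -> (low, high) bounds
    seed: int = 0
    trials: list = field(default_factory=list)

    def __post_init__(self):
        self._rng = random.Random(self.seed)

    def ask(self):
        """Register a new trial and return its id and suggested hyperparameters."""
        params = {
            name: self._rng.uniform(low, high)
            for name, (low, high) in self.space.items()
        }
        self.trials.append({"params": params, "loss": None})
        return len(self.trials) - 1, params

    def tell(self, trial_id, loss):
        """Record the loss reported by a training instance for a given trial."""
        self.trials[trial_id]["loss"] = loss

    def best(self):
        """Return the completed trial with the lowest loss."""
        done = [t for t in self.trials if t["loss"] is not None]
        return min(done, key=lambda t: t["loss"])


def toy_loss(params):
    """Toy objective with a minimum at lr=0.1, dropout=0.3 (illustration only)."""
    return (params["lr"] - 0.1) ** 2 + (params["dropout"] - 0.3) ** 2


study = Study(space={"lr": (0.0, 1.0), "dropout": (0.0, 0.5)})
for _ in range(50):
    trial_id, params = study.ask()          # a worker asks for hyperparameters
    study.tell(trial_id, toy_loss(params))  # and reports the resulting loss
best = study.best()
```

In a service-based setup, `ask` and `tell` would each correspond to an HTTP request, so that workers running on different resource providers only need outbound network access to take part in the same campaign.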

Generative models uncertainty estimation

Oct 18, 2022

Abstract: In recent years fully-parametric fast simulation methods based on generative models have been proposed for a variety of high-energy physics detectors. By their nature, the quality of data-driven models degrades in the regions of the phase space where the data are sparse. Since machine-learning models are hard to analyse from the physical principles, the commonly used testing procedures are performed in a data-driven way and can't be reliably used in such regions. In our work we propose three methods to estimate the uncertainty of generative models inside and outside of the training phase space region, along with data-driven calibration techniques. A test of the proposed methods on the LHCb RICH fast simulation is also presented.

Towards Reliable Neural Generative Modeling of Detectors

Apr 21, 2022

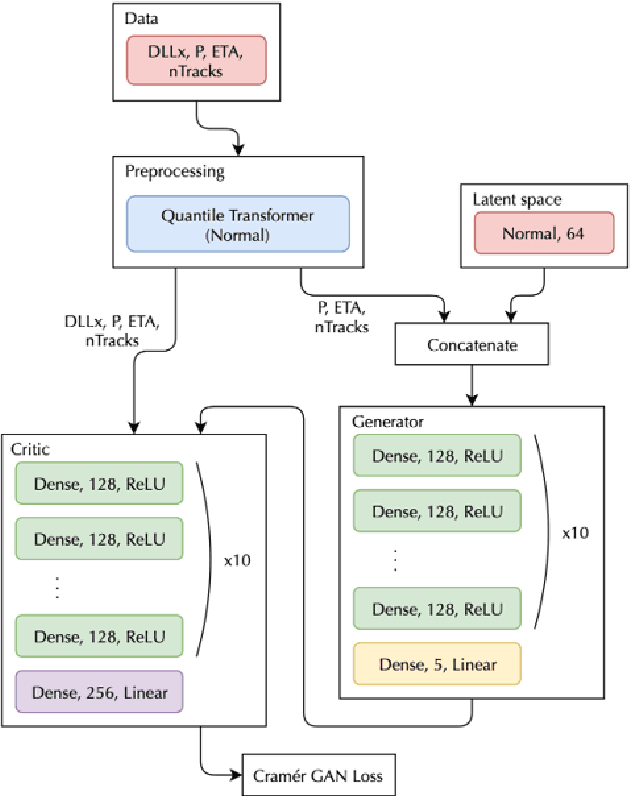

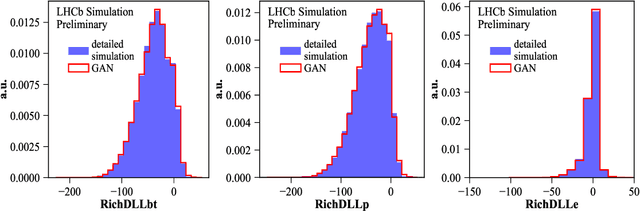

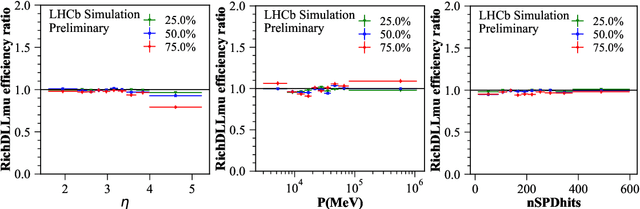

Abstract: The increasing luminosities of future data taking at the Large Hadron Collider and next-generation collider experiments require an unprecedented amount of simulated events to be produced. Such large-scale productions demand a significant amount of valuable computing resources. This calls for new approaches to event generation and simulation of detector responses. In this paper, we discuss the application of generative adversarial networks (GANs) to the simulation of the LHCb experiment events. We emphasize the main pitfalls in the application of GANs and study the systematic effects in detail. The presented results are based on the Geant4 simulation of the LHCb Cherenkov detector.

Fast Data-Driven Simulation of Cherenkov Detectors Using Generative Adversarial Networks

May 28, 2019

Abstract: The increasing luminosities of future Large Hadron Collider runs and the next generation of collider experiments will require an unprecedented amount of simulated events to be produced. Such large-scale productions are extremely demanding in terms of computing resources. Thus new approaches to event generation and simulation of detector responses are needed. In LHCb, the accurate simulation of Cherenkov detectors takes a sizeable fraction of CPU time. An alternative approach is described here, in which high-level reconstructed observables are generated using a generative neural network to bypass low-level details. This network is trained to reproduce the particle species likelihood function values based on the track kinematic parameters and detector occupancy. The fast simulation is trained using real data samples collected by LHCb during Run 2. We demonstrate that this approach provides high-fidelity results.
