Fedor Ratnikov

Approach to Finding a Robust Deep Learning Model

May 22, 2025

Abstract: The rapid development of machine learning (ML) and artificial intelligence (AI) applications requires the training of large numbers of models. This growing demand highlights the importance of training models without human supervision, while ensuring that their predictions are reliable. In response to this need, we propose a novel approach for determining model robustness. This approach, supplemented with a proposed model selection algorithm designed as a meta-algorithm, is versatile and applicable to any machine learning model, provided that it is appropriate for the task at hand. This study demonstrates the application of our approach to evaluate the robustness of deep learning models. To this end, we study small models composed of a few convolutional and fully connected layers, using common optimizers due to their ease of interpretation and computational efficiency. Within this framework, we address the influence of training sample size, model weight initialization, and inductive bias on the robustness of deep learning models.

Optimisation of the Accelerator Control by Reinforcement Learning: A Simulation-Based Approach

Mar 12, 2025

Abstract: Optimizing accelerator control is a critical challenge in experimental particle physics, requiring significant manual effort and resource expenditure. Traditional tuning methods are often time-consuming and reliant on expert input, highlighting the need for more efficient approaches. This study aims to create a simulation-based framework integrated with Reinforcement Learning (RL) to address these challenges. Using Elegant as the simulation backend, we developed a Python wrapper that simplifies the interaction between RL algorithms and accelerator simulations, enabling seamless input management, simulation execution, and output analysis. The proposed RL framework acts as a co-pilot for physicists, offering intelligent suggestions to enhance beamline performance, reduce tuning time, and improve operational efficiency. As a proof of concept, we demonstrate the application of our RL approach to an accelerator control problem and highlight the improvements in efficiency and performance achieved through our methodology. We discuss how the integration of simulation tools with a Python-based RL framework provides a powerful resource for the accelerator physics community, showcasing the potential of machine learning in optimizing complex physical systems.

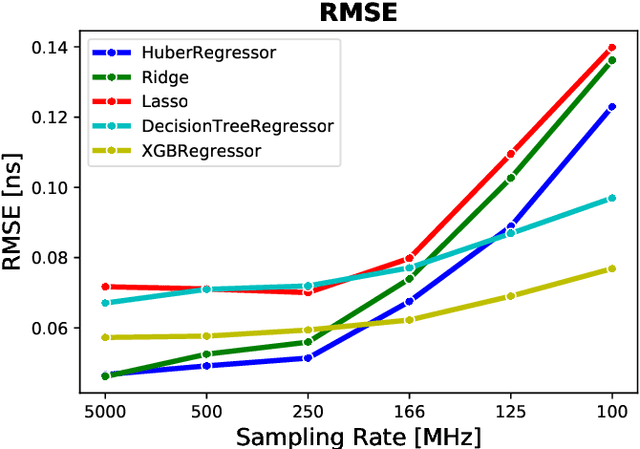

What Machine Learning Can Do for Focusing Aerogel Detectors

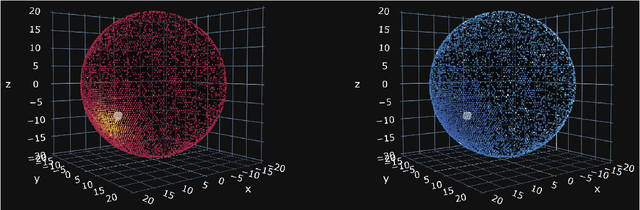

Dec 05, 2023

Abstract: Particle identification at the Super Charm-Tau factory experiment will be provided by a Focusing Aerogel Ring Imaging CHerenkov detector (FARICH). The detector's location makes proper cooling difficult, so a significant number of ambient background hits are captured. These hits must be mitigated to reduce the data flow and improve particle velocity resolution. In this work we present several approaches to filtering signal hits, inspired by machine learning techniques from computer vision.

Energy reconstruction for large liquid scintillator detectors with machine learning techniques: aggregated features approach

Jun 17, 2022

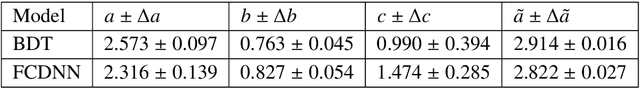

Abstract: Large-scale detectors consisting of a liquid scintillator (LS) target surrounded by an array of photo-multiplier tubes (PMT) are widely used in modern neutrino experiments: Borexino, KamLAND, Daya Bay, Double Chooz, RENO, and the upcoming JUNO with its satellite detector TAO. Such apparatuses are able to measure neutrino energy, which can be derived from the amount of light and its spatial and temporal distribution over PMT channels. However, achieving a fine energy resolution in large-scale detectors is challenging. In this work, we present machine learning methods for energy reconstruction in JUNO, the most advanced detector of its type. We focus on positron events in the energy range of 0-10 MeV, which corresponds to the main signal in JUNO: neutrinos originating from nuclear reactor cores and detected via the inverse beta-decay channel. We consider Boosted Decision Trees and a Fully Connected Deep Neural Network trained on aggregated features, calculated using information collected by PMTs. We describe the details of our feature engineering procedure and show that machine learning models can provide energy resolution $\sigma = 3\%$ at 1 MeV using subsets of engineered features. The dataset for model training and testing is generated by the Monte Carlo method with the official JUNO software. We also consider calibration sources for evaluating the performance of the reconstruction algorithms on real data.

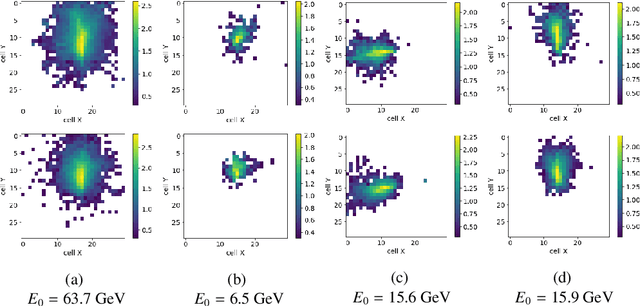

Using Machine Learning to Speed Up and Improve Calorimeter R&D

Mar 27, 2020

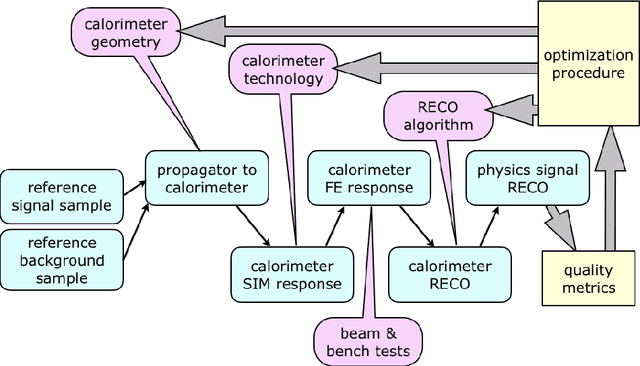

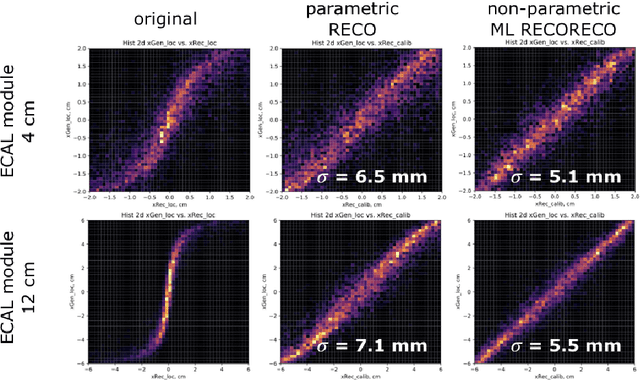

Abstract: The design of new experiments, as well as the upgrade of ongoing ones, is a continuous process in experimental high energy physics. Since the best solution is a trade-off between different kinds of limitations, a quick turnaround is necessary to evaluate physics performance for different techniques in different configurations. Two typical problems slow down the evaluation of physics performance for particular approaches to calorimeter detector technologies and configurations:

- Emulating particular detector properties, including raw detector response together with a signal processing chain, to adequately simulate a calorimeter response for different signal and background conditions. This includes combining detector properties obtained from the general Geant simulation with properties obtained from different kinds of bench and beam tests of detector and electronics prototypes.
- Building an adequate reconstruction algorithm for physics reconstruction of the detector response, reasonably tuned to extract most of the performance provided by the given detector configuration.

When approached from first principles, both problems require significant development effort. Fortunately, both may be addressed with modern machine learning approaches, which allow the available details of the detector techniques to be combined into the corresponding higher-level physics performance in a semi-automated way. In this paper, we discuss the use of advanced machine learning techniques to speed up and improve the precision of the detector development and optimisation cycle, with an emphasis on the experience and practical results obtained by applying this approach to optimising the electromagnetic calorimeter design as part of the upgrade project for the LHCb detector at the LHC.

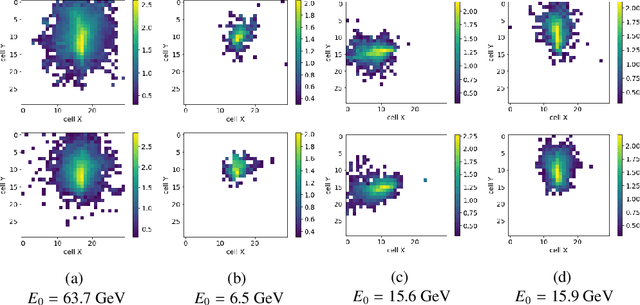

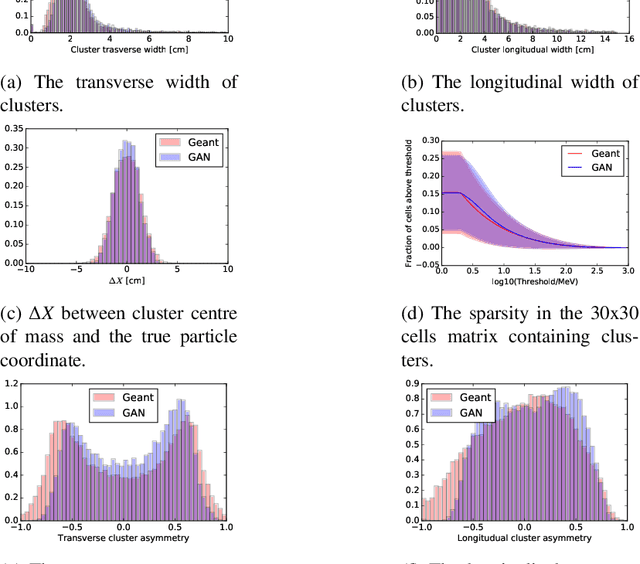

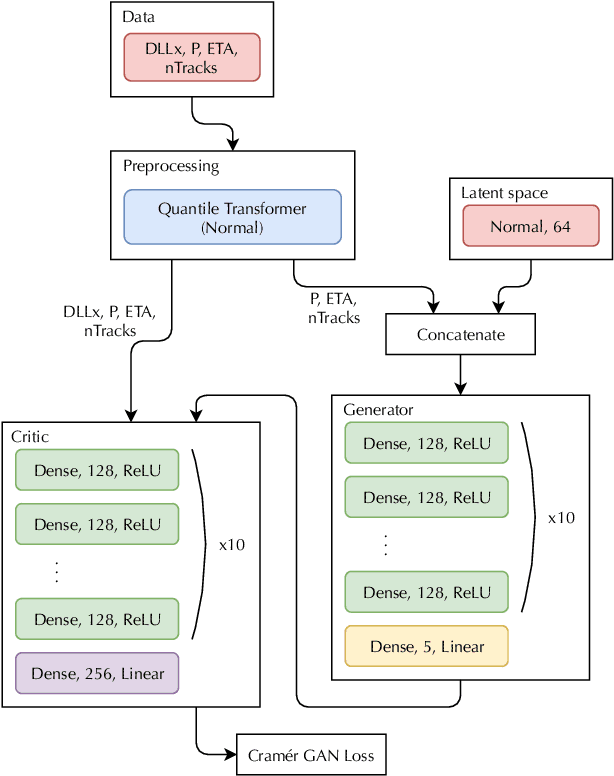

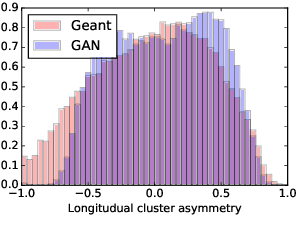

Generative Adversarial Networks for LHCb Fast Simulation

Mar 21, 2020

Abstract: LHCb is one of the major experiments operating at the Large Hadron Collider at CERN. The richness of the physics program and the increasing precision of the measurements in LHCb lead to the need for ever larger simulated samples. This need will increase further when the upgraded LHCb detector starts collecting data in LHC Run 3. Given the computing resources pledged for the production of Monte Carlo simulated events in the coming years, the use of fast simulation techniques will be mandatory to cope with the expected dataset size. In LHCb, generative models, which are nowadays widely used for computer vision and image processing, are being investigated in order to accelerate the generation of showers in the calorimeter and of high-level responses of the Cherenkov detector. We demonstrate that this approach provides high-fidelity results along with a significant speed increase and discuss possible implications of these results. We also present an implementation of this algorithm in the LHCb simulation software and validation tests.

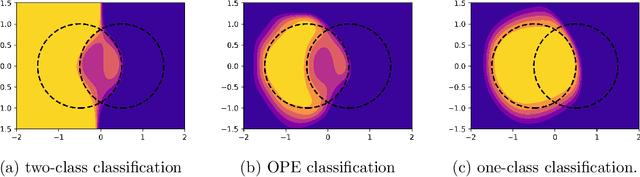

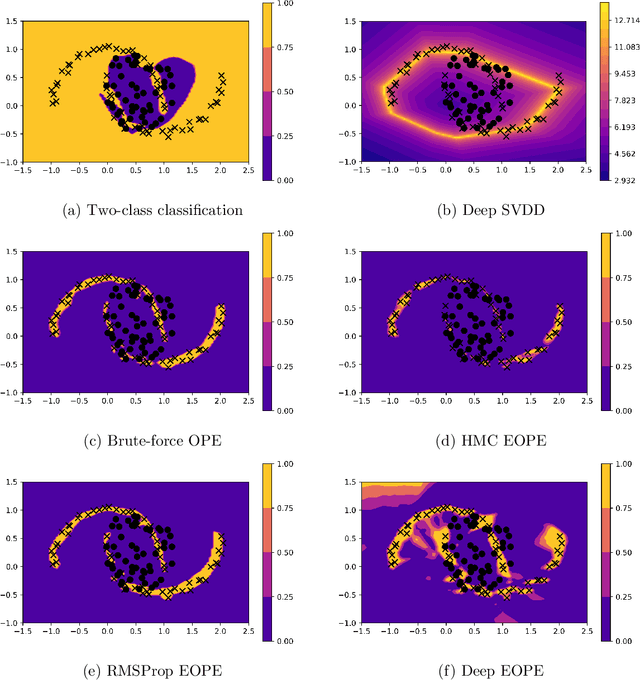

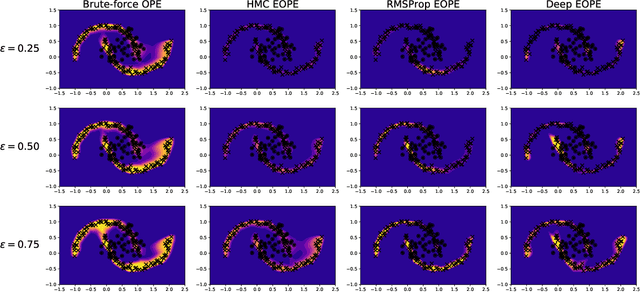

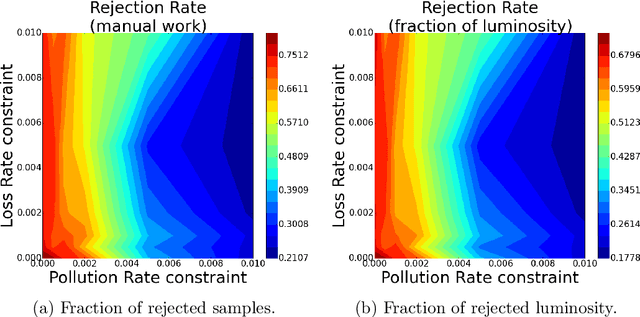

$(1 + \varepsilon)$-class Classification: an Anomaly Detection Method for Highly Imbalanced or Incomplete Data Sets

Jun 14, 2019

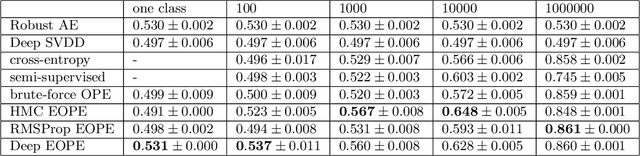

Abstract: Anomaly detection is not an easy problem, since the distribution of anomalous samples is unknown a priori. We explore a novel method that offers a trade-off between one-class and two-class approaches and leads to better performance on anomaly detection problems with small or non-representative anomalous samples. The method is evaluated using several data sets and compared to a set of conventional one-class and two-class approaches.

Cherenkov Detectors Fast Simulation Using Neural Networks

Mar 28, 2019

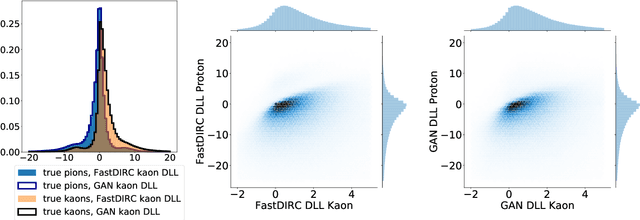

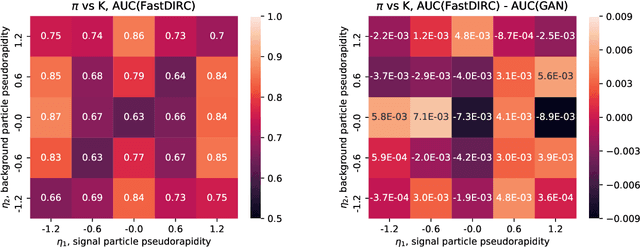

Abstract: We propose a way to simulate Cherenkov detector response using a generative adversarial neural network to bypass low-level details. This network is trained to reproduce high-level features of the simulated detector events based on input observables of incident particles. This allows a dramatic increase in simulation speed. We demonstrate that this approach provides simulation precision consistent with the baseline and discuss possible implications of these results.

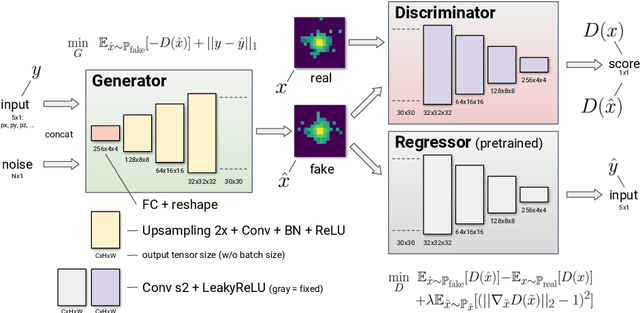

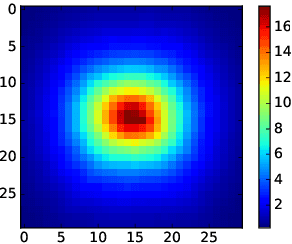

Generative Models for Fast Calorimeter Simulation. LHCb case

Dec 04, 2018

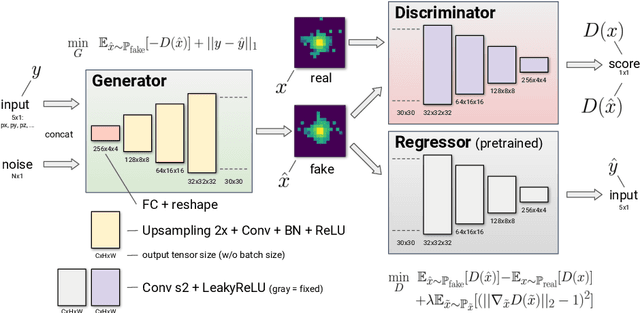

Abstract: Simulation is one of the key components in high energy physics. Historically, it has relied on Monte Carlo methods, which require a tremendous amount of computational resources. These methods may struggle to meet the expected needs of the High Luminosity Large Hadron Collider (HL LHC), so the experiment urgently needs new fast simulation techniques. We introduce a new Deep Learning framework based on Generative Adversarial Networks which can be faster than traditional simulation methods by five orders of magnitude with reasonable simulation accuracy. This approach will allow physicists to produce a sufficiently large amount of simulated data for the next HL LHC experiments using limited computing resources.

Towards automation of data quality system for CERN CMS experiment

Sep 25, 2017

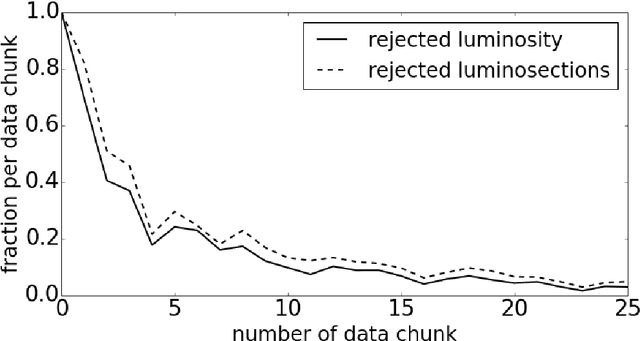

Abstract: Daily operation of a large-scale experiment is a challenging task, particularly from the perspective of routinely monitoring the quality of the data being taken. We describe an approach that uses Machine Learning in an automated system for monitoring data quality, based on partial use of data qualified manually by detector experts. The system automatically classifies marginal cases, both good and bad data, and uses human expert decisions to classify the remaining "grey area" cases. This study uses collision data collected by the CMS experiment at the LHC in 2010. We demonstrate that the proposed workflow is able to automatically process at least 20% of samples without noticeable degradation of the result.
