Nektarios Koukourakis

Calibration-free quantitative phase imaging in multi-core fiber endoscopes using end-to-end deep learning

Dec 12, 2023

Abstract: Quantitative phase imaging (QPI) through multi-core fibers (MCFs) is an emerging in vivo label-free endoscopic imaging modality with minimal invasiveness. However, the computational demands of conventional iterative phase retrieval algorithms have limited their real-time imaging potential. We demonstrate a learning-based MCF phase imaging method that significantly reduces the phase reconstruction time to 5.5 ms, enabling video-rate imaging at 181 fps. Moreover, we introduce an innovative optical system that automatically generates the first open-source dataset tailored for MCF phase imaging, comprising 50,176 paired speckle and phase images. Our trained deep neural network (DNN) demonstrates robust phase reconstruction performance in experiments with a mean fidelity of up to 99.8%. Such an efficient fiber phase imaging approach can broaden the applications of QPI in hard-to-reach areas.

AI-driven projection tomography with multicore fibre-optic cell rotation

Dec 12, 2023

Abstract: Optical tomography has emerged as a non-invasive imaging method, providing three-dimensional insights into subcellular structures and thereby enabling a deeper understanding of cellular functions, interactions, and processes. Conventional optical tomography methods are constrained by a limited illumination scanning range, leading to anisotropic resolution and incomplete imaging of cellular structures. To overcome this problem, we employ a compact multi-core fibre-optic cell rotator system that facilitates precise optical manipulation of cells within a microfluidic chip, achieving full-angle projection tomography with isotropic resolution. Moreover, we demonstrate an AI-driven tomographic reconstruction workflow, which can be a paradigm shift from conventional computational methods, often demanding manual processing, to a fully autonomous process. The performance of the proposed cell rotation tomography approach is validated through the three-dimensional reconstruction of cell phantoms and HL60 human cancer cells. The versatility of this learning-based tomographic reconstruction workflow paves the way for its broad application across diverse tomographic imaging modalities, including but not limited to flow cytometry tomography and acoustic rotation tomography. Therefore, this AI-driven approach can propel advancements in cell biology, aiding in the inception of pioneering therapeutics, and augmenting early-stage cancer diagnostics.

Quantitative phase imaging through an ultra-thin lensless fiber endoscope

Dec 25, 2021

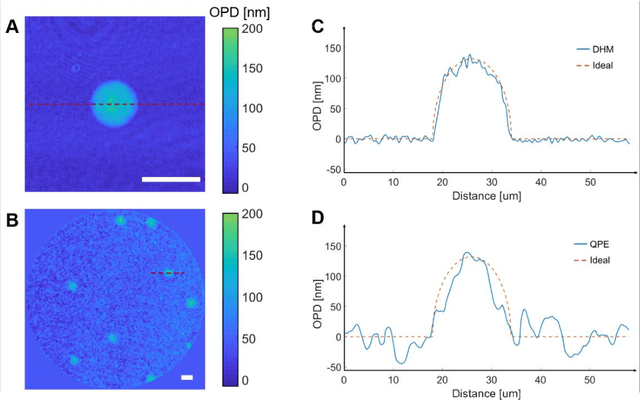

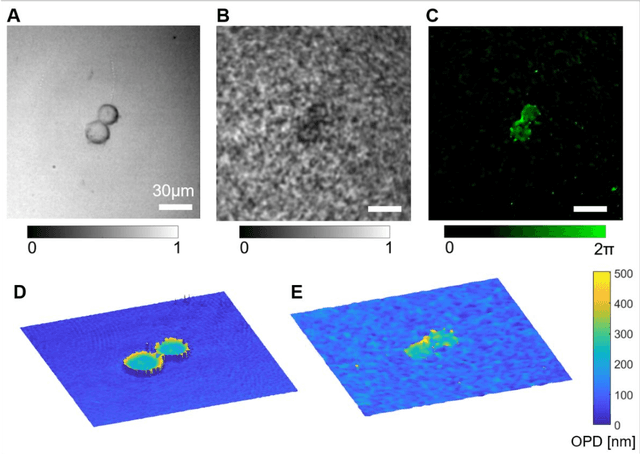

Abstract: Quantitative phase imaging (QPI) is a label-free technique providing both morphology and quantitative biophysical information in biomedicine. However, applying such a powerful technique to in vivo pathological diagnosis remains challenging. Multi-core fiber bundles (MCFs) enable ultra-thin probes for in vivo imaging, but current MCF imaging techniques are limited to amplitude imaging modalities. We demonstrate a computational lensless microendoscope that uses an ultra-thin bare MCF to perform quantitative phase imaging of biomedical samples with up to 1 µm lateral resolution and nanoscale axial resolution. The incident complex light field at the measurement side is precisely reconstructed from a single-shot far-field speckle pattern at the detection side, enabling digital focusing and 3D volumetric reconstruction without any mechanical movement. The accuracy of the quantitative phase reconstruction is validated by imaging the phase target and hydrogel beads through the MCF. With the proposed imaging modality, 3D imaging of human cancer cells is achieved through the ultra-thin fiber endoscope, promising widespread clinical applications.

Lensless multicore-fiber microendoscope for real-time tailored light field generation with phase encoder neural network (CoreNet)

Nov 24, 2021

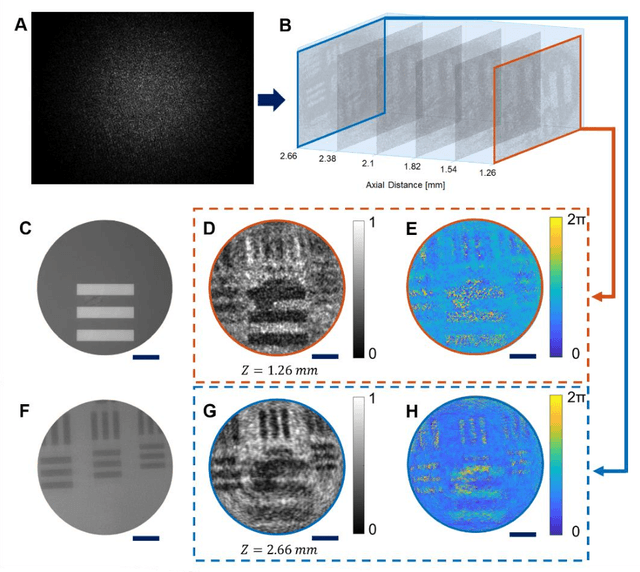

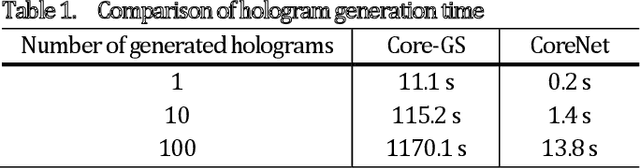

Abstract: The generation of tailored light with multi-core fiber (MCF) lensless microendoscopes is widely used in biomedicine. However, the computer-generated holograms (CGHs) used for such applications are typically generated by iterative algorithms, which demand high computational effort, limiting advanced applications like in vivo optogenetic stimulation and fiber-optic cell manipulation. The random and discrete distribution of the fiber cores induces strong spatial aliasing in the CGHs; hence, an approach that can rapidly generate tailored CGHs for MCFs is in high demand. We demonstrate a novel phase encoder deep neural network (CoreNet), which can generate accurate tailored CGHs for MCFs at near video rate. Simulations show that CoreNet can reduce the computation time by two orders of magnitude and increase the fidelity of the generated light field compared to conventional CGH techniques. For the first time, tailored CGHs generated in real time are loaded on the fly onto the phase-only SLM for dynamic light-field generation through the MCF microendoscope in experiments. This paves the way for real-time cell rotation and several further applications that require real-time high-fidelity light delivery in biomedicine.
