Liangcai Cao

Ptychographic non-line-of-sight imaging for depth-resolved visualization of hidden objects

May 17, 2024

Abstract: Non-line-of-sight (NLOS) imaging enables the visualization of objects hidden from direct view, with applications in surveillance, remote sensing, and light detection and ranging. Here, we introduce an NLOS imaging technique termed ptychographic NLOS (pNLOS), which leverages coded ptychography for depth-resolved imaging of obscured objects. Our approach involves scanning a laser spot on a wall to illuminate the hidden objects in an obscured region. The wavefields reflected from these objects travel back to the wall, where they are modulated by the wall's complex-valued profile, and the resulting diffraction patterns are captured by a camera. By modulating the object wavefields, the wall surface plays the role of the coded layer in coded ptychography. As we scan the laser spot to different positions, the reflected object wavefields on the wall translate accordingly, with the shifts varying for objects at different depths. This translational diversity enables the acquisition of a set of modulated diffraction patterns referred to as a ptychogram. By processing the ptychogram, we recover both the objects at different depths and the modulation profile of the wall surface. Experimental results demonstrate high-resolution, high-fidelity imaging of hidden objects, showcasing the potential of pNLOS for depth-aware vision beyond the direct line of sight.

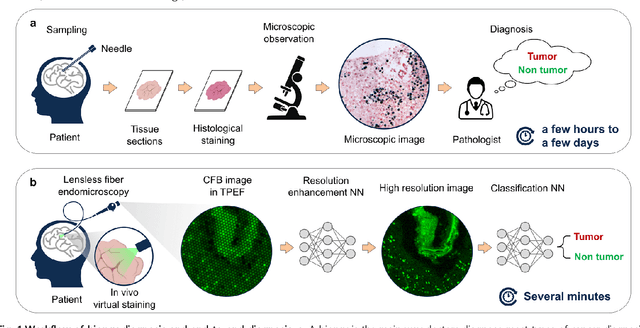

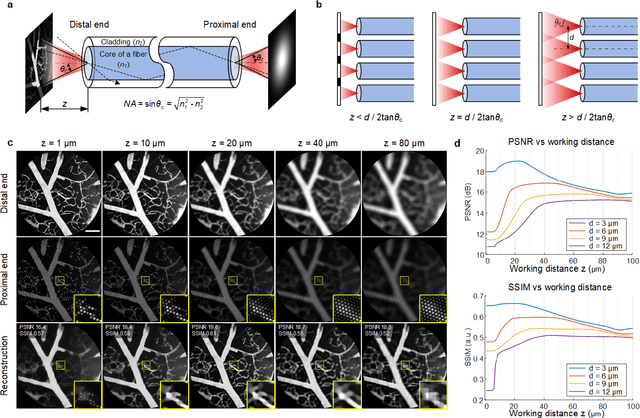

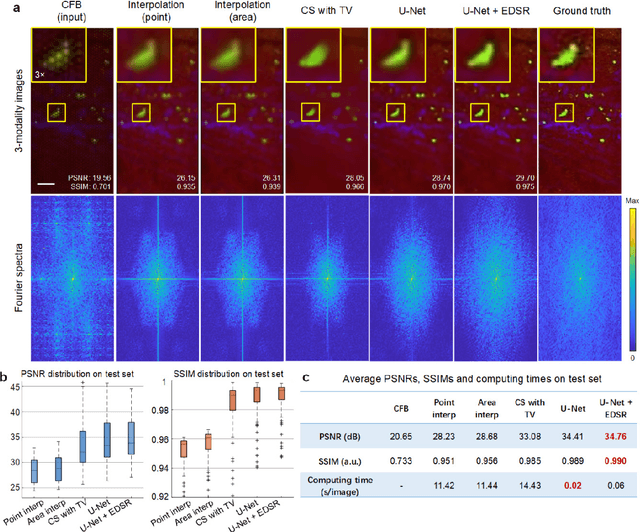

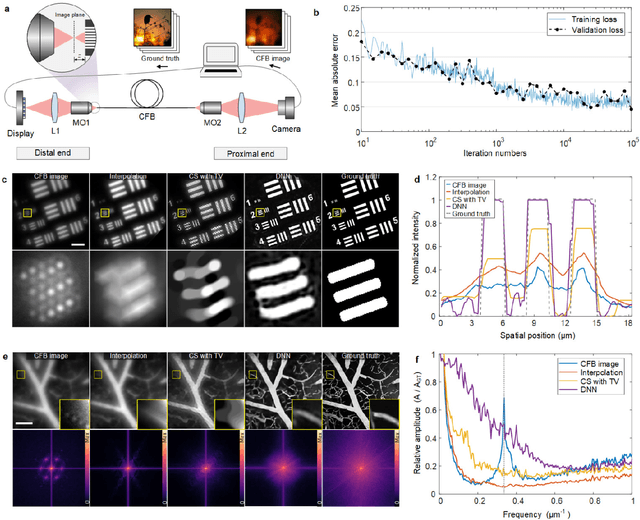

Learned end-to-end high-resolution lensless fiber imaging toward intraoperative real-time cancer diagnosis

Feb 28, 2022

Abstract: Endomicroscopy is indispensable for minimally invasive diagnostics in clinical practice. For optical keyhole monitoring of surgical interventions, high-resolution fiber endoscopic imaging is considered very promising, especially in combination with label-free imaging techniques to realize in vivo diagnosis. However, the inherent honeycomb artifacts of coherent fiber bundles (CFBs) reduce the resolution and limit clinical applications. We propose an end-to-end lensless fiber imaging scheme toward intraoperative real-time cancer diagnosis. The framework includes resolution enhancement and classification networks that use single-shot fiber bundle images to provide both high-resolution images and a tumor diagnosis. The well-trained resolution enhancement network not only recovers high-resolution features beyond the physical limitations of the CFB, but also helps improve the tumor recognition rate. For glioblastoma in particular, the resolution enhancement network helps increase the classification accuracy from 90.8% to 95.6%. The technique can enable real-time histological imaging through lensless fiber endoscopy and is promising for rapid and minimally invasive intraoperative diagnosis in clinics.

Quantitative phase imaging through an ultra-thin lensless fiber endoscope

Dec 25, 2021

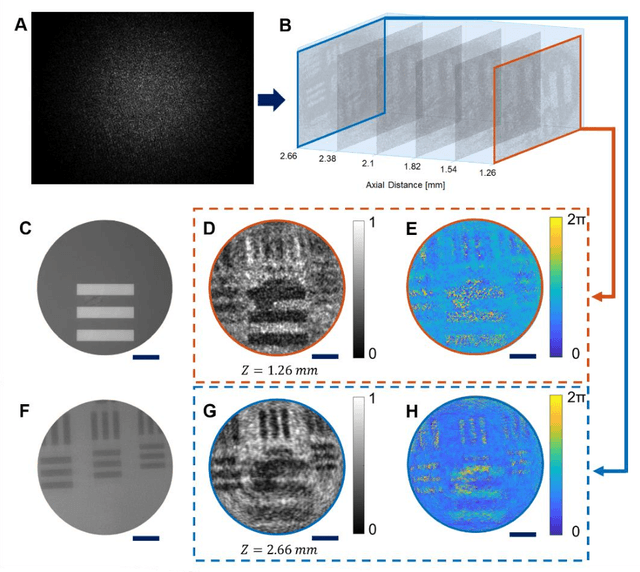

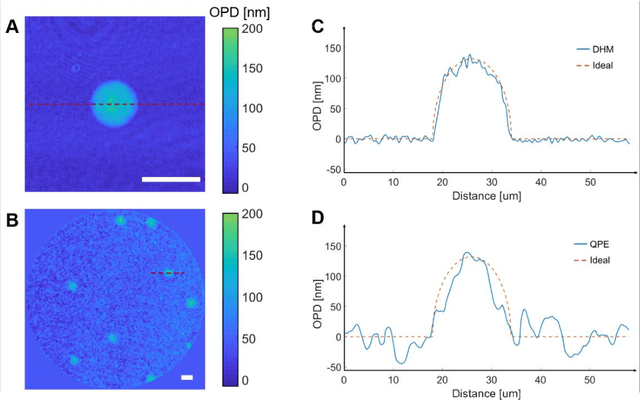

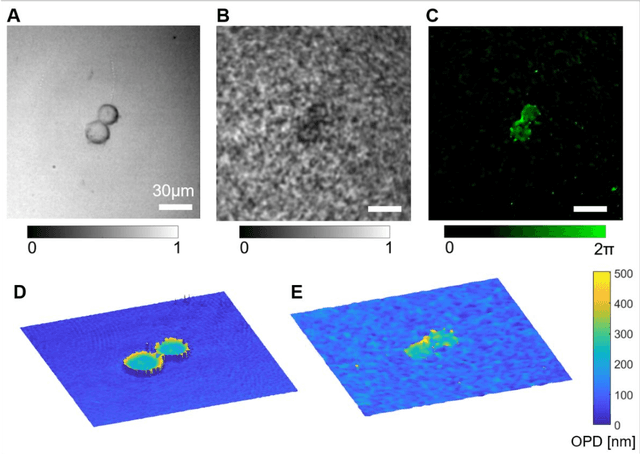

Abstract: Quantitative phase imaging (QPI) is a label-free technique providing both morphology and quantitative biophysical information in biomedicine. However, applying such a powerful technique to in vivo pathological diagnosis remains challenging. Multi-core fiber bundles (MCFs) enable ultra-thin probes for in vivo imaging, but current MCF imaging techniques are limited to amplitude imaging modalities. We demonstrate a computational lensless microendoscope that uses an ultra-thin bare MCF to perform quantitative phase imaging of biomedical samples with up to 1 µm lateral resolution and nanoscale axial resolution. The incident complex light field at the measurement side is precisely reconstructed from a single-shot far-field speckle pattern at the detection side, enabling digital focusing and 3D volumetric reconstruction without any mechanical movement. The accuracy of the quantitative phase reconstruction is validated by imaging a phase target and hydrogel beads through the MCF. With the proposed imaging modality, 3D imaging of human cancer cells is achieved through the ultra-thin fiber endoscope, promising widespread clinical applications.
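
The digital focusing mentioned in this abstract relies on the fact that a reconstructed complex field can be numerically propagated to another plane. The abstract does not say which propagation model is used; the sketch below assumes the angular spectrum method, a common choice, and works on a 1D field for brevity. The function names `dft` and `propagate`, the sampling values, and the naive transform are all illustrative assumptions, not the authors' implementation. In practice an FFT library would replace the `dft` helper.

```python
import cmath
import math

def dft(x, inverse=False):
    """Naive O(N^2) discrete Fourier transform (stand-in for an FFT)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n))
        out.append(s / n if inverse else s)
    return out

def propagate(field, wavelength, pitch, z):
    """Propagate a sampled 1D complex field by a distance z (angular spectrum)."""
    n = len(field)
    k = 2 * math.pi / wavelength
    spectrum = dft(field)
    for idx in range(n):
        # Spatial frequency of bin idx, using the usual FFT ordering.
        fx = (idx if idx < n // 2 else idx - n) / (n * pitch)
        kz_sq = k ** 2 - (2 * math.pi * fx) ** 2
        # Evanescent components (kz_sq <= 0) are discarded in this sketch.
        spectrum[idx] *= cmath.exp(1j * math.sqrt(kz_sq) * z) if kz_sq > 0 else 0
    return dft(spectrum, inverse=True)

# Hypothetical example, all lengths in micrometers: a small phase object.
n = 32
sample = [cmath.exp(0.8j) if 12 <= i < 20 else 1 + 0j for i in range(n)]
defocused = propagate(sample, wavelength=0.5, pitch=1.0, z=20.0)
refocused = propagate(defocused, wavelength=0.5, pitch=1.0, z=-20.0)
max_error = max(abs(a - b) for a, b in zip(sample, refocused))
```

Propagating by `-z` undoes the propagation by `z` because every retained frequency component is only multiplied by a unit-modulus phase factor. Sweeping `z` over a range of values is the numerical counterpart of refocusing without mechanical movement.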

Lensless multicore-fiber microendoscope for real-time tailored light field generation with phase encoder neural network (CoreNet)

Nov 24, 2021

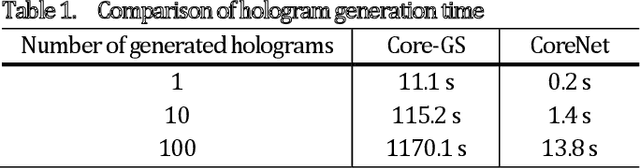

Abstract: The generation of tailored light with multi-core fiber (MCF) lensless microendoscopes is widely used in biomedicine. However, the computer-generated holograms (CGHs) used for such applications are typically generated by iterative algorithms, which demand high computational effort, limiting advanced applications like in vivo optogenetic stimulation and fiber-optic cell manipulation. The random and discrete distribution of the fiber cores induces strong spatial aliasing in the CGHs, so an approach that can rapidly generate tailored CGHs for MCFs is in high demand. We demonstrate a novel phase encoder deep neural network (CoreNet), which can generate accurate tailored CGHs for MCFs at near video rate. Simulations show that CoreNet can reduce the computation time by two orders of magnitude and increase the fidelity of the generated light field compared to conventional CGH techniques. For the first time, tailored CGHs generated in real time are loaded on the fly to the phase-only SLM for dynamic light field generation through the MCF microendoscope in experiments. This paves the way for real-time cell rotation and several further applications that require real-time high-fidelity light delivery in biomedicine.

A Complex Constrained Total Variation Image Denoising Algorithm with Application to Phase Retrieval

Sep 12, 2021

Abstract: This paper considers the constrained total variation (TV) denoising problem for complex-valued images. We extend the definition of TV seminorms for real-valued images to handle complex-valued ones. In particular, we introduce two types of complex TV in both isotropic and anisotropic forms. To solve the constrained denoising problem, we adopt a dual approach and derive an accelerated gradient projection algorithm. We further generalize the proposed denoising algorithm as a key building block of the proximal gradient scheme to solve a vast class of complex constrained optimization problems with TV regularizers. As an example, we apply the proposed algorithmic framework to phase retrieval. We combine the complex TV regularizer with the conventional projection-based method within the constrained complex TV model. Initial results from both simulated and optical experiments demonstrate the validity of the constrained TV model in extracting sparsity priors within complex-valued images, while also utilizing physically tractable constraints that help speed up convergence.
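
To make the isotropic and anisotropic forms concrete, here is a minimal sketch of a TV seminorm for a complex-valued image. The abstract does not give the paper's two definitions, so this assumes one plausible variant: forward differences whose complex modulus is taken before summing. The function name `complex_tv` and the boundary handling (zero difference at the last row and column) are illustrative assumptions.

```python
import math

def complex_tv(img, isotropic=True):
    """Total variation of a complex-valued image given as a list of rows.

    Uses forward differences with a zero difference at the last row and
    column, and the modulus of each complex difference (assumed definition).
    """
    rows, cols = len(img), len(img[0])
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            dx = img[i][j + 1] - img[i][j] if j + 1 < cols else 0
            dy = img[i + 1][j] - img[i][j] if i + 1 < rows else 0
            if isotropic:
                # Isotropic: Euclidean norm of the two difference magnitudes.
                total += math.sqrt(abs(dx) ** 2 + abs(dy) ** 2)
            else:
                # Anisotropic: sum of the two difference magnitudes.
                total += abs(dx) + abs(dy)
    return total

# Hypothetical 2x2 complex image with amplitude and phase variation.
image = [[0 + 0j, 1 + 0j],
         [0 + 1j, 0 + 0j]]
tv_iso = complex_tv(image, isotropic=True)     # 2 + sqrt(2)
tv_aniso = complex_tv(image, isotropic=False)  # 4
```

The anisotropic value is never smaller than the isotropic one, since the sum of two magnitudes bounds their Euclidean norm from above. Both vanish for a constant image, which is what makes them seminorms rather than norms.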
