Necdet Serhat Aybat

A Variance-Reduced Stochastic Accelerated Primal Dual Algorithm

Feb 19, 2022

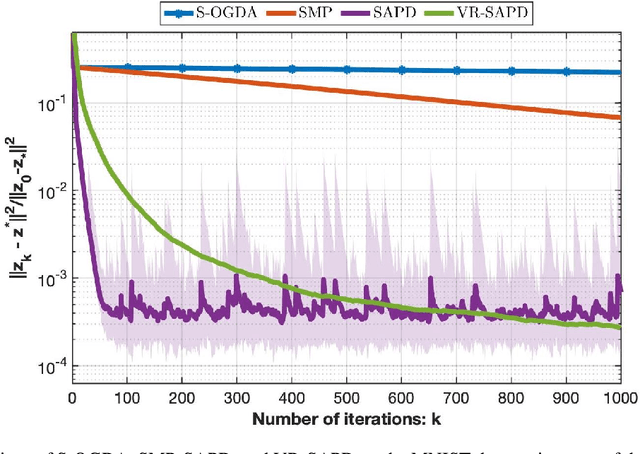

Abstract: In this work, we consider strongly convex strongly concave (SCSC) saddle point (SP) problems $\min_{x\in\mathbb{R}^{d_x}}\max_{y\in\mathbb{R}^{d_y}}f(x,y)$, where $f$ is $L$-smooth, $f(\cdot,y)$ is $\mu$-strongly convex for every $y$, and $f(x,\cdot)$ is $\mu$-strongly concave for every $x$. Such problems arise frequently in machine learning in the context of robust empirical risk minimization (ERM), e.g., *distributionally robust* ERM, where partial gradients are estimated using mini-batches of data points. Assuming access to an unbiased stochastic first-order oracle, we consider the stochastic accelerated primal dual (SAPD) algorithm, recently introduced in Zhang et al. [2021] for SCSC SP problems as a method that is robust to gradient noise. In particular, SAPD recovers the well-known stochastic gradient descent ascent (SGDA) method as a special case when the momentum parameter is set to zero, and it can achieve an accelerated rate when the momentum parameter is properly tuned, improving the dependence on $\kappa \triangleq L/\mu$ from $\kappa^2$ for SGDA to $\kappa$. We propose efficient variance-reduction strategies for SAPD based on Richardson-Romberg extrapolation and show that our method improves upon SAPD both in practice and in theory.
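To make the role of the momentum parameter concrete, here is a minimal Python sketch of an SAPD-style iteration on a hypothetical SCSC test problem $f(x,y)=\frac{\mu}{2}\|x\|^2 + x^\top A y - \frac{\mu}{2}\|y\|^2$, whose unique saddle point is $(0,0)$. The loop is modeled on the SAPD template of Zhang et al. [2021] (a dual step along a momentum-corrected partial gradient, then a primal step at the new dual iterate), specialized to the unconstrained case with no proximal terms. The step sizes `tau` and `sigma`, the momentum `theta`, the additive Gaussian noise model for the oracle, and all function names are illustrative assumptions, not tuned values from the paper; with `theta = 0` the loop reduces to alternating SGDA. The Richardson-Romberg variance-reduction step proposed in the paper is not shown.

```python
import numpy as np


def make_bilinear_problem(mu, A):
    """Hypothetical SCSC test problem f(x, y) = mu/2 |x|^2 + x^T A y - mu/2 |y|^2.

    Its unique saddle point is (x, y) = (0, 0).
    """
    def grad_x(x, y):
        return mu * x + A @ y

    def grad_y(x, y):
        return A.T @ x - mu * y

    return grad_x, grad_y


def sapd(grad_x, grad_y, x0, y0, tau, sigma, theta, n_iters, noise_std=0.0, seed=0):
    """SAPD-style iteration (unconstrained, no prox terms); theta = 0 gives alternating SGDA.

    The stochastic oracle is modeled, as an assumption, by adding isotropic
    Gaussian noise to each exact partial gradient (an unbiased estimate).
    """
    rng = np.random.default_rng(seed)

    def noisy(g):
        return g + noise_std * rng.standard_normal(g.shape)

    x, y = np.array(x0, dtype=float), np.array(y0, dtype=float)
    gy_prev = None
    for _ in range(n_iters):
        gy = noisy(grad_y(x, y))
        if gy_prev is None:
            gy_prev = gy  # convention (x_{-1}, y_{-1}) = (x_0, y_0), so no momentum at k = 0
        s = gy + theta * (gy - gy_prev)    # momentum-corrected dual direction
        y = y + sigma * s                  # dual (ascent) step
        x = x - tau * noisy(grad_x(x, y))  # primal (descent) step at the new y
        gy_prev = gy
    return x, y
```

Because the previous dual gradient estimate is stored and reused, the momentum term in this sketch costs no extra oracle calls per iteration.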

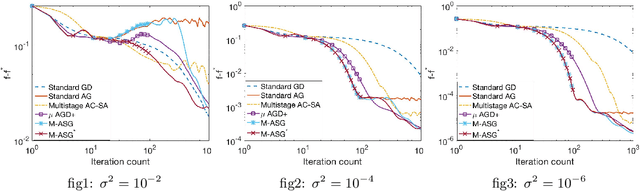

A Universally Optimal Multistage Accelerated Stochastic Gradient Method

Jan 25, 2019

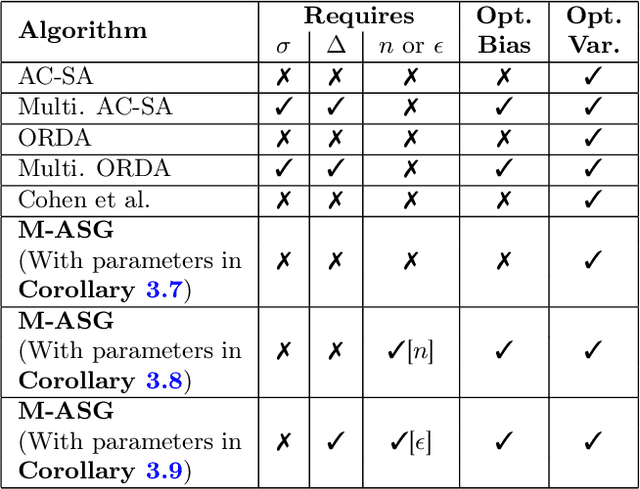

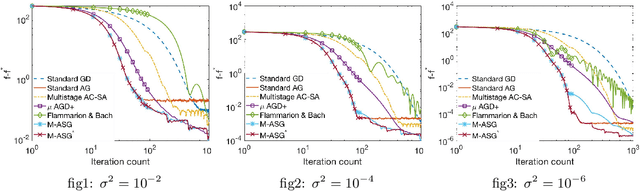

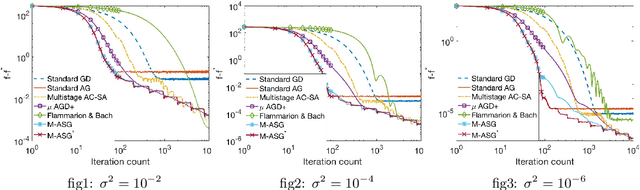

Abstract: We study the problem of minimizing a strongly convex and smooth function when only noisy estimates of its gradient are available. We propose a novel multistage accelerated algorithm that is universally optimal in the sense that it achieves the optimal rate in both the deterministic and stochastic cases and operates without knowledge of the noise characteristics. The algorithm consists of stages that use a stochastic version of Nesterov's accelerated algorithm with a specific restart and parameters selected to achieve the fastest reduction in the bias and variance terms of the convergence rate bounds.
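As a rough illustration of the multistage structure, the sketch below runs Nesterov's accelerated gradient method with a noisy gradient oracle in stages, resetting the momentum at the start of each stage and shrinking the step size from stage to stage. The schedule (step size $1/L$ in the first stage, then a 4x smaller step and twice as many iterations per subsequent stage), the momentum formula $\beta = (1-\sqrt{\mu\alpha})/(1+\sqrt{\mu\alpha})$, the Gaussian noise model, and the function name are illustrative assumptions, not the parameter choices derived in the paper.

```python
import numpy as np


def multistage_asg(grad, x0, L, mu, n_first, n_stages, noise_std=0.0, seed=0):
    """Hypothetical multistage stochastic Nesterov method with restarts.

    Stage 1 runs n_first iterations with step 1/L; each later stage (assumed
    schedule) uses a 4x smaller step and twice as many iterations.
    """
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    alpha, n_iters = 1.0 / L, n_first
    for _ in range(n_stages):
        beta = (1 - np.sqrt(mu * alpha)) / (1 + np.sqrt(mu * alpha))
        x_prev = x.copy()  # restart: momentum is reset at the start of every stage
        for _ in range(n_iters):
            y = x + beta * (x - x_prev)                             # extrapolation step
            g = grad(y) + noise_std * rng.standard_normal(x.shape)  # unbiased noisy gradient
            x_prev, x = x, y - alpha * g                            # gradient step at y
        alpha, n_iters = alpha / 4, 2 * n_iters
    return x
```

In this sketch the first stage, with the large step $1/L$, quickly shrinks the initial error (the bias term), while the later, smaller steps lower the noise floor (the variance term), which is the trade-off the abstract describes.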

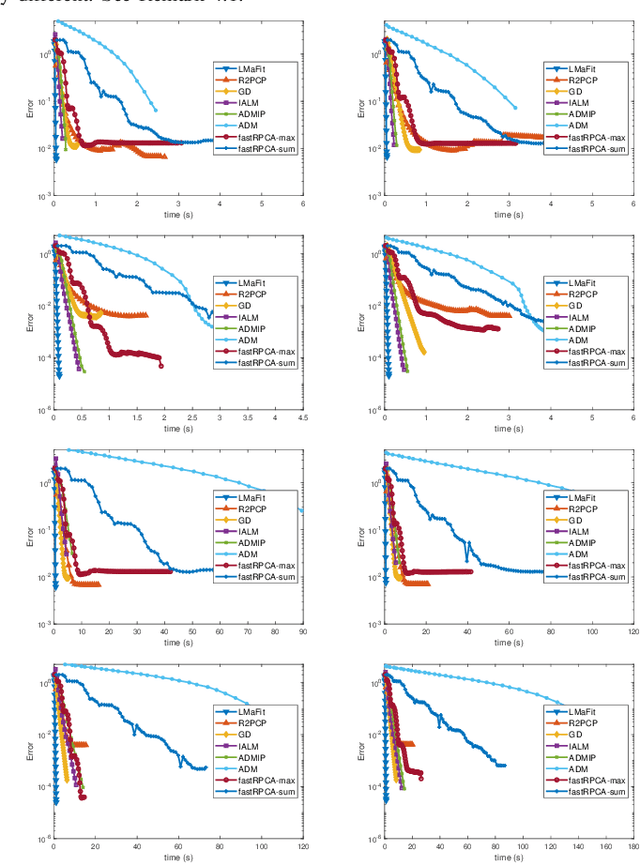

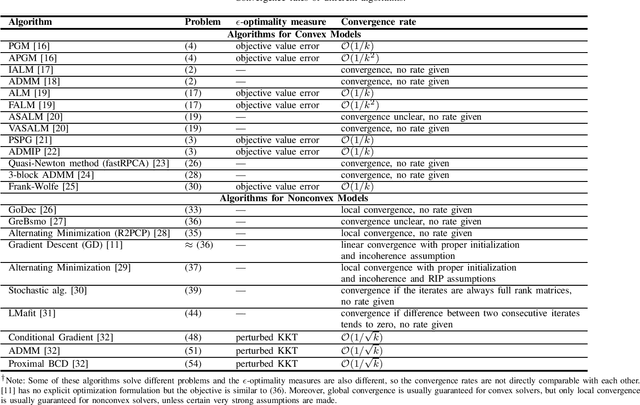

Efficient Optimization Algorithms for Robust Principal Component Analysis and Its Variants

Jun 09, 2018

Abstract: Robust PCA has drawn significant attention in the last decade due to its success in numerous application domains, ranging from bioinformatics, statistics, and machine learning to image and video processing in computer vision. Robust PCA and its variants, such as sparse PCA and stable PCA, can be formulated as optimization problems with exploitable special structure. Many specialized efficient optimization methods have been proposed to solve robust PCA and related problems. In this paper, we review existing optimization methods for solving convex and nonconvex relaxations/variants of robust PCA, discuss their advantages and disadvantages, and elaborate on their convergence behavior. We also provide some insights into possible future research directions, including new algorithmic frameworks that might be suitable for implementation in multi-processor settings to handle large-scale problems.
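For concreteness, one classical convex formulation of robust PCA is principal component pursuit (PCP), $\min_{L,S}\ \|L\|_* + \lambda\|S\|_1$ subject to $L + S = M$. The sketch below solves it with a standard ADMM (augmented Lagrangian) loop that alternates singular value thresholding for $L$ and entrywise soft thresholding for $S$. It is an illustrative example of the kind of method such a review covers, not code from the paper; the defaults $\lambda = 1/\sqrt{\max(m,n)}$ and penalty $\rho = mn/(4\|M\|_1)$ (entrywise $\ell_1$ norm) are common choices in the PCP literature, taken here as assumptions.

```python
import numpy as np


def soft_threshold(X, t):
    """Entrywise shrinkage: the prox operator of t * ||X||_1."""
    return np.sign(X) * np.maximum(np.abs(X) - t, 0.0)


def svt(X, t):
    """Singular value thresholding: the prox operator of t * ||X||_*."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * np.maximum(s - t, 0.0)) @ Vt


def rpca_pcp(M, lam=None, rho=None, tol=1e-7, max_iter=2000):
    """ADMM for min ||L||_* + lam * ||S||_1 s.t. L + S = M (illustrative sketch)."""
    m, n = M.shape
    lam = 1.0 / np.sqrt(max(m, n)) if lam is None else lam
    rho = m * n / (4.0 * np.abs(M).sum()) if rho is None else rho
    S = np.zeros_like(M)
    Y = np.zeros_like(M)  # Lagrange multiplier for the constraint L + S = M
    for _ in range(max_iter):
        L = svt(M - S + Y / rho, 1.0 / rho)              # low-rank update
        S = soft_threshold(M - L + Y / rho, lam / rho)   # sparse update
        R = M - L - S                                    # constraint residual
        Y = Y + rho * R                                  # dual ascent on the multiplier
        if np.linalg.norm(R) <= tol * np.linalg.norm(M):
            break
    return L, S
```

Each iteration costs one full SVD, which is the main bottleneck for large matrices and one reason specialized and parallel methods for this problem class are of interest.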

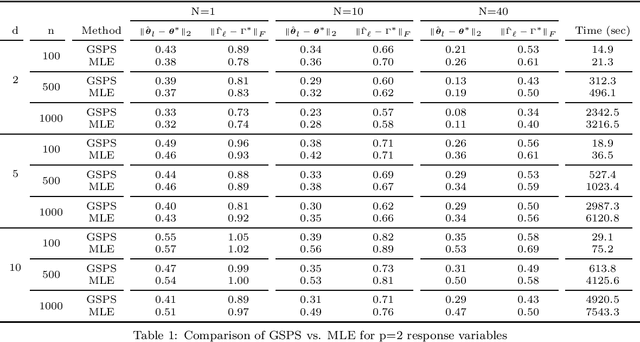

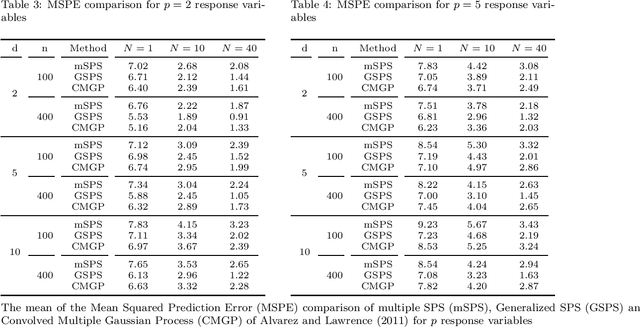

Generalized Sparse Precision Matrix Selection for Fitting Multivariate Gaussian Random Fields to Large Data Sets

Jul 06, 2017

Abstract: We present a new method for estimating multivariate, second-order stationary Gaussian Random Field (GRF) models based on the Sparse Precision matrix Selection (SPS) algorithm, proposed by Davanloo et al. (2015) for estimating scalar GRF models. Theoretical convergence rates for the estimated between-response covariance matrix and for the estimated parameters of the underlying spatial correlation function are established. Numerical tests using simulated and real datasets validate our theoretical findings. Data segmentation is used to handle large data sets.

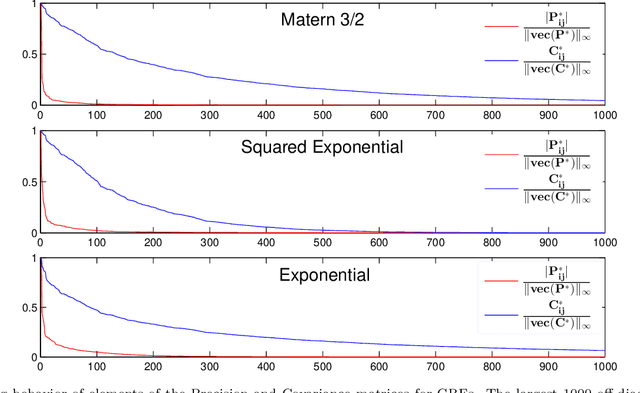

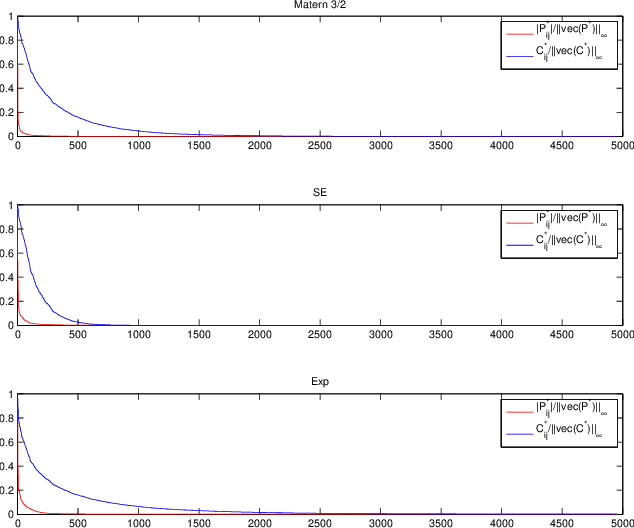

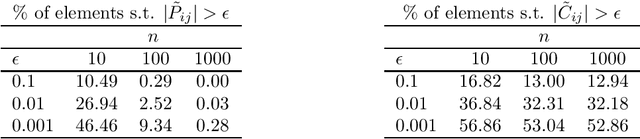

Sparse Precision Matrix Selection for Fitting Gaussian Random Field Models to Large Data Sets

Mar 03, 2016

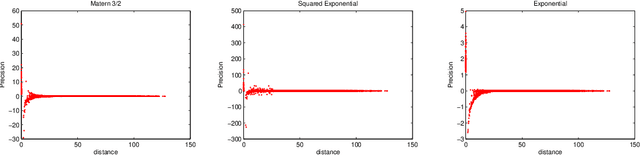

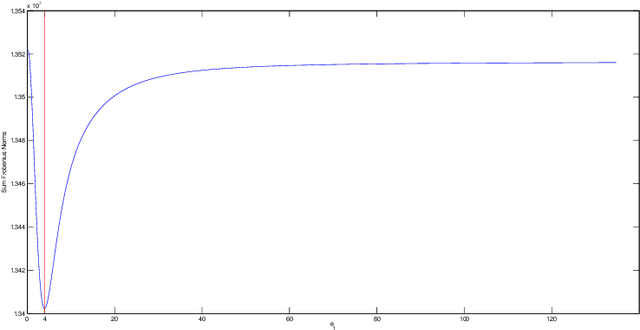

Abstract: Iterative methods for fitting a Gaussian Random Field (GRF) model to spatial data via maximum likelihood (ML) require $\mathcal{O}(n^3)$ floating point operations per iteration, where $n$ denotes the number of data locations. For large data sets, the $\mathcal{O}(n^3)$ complexity per iteration, together with the non-convexity of the ML problem, renders traditional ML methods inefficient for GRF fitting. The problem is further aggravated for anisotropic GRFs, where the number of covariance function parameters increases with the process domain dimension. In this paper, we propose a new two-step GRF estimation procedure for second-order stationary processes. First, a *convex* likelihood problem regularized with a weighted $\ell_1$-norm, utilizing the available distance information between observation locations, is solved using the Alternating Direction Method of Multipliers to fit a sparse *precision* (inverse covariance) matrix to the observed data. Second, the parameters of the GRF spatial covariance function are estimated by solving a least squares problem. Theoretical error bounds for the proposed estimator are provided; moreover, convergence of the estimator is shown as the number of samples per location increases. The proposed method is numerically compared with state-of-the-art methods for large $n$. Data segmentation schemes are implemented to handle large data sets.
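A minimal Python sketch of the two-step idea follows, under several stated assumptions. Step 1 solves a generic weighted $\ell_1$-penalized Gaussian likelihood (a weighted graphical lasso), $\min_{\Theta \succ 0} -\log\det\Theta + \operatorname{tr}(S\Theta) + \sum_{i,j} W_{ij}|\Theta_{ij}|$, by a textbook ADMM splitting; the paper's actual formulation, constraints, and distance-based weights may differ, so the weight matrix `W` is left to the caller. Step 2 assumes, hypothetically, an isotropic exponential covariance $c(d) = \sigma^2 e^{-d/\phi}$ and fits $(\sigma^2, \phi)$ to a covariance matrix by least squares, searching a grid of $\phi$ values with the closed-form optimal $\sigma^2$ for each. All function names are hypothetical.

```python
import numpy as np


def weighted_soft_threshold(X, T):
    """Entrywise shrinkage with a (possibly different) threshold per entry."""
    return np.sign(X) * np.maximum(np.abs(X) - T, 0.0)


def sparse_precision_admm(S, W, rho=1.0, tol=1e-9, max_iter=1000):
    """ADMM for min_{Theta > 0} -logdet(Theta) + tr(S Theta) + sum(W * |Theta|).

    Generic weighted graphical lasso (assumed formulation); W is a symmetric,
    nonnegative weight matrix supplied by the caller. Returns the sparse iterate Z.
    """
    p = S.shape[0]
    Z, U = np.eye(p), np.zeros((p, p))
    for _ in range(max_iter):
        # Theta-update: solve rho*Theta - Theta^{-1} = rho*(Z - U) - S in the eigenbasis.
        lam, Q = np.linalg.eigh(rho * (Z - U) - S)
        theta = (lam + np.sqrt(lam**2 + 4.0 * rho)) / (2.0 * rho)
        Theta = (Q * theta) @ Q.T
        Z_prev = Z
        Z = weighted_soft_threshold(Theta + U, W / rho)  # sparsifying step
        U = U + Theta - Z                                # scaled dual update
        if (np.linalg.norm(Theta - Z) < tol
                and rho * np.linalg.norm(Z - Z_prev) < tol):
            break
    return Z


def fit_exponential_covariance(C, D, phis):
    """Least-squares fit of C[i, j] ~ sigma2 * exp(-D[i, j] / phi) over a grid of phi.

    For fixed phi the optimal sigma2 has a closed form; the exponential model
    itself is an illustrative assumption.
    """
    best = None
    for phi in phis:
        E = np.exp(-D / phi)
        sigma2 = np.sum(C * E) / np.sum(E * E)
        err = np.sum((C - sigma2 * E) ** 2)
        if best is None or err < best[0]:
            best = (err, sigma2, phi)
    return best[1], best[2]
```

In a two-step pipeline of this kind, step 2 would be applied to the inverse of the estimated precision matrix, with `D` holding the pairwise distances between observation locations.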