Natalia G. Berloff

Programmable k-local Ising Machines and all-optical Kolmogorov-Arnold Networks on Photonic Platforms

Aug 24, 2025

Abstract: We unify k-local Ising optimization and optical KAN function learning on a single photonic platform, establishing a critical convergence point in optical computing that enables interleaved discrete-continuous workflows. We introduce a single spatial light modulator (SLM)-centric primitive that realizes, in one stroke, all-optical k-local Ising interactions and fully optical Kolmogorov-Arnold network (KAN) layers. The central idea is to convert the structural nonlinearity of a nominally linear photonic scatterer into a per-window computational resource by adding one relay pass through the same spatial light modulator. A folded 4f relay reimages the first Fourier plane onto the SLM so that each chosen spin clique or ridge channel occupies a disjoint window with its own second-pass phase patch. Propagation remains linear in the optical field, yet the measured intensity in each window becomes a freely programmable polynomial of the clique sum or projection amplitude. This yields native, per-clique k-local couplings without nonlinear media and, in parallel, the many independent univariate nonlinearities required by KAN layers, all trainable in situ using two-frame (forward and adjoint) physical gradients. We outline implementation on spatial photonic Ising machines, injection-locked VCSEL arrays, and the Microsoft analog optical computers. In all cases the hardware change is one extra lens and a fold (or an on-chip 4f loop), enabling a minimal-overhead, massively parallel route to high-order optical Ising optimization and trainable, all-optical KAN processing.

Higher-Order Kuramoto Oscillator Network for Dense Associative Memory

Jul 29, 2025

Abstract: Networks of phase oscillators can serve as dense associative memories if they incorporate higher-order coupling beyond the classical Kuramoto model's pairwise interactions. Here we introduce a generalized Kuramoto model with combined second-harmonic (pairwise) and fourth-harmonic (quartic) coupling, inspired by dense Hopfield memory theory. Using mean-field theory and its dynamical approximation, we obtain a phase diagram for the dense associative memory model that exhibits a tricritical point at which the continuous onset of memory retrieval is supplanted by a discontinuous, hysteretic transition. In the quartic-dominated regime, the system supports bistable phase-locked states corresponding to stored memory patterns, with a sizable energy barrier between memory and incoherent states. We analytically determine this bistable region and show that the noise-induced escape time from a memory state grows exponentially with network size, indicating robust storage. Extending the theory to finite memory load, we show that higher-order couplings achieve superlinear scaling of memory capacity with system size, far exceeding the limit of pairwise-only oscillators. Large-scale simulations of the oscillator network confirm our theoretical predictions, demonstrating rapid pattern retrieval and robust storage of many phase patterns. These results bridge Kuramoto synchronization and modern Hopfield memories, pointing toward experimental realization of high-capacity, analog associative memory in oscillator systems.

Exact Spin Elimination in Ising Hamiltonians and Energy-Based Machine Learning

May 12, 2025

Abstract: We present an exact spin-elimination technique that reduces the dimensionality of both quadratic and k-local Ising Hamiltonians while preserving their original ground-state configurations. By systematically replacing each removed spin with an effective interaction among its neighbors, our method lowers the total spin count without invoking approximations or iterative recalculations. This capability is especially beneficial for hardware-constrained platforms, classical or quantum, that can directly implement multi-body interactions but have limited qubit or spin resources. We demonstrate three key advances enabled by this technique. First, we handle larger instances of benchmark problems such as Max-Cut on cubic graphs without exceeding a 2-local interaction limit. Second, we reduce qubit requirements in QAOA-based integer factorization on near-term quantum devices, thus extending the feasible range of integers to be factorized. Third, we improve memory capacity in Hopfield associative memories and enhance retrieval by suppressing spurious attractors. Our spin-elimination procedure trades local spin complexity for higher-order couplings or higher node degrees in a single pass, opening new avenues for scaling up combinatorial optimization and energy-based machine learning on near-term hardware. Finally, these results underscore that next-generation physical spin machines will likely capitalize on k-local spin Hamiltonians to offer an alternative to classical computation.
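The idea of replacing a removed spin by an effective interaction among its neighbors can be illustrated with a brute-force sketch. It is not the procedure from the paper: it assumes the energy convention E(s) = Σ_T c_T Π_{i∈T} s_i, minimizes over the removed spin exactly, and expands the result in spin monomials by a Walsh-Hadamard transform, which costs time exponential in the number of neighbors. The names `energy`, `eliminate_spin`, `ground_energy` and the toy model `original` are hypothetical.

```python
from itertools import combinations, product
from math import prod


def energy(terms, spins):
    """Energy sum_T c_T * prod_{i in T} s_i; `spins` maps site -> +1 or -1."""
    return sum(c * prod(spins[i] for i in idx) for idx, c in terms.items())


def eliminate_spin(terms, k):
    """Return a model without spin k whose energy is min over s_k of the input energy."""
    keep = {idx: c for idx, c in terms.items() if k not in idx}
    # Terms touching k form a local field B(s) multiplying s_k.
    local = {tuple(i for i in idx if i != k): c
             for idx, c in terms.items() if k in idx}
    nbrs = sorted({i for idx in local for i in idx})
    configs = list(product((-1, 1), repeat=len(nbrs)))
    # min over s_k of s_k * B equals -|B|; tabulate it for every neighbour state.
    values = [-abs(energy(local, dict(zip(nbrs, cfg)))) for cfg in configs]
    # Walsh-Hadamard expansion of the table into multilinear spin monomials.
    for r in range(len(nbrs) + 1):
        for pos in combinations(range(len(nbrs)), r):
            coeff = sum(v * prod(cfg[p] for p in pos)
                        for v, cfg in zip(values, configs)) / len(configs)
            if abs(coeff) > 1e-12:
                idx = tuple(nbrs[p] for p in pos)
                keep[idx] = keep.get(idx, 0.0) + coeff
    return keep


def ground_energy(terms, sites):
    """Exhaustive minimum of the energy over all spin assignments on `sites`."""
    return min(energy(terms, dict(zip(sites, cfg)))
               for cfg in product((-1, 1), repeat=len(sites)))


# Toy model (hypothetical): spin 0 has a local field and three neighbours.
original = {(0,): 0.3, (0, 1): 1.0, (0, 2): -0.8, (0, 3): 0.6,
            (1, 2): 0.5, (2, 3): -0.4}
reduced = eliminate_spin(original, 0)
print(sorted(reduced.items()))
print(ground_energy(original, [0, 1, 2, 3]), ground_energy(reduced, [1, 2, 3]))
```

In this toy case the reduced model has one spin fewer and the same minimum energy, but it gains a 3-local term on spins 1, 2 and 3, which is the trade of spin count for higher-order couplings that the abstract describes.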

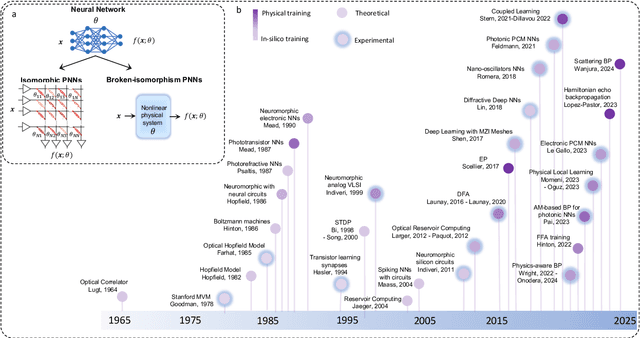

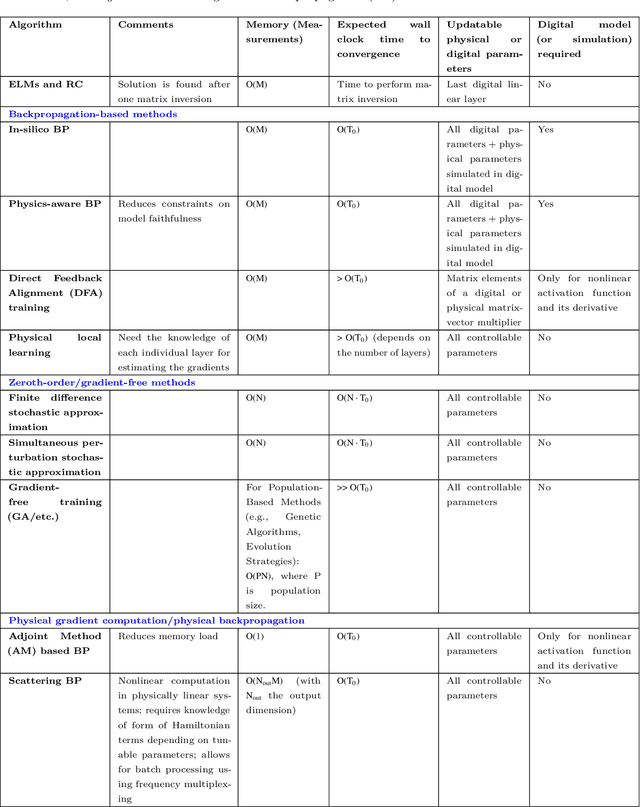

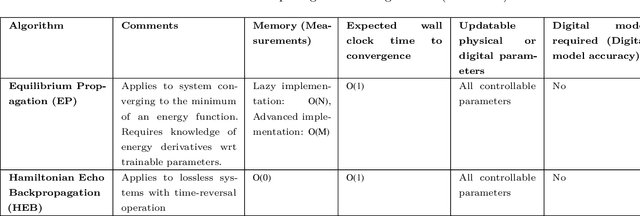

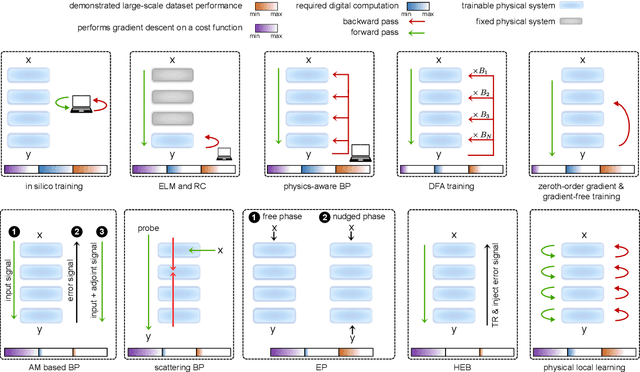

Training of Physical Neural Networks

Jun 05, 2024

Abstract: Physical neural networks (PNNs) are a class of neural-like networks that leverage the properties of physical systems to perform computation. While PNNs are so far a niche research area with small-scale laboratory demonstrations, they are arguably one of the most underappreciated important opportunities in modern AI. Could we train AI models 1000x larger than current ones? Could we do this and also have them perform inference locally and privately on edge devices, such as smartphones or sensors? Research over the past few years has shown that the answer to all these questions is likely "yes, with enough research": PNNs could one day radically change what is possible and practical for AI systems. Doing so will, however, require rethinking both how AI models work and how they are trained, primarily by considering the problems through the constraints of the underlying hardware physics. To train PNNs at large scale, many methods, including backpropagation-based and backpropagation-free approaches, are now being explored. These methods have various trade-offs, and so far no method has been shown to match the scale and performance of the backpropagation algorithm widely used in deep learning today. However, this is rapidly changing, and a diverse ecosystem of training techniques provides clues for how PNNs may one day be used both to create more efficient realizations of current-scale AI models and to enable unprecedented-scale models.

Efficient Computation Using Spatial-Photonic Ising Machines: Utilizing Low-Rank and Circulant Matrix Constraints

Jun 03, 2024

Abstract: We explore the potential of spatial-photonic Ising machines (SPIMs) to address computationally intensive Ising problems that employ low-rank and circulant coupling matrices. Our results indicate that the performance of SPIMs is critically affected by the rank and precision of the coupling matrices. By developing and assessing advanced decomposition techniques, we expand the range of problems SPIMs can solve, overcoming the limitations of traditional Mattis-type matrices. Our approach accommodates a diverse array of coupling matrices, including those with inherently low ranks, applicable to complex NP-complete problems. We explore the practical benefits of low-rank approximation in optimization tasks, particularly in financial optimization, to demonstrate the real-world applications of SPIMs. Finally, we evaluate the computational limitations imposed by SPIM hardware precision and suggest strategies to optimize the performance of these systems within these constraints.
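One way to see why rank matters is that a Mattis-type coupling is a single outer product, J_ij = ξ_i ξ_j, and a rank-K symmetric coupling matrix can be written as a weighted sum of K such terms. The sketch below is an illustration under assumptions, not the decomposition technique from the paper: it assumes the energy convention E = -(1/2) s^T J s, keeps the constant diagonal contribution, and uses hypothetical names (`coupling_from_mattis`, `energy_full`, `energy_low_rank`) and random toy data. It checks that the energy computed from K projections agrees with the full quadratic form.

```python
import random


def coupling_from_mattis(weights, vectors):
    """Build J = sum_k w_k * xi_k xi_k^T from K Mattis-type vectors."""
    n = len(vectors[0])
    return [[sum(w * v[i] * v[j] for w, v in zip(weights, vectors))
             for j in range(n)] for i in range(n)]


def energy_full(J, s):
    """Ising energy -(1/2) s^T J s evaluated with the full N x N matrix."""
    n = len(s)
    return -0.5 * sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def energy_low_rank(weights, vectors, s):
    """Same energy from K projections: -(1/2) sum_k w_k (xi_k . s)^2."""
    return -0.5 * sum(w * sum(vi * si for vi, si in zip(v, s)) ** 2
                      for w, v in zip(weights, vectors))


# Toy data (hypothetical): 8 spins, rank-3 coupling with mixed-sign weights.
random.seed(0)
n, rank = 8, 3
vectors = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(rank)]
weights = [1.0, -0.5, 0.25]
J = coupling_from_mattis(weights, vectors)
s = [random.choice((-1, 1)) for _ in range(n)]
print(energy_full(J, s), energy_low_rank(weights, vectors, s))
```

The low-rank form needs K inner products of length N rather than N² multiplications, so in this picture the number of rank-one terms sets the cost of evaluating the energy.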

Large-scale Sustainable Search on Unconventional Computing Hardware

Apr 06, 2021

Abstract: Since the advent of the Internet, quantifying the relative importance of web pages has been at the core of search engine methods. According to one algorithm, PageRank, the worldwide web structure is represented by the Google matrix, whose principal eigenvector components assign a numerical value to web pages for their ranking. Finding such a dominant eigenvector for an ever-growing number of web pages becomes a computationally intensive task incompatible with Moore's Law. We demonstrate that special-purpose optical machines, such as networks of optical parametric oscillators, lasers, and gain-dissipative condensates, may aid in accelerating the reliable reconstruction of principal eigenvectors of real-life web graphs. We discuss the feasibility of simulating the PageRank algorithm on large Google matrices using such unconventional hardware. We offer alternative rankings based on the minimisation of spin Hamiltonians. Our estimates show that special-purpose optical machines may provide dramatic improvements in power consumption over classical computing architectures.
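For reference, the principal eigenvector of a Google matrix is conventionally found on classical hardware by power iteration. The following is a minimal sketch of that baseline, not of the optical approach in the paper. The damping factor 0.85 is the customary textbook choice rather than a value from the abstract, and the four-page link graph and the names `google_matrix` and `power_iteration` are hypothetical.

```python
def google_matrix(links, n, damping=0.85):
    """Column-stochastic Google matrix; links[j] lists the pages that page j links to."""
    G = [[(1.0 - damping) / n for _ in range(n)] for _ in range(n)]
    for j in range(n):
        targets = links.get(j, [])
        if targets:
            for i in targets:
                G[i][j] += damping / len(targets)
        else:
            # Dangling page: spread its weight uniformly over all pages.
            for i in range(n):
                G[i][j] += damping / n
    return G


def power_iteration(G, tol=1e-12, max_iter=1000):
    """Repeatedly apply G to a probability vector until it stops changing."""
    n = len(G)
    x = [1.0 / n] * n
    for _ in range(max_iter):
        y = [sum(G[i][j] * x[j] for j in range(n)) for i in range(n)]
        total = sum(y)
        y = [v / total for v in y]
        if sum(abs(a - b) for a, b in zip(x, y)) < tol:
            return y
        x = y
    return x


# Toy web graph (hypothetical): pages 0, 1 and 3 all link to page 2.
links = {0: [1, 2], 1: [2], 2: [0], 3: [2]}
G = google_matrix(links, 4)
rank = power_iteration(G)
print(rank)
```

Each iteration costs one matrix-vector product, which is the operation that grows with the size of the web graph.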

XY Neural Networks

Mar 31, 2021

Abstract: The classical XY model is a lattice model of statistical mechanics notable for its universality across a rich hierarchy of optical, laser, and condensed matter systems. We show how to build complex structures for machine learning based on the XY model's nonlinear blocks. The final target is to reproduce deep learning architectures and perform, with high quality, the complicated tasks usually attributed to them: speech recognition, visual processing, or other complex types of classification. We develop a robust and transparent approach for constructing such models that has universal applicability (i.e. it is not strongly tied to any particular physical system) and allows many possible extensions while preserving the simplicity of the methodology.
