Naim Dahnoun

Advanced Millimeter-Wave Radar System for Real-Time Multiple Human Tracking and Fall Detection

Mar 08, 2024

Abstract: This study explores an indoor system for tracking multiple humans and detecting falls, employing three Millimeter-Wave radars from Texas Instruments placed on x-y-z surfaces. Compared to wearables and camera methods, Millimeter-Wave radar is not plagued by mobility inconvenience, lighting conditions, or privacy issues. We establish a real-time framework to integrate signals received from these radars, allowing us to track the position and body status of human targets non-intrusively. To ensure the overall accuracy of our system, we conduct an initial evaluation of radar characteristics, covering aspects such as resolution, interference between radars, and coverage area. Additionally, we introduce innovative strategies, including Dynamic DBSCAN clustering based on signal energy levels, a probability matrix for enhanced target tracking, target status prediction for fall detection, and a feedback loop for noise reduction. We conduct an extensive evaluation using over 300 minutes of data, which equates to approximately 360,000 frames. Our prototype system exhibits remarkable performance, achieving a precision of 98.9% for tracking a single target and 96.5% and 94.0% for tracking two and three targets in human tracking scenarios, respectively. Moreover, in the field of human fall detection, the system demonstrates a high accuracy of 98.2%, underscoring its effectiveness in distinguishing falls from other statuses.
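The abstract names "Dynamic DBSCAN clustering based on signal energy levels" without giving the rule. The sketch below is one possible reading, not the authors' method: points are split into energy bands and each band is clustered by plain DBSCAN with its own `eps` and `min_samples`. The function names, the band thresholds, and the parameter values are all hypothetical.

```python
import math

def dbscan(points, eps, min_samples):
    """Plain DBSCAN; returns one label per point (-1 means noise)."""
    n = len(points)
    labels = [None] * n  # None = not yet visited

    def neighbours(i):
        # Brute-force radius query (includes the point itself).
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # provisionally noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nb = neighbours(j)
            if len(nb) >= min_samples:  # j is a core point: keep expanding
                queue.extend(nb)
    return labels

def dynamic_dbscan(points, energies, levels):
    """ASSUMPTION: cluster each energy band with its own parameters.

    levels: (min_energy, eps, min_samples) tuples, highest min_energy first.
    """
    labels = [-1] * len(points)
    next_id = 0
    upper = math.inf
    for min_energy, eps, min_samples in levels:
        idx = [i for i, e in enumerate(energies) if min_energy <= e < upper]
        band = dbscan([points[i] for i in idx], eps, min_samples)
        for i, lab in zip(idx, band):
            if lab >= 0:
                labels[i] = next_id + lab
        next_id += max(band, default=-1) + 1
        upper = min_energy
    return labels

# Illustrative data only: a strong blob, a weaker blob, one weak stray point.
points = [(0, 0), (0.1, 0), (0, 0.1), (0.1, 0.1),
          (3, 3), (3.1, 3), (3, 3.1), (10, 10)]
energies = [30, 30, 30, 30, 12, 12, 12, 5]
levels = [(20, 0.5, 2), (10, 0.3, 3), (0, 0.2, 4)]
print(dynamic_dbscan(points, energies, levels))
```

A consequence of this banding choice is that clusters never merge across bands; a real system might instead vary the parameters per frame or per region.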

MiliPoint: A Point Cloud Dataset for mmWave Radar

Sep 23, 2023

Abstract: Millimetre-wave (mmWave) radar has emerged as an attractive and cost-effective alternative for human activity sensing compared to traditional camera-based systems. mmWave radars are also non-intrusive, providing better protection for user privacy. However, as a Radio Frequency (RF) based technology, mmWave radars rely on capturing reflected signals from objects, making them more prone to noise compared to cameras. This raises an intriguing question for the deep learning community: Can we develop more effective point set-based deep learning methods for such attractive sensors? To answer this question, our work, termed MiliPoint, delves into this idea by providing a large-scale, open dataset for the community to explore how mmWave radars can be utilised for human activity recognition. Moreover, MiliPoint stands out as it is larger in size than existing datasets, has more diverse human actions represented, and encompasses all three key tasks in human activity recognition. We have also established a range of point-based deep neural networks such as DGCNN, PointNet++ and PointTransformer, on MiliPoint, which can serve to set the ground baseline for further development.

Millimetre-wave Radar for Low-Cost 3D Imaging: A Performance Study

Jan 31, 2023

Abstract: Millimetre-wave (mmWave) radars can generate 3D point clouds to represent objects in the scene. However, the accuracy and density of the generated point cloud can be lower than those of a laser sensor. Although researchers have used mmWave radars for various applications, there are few quantitative evaluations of the quality of the point cloud generated by the radar, and there is no standard for how this quality can be assessed. This work aims to fill the gap in the literature. A radar simulator is built to evaluate the most common data processing chains of 3D point cloud construction and to examine the capability of the mmWave radar as a 3D imaging sensor under various factors. It will be shown that the radar detection can be noisy and have an imbalanced distribution. To address the problem, a novel super-resolution point cloud construction (SRPC) algorithm is proposed to improve the spatial resolution of the point cloud and is shown to be able to produce a more natural point cloud and reduce outliers.

Real-Time Stereo Vision for Road Surface 3-D Reconstruction

Aug 29, 2018

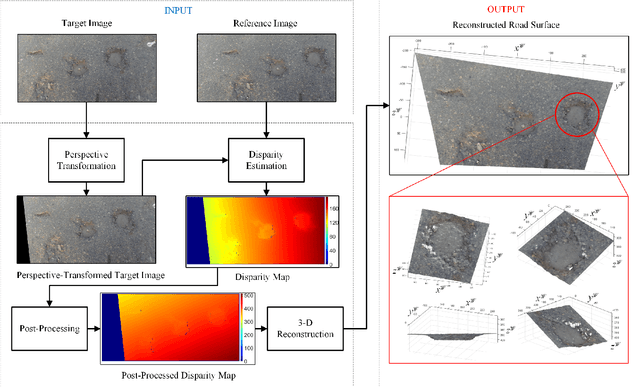

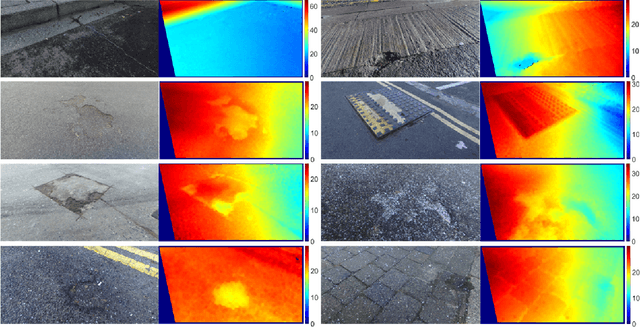

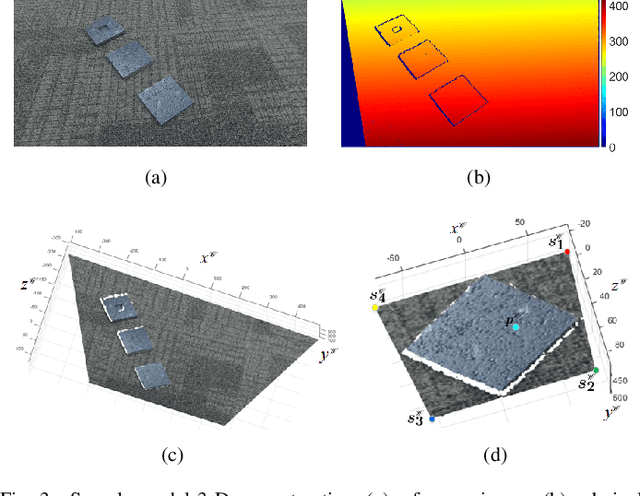

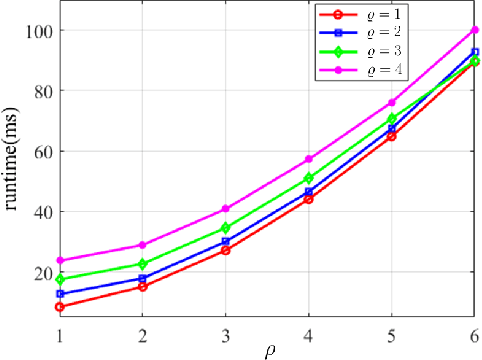

Abstract: Stereo vision techniques have been widely used in civil engineering to acquire 3-D road data. The two important factors of stereo vision are accuracy and speed. However, it is very challenging to achieve both of them simultaneously and therefore the main aim of developing a stereo vision system is to improve the trade-off between these two factors. In this paper, we present a real-time stereo vision system used for road surface 3-D reconstruction. The proposed system is developed from our previously published 3-D reconstruction algorithm where the perspective view of the target image is first transformed into the reference view, which not only increases the disparity accuracy but also improves the processing speed. Then, the correlation cost between each pair of blocks is computed and stored in two 3-D cost volumes. To adaptively aggregate the matching costs from neighbourhood systems, bilateral filtering is performed on the cost volumes. This greatly reduces the ambiguities during stereo matching and further improves the precision of the estimated disparities. Finally, the subpixel resolution is achieved by conducting a parabola interpolation and the subpixel disparity map is used to reconstruct the 3-D road surface. The proposed algorithm is implemented on an NVIDIA GTX 1080 GPU for real-time performance. The experimental results illustrate that the reconstruction accuracy is around 3 mm.
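The parabola interpolation step mentioned above can be illustrated with the standard three-point fit: a parabola is passed through the matching score at the best integer disparity and its two neighbours, and the vertex gives the subpixel estimate. This is a minimal sketch of that textbook formula, not the paper's GPU implementation; the function name and the sample scores are hypothetical.

```python
def subpixel_disparity(scores, d):
    """Refine integer disparity d using scores[d - 1], scores[d], scores[d + 1].

    The same vertex formula applies whether d is a correlation maximum
    or a cost minimum.
    """
    if d <= 0 or d >= len(scores) - 1:
        return float(d)  # no neighbour on one side: keep the integer value
    s_prev, s_best, s_next = scores[d - 1], scores[d], scores[d + 1]
    denom = s_prev - 2.0 * s_best + s_next
    if denom == 0:
        return float(d)  # flat neighbourhood: the parabola is degenerate
    return d + 0.5 * (s_prev - s_next) / denom

# Synthetic correlation curve (illustrative only) whose true peak is at 5.3.
scores = [1.0 - 0.1 * (d - 5.3) ** 2 for d in range(10)]
best = max(range(len(scores)), key=scores.__getitem__)
refined = subpixel_disparity(scores, best)
```

Because the synthetic curve is itself a parabola, `refined` recovers the true peak up to floating-point rounding; on real matching scores the fit is only an approximation.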

A Novel Disparity Transformation Algorithm for Road Segmentation

Aug 08, 2018

Abstract: The disparity information provided by stereo cameras has enabled advanced driver assistance systems to estimate road area more accurately and effectively. In this paper, a novel disparity transformation algorithm is proposed to extract road areas from dense disparity maps by making the disparity values of the road pixels similar. The transformation is achieved using two parameters: roll angle and fitted disparity value with respect to each row. To achieve a better processing efficiency, golden section search and dynamic programming are utilised to estimate the roll angle and the fitted disparity value, respectively. By performing a rotation around the estimated roll angle, the disparity distribution of each row becomes very compact. This further improves the accuracy of the road model estimation, as demonstrated by the various experimental results in this paper. Finally, Otsu's thresholding method is applied to the transformed disparity map and the roads can be accurately segmented at pixel level.
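Golden section search, which the abstract uses for the roll angle, minimises a unimodal one-dimensional function by shrinking a bracketing interval by a constant ratio at every step, reusing one function evaluation each time. The sketch below shows the generic algorithm; the objective `toy_energy` and the search interval are hypothetical stand-ins, since the abstract does not state the energy actually minimised.

```python
import math

def golden_section_search(f, lo, hi, tol=1e-6):
    """Return an approximate minimiser of a unimodal f on [lo, hi]."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc < fd:
            # Minimum lies in [a, d]; the old c becomes the new d.
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:
            # Minimum lies in [c, b]; the old d becomes the new c.
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return (a + b) / 2

# ASSUMPTION: a toy energy with its minimum at a roll angle of 0.05 rad.
def toy_energy(theta):
    return (theta - 0.05) ** 2

roll = golden_section_search(toy_energy, -0.5, 0.5)
```

Each iteration costs a single evaluation of the objective, which is the property that makes the method attractive when the objective is expensive to compute.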

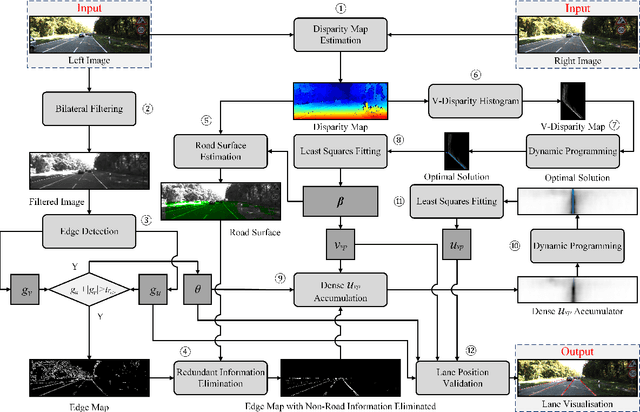

Real-time stereo vision-based lane detection system

Jul 08, 2018

Abstract: The detection of multiple curved lane markings on a non-flat road surface is still a challenging task for automotive applications. To make an improvement, the depth information can be used to greatly enhance the robustness of the lane detection systems. The proposed system in this paper is developed from our previous work where the dense vanishing point Vp is estimated globally to assist the detection of multiple curved lane markings. However, the outliers in the optimal solution may severely affect the accuracy of the least squares fitting when estimating Vp. Therefore, in this paper we use Random Sample Consensus to update the inliers and outliers iteratively until the fraction of the number of inliers versus the total number exceeds our pre-set threshold. This significantly helps the system to overcome some suddenly changing conditions. Furthermore, we propose a novel lane position validation approach which provides a piecewise weight based on Vp and the gradient to reduce the gradient magnitude of the non-lane candidates. Then, we compute the energy of each possible solution and select all satisfying lane positions for visualisation. The proposed system is implemented on a heterogeneous system which consists of an Intel Core i7-4720HQ CPU and an NVIDIA GTX 970M GPU. A processing speed of 143 fps has been achieved, which is over 38 times faster than our previous work. Also, in order to evaluate the detection precision, we tested 2495 frames with 5361 lanes from the KITTI database (1637 lanes more than our previous experiment). It is shown that the overall successful detection rate is improved from 98.7% to 99.5%.
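The idea of combining Random Sample Consensus with least squares fitting, stopping once the inlier fraction passes a pre-set threshold, can be sketched on a simple straight-line model. This is an illustration of the general technique only: the paper fits the vanishing point Vp, whereas the line model, function names, tolerance, and data below are hypothetical.

```python
import random

def fit_line(points):
    """Ordinary least squares fit of y = m * x + b."""
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    m = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    return m, (sy - m * sx) / n

def ransac_line(points, inlier_tol, min_inlier_ratio, max_iters=1000, seed=0):
    """Sample minimal sets until the inlier fraction reaches the threshold,
    then refit by least squares on the best inlier set found."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(max_iters):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # vertical pair cannot define y = m * x + b
        m = (y2 - y1) / (x2 - x1)
        b = y1 - m * x1
        inliers = [(x, y) for x, y in points
                   if abs(y - (m * x + b)) <= inlier_tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
        if len(inliers) / len(points) >= min_inlier_ratio:
            break  # pre-set inlier fraction reached
    if len(best_inliers) < 2:
        raise ValueError("no usable model found")
    return fit_line(best_inliers), best_inliers

# Illustrative data: 20 points on y = 2x + 1 plus four gross outliers.
data = [(x, 2 * x + 1) for x in range(20)]
data += [(3, 40), (7, -30), (12, 100), (15, -50)]
(m, b), inliers = ransac_line(data, inlier_tol=0.5, min_inlier_ratio=0.8)
```

A plain least squares fit over all 24 points would be pulled away from the true line by the outliers; restricting the final fit to the consensus set avoids that.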

* 24 pages, 10 figures

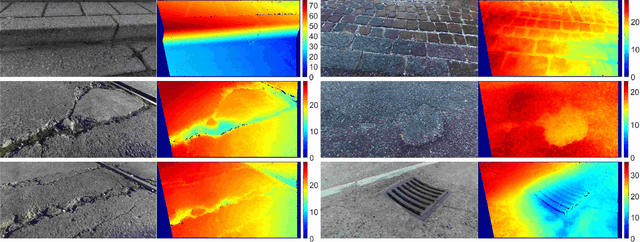

Road surface 3d reconstruction based on dense subpixel disparity map estimation

Jul 05, 2018

Abstract: Various 3D reconstruction methods have enabled civil engineers to detect damage on a road surface. To achieve the millimetre accuracy required for road condition assessment, a disparity map with subpixel resolution needs to be used. However, none of the existing stereo matching algorithms are especially suitable for the reconstruction of the road surface. Hence in this paper, we propose a novel dense subpixel disparity estimation algorithm with high computational efficiency and robustness. This is achieved by first transforming the perspective view of the target frame into the reference view, which not only increases the accuracy of the block matching for the road surface but also improves the processing speed. The disparities are then estimated iteratively using our previously published algorithm where the search range is propagated from three estimated neighbouring disparities. Since the search range is obtained from the previous iteration, errors may occur when the propagated search range is not sufficient. Therefore, a correlation maxima verification is performed to rectify this issue, and the subpixel resolution is achieved by conducting a parabola interpolation enhancement. Furthermore, a novel disparity global refinement approach developed from the Markov Random Fields and Fast Bilateral Stereo is introduced to further improve the accuracy of the estimated disparity map, where disparities are updated iteratively by minimising the energy function that is related to their interpolated correlation polynomials. The algorithm is implemented in C with near real-time performance. The experimental results illustrate that the absolute error of the reconstruction varies from 0.1 mm to 3 mm.
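Why millimetre accuracy calls for subpixel disparities can be seen from the standard triangulation relation for a rectified stereo pair. The symbols are not from the abstract and are assumptions here: $f$ is the focal length in pixels, $B$ the baseline, $d$ the disparity in pixels, and $Z$ the depth.

$$
Z = \frac{fB}{d},
\qquad
\frac{\partial Z}{\partial d} = -\frac{fB}{d^{2}} = -\frac{Z^{2}}{fB},
\qquad
|\Delta Z| \approx \frac{Z^{2}}{fB}\,|\Delta d|
$$

With purely illustrative values (not taken from the paper) of $Z = 1$ m, $B = 0.2$ m and $f = 1000$ px, the factor $Z^{2}/(fB)$ is 5 mm per pixel, so a disparity error of one full pixel already costs about 5 mm of depth, while an error of 0.1 px costs about 0.5 mm.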

* 11 pages, 16 figures, IEEE Transactions on Image Processing
