Nadezhda Semenova

Impact of internal noise on convolutional neural networks

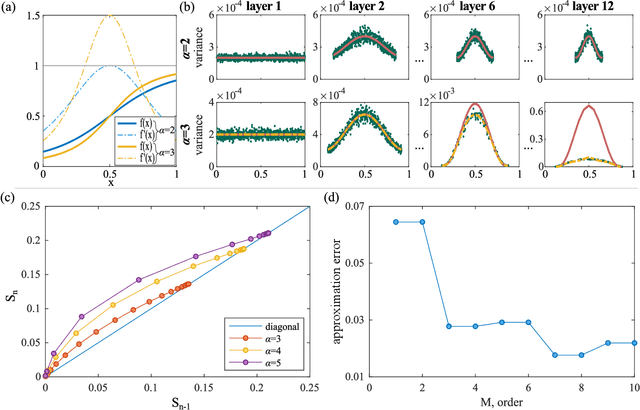

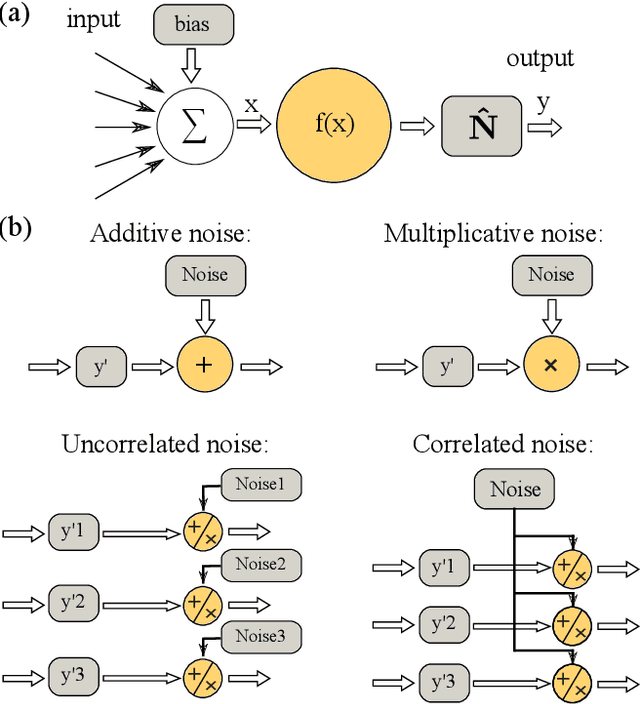

May 10, 2025

Abstract: In this paper, we investigate the impact of noise on a simplified trained convolutional network. The types of noise studied originate from a real optical implementation of a neural network, but we generalize these types to enhance the applicability of our findings on a broader scale. The noise types considered include additive and multiplicative noise, which relate to how noise affects individual neurons, as well as correlated and uncorrelated noise, which pertain to the influence of noise across a layer. We demonstrate that the propagation of uncorrelated noise primarily depends on the statistical properties of the connection matrices. Specifically, the mean value of the connection matrix following the layer impacted by noise governs the propagation of correlated additive noise, while the mean of its square contributes to the accumulation of uncorrelated noise. Additionally, we propose an analytical assessment of the noise level in the network's output signal, which shows a strong correlation with the results of numerical simulations.
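The role of the mean and the mean square of the connection matrix can be illustrated with a small numerical sketch. It is not taken from the paper: it assumes a single linear layer `y = W @ (x + xi)` with additive Gaussian noise `xi` of standard deviation `sigma`, and the names `output_noise_variance`, `W`, and `sigma` are hypothetical. Under these assumptions, the variance reaching output `j` is `sigma**2 * N * mean(W[j]**2)` for uncorrelated noise and `sigma**2 * (N * mean(W[j]))**2` for noise shared by all `N` neurons of the layer.

```python
import numpy as np

def output_noise_variance(W, sigma, correlated, trials=20000, seed=0):
    """Empirical variance of the noise term W @ xi at each output.

    Assumption: additive noise on a linear layer, so the signal x
    drops out and only W @ xi has to be simulated.
    """
    rng = np.random.default_rng(seed)
    n_in = W.shape[1]
    if correlated:
        # One shared noise value per trial, copied to every neuron in the layer.
        xi = np.repeat(rng.normal(0.0, sigma, size=(trials, 1)), n_in, axis=1)
    else:
        # An independent noise value for every neuron in every trial.
        xi = rng.normal(0.0, sigma, size=(trials, n_in))
    return (xi @ W.T).var(axis=0)

# Hypothetical connection matrix: 200 noisy neurons feeding 5 outputs.
rng = np.random.default_rng(1)
W = rng.uniform(0.0, 1.0, size=(5, 200))
sigma = 0.1
N = W.shape[1]

# Analytical estimates from the row statistics of W.
predicted_uncorrelated = sigma**2 * N * (W**2).mean(axis=1)
predicted_correlated = sigma**2 * (N * W.mean(axis=1))**2

print(output_noise_variance(W, sigma, correlated=False), predicted_uncorrelated)
print(output_noise_variance(W, sigma, correlated=True), predicted_correlated)
```

In this toy setting the correlated contribution grows with the square of the row sum, so a connection matrix with near-zero mean would suppress it, while the uncorrelated contribution depends only on the mean square.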

Internal noise in hardware deep and recurrent neural networks helps with learning

Apr 18, 2025

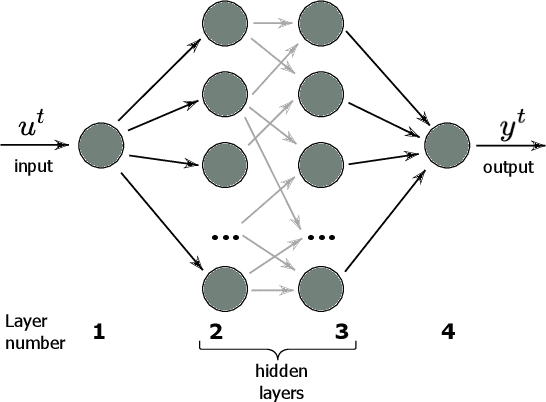

Abstract: Recently, the field of hardware neural networks has been actively developing, where neurons and their connections are not simulated on a computer but are implemented at the physical level, transforming the neural network into a tangible device. In this paper, we investigate how internal noise during the training of neural networks affects the final performance of recurrent and deep neural networks. We consider feedforward networks (FNN) and echo state networks (ESN) as examples. The types of noise examined originated from a real optical implementation of a neural network. However, these types were subsequently generalized to enhance the applicability of our findings on a broader scale. The noise types considered include additive and multiplicative noise, which depend on how noise influences each individual neuron, and correlated and uncorrelated noise, which pertain to the impact of noise on groups of neurons (such as the hidden layer of FNNs or the reservoir of ESNs). In this paper, we demonstrate that, in most cases, both deep and echo state networks benefit from internal noise during training, as it enhances their resilience to noise. Consequently, the testing performance at the same noise intensities is significantly higher for networks trained with noise than for those trained without it. Notably, only multiplicative correlated noise during training has almost no impact on either deep or recurrent networks.

Impact of white noise in artificial neural networks trained for classification: performance and noise mitigation strategies

Nov 07, 2024

Abstract: In recent years, the hardware implementation of neural networks, leveraging physical coupling and analog neurons, has substantially increased in relevance. Such nonlinear and complex physical networks provide significant advantages in speed and energy efficiency, but are potentially susceptible to internal noise when compared to digital emulations of such networks. In this work, we consider how additive and multiplicative Gaussian white noise on the neuronal level can affect the accuracy of the network when it is applied to specific tasks and includes a softmax function in the readout layer. We adapt several noise reduction techniques to the essential setting of classification tasks, which represent a large fraction of neural network computing. We find that these adjusted concepts are highly effective in mitigating the detrimental impact of noise.

Impact of white Gaussian internal noise on analog echo-state neural networks

May 13, 2024

Abstract: In recent years, more and more works have appeared devoted to the analog (hardware) implementation of artificial neural networks, in which neurons and the connections between them are based not on computer calculations, but on physical principles. Such networks offer improved energy efficiency and, in some cases, scalability, but may be susceptible to internal noise. This paper studies the influence of noise on the functioning of recurrent networks using the example of trained echo state networks (ESNs). The most common reservoir connection matrices were chosen as the ESN topologies: random uniform and band matrices with different connectivity. White Gaussian noise was chosen as the perturbation; depending on how it is introduced, it is additive or multiplicative, as well as correlated or uncorrelated. In the paper, we show that the propagation of noise in the reservoir is mainly controlled by the statistical properties of the output connection matrix, namely the mean and the mean square. Depending on these values, more correlated or uncorrelated noise accumulates in the network. We also show that there are conditions under which even noise with an intensity of $10^{-20}$ is already enough to completely lose the useful signal. In the article, we show which types of noise are most critical for networks with different activation functions (hyperbolic tangent, sigmoid and linear) and for the case in which the network is self-closed.

Symbiosis of an artificial neural network and models of biological neurons: training and testing

Feb 03, 2023

Abstract: In this paper we show the possibility of creating and identifying the features of an artificial neural network (ANN) which consists of mathematical models of biological neurons. The FitzHugh-Nagumo (FHN) system is used as an example of a model demonstrating simplified neuron activity. First, in order to reveal how biological neurons can be embedded within an ANN, we train the ANN with nonlinear neurons to solve a basic image recognition problem with the MNIST database; next, we describe how FHN systems can be introduced into this trained ANN. Finally, we show that an ANN with FHN systems inside can be successfully trained and that its accuracy increases. This work opens up great opportunities for analog neural networks, in which artificial neurons can be replaced by biological ones.

Simple method for detecting sleep episodes in rats ECoG using machine learning

Feb 02, 2023

Abstract: In this paper we propose a new method for the automatic recognition of the state of behavioral sleep (BS) and waking state (WS) in freely moving rats using their electrocorticographic (ECoG) data. Three-channel ECoG signals were recorded from frontal left, frontal right and occipital right cortical areas. We employed a simple artificial neural network (ANN), in which the mean values and standard deviations of ECoG signals from two or three channels were used as inputs for the ANN. Results of wavelet-based recognition of BS/WS in the same data were used to train the ANN and evaluate the correctness of our classifier. We tested different combinations of ECoG channels for detecting BS/WS. Our results showed that the accuracy of ANN classification did not depend on the ECoG channel. For any ECoG channel, networks were trained on one rat and applied to another rat with an accuracy of at least 80%. It is important that we used a very simple network topology to achieve a relatively high accuracy of classification. Our classifier was based on a simple linear combination of input signals with some weights, and these weights could be replaced by the averaged weights of all trained ANNs without decreases in classification accuracy. In all, we introduce a new sleep recognition method that does not require additional network training. It is enough to know the coefficients and the equations suggested in this paper. The proposed method showed very fast performance and simple computations; therefore, it could be used in real-time experiments. It might be in high demand in preclinical studies in rodents that require vigilance control or monitoring of sleep-wake patterns.

Noise mitigation strategies in physical feedforward neural networks

Apr 20, 2022

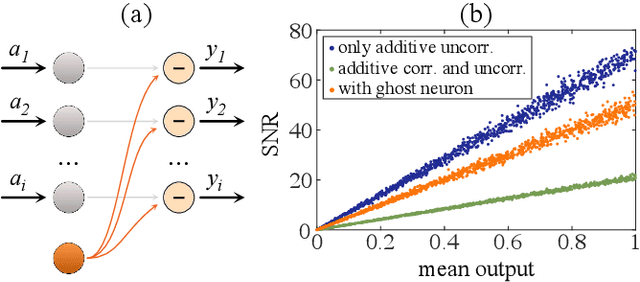

Abstract: Physical neural networks are promising candidates for next-generation artificial intelligence hardware. In such architectures, neurons and connections are physically realized and do not leverage digital concepts, i.e. a practically infinite signal-to-noise ratio. They are therefore prone to noise, and based on analytical derivations we here introduce connectivity topologies, ghost neurons, and pooling as noise mitigation strategies. Finally, we demonstrate the effectiveness of the combined methods based on a fully trained neural network classifying the MNIST handwritten digits.

The general aspects of noise in analogue hardware deep neural networks

Mar 12, 2021

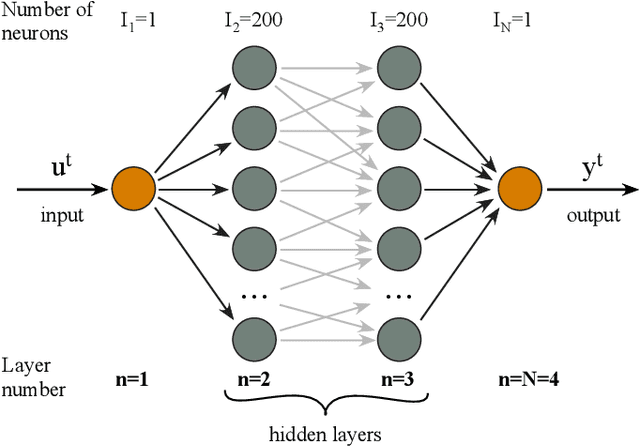

Abstract: Deep neural networks unlocked a vast range of new applications by solving tasks of which many were previously deemed as reserved to higher human intelligence. One of the developments enabling this success was a boost in computing power provided by special purpose hardware, such as graphic or tensor processing units. However, these do not leverage fundamental features of neural networks like parallelism and analog state variables. Instead, they emulate neural networks relying on computing power, which results in unsustainable energy consumption and comparatively low speed. Fully parallel and analogue hardware promises to overcome these challenges, yet the impact of analogue neuron noise and its propagation, i.e. accumulation, threatens rendering such approaches inept. Here, we analyse for the first time the propagation of noise in parallel deep neural networks comprising noisy nonlinear neurons. We develop an analytical treatment for both symmetric networks, to highlight the underlying mechanisms, and networks trained with back propagation. We find that noise accumulation is generally bound, and adding additional network layers does not worsen the signal to noise ratio beyond this limit. Most importantly, noise accumulation can be suppressed entirely when neuron activation functions have a slope smaller than unity. We therefore developed the framework for noise of deep neural networks implemented in analog systems, and identify criteria allowing engineers to design noise-resilient novel neural network hardware.

Fundamental aspects of noise in analog-hardware neural networks

Jul 21, 2019

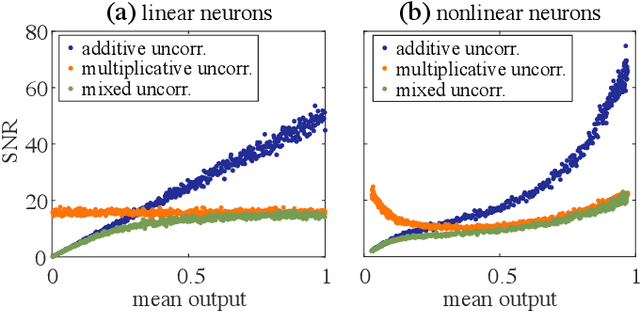

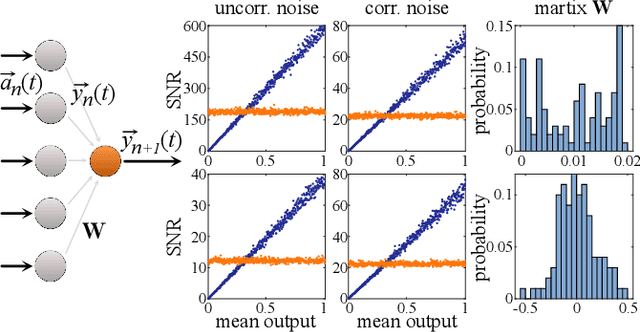

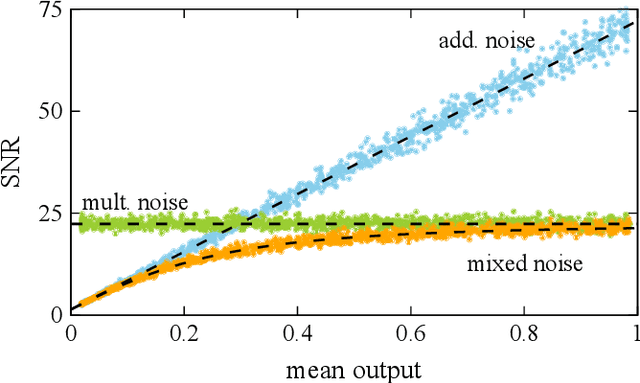

Abstract: We study and analyze the fundamental aspects of noise propagation in recurrent as well as deep, multi-layer networks. The main focus of our study is neural networks in analogue hardware, yet the methodology provides insight for networks in general. The system under study consists of noisy linear nodes, and we investigate the signal-to-noise ratio at the network's outputs, which is the upper limit to such a system's computing accuracy. We consider additive and multiplicative noise, which can be purely local as well as correlated across populations of neurons. This covers the chief internal perturbations of hardware networks, and noise amplitudes were obtained from a physically implemented recurrent neural network and therefore correspond to a real-world system. Analytic solutions agree exceptionally well with numerical data, enabling clear identification of the most critical components and aspects for noise management. Focusing on linear nodes isolates the impact of network connections and allows us to derive strategies for mitigating noise. Our work is the starting point in addressing this aspect of analogue neural networks, and our results identify notoriously sensitive points while simultaneously highlighting the robustness of such computational systems.
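The four noise classes named in the abstract (additive or multiplicative, local or correlated across a population) can be written down compactly. The sketch below is an illustration only, not the authors' code: the function name `apply_noise`, its parameters, and the multiplicative form `x * (1 + xi)` are assumptions chosen for clarity, with `xi` drawn from a zero-mean Gaussian of standard deviation `sigma`.

```python
import numpy as np

def apply_noise(x, sigma, kind, scope, rng):
    """Return a noisy copy of the node outputs x (1-D array).

    kind:  "additive" or "multiplicative"
    scope: "uncorrelated" (independent value per node) or
           "correlated" (one value shared by the whole population)
    """
    if scope == "uncorrelated":
        xi = rng.normal(0.0, sigma, size=x.shape)
    elif scope == "correlated":
        # A single draw, copied to every node in the population.
        xi = np.full(x.shape, rng.normal(0.0, sigma))
    else:
        raise ValueError(f"unknown scope: {scope!r}")

    if kind == "additive":
        return x + xi
    if kind == "multiplicative":
        # Assumed convention: noise scales with the node's own output.
        return x * (1.0 + xi)
    raise ValueError(f"unknown kind: {kind!r}")

rng = np.random.default_rng(0)
x = np.array([0.0, 1.0, 2.0])
for kind in ("additive", "multiplicative"):
    for scope in ("uncorrelated", "correlated"):
        print(kind, scope, apply_noise(x, 0.1, kind, scope, rng))
```

With this convention, a node whose output is zero is unaffected by multiplicative noise, while additive noise perturbs it regardless of its signal level.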
