The general aspects of noise in analogue hardware deep neural networks


Mar 12, 2021

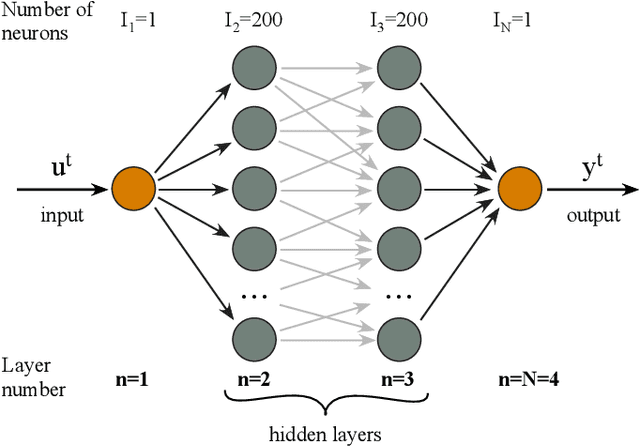

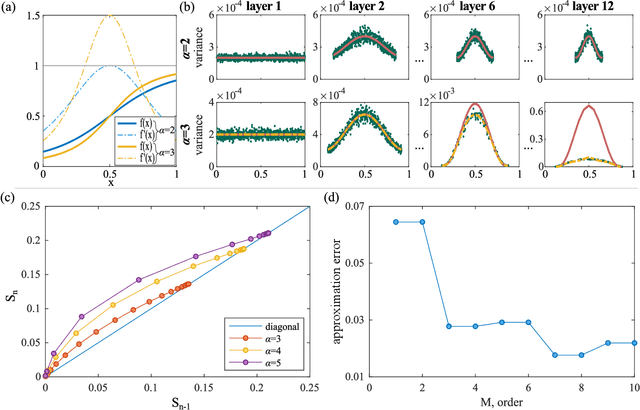

Deep neural networks unlocked a vast range of new applications by solving tasks, many of which were previously deemed reserved for higher human intelligence. One of the developments enabling this success was a boost in computing power provided by special-purpose hardware, such as graphics or tensor processing units. However, these do not leverage fundamental features of neural networks such as parallelism and analogue state variables. Instead, they emulate neural networks by relying on computing power, which results in unsustainable energy consumption and comparatively low speed. Fully parallel and analogue hardware promises to overcome these challenges, yet the impact of analogue neuron noise and its propagation, i.e. accumulation, threatens to render such approaches inept. Here, we analyse for the first time the propagation of noise in parallel deep neural networks comprising noisy nonlinear neurons. We develop an analytical treatment both for symmetric networks, to highlight the underlying mechanisms, and for networks trained with backpropagation. We find that noise accumulation is generally bounded, and adding further network layers does not worsen the signal-to-noise ratio beyond this limit. Most importantly, noise accumulation can be suppressed entirely when neuron activation functions have a slope smaller than unity. We thereby develop a framework for noise in deep neural networks implemented in analogue systems, and identify criteria allowing engineers to design novel, noise-resilient neural network hardware.
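The role of the activation slope can be illustrated with a deliberately simplified toy model. This is an assumption made for illustration, not the authors' analytical treatment. Consider a chain of single neurons, each with a linearised activation f(x) = a·x followed by independent additive Gaussian noise of standard deviation σ. The output noise variance then obeys Var_{l+1} = a²·Var_l + σ², so Var_L = σ²(1 − a^{2L})/(1 − a²). For a < 1 this stays below σ²/(1 − a²) no matter how many layers are added. For a = 1 it grows linearly as σ²·L. The chain tracks noise variance only and omits the network-level effects (connectivity, trained weights, signal fixed points) behind the general bound reported above. The function names `analytic_variance` and `simulate_chain` are hypothetical.

```python
import random
import statistics


def analytic_variance(slope: float, sigma: float, layers: int) -> float:
    """Noise variance after `layers` noisy linear neurons in series (toy model).

    Recursion: Var_{l+1} = slope**2 * Var_l + sigma**2, starting from Var_0 = 0.
    """
    var = 0.0
    for _ in range(layers):
        var = slope ** 2 * var + sigma ** 2
    return var


def simulate_chain(slope: float, sigma: float, layers: int,
                   trials: int = 20000, seed: int = 0) -> float:
    """Monte Carlo estimate of the output noise variance for the same toy chain.

    Each layer applies a linearised activation f(x) = slope * x and then adds
    independent Gaussian noise with standard deviation `sigma`. The input is
    noise-free (x = 0), so the output variance is purely accumulated noise.
    """
    rng = random.Random(seed)
    outputs = []
    for _ in range(trials):
        x = 0.0
        for _ in range(layers):
            x = slope * x + rng.gauss(0.0, sigma)
        outputs.append(x)
    return statistics.pvariance(outputs)


if __name__ == "__main__":
    sigma = 0.1
    for slope in (0.5, 0.8, 1.0):
        # Compare how the noise variance evolves with depth for each slope.
        print(slope, [round(analytic_variance(slope, sigma, n), 4) for n in (1, 5, 20)])
    # Sanity check: simulation and recursion should agree to within sampling error.
    print(simulate_chain(0.8, sigma, 20), analytic_variance(0.8, sigma, 20))
```

With slope 0.5 or 0.8 the printed variances saturate after a few layers. With slope 1.0 they keep growing with depth, which is the accumulation that a sub-unity slope prevents in this toy setting.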
