Nabarun Deb

Parametric Mean-Field empirical Bayes in high-dimensional linear regression

Jan 23, 2026

Abstract: In this paper, we consider the problem of parametric empirical Bayes estimation of an i.i.d. prior in high-dimensional Bayesian linear regression, with random design. We obtain the asymptotic distribution of the variational Empirical Bayes (vEB) estimator, which approximately maximizes a variational lower bound of the intractable marginal likelihood. We characterize a sharp phase transition behavior for the vEB estimator -- namely that it is information theoretically optimal (in terms of limiting variance) up to $p=o(n^{2/3})$ while it suffers from a sub-optimal convergence rate in higher dimensions. In the first regime, i.e., when $p=o(n^{2/3})$, we show how the estimated prior can be calibrated to enable valid coordinate-wise and delocalized inference, both under the \emph{empirical Bayes posterior} and the oracle posterior. In the second regime, we propose a debiasing technique as a way to improve the performance of the vEB estimator beyond $p=o(n^{2/3})$. Extensive numerical experiments corroborate our theoretical findings.

Pivotal CLTs for Pseudolikelihood via Conditional Centering in Dependent Random Fields

Oct 06, 2025

Abstract: In this paper, we study fluctuations of conditionally centered statistics of the form $$N^{-1/2}\sum_{i=1}^N c_i(g(\sigma_i)-\mathbb{E}_N[g(\sigma_i)|\sigma_j,j\neq i])$$ where $(\sigma_1,\ldots ,\sigma_N)$ are sampled from a dependent random field, and $g$ is some bounded function. Our first main result shows that under weak smoothness assumptions on the conditional means (which cover both sparse and dense interactions), the above statistic converges to a Gaussian \emph{scale mixture} with a random scale determined by a \emph{quadratic variance} and an \emph{interaction component}. We also show that under appropriate studentization, the limit becomes a pivotal Gaussian. We leverage this theory to develop a general asymptotic framework for maximum pseudolikelihood (MPLE) inference in dependent random fields. We apply our results to Ising models with pairwise as well as higher-order interactions and exponential random graph models (ERGMs). In particular, we obtain a joint central limit theorem for the inverse temperature and magnetization parameters via the joint MPLE (to our knowledge, the first such result in dense, irregular regimes), and we derive conditionally centered edge CLTs and marginal MPLE CLTs for ERGMs without restricting to the ``sub-critical'' region. Our proof is based on a method of moments approach via combinatorial decision-tree pruning, which may be of independent interest.
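As a rough illustration of the conditionally centered statistic above, the sketch below evaluates it for a pairwise Ising model. Everything specific here is an assumption made for the example and not taken from the paper: $g(\sigma)=\sigma$, a density proportional to $\exp(\tfrac{\beta}{2}\sigma^\top A\sigma + h\sum_i \sigma_i)$ with $A$ symmetric and zero on the diagonal (so the conditional mean is $\tanh(\beta (A\sigma)_i + h)$), and the hypothetical function name `conditionally_centered_stat`. The spin vector is drawn uniformly at random only to have an input; it is not a sample from the model.

```python
import numpy as np

def conditionally_centered_stat(sigma, A, c, beta, h=0.0):
    """N^{-1/2} * sum_i c_i * (sigma_i - E[sigma_i | sigma_j, j != i]).

    Assumes a pairwise Ising model with symmetric, zero-diagonal coupling
    matrix A, for which E[sigma_i | rest] = tanh(beta * (A sigma)_i + h).
    """
    local_field = beta * (A @ sigma) + h
    cond_mean = np.tanh(local_field)
    return np.sum(c * (sigma - cond_mean)) / np.sqrt(sigma.size)

rng = np.random.default_rng(1)
N = 8
A = np.ones((N, N)) / N          # dense (Curie-Weiss type) couplings, assumed
np.fill_diagonal(A, 0.0)
sigma = rng.choice([-1.0, 1.0], size=N)   # arbitrary spins, not a model sample
c = np.ones(N)

stat = conditionally_centered_stat(sigma, A, c, beta=0.5)
# With beta = 0 and h = 0 the conditional means vanish, so no centering occurs.
stat_free = conditionally_centered_stat(sigma, A, c, beta=0.0)
```

With `beta=0.0` and `h=0.0` the statistic reduces to the plain normalized sum $N^{-1/2}\sum_i c_i\sigma_i$, which is a quick sanity check on the centering term.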

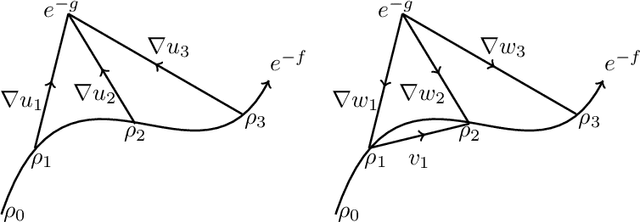

No-Regret Generative Modeling via Parabolic Monge-Ampère PDE

Apr 12, 2025

Abstract: We introduce a novel generative modeling framework based on a discretized parabolic Monge-Amp\`ere PDE, which emerges as a continuous limit of the Sinkhorn algorithm commonly used in optimal transport. Our method performs iterative refinement in the space of Brenier maps using a mirror gradient descent step. We establish theoretical guarantees for generative modeling through the lens of no-regret analysis, demonstrating that the iterates converge to the optimal Brenier map under a variety of step-size schedules. As a technical contribution, we derive a new Evolution Variational Inequality tailored to the parabolic Monge-Amp\`ere PDE, connecting geometry, transportation cost, and regret. Our framework accommodates non-log-concave target distributions, constructs an optimal sampling process via the Brenier map, and integrates favorable learning techniques from generative adversarial networks and score-based diffusion models. As direct applications, we illustrate how our theory paves new pathways for generative modeling and variational inference.

Trade-off Between Dependence and Complexity for Nonparametric Learning -- an Empirical Process Approach

Jan 17, 2024

Abstract: Empirical process theory for i.i.d. observations has emerged as a ubiquitous tool for understanding the generalization properties of various statistical problems. However, in many applications where the data exhibit temporal dependencies (e.g., in finance, medical imaging, weather forecasting, etc.), the corresponding empirical processes are much less understood. Motivated by this observation, we present a general bound on the expected supremum of empirical processes under standard $\beta/\rho$-mixing assumptions. Unlike most prior work, our results cover both the long and the short-range regimes of dependence. Our main result shows that a non-trivial trade-off between the complexity of the underlying function class and the dependence among the observations characterizes the learning rate in a large class of nonparametric problems. This trade-off reveals a new phenomenon, namely that even under long-range dependence, it is possible to attain the same rates as in the i.i.d. setting, provided the underlying function class is complex enough. We demonstrate the practical implications of our findings by analyzing various statistical estimators in both fixed and growing dimensions. Our main examples include a comprehensive case study of generalization error bounds in nonparametric regression over smoothness classes in fixed as well as growing dimension using neural nets, shape-restricted multivariate convex regression, estimating the optimal transport (Wasserstein) distance between two probability distributions, and classification under the Mammen-Tsybakov margin condition -- all under appropriate mixing assumptions. In the process, we also develop bounds on $L_r$ ($1\le r\le 2$)-localized empirical processes with dependent observations, which we then leverage to get faster rates for (a) tuning-free adaptation, and (b) set-structured learning problems.

Wasserstein Mirror Gradient Flow as the limit of the Sinkhorn Algorithm

Jul 31, 2023

Abstract: We prove that the sequence of marginals obtained from the iterations of the Sinkhorn algorithm or the iterative proportional fitting procedure (IPFP) on joint densities converges to an absolutely continuous curve on the $2$-Wasserstein space, as the regularization parameter $\varepsilon$ goes to zero and the number of iterations is scaled as $1/\varepsilon$ (under additional technical assumptions). This limit, which we call the Sinkhorn flow, is an example of a Wasserstein mirror gradient flow, a concept we introduce here inspired by the well-known Euclidean mirror gradient flows. In the case of Sinkhorn, the gradient is that of the relative entropy functional with respect to one of the marginals and the mirror is half of the squared Wasserstein distance functional from the other marginal. Interestingly, the norm of the velocity field of this flow can be interpreted as the metric derivative with respect to the linearized optimal transport (LOT) distance. An equivalent description of this flow is provided by the parabolic Monge-Amp\`{e}re PDE whose connection to the Sinkhorn algorithm was noticed by Berman (2020). We derive conditions for exponential convergence for this limiting flow. We also construct a McKean-Vlasov diffusion whose marginal distributions follow the Sinkhorn flow.
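For readers unfamiliar with the Sinkhorn / IPFP iterations mentioned above, here is a minimal sketch on discrete histograms. It is the standard textbook matrix-scaling form, not the continuous-density setting or the $\varepsilon \to 0$ scaling limit analyzed in the paper. The grid, the squared-distance cost, the value `eps=0.5`, and the function name `sinkhorn` are all assumptions chosen for illustration.

```python
import numpy as np

def sinkhorn(a, b, cost, eps, n_iters=200):
    """Entropy-regularized coupling of histograms a and b via Sinkhorn scaling."""
    K = np.exp(-cost / eps)      # Gibbs kernel for regularization level eps
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iters):
        u = a / (K @ v)          # rescale rows to match the first marginal
        v = b / (K.T @ u)        # rescale columns to match the second marginal
    return u[:, None] * K * v[None, :]

# Toy problem on a 5-point grid in [0, 1] with squared-distance cost (assumed).
x = np.linspace(0.0, 1.0, 5)
cost = (x[:, None] - x[None, :]) ** 2
a = np.full(5, 0.2)
b = np.array([0.1, 0.2, 0.4, 0.2, 0.1])

plan = sinkhorn(a, b, cost, eps=0.5)
row_marginal = plan.sum(axis=1)  # approaches a as the iterations proceed
col_marginal = plan.sum(axis=0)  # equals b after each column update
```

Each pass alternately fits one marginal exactly, which is the iterative proportional fitting step; the sequence of row marginals produced along the way is the discrete analogue of the marginal sequence whose limit the abstract describes.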

Rates of Estimation of Optimal Transport Maps using Plug-in Estimators via Barycentric Projections

Jul 04, 2021

Abstract: Optimal transport maps between two probability distributions $\mu$ and $\nu$ on $\mathbb{R}^d$ have found extensive applications in both machine learning and statistics. In practice, these maps need to be estimated from data sampled according to $\mu$ and $\nu$. Plug-in estimators are perhaps most popular in estimating transport maps in the field of computational optimal transport. In this paper, we provide a comprehensive analysis of the rates of convergence for general plug-in estimators defined via barycentric projections. Our main contribution is a new stability estimate for barycentric projections which proceeds under minimal smoothness assumptions and can be used to analyze general plug-in estimators. We illustrate the usefulness of this stability estimate by first providing rates of convergence for the natural discrete-discrete and semi-discrete estimators of optimal transport maps. We then use the same stability estimate to show that, under additional smoothness assumptions of Besov type or Sobolev type, wavelet based or kernel smoothed plug-in estimators respectively speed up the rates of convergence and significantly mitigate the curse of dimensionality suffered by the natural discrete-discrete/semi-discrete estimators. As a by-product of our analysis, we also obtain faster rates of convergence for plug-in estimators of $W_2(\mu,\nu)$, the Wasserstein distance between $\mu$ and $\nu$, under the aforementioned smoothness assumptions, thereby complementing recent results in Chizat et al. (2020). Finally, we illustrate the applicability of our results in obtaining rates of convergence for Wasserstein barycenters between two probability distributions and obtaining asymptotic detection thresholds for some recent optimal-transport based tests of independence.
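To make the barycentric-projection idea concrete, the sketch below computes the projection of a coupling between two empirical samples: each source point is sent to the coupling-weighted average of the target points. The setup is an assumption chosen so that the optimal coupling is known in closed form (one dimension, equal sample sizes, quadratic cost, where matching order statistics is optimal); the function name `barycentric_projection` and the simulated data are hypothetical and not from the paper.

```python
import numpy as np

def barycentric_projection(plan, y):
    """Send source point i to sum_j plan[i, j] * y[j] / sum_j plan[i, j]."""
    row_mass = plan.sum(axis=1, keepdims=True)
    return (plan @ y) / row_mass

rng = np.random.default_rng(0)
n = 6
x = rng.normal(size=n)               # sample from the source distribution
y = 2.0 * rng.normal(size=n) + 1.0   # sample from the target distribution

# In 1D with equal sample sizes and quadratic cost, the optimal coupling
# pairs the k-th smallest x with the k-th smallest y, each with mass 1/n.
plan = np.zeros((n, n))
plan[np.argsort(x), np.argsort(y)] = 1.0 / n

# Estimated transport map evaluated at the sample points x.
t_hat = barycentric_projection(plan, y[:, None]).ravel()
```

Because this coupling is a permutation, the projection simply returns the matched target point. For a coupling that splits mass, such as an entropic one, the same function returns a weighted average of target points instead.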
