Mutlu Demirer

Mayo Clinic Florida Radiology, USA

Fair Evaluation of Federated Learning Algorithms for Automated Breast Density Classification: The Results of the 2022 ACR-NCI-NVIDIA Federated Learning Challenge

May 22, 2024

Abstract:The correct interpretation of breast density is important in the assessment of breast cancer risk. AI has been shown capable of accurately predicting breast density; however, because imaging characteristics differ across mammography systems, models built using data from one system do not generalize well to other systems. Though federated learning (FL) has emerged as a way to improve the generalizability of AI without the need to share data, the best way to preserve features from all training data during FL is an active area of research. To explore FL methodology, the breast density classification FL challenge was hosted in partnership with the American College of Radiology, Harvard Medical School's Mass General Brigham, University of Colorado, NVIDIA, and the National Institutes of Health National Cancer Institute. Challenge participants were able to submit Docker containers capable of implementing FL on three simulated medical facilities, each containing a unique large mammography dataset. The breast density FL challenge ran from June 15 to September 5, 2022, attracting seven finalists from around the world. The winning FL submission reached a linear kappa score of 0.653 on the challenge test data and 0.413 on an external testing dataset, scoring comparably to a model trained on the same data in a central location.

* 16 pages, 9 figures
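
The challenge above is scored with a linear kappa. As a rough illustration of that metric (not the challenge's evaluation code), the sketch below computes a linearly weighted Cohen's kappa for ordinal labels in plain Python. The function name, the integer encoding of the four density categories as 0 to 3, and the example labels are all assumptions made for illustration.

```python
from collections import Counter


def linear_weighted_kappa(y_true, y_pred, n_classes):
    """Linearly weighted Cohen's kappa for integer labels in [0, n_classes)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")

    n = len(y_true)
    max_dist = n_classes - 1
    pair_counts = Counter(zip(y_true, y_pred))  # observed confusion counts
    true_counts = Counter(y_true)               # marginal of reference labels
    pred_counts = Counter(y_pred)               # marginal of predicted labels

    # Observed disagreement: mean normalized distance between paired labels.
    observed = sum(
        abs(i - j) / max_dist * count for (i, j), count in pair_counts.items()
    ) / n

    # Expected disagreement if predictions were independent of the reference.
    expected = sum(
        abs(i - j) / max_dist * true_counts[i] * pred_counts[j]
        for i in range(n_classes)
        for j in range(n_classes)
    ) / (n * n)

    if expected == 0:
        # Both raters used one identical class throughout; treated here as
        # perfect agreement (a design choice, other tools may return NaN).
        return 1.0
    return 1.0 - observed / expected


# Hypothetical labels: four ordinal density categories encoded as 0..3.
reference = [0, 1, 1, 2, 2, 3, 3, 1]
predicted = [0, 1, 2, 2, 1, 3, 2, 1]
print(linear_weighted_kappa(reference, predicted, n_classes=4))
```

With linear weights, confusing adjacent categories is penalized less than confusing distant ones, which suits an ordinal scale such as breast density.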

Integration and Implementation Strategies for AI Algorithm Deployment with Smart Routing Rules and Workflow Management

Nov 21, 2023

Abstract:This paper reviews the challenges hindering the widespread adoption of artificial intelligence (AI) solutions in the healthcare industry, focusing on computer vision applications for medical imaging, and how interoperability and enterprise-grade scalability can be used to address these challenges. The complex nature of healthcare workflows, intricacies in managing large and secure medical imaging data, and the absence of standardized frameworks for AI development pose significant barriers and require a new paradigm to address them. The role of interoperability is examined in this paper as a crucial factor in connecting disparate applications within healthcare workflows. Standards such as DICOM, Health Level 7 (HL7), and Integrating the Healthcare Enterprise (IHE) are highlighted as foundational for common imaging workflows. A specific focus is placed on the role of DICOM gateways, with Smart Routing Rules and Workflow Management leading transformational efforts in this area. To drive enterprise scalability, new tools are needed. Project MONAI, established in 2019, is introduced as an initiative aiming to redefine the development of medical AI applications. The MONAI Deploy App SDK, a component of Project MONAI, is identified as a key tool in simplifying the packaging and deployment process, enabling repeatable, scalable, and standardized deployment patterns for AI applications. The paper underscores the potential impact of successful AI adoption in healthcare, offering physicians both life-saving and time-saving insights and driving efficiencies in radiology department workflows. Collaborative efforts between academia and industry are emphasized as essential for advancing the adoption of healthcare AI solutions.

A multi-reconstruction study of breast density estimation using Deep Learning

Feb 17, 2022

Abstract:Breast density estimation is one of the key tasks in recognizing individuals predisposed to breast cancer and is performed during a screening exam; dense breasts are more susceptible to breast cancer. Estimation is often challenging because of low contrast and fluctuations in mammograms' fatty tissue background. Most of the time, breast density is estimated manually, with a radiologist assigning one of the four density categories defined by the Breast Imaging Reporting and Data System (BI-RADS). There have been efforts to automate the breast density classification pipeline. Traditional mammograms are being replaced by tomosynthesis and its other low radiation dose variants (for example, Hologic's Intelligent 2D and C-View). Because of the low-dose requirement, more and more screening centers are favoring the Intelligent 2D view and C-View. Deep-learning studies for breast density estimation use only a single modality for training a neural network. However, doing so restricts the number of images in the dataset. In this paper, we show that a neural network trained on all the modalities at once performs better than a neural network trained on any single modality. We discuss these results using the area under the receiver operating characteristic curves.

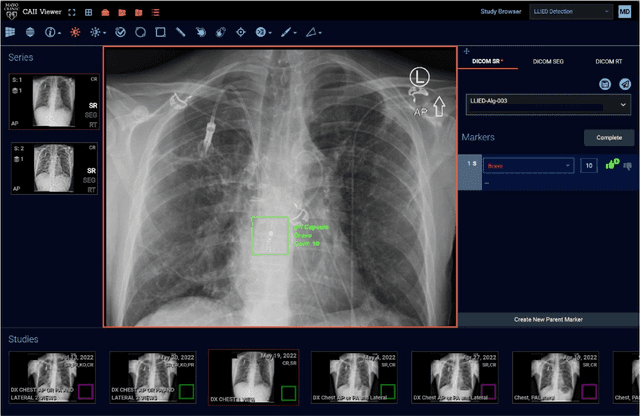

Cascading Neural Network Methodology for Artificial Intelligence-Assisted Radiographic Detection and Classification of Lead-Less Implanted Electronic Devices within the Chest

Aug 25, 2021

Abstract:Background & Purpose: Chest X-Ray (CXR) use in pre-MRI safety screening for Lead-Less Implanted Electronic Devices (LLIEDs), easily overlooked or misidentified on a frontal view (often the only view acquired), is common. Although most LLIED types are "MRI conditional": 1. Some are stringently conditional; 2. Different conditional types have specific patient- or device-management requirements; and 3. Particular types are "MRI unsafe". This work focused on developing CXR interpretation-assisting Artificial Intelligence (AI) methodology with: 1. 100% detection for LLIED presence/location; and 2. High classification in LLIED typing. Materials & Methods: Data-mining (03/1993-02/2021) produced an AI Model Development Population (1,100 patients/4,871 images) creating 4,924 LLIED Regions-Of-Interest (ROIs) (with image-quality grading) used in Training, Validation, and Testing. For developing the cascading neural network (detection via Faster R-CNN and classification via Inception V3), "ground-truth" CXR annotation (ROI labeling per LLIED), as well as inference display (as Generated Bounding Boxes (GBBs)), relied on a GPU-based graphical user interface. Results: To achieve 100% LLIED detection, probability threshold reduction to 0.00002 was required by Model 1, resulting in increasing GBBs per LLIED-related ROI. Targeting LLIED-type classification following detection of all LLIEDs, Model 2 multi-classified to reach high performance while decreasing falsely positive GBBs. Despite 24% suboptimal ROI image quality, classification was correct in 98.9% and AUCs for the 9 LLIED-types were 1.00 for 8 and 0.92 for 1. For all misclassification cases: 1. None involved stringently conditional or unsafe LLIEDs; and 2. Most were attributable to suboptimal images. Conclusion: This project successfully developed an LLIED-related AI methodology supporting: 1. 100% detection; and 2. Typically 100% type classification.

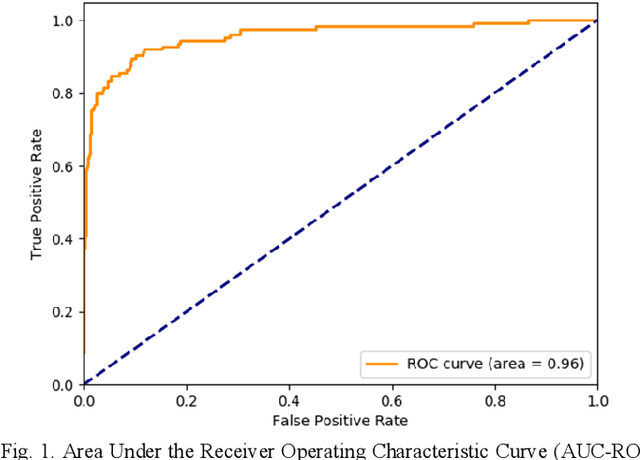

Artificial Intelligence to Assist in Exclusion of Coronary Atherosclerosis during CCTA Evaluation of Chest-Pain in the Emergency Department: Preparing an Application for Real-World Use

Aug 10, 2020

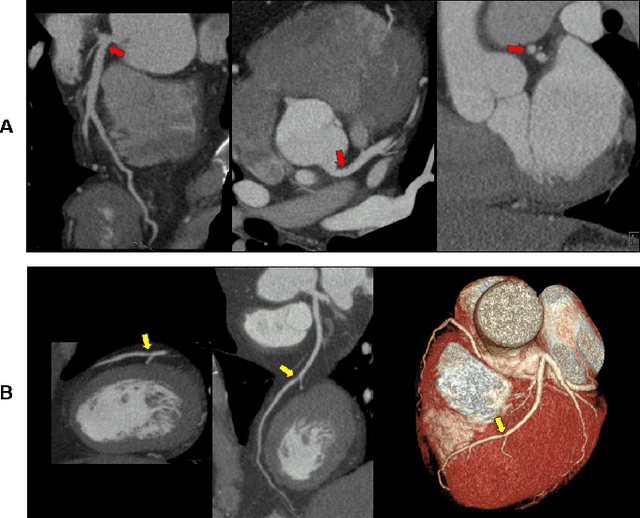

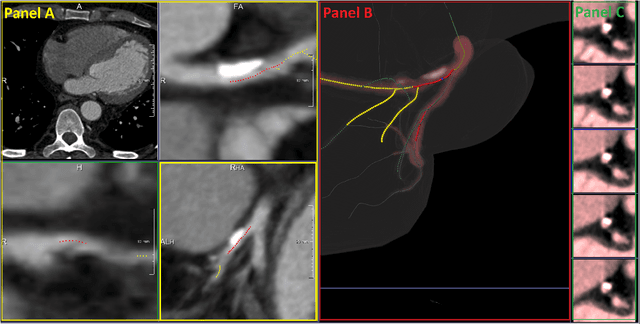

Abstract:Coronary Computed Tomography Angiography (CCTA) evaluation of chest-pain patients in an Emergency Department (ED) is considered appropriate. While a negative CCTA interpretation supports direct patient discharge from an ED, labor-intensive analyses are required, with accuracy in jeopardy from distractions. We describe the development of an Artificial Intelligence (AI) algorithm and workflow for assisting interpreting physicians in CCTA screening for the absence of coronary atherosclerosis. The two-phase approach consisted of (1) Phase 1 - focused on the development and preliminary testing of an algorithm for vessel-centerline extraction classification in a balanced study population (n = 500 with 50% disease prevalence) derived by retrospective random case selection; and (2) Phase 2 - concerned with simulated-clinical trialing of the developed algorithm on a per-case basis in a more real-world study population (n = 100 with 28% disease prevalence) from an ED chest-pain series. This allowed pre-deployment evaluation of the AI-based CCTA screening application, which provides a vessel-by-vessel graphic display of algorithm inference results integrated into a clinically capable viewer. Algorithm performance evaluation used Area Under the Receiver-Operating-Characteristic Curve (AUC-ROC); confusion matrices reflected ground-truth vs AI determinations. The vessel-based algorithm demonstrated strong performance with AUC-ROC = 0.96. In both Phase 1 and Phase 2, independent of disease prevalence differences, negative predictive values at the case level were very high at 95%. The rate of completion of the algorithm workflow process (96% with inference results in 55-80 seconds) in Phase 2 depended on adequate image quality. There is potential for this AI application to assist in CCTA interpretation to help exclude atherosclerosis in chest-pain presentations.

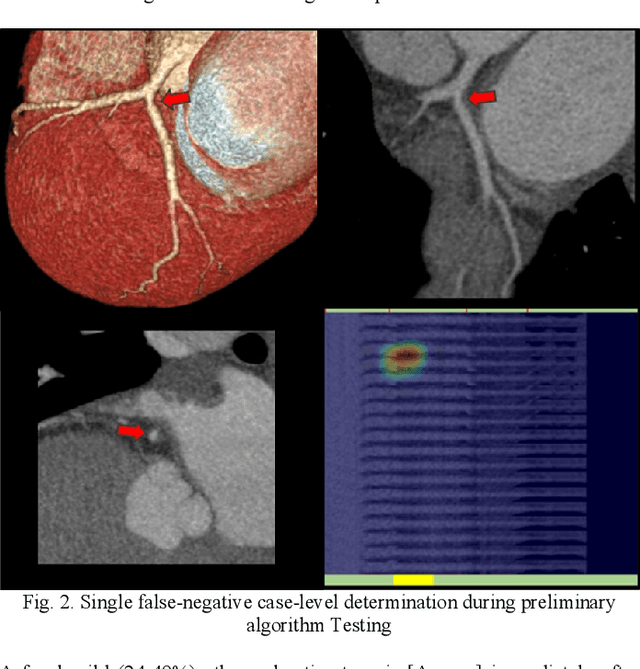

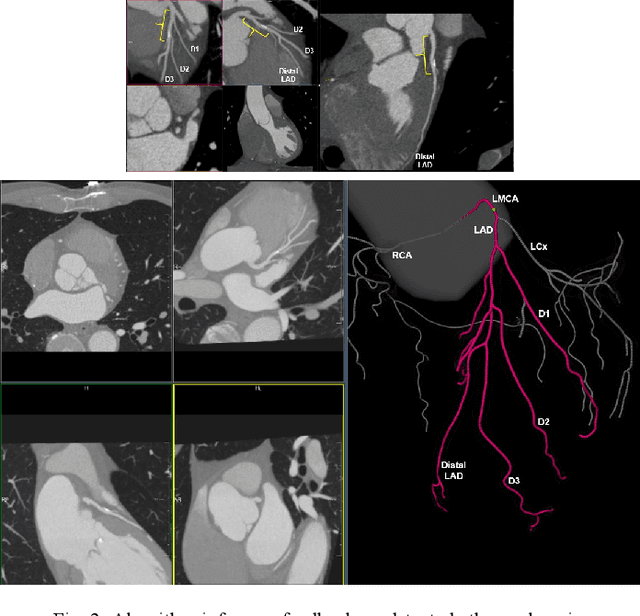

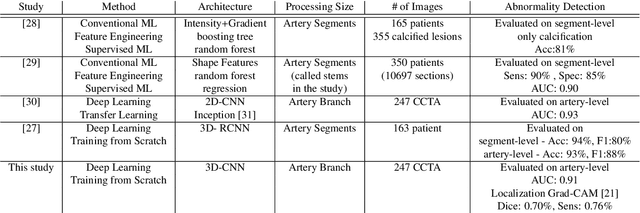

Coronary Artery Classification and Weakly Supervised Abnormality Localization on Coronary CT Angiography with 3-Dimensional Convolutional Neural Networks

Nov 26, 2019

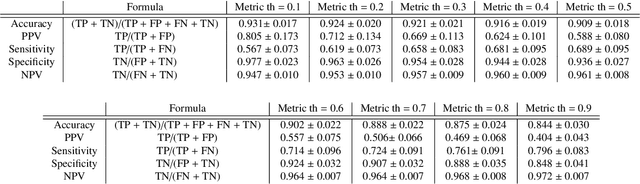

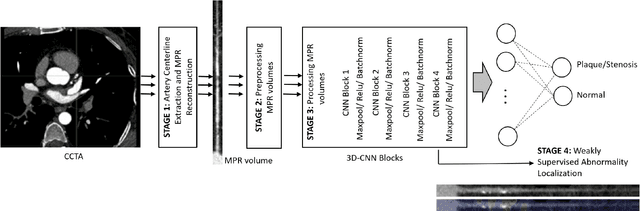

Abstract:We propose a fully automated algorithm based on a deep-learning framework enabling screening of a Coronary Computed Tomography Angiography (CCTA) examination for confident detection of the presence or complete absence of atherosclerotic plaque of the coronary arteries. The system starts with extracting the coronary arteries and their branches from CCTA datasets and representing them with multi-planar reformatted volumes; pre-processing and augmentation techniques are then applied to increase the robustness and generalization ability of the system. A 3-Dimensional Convolutional Neural Network (3D-CNN) is utilized to model pathological changes (e.g., calcification) in coronary arteries/branches. The system then learns the discriminatory features between vessels with and without atherosclerosis. The discriminative features at the final convolutional layer are visualized with a saliency map approach to localize the visual clues related to atherosclerosis. We have evaluated the system on a reference dataset representing 247 patients with atherosclerosis and 246 patients free of atherosclerosis. With 5-fold cross-validation, Accuracy = 90.9%, Positive Predictive Value = 58.8%, Sensitivity = 68.9%, Specificity = 93.6%, and Negative Predictive Value = 96.1% are achieved at the artery/branch level with a threshold of 0.5. The average area under the curve is 0.91. The system shows a high negative predictive value, which may be useful for assisting physicians in identifying patients with no coronary atherosclerosis who need no further diagnostic evaluation.
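
The abstract above reports accuracy, PPV, sensitivity, specificity, and NPV at a fixed threshold. For readers less familiar with how these relate, the following minimal sketch derives all five from a binary confusion matrix. The function name and the counts are hypothetical and chosen only for illustration; they are not the study's data.

```python
def binary_metrics(tp, fp, tn, fn):
    """Return standard screening metrics from binary confusion-matrix counts."""

    def ratio(numerator, denominator):
        # Undefined ratios (empty denominator) are reported as NaN.
        return numerator / denominator if denominator else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": ratio(tp, tp + fn),  # true positive rate
        "specificity": ratio(tn, tn + fp),  # true negative rate
        "ppv": ratio(tp, tp + fp),          # positive predictive value
        "npv": ratio(tn, tn + fn),          # negative predictive value
    }


# Made-up counts in which positives are rare (10 of 100 vessels).
metrics = binary_metrics(tp=7, fp=5, tn=85, fn=3)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In these made-up counts, positives are rare, so a handful of false positives pulls PPV down even though specificity stays high, while NPV remains high. This is one general way a modest PPV and a high NPV can coexist.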

Are Quantitative Features of Lung Nodules Reproducible at Different CT Acquisition and Reconstruction Parameters?

Aug 14, 2019

Abstract:Consistency and duplicability in Computed Tomography (CT) output are essential to quantitative imaging for lung cancer detection and monitoring. This study of CT-detected lung nodules investigated the reproducibility of volume-, density-, and texture-based features (outcome variables) over routine ranges of radiation dose, reconstruction kernel, and slice thickness. CT raw data of 23 nodules were reconstructed using 320 acquisition/reconstruction conditions (combinations of 4 doses, 10 kernels, and 8 thicknesses). Scans at 12.5%, 25%, and 50% of protocol dose were simulated; reduced-dose and full-dose data were reconstructed using conventional filtered back-projection and iterative-reconstruction kernels at a range of thicknesses (0.6-5.0 mm). Full-dose/B50f kernel reconstructions underwent expert segmentation for reference Region-Of-Interest (ROI) and nodule volume per thickness; each ROI was applied to 40 corresponding images (combinations of 4 doses and 10 kernels). Typical texture analysis metrics (including 5 histogram features, 13 Gray Level Co-occurrence Matrix, 5 Run Length Matrix, 2 Neighboring Gray-Level Dependence Matrix, and 2 Neighborhood Gray-Tone Difference Matrix) were computed per ROI. Reconstruction conditions resulting in no significant change in volume, density, or texture metrics were identified as "compatible pairs" for a given outcome variable. Our results indicate that as thickness increases, volumetric reproducibility decreases, while reproducibility of histogram- and texture-based features across different acquisition and reconstruction parameters improves. To achieve concomitant reproducibility of volumetric and radiomic results across studies, balanced standardization of the imaging acquisition parameters is required.
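
To make the study design above concrete, the short sketch below enumerates the condition grid and checks the stated counts (320 conditions overall, 40 images per reference ROI). The dose levels come from the abstract, assuming the full-dose scan is the fourth level. The kernel and thickness labels are placeholders: the abstract names only the B50f kernel and a 0.6-5.0 mm thickness range, so the individual values are not known here.

```python
from itertools import product

# Dose levels as percent of protocol dose (three simulated plus full dose).
doses = [12.5, 25.0, 50.0, 100.0]

# Placeholder labels: only the counts (10 kernels, 8 thicknesses) are stated.
kernels = ["B50f"] + [f"kernel_{i}" for i in range(2, 11)]
thicknesses = [f"thickness_{i}" for i in range(1, 9)]

# Every acquisition/reconstruction condition is one (dose, kernel, thickness).
conditions = list(product(doses, kernels, thicknesses))

# Each reference ROI is defined per thickness on the full-dose/B50f image and
# then applied to every dose/kernel combination at that same thickness.
images_per_roi = {
    thickness: [(dose, kernel) for dose, kernel in product(doses, kernels)]
    for thickness in thicknesses
}

print(len(conditions))                    # total conditions
print(len(images_per_roi[thicknesses[0]]))  # images sharing one reference ROI
```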
