Mukesh Singhal

Symmetric Rank-One Quasi-Newton Methods for Deep Learning Using Cubic Regularization

Feb 17, 2025Abstract:Stochastic gradient descent and other first-order variants, such as Adam and AdaGrad, are commonly used in the field of deep learning due to their computational efficiency and low-storage memory requirements. However, these methods do not exploit curvature information. Consequently, iterates can converge to saddle points or poor local minima. On the other hand, Quasi-Newton methods compute Hessian approximations which exploit this information with a comparable computational budget. Quasi-Newton methods re-use previously computed iterates and gradients to compute a low-rank structured update. The most widely used quasi-Newton update is the L-BFGS, which guarantees a positive semi-definite Hessian approximation, making it suitable in a line search setting. However, the loss functions in DNNs are non-convex, where the Hessian is potentially non-positive definite. In this paper, we propose using a limited-memory symmetric rank-one quasi-Newton approach which allows for indefinite Hessian approximations, enabling directions of negative curvature to be exploited. Furthermore, we use a modified adaptive regularized cubics approach, which generates a sequence of cubic subproblems that have closed-form solutions with suitable regularization choices. We investigate the performance of our proposed method on autoencoders and feed-forward neural network models and compare our approach to state-of-the-art first-order adaptive stochastic methods as well as other quasi-Newton methods.x

VQUNet: Vector Quantization U-Net for Defending Adversarial Atacks by Regularizing Unwanted Noise

Jun 05, 2024

Abstract:Deep Neural Networks (DNN) have become a promising paradigm when developing Artificial Intelligence (AI) and Machine Learning (ML) applications. However, DNN applications are vulnerable to fake data that are crafted with adversarial attack algorithms. Under adversarial attacks, the prediction accuracy of DNN applications suffers, making them unreliable. In order to defend against adversarial attacks, we introduce a novel noise-reduction procedure, Vector Quantization U-Net (VQUNet), to reduce adversarial noise and reconstruct data with high fidelity. VQUNet features a discrete latent representation learning through a multi-scale hierarchical structure for both noise reduction and data reconstruction. The empirical experiments show that the proposed VQUNet provides better robustness to the target DNN models, and it outperforms other state-of-the-art noise-reduction-based defense methods under various adversarial attacks for both Fashion-MNIST and CIFAR10 datasets. When there is no adversarial attack, the defense method has less than 1% accuracy degradation for both datasets.

* 8 pages, 6 figures

Adversarial Poisoning Attacks and Defense for General Multi-Class Models Based On Synthetic Reduced Nearest Neighbors

Feb 11, 2021

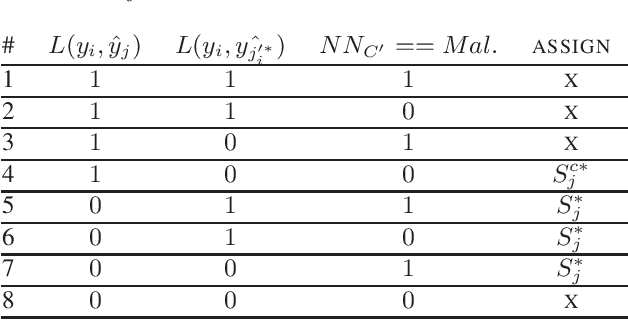

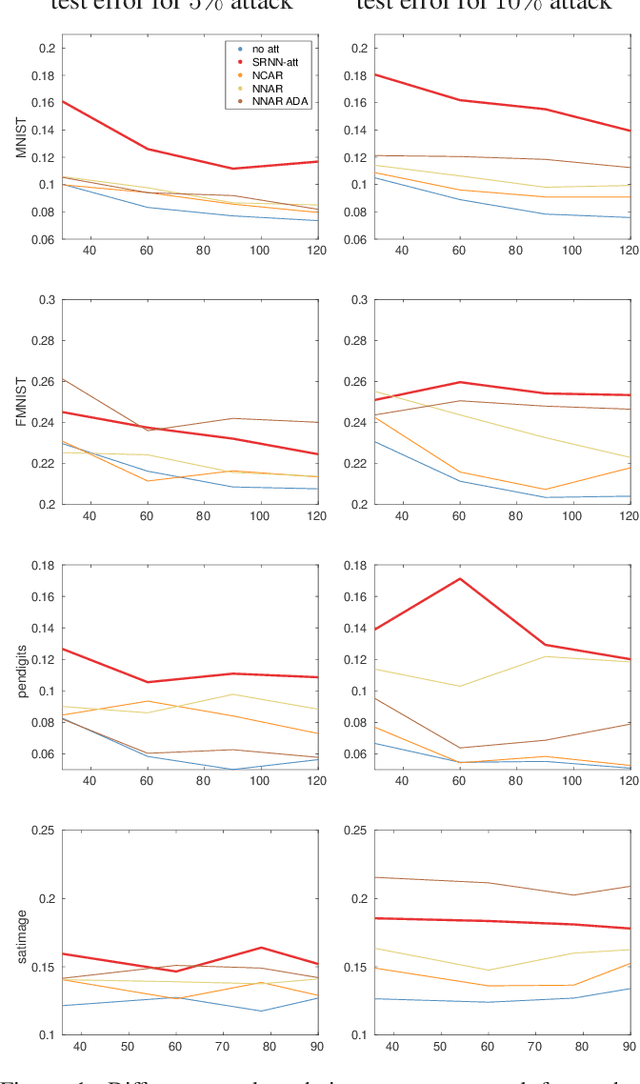

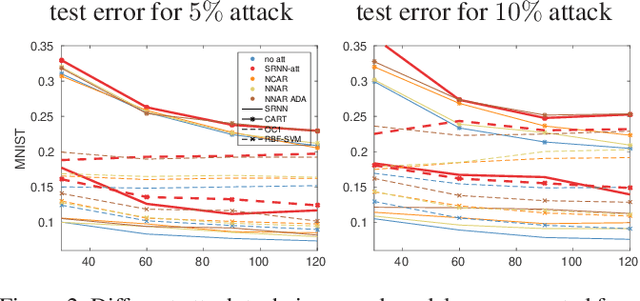

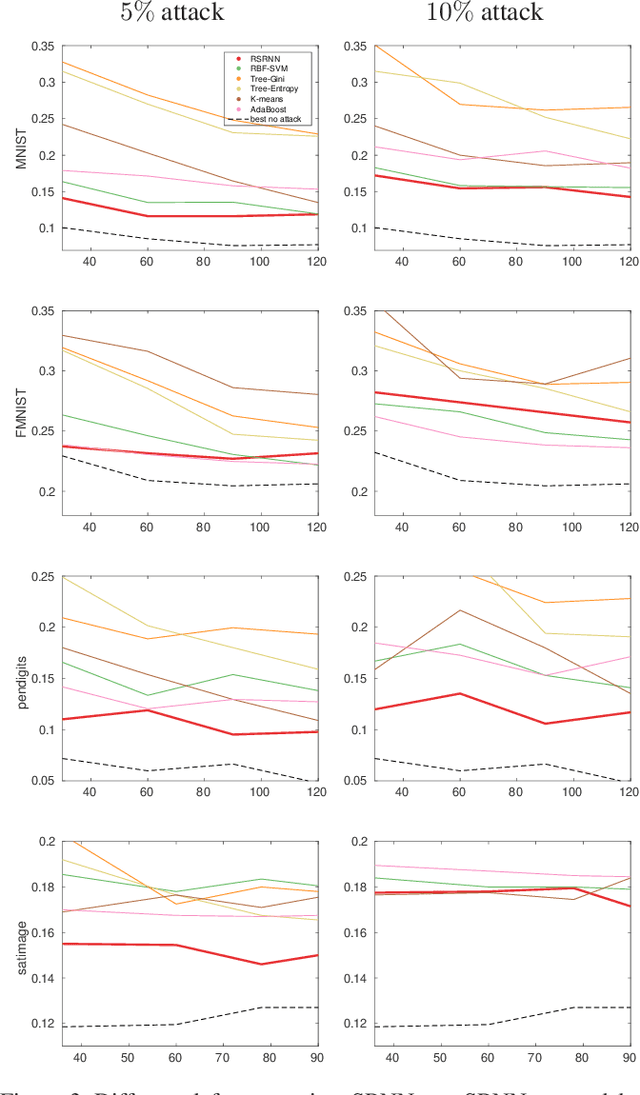

Abstract:State-of-the-art machine learning models are vulnerable to data poisoning attacks whose purpose is to undermine the integrity of the model. However, the current literature on data poisoning attacks is mainly focused on ad hoc techniques that are only applicable to specific machine learning models. Additionally, the existing data poisoning attacks in the literature are limited to either binary classifiers or to gradient-based algorithms. To address these limitations, this paper first proposes a novel model-free label-flipping attack based on the multi-modality of the data, in which the adversary targets the clusters of classes while constrained by a label-flipping budget. The complexity of our proposed attack algorithm is linear in time over the size of the dataset. Also, the proposed attack can increase the error up to two times for the same attack budget. Second, a novel defense technique based on the Synthetic Reduced Nearest Neighbor (SRNN) model is proposed. The defense technique can detect and exclude flipped samples on the fly during the training procedure. Through extensive experimental analysis, we demonstrate that (i) the proposed attack technique can deteriorate the accuracy of several models drastically, and (ii) under the proposed attack, the proposed defense technique significantly outperforms other conventional machine learning models in recovering the accuracy of the targeted model.

Do You Want Your Autonomous Car To Drive Like You?

Feb 05, 2018

Abstract:With progress in enabling autonomous cars to drive safely on the road, it is time to start asking how they should be driving. A common answer is that they should be adopting their users' driving style. This makes the assumption that users want their autonomous cars to drive like they drive - aggressive drivers want aggressive cars, defensive drivers want defensive cars. In this paper, we put that assumption to the test. We find that users tend to prefer a significantly more defensive driving style than their own. Interestingly, they prefer the style they think is their own, even though their actual driving style tends to be more aggressive. We also find that preferences do depend on the specific driving scenario, opening the door for new ways of learning driving style preference.

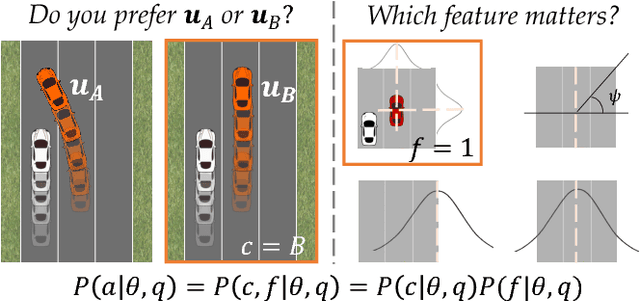

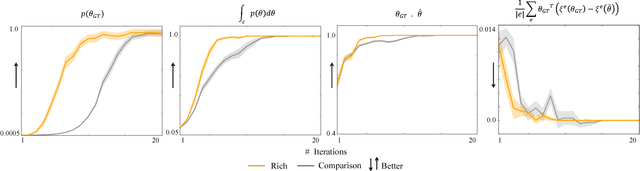

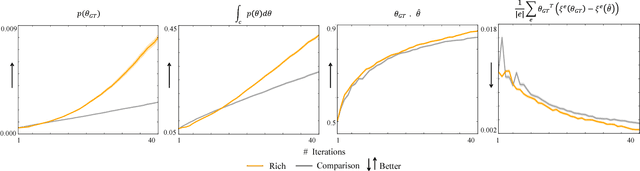

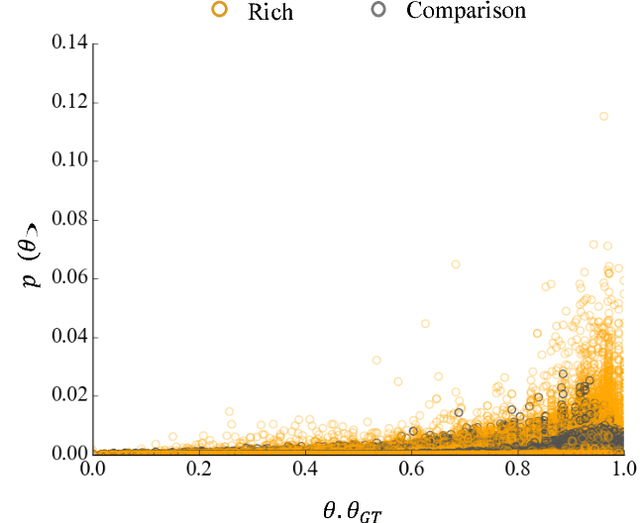

Learning from Richer Human Guidance: Augmenting Comparison-Based Learning with Feature Queries

Feb 05, 2018

Abstract:We focus on learning the desired objective function for a robot. Although trajectory demonstrations can be very informative of the desired objective, they can also be difficult for users to provide. Answers to comparison queries, asking which of two trajectories is preferable, are much easier for users, and have emerged as an effective alternative. Unfortunately, comparisons are far less informative. We propose that there is much richer information that users can easily provide and that robots ought to leverage. We focus on augmenting comparisons with feature queries, and introduce a unified formalism for treating all answers as observations about the true desired reward. We derive an active query selection algorithm, and test these queries in simulation and on real users. We find that richer, feature-augmented queries can extract more information faster, leading to robots that better match user preferences in their behavior.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge