Morten Mossige

Safety assurance of an industrial robotic control system using hardware/software co-verification

Dec 27, 2021

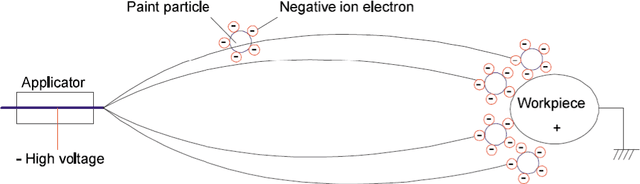

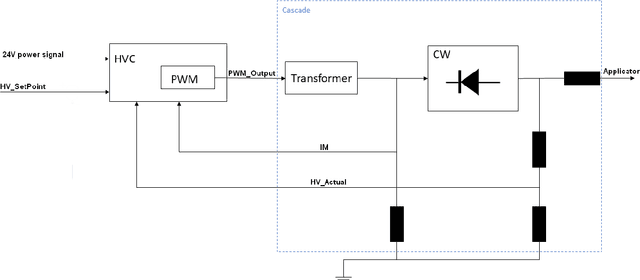

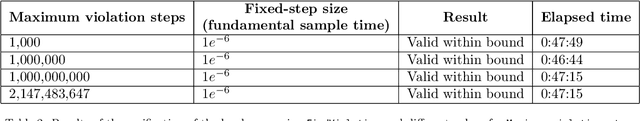

Abstract:As a general trend in industrial robotics, an increasing number of safety functions are being developed or re-engineered to be handled in software rather than by physical hardware such as safety relays or interlock circuits. This trend reinforces the importance of supplementing traditional, input-based testing and quality procedures which are widely used in industry today, with formal verification and model-checking methods. To this end, this paper focuses on a representative safety-critical system in an ABB industrial paint robot, namely the High-Voltage electrostatic Control system (HVC). The practical convergence of the high-voltage produced by the HVC, essential for safe operation, is formally verified using a novel and general co-verification framework where hardware and software models are related via platform mappings. This approach enables the pragmatic combination of highly diverse and specialised tools. The paper's main contribution includes details on how hardware abstraction and verification results can be transferred between tools in order to verify system-level safety properties. It is noteworthy that the HVC application considered in this paper has a rather generic form of a feedback controller. Hence, the co-verification framework and experiences reported here are also highly relevant for any cyber-physical system tracking a setpoint reference.

Time-aware Test Case Execution Scheduling for Cyber-Physical Systems

Feb 12, 2019

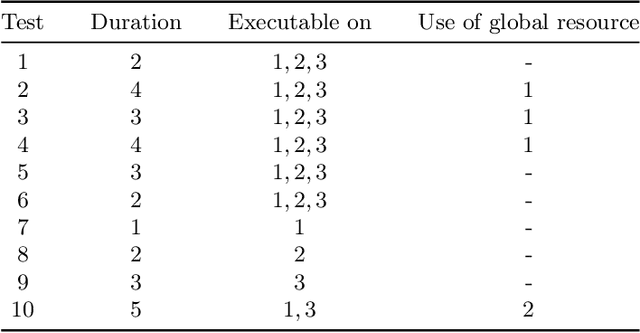

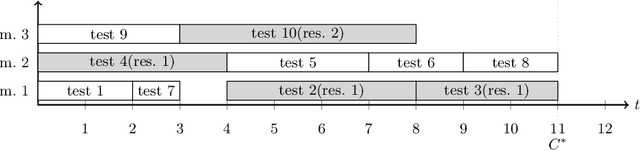

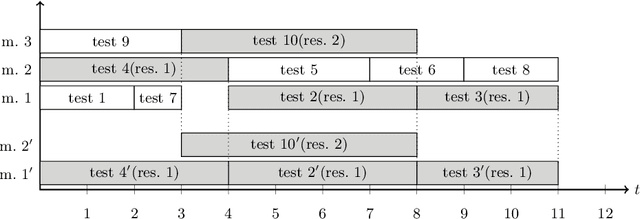

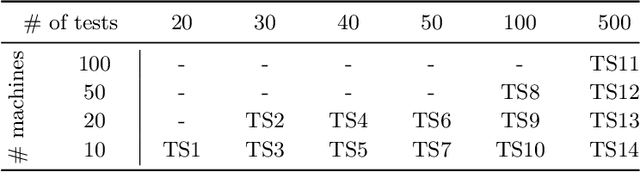

Abstract:Testing cyber-physical systems involves the execution of test cases on target-machines equipped with the latest release of a software control system. When testing industrial robots, it is common that the target machines need to share some common resources, e.g., costly hardware devices, and so there is a need to schedule test case execution on the target machines, accounting for these shared resources. With a large number of such tests executed on a regular basis, this scheduling becomes difficult to manage manually. In fact, with manual test execution planning and scheduling, some robots may remain unoccupied for long periods of time and some test cases may not be executed. This paper introduces TC-Sched, a time-aware method for automated test case execution scheduling. TC-Sched uses Constraint Programming to schedule tests to run on multiple machines constrained by the tests' access to shared resources, such as measurement or networking devices. The CP model is written in SICStus Prolog and uses the Cumulatives global constraint. Given a set of test cases, a set of machines, and a set of shared resources, TC-Sched produces an execution schedule where each test is executed once with minimal time between when a source code change is committed and the test results are reported to the developer. Experiments reveal that TC-Sched can schedule 500 test cases over 100 machines in less than 4 minutes for 99.5% of the instances. In addition, TC-Sched largely outperforms simpler methods based on a greedy algorithm and is suitable for deployment on industrial robot testing.

* Published in the 23rd International Conference on Principles and Practice of Constraint Programming (CP 2017)

Reinforcement Learning for Automatic Test Case Prioritization and Selection in Continuous Integration

Nov 09, 2018

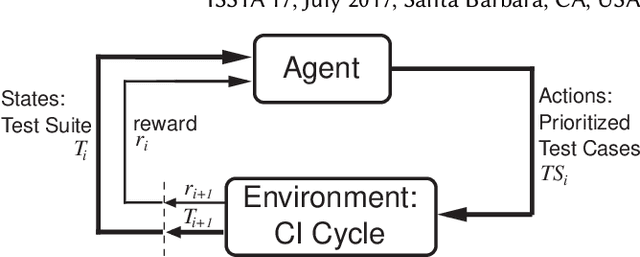

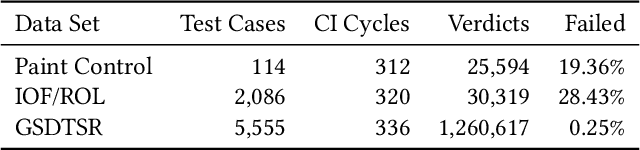

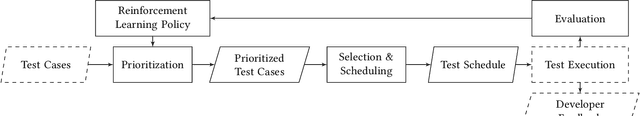

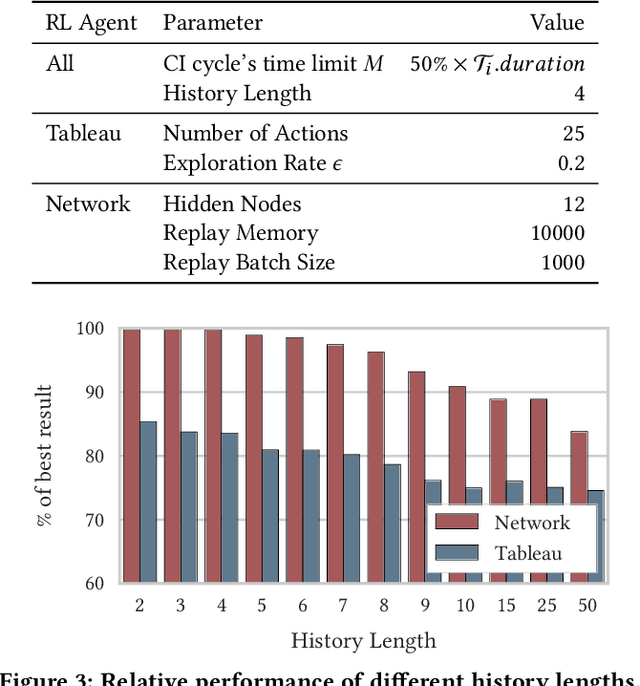

Abstract:Testing in Continuous Integration (CI) involves test case prioritization, selection, and execution at each cycle. Selecting the most promising test cases to detect bugs is hard if there are uncertainties on the impact of committed code changes or, if traceability links between code and tests are not available. This paper introduces Retecs, a new method for automatically learning test case selection and prioritization in CI with the goal to minimize the round-trip time between code commits and developer feedback on failed test cases. The Retecs method uses reinforcement learning to select and prioritize test cases according to their duration, previous last execution and failure history. In a constantly changing environment, where new test cases are created and obsolete test cases are deleted, the Retecs method learns to prioritize error-prone test cases higher under guidance of a reward function and by observing previous CI cycles. By applying Retecs on data extracted from three industrial case studies, we show for the first time that reinforcement learning enables fruitful automatic adaptive test case selection and prioritization in CI and regression testing.

Rotational Diversity in Multi-Cycle Assignment Problems

Nov 08, 2018

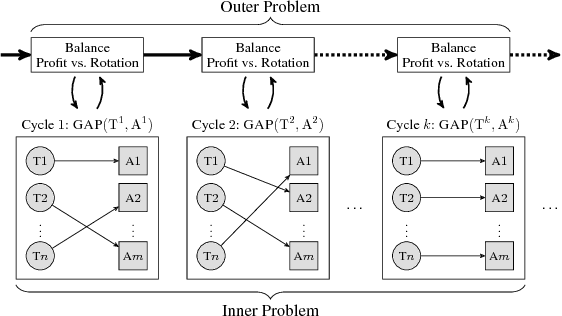

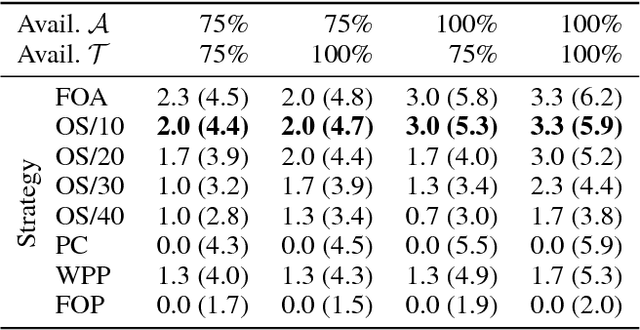

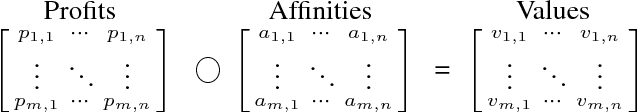

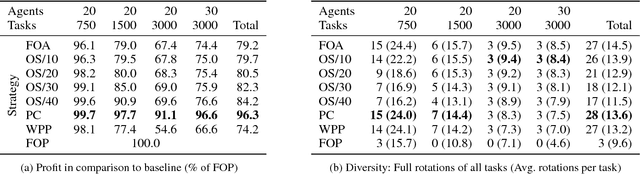

Abstract:In multi-cycle assignment problems with rotational diversity, a set of tasks has to be repeatedly assigned to a set of agents. Over multiple cycles, the goal is to achieve a high diversity of assignments from tasks to agents. At the same time, the assignments' profit has to be maximized in each cycle. Due to changing availability of tasks and agents, planning ahead is infeasible and each cycle is an independent assignment problem but influenced by previous choices. We approach the multi-cycle assignment problem as a two-part problem: Profit maximization and rotation are combined into one objective value, and then solved as a General Assignment Problem. Rotational diversity is maintained with a single execution of the costly assignment model. Our simple, yet effective method is applicable to different domains and applications. Experiments show the applicability on a multi-cycle variant of the multiple knapsack problem and a real-world case study on the test case selection and assignment problem, an example from the software engineering domain, where test cases have to be distributed over compatible test machines.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge