Mohammad Tahsin Mostafiz

The Potential of Wearable Sensors for Assessing Patient Acuity in Intensive Care Unit (ICU)

Nov 03, 2023

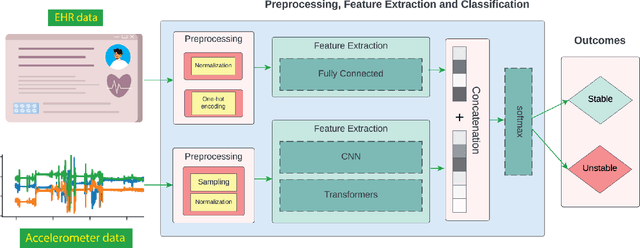

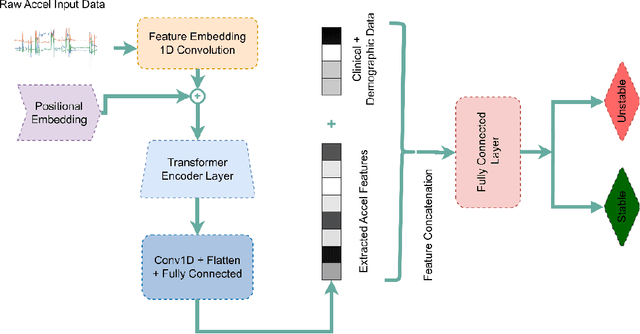

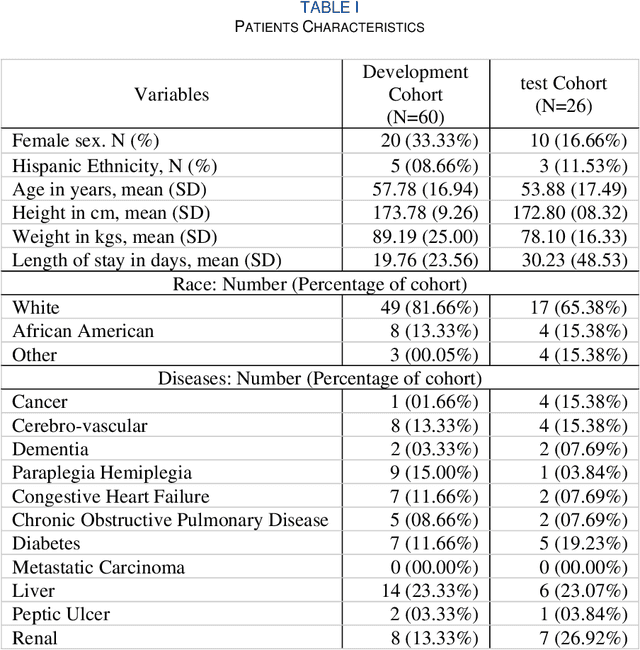

Abstract: Acuity assessments are vital in critical care settings to provide timely interventions and fair resource allocation. Traditional acuity scores rely on manual assessments and documentation of physiological states, which can be time-consuming, intermittent, and difficult for healthcare providers to use. Furthermore, such scores do not incorporate granular information such as patients' mobility level, which can indicate recovery or deterioration in the ICU. We hypothesized that existing acuity scores could be improved by employing Artificial Intelligence (AI) techniques in conjunction with Electronic Health Records (EHR) and wearable sensor data. In this study, we evaluated the impact of integrating mobility data collected from wrist-worn accelerometers with clinical data obtained from EHR for developing an AI-driven acuity assessment score. Accelerometry data were collected from 86 patients wearing accelerometers on their wrists in an academic hospital setting. The data were analyzed using five deep neural network models: VGG, ResNet, MobileNet, SqueezeNet, and a custom Transformer network. These models outperformed the Sequential Organ Failure Assessment (SOFA), a rule-based clinical score used as a baseline, particularly in precision, sensitivity, and F1 score. The results showed that while a model relying solely on accelerometer data achieved limited performance (AUC 0.50, Precision 0.61, and F1-score 0.68), including demographic information with the accelerometer data led to a notable enhancement in performance (AUC 0.69, Precision 0.75, and F1-score 0.67). This work shows that the combination of mobility and patient information can successfully differentiate between stable and unstable states in critically ill patients.
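The abstract reports precision and F1 for both model variants but not the sensitivity values themselves. As a rough illustration only, the sketch below back-calculates the sensitivity implied by each pair, assuming the reported F1 is the plain harmonic mean of precision and sensitivity for a single positive class. That assumption may not hold if the paper averages metrics across classes, and the inputs are rounded to two decimals, so the results are approximate. The function name `implied_sensitivity` is hypothetical and not taken from the paper.

```python
def implied_sensitivity(precision: float, f1: float) -> float:
    """Solve F1 = 2*P*R / (P + R) for R (sensitivity/recall).

    Assumes F1 is the harmonic mean of precision and sensitivity
    for one positive class; this is an assumption, not a statement
    about how the paper computed its metrics.
    """
    denominator = 2 * precision - f1
    if denominator <= 0:
        raise ValueError("No valid sensitivity exists for this precision/F1 pair")
    return f1 * precision / denominator


# Values as reported in the abstract (rounded to two decimals there).
accelerometer_only = implied_sensitivity(precision=0.61, f1=0.68)
with_demographics = implied_sensitivity(precision=0.75, f1=0.67)

print(f"accelerometer only: {accelerometer_only:.2f}")
print(f"with demographics:  {with_demographics:.2f}")
```

Under these assumptions, the nearly unchanged F1 alongside higher precision would correspond to a lower implied sensitivity for the model that includes demographics; the paper's own tables remain the authoritative source for these figures.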

EVHA: Explainable Vision System for Hardware Testing and Assurance -- An Overview

Jul 20, 2022

Abstract: Due to the ever-growing demand for electronic chips in different sectors, semiconductor companies have had to offshore their manufacturing processes. This unwanted development has made the security and trustworthiness of their fabricated chips a concern and has given rise to hardware attacks. Under these conditions, different entities in the semiconductor supply chain can act maliciously and execute an attack on the design computing layers, from devices to systems. The attack we consider is a hardware Trojan that is inserted during mask generation/fabrication in an untrusted foundry. The Trojan leaves a footprint in the fabricated chip through the addition, deletion, or change of design cells. To tackle this problem, we propose the Explainable Vision System for Hardware Testing and Assurance (EVHA), which can detect the smallest possible change to a design in a low-cost, accurate, and fast manner. The inputs to this system are Scanning Electron Microscopy (SEM) images acquired from the Integrated Circuits (ICs) under examination. The system output is a determination of the IC status: whether it contains any defect and/or hardware Trojan introduced through the addition, deletion, or change of design cells at the cell level. This article provides an overview of the design, development, implementation, and analysis of our defense system.
