Mohamed S. Shehata

MedDChest: A Content-Aware Multimodal Foundational Vision Model for Thoracic Imaging

Nov 06, 2025

Abstract: The performance of vision models in medical imaging is often hindered by the prevailing paradigm of fine-tuning backbones pre-trained on out-of-domain natural images. To address this fundamental domain gap, we propose MedDChest, a new foundational Vision Transformer (ViT) model optimized specifically for thoracic imaging. We pre-trained MedDChest from scratch on a massive, curated, multimodal dataset of over 1.2 million images, encompassing different modalities including Chest X-ray and Computed Tomography (CT) compiled from 10 public sources. A core technical contribution of our work is Guided Random Resized Crops, a novel content-aware data augmentation strategy that biases sampling towards anatomically relevant regions, overcoming the inefficiency of standard cropping techniques on medical scans. We validate our model's effectiveness by fine-tuning it on a diverse set of downstream diagnostic tasks. Comprehensive experiments empirically demonstrate that MedDChest significantly outperforms strong, publicly available ImageNet-pretrained models. By establishing the superiority of large-scale, in-domain pre-training combined with domain-specific data augmentation, MedDChest provides a powerful and robust feature extractor that serves as a significantly better starting point for a wide array of thoracic diagnostic tasks. The model weights will be made publicly available to foster future research and applications.
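The abstract does not describe how Guided Random Resized Crops chooses its regions. The following is only a minimal sketch of the general idea of content-aware cropping, assuming a binary foreground mask (for example, a rough body or lung mask) is available for each image. The function name `guided_random_resized_crop`, the `p_guided` parameter, and the mask-based center sampling are hypothetical and are not taken from the paper; the `scale` and `ratio` defaults mirror those commonly used for standard random resized crops.

```python
import math
import random


def guided_random_resized_crop(mask, scale=(0.08, 1.0), ratio=(3 / 4, 4 / 3),
                               p_guided=0.8, rng=None):
    """Return a crop box (top, left, height, width) biased toward foreground.

    `mask` is a 2D list of 0/1 values marking relevant pixels (assumed input).
    With probability `p_guided` the crop is centered on a foreground pixel;
    otherwise the center is drawn uniformly, as in a plain random crop.
    """
    rng = rng or random.Random()
    height, width = len(mask), len(mask[0])

    # Sample crop area and aspect ratio as in a standard random resized crop.
    target_area = height * width * rng.uniform(*scale)
    aspect = math.exp(rng.uniform(math.log(ratio[0]), math.log(ratio[1])))
    crop_w = min(width, max(1, round(math.sqrt(target_area * aspect))))
    crop_h = min(height, max(1, round(math.sqrt(target_area / aspect))))

    # Choose the crop center: guided by the mask, or uniform as a fallback.
    foreground = [(r, c) for r in range(height) for c in range(width) if mask[r][c]]
    if foreground and rng.random() < p_guided:
        center_y, center_x = rng.choice(foreground)
    else:
        center_y, center_x = rng.randrange(height), rng.randrange(width)

    # Clamp the box so that it stays inside the image.
    top = min(max(center_y - crop_h // 2, 0), height - crop_h)
    left = min(max(center_x - crop_w // 2, 0), width - crop_w)
    return top, left, crop_h, crop_w
```

In this sketch, a guided crop always contains the sampled foreground pixel, so crops that land entirely on empty background become less likely as `p_guided` increases.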

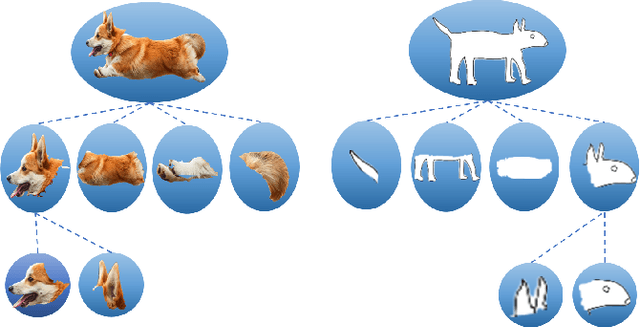

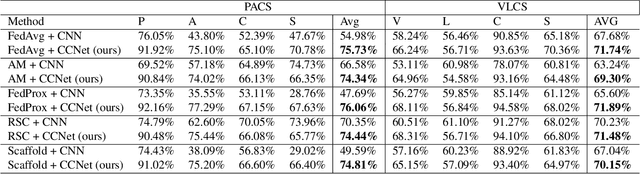

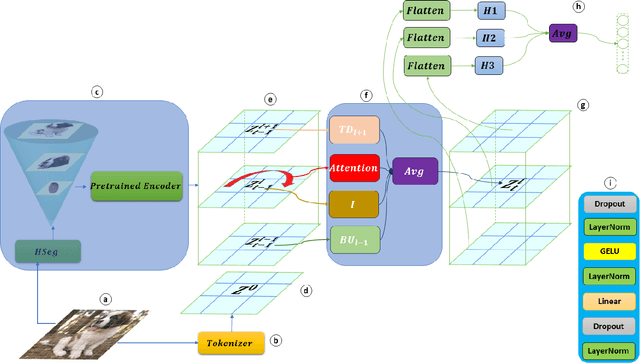

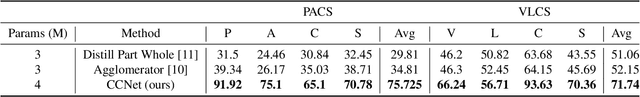

FedPartWhole: Federated domain generalization via consistent part-whole hierarchies

Jul 20, 2024

Abstract: Federated Domain Generalization (FedDG) aims to tackle the challenge of generalizing to unseen domains at test time while respecting the data privacy constraints that prevent data from different domains, originating at various clients, from being stored centrally. Existing approaches can be broadly categorized into four groups: domain alignment, data manipulation, learning strategies, and optimization of model aggregation weights. This paper proposes a novel approach to Federated Domain Generalization that tackles the problem from the perspective of the backbone model architecture. The core principle is that objects, even under substantial domain shifts and appearance variations, maintain a consistent hierarchical structure of parts and wholes. For instance, a photograph and a sketch of a dog share the same hierarchical organization, consisting of a head, body, limbs, and so on. The introduced architecture explicitly incorporates a feature representation for the image parse tree. To the best of our knowledge, this is the first work to tackle Federated Domain Generalization from a model architecture standpoint. Our approach outperforms a convolutional architecture of comparable size by over 12%, despite using fewer parameters. Additionally, it is inherently interpretable, in contrast to the black-box nature of CNNs, which fosters trust in its predictions, a crucial asset in federated learning.

Universal Medical Imaging Model for Domain Generalization with Data Privacy

Jul 20, 2024

Abstract: Achieving domain generalization in medical imaging poses a significant challenge, primarily due to the limited availability of publicly available labeled datasets in this domain. This limitation arises from concerns related to data privacy and the need for medical expertise to label the data accurately. In this paper, we propose a federated learning approach to transfer knowledge from multiple local models to a global model, eliminating the need for direct access to the local datasets used to train each model. The primary objective is to train a global model capable of performing a wide variety of medical imaging tasks while ensuring the confidentiality of the private datasets used to train the local models. To validate the effectiveness of our approach, extensive experiments were conducted on eight datasets, each corresponding to a different medical imaging application. The clients' data distributions in our experiments vary significantly, as they originate from diverse domains. Despite this variation, we demonstrate a statistically significant improvement over a state-of-the-art baseline utilizing masked image modeling over a diverse pre-training dataset that spans different body parts and scanning types. This improvement is achieved by curating information learned from clients without accessing any labeled dataset on the server.

MedMAE: A Self-Supervised Backbone for Medical Imaging Tasks

Jul 20, 2024

Abstract: Medical imaging tasks are very challenging due to the lack of publicly available labeled datasets. Hence, it is difficult to achieve high performance with existing deep-learning models, as they require a massive labeled dataset to be trained effectively. An alternative solution is to use pre-trained models and fine-tune them on the medical imaging dataset. However, all existing models are pre-trained on natural images, a completely different domain from medical imaging, which leads to poor performance due to domain shift. To overcome these problems, we propose a large-scale unlabeled dataset of medical images and a backbone pre-trained on the proposed dataset with a self-supervised learning technique called the masked autoencoder. This backbone can be used as a pre-trained model for any medical imaging task, as it is trained to learn a visual representation of different types of medical images. To evaluate the performance of the proposed backbone, we used four different medical imaging tasks. The results are compared with existing pre-trained models. These experiments show the superiority of our proposed backbone in medical imaging tasks.
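The masked autoencoder technique named in the abstract hides a random subset of image patches from the encoder and trains the model to reconstruct them. A minimal sketch of that random patch-masking step is shown below. The function name `random_patch_mask` is hypothetical, and the 0.75 default ratio follows the original masked autoencoder paper; it is an assumption here, since the abstract does not state the ratio used for MedMAE.

```python
import random


def random_patch_mask(num_patches, mask_ratio=0.75, rng=None):
    """Split patch indices into (visible, masked) lists for MAE-style training.

    The encoder would only see the visible patches; the decoder would be
    asked to reconstruct the masked ones.
    """
    rng = rng or random.Random()
    num_masked = int(num_patches * mask_ratio)

    # Shuffle all patch indices and hide the first `num_masked` of them.
    order = list(range(num_patches))
    rng.shuffle(order)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked
```

For example, a 224x224 image split into 16x16 patches has 196 patches, so a ratio of 0.75 leaves 49 visible patches and hides 147.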

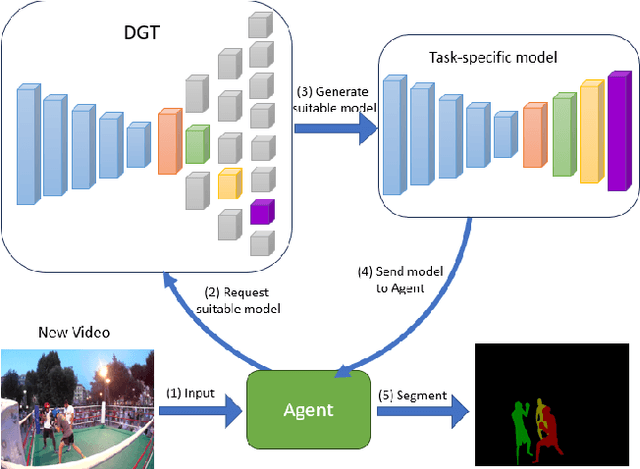

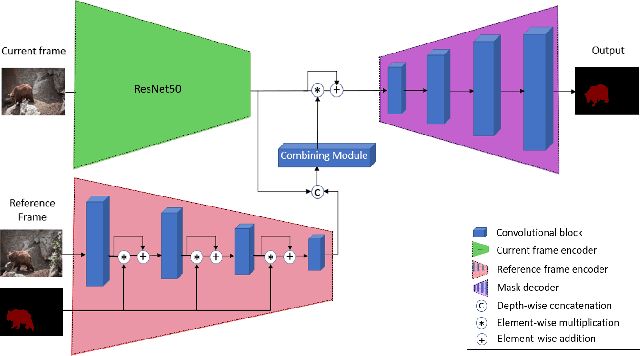

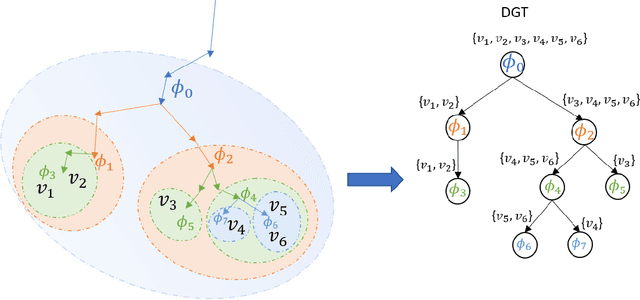

Lifelong Learning Using a Dynamically Growing Tree of Sub-networks for Domain Generalization in Video Object Segmentation

May 29, 2024

Abstract: Current state-of-the-art video object segmentation models have achieved great success using supervised learning with massive labeled training datasets. However, these models are trained using a single source domain and evaluated using videos sampled from the same source domain. When these models are evaluated using videos sampled from a different target domain, their performance degrades significantly due to poor domain generalization, i.e., their inability to learn from multi-domain sources simultaneously using traditional supervised learning. In this paper, we propose a dynamically growing tree of sub-networks (DGT) to learn effectively from multi-domain sources. DGT uses a novel lifelong learning technique that allows the model to continuously and effectively learn from new domains without forgetting the previously learned domains. Hence, the model can generalize to out-of-domain videos. The proposed work is evaluated using single-source in-domain (traditional video object segmentation), multi-source in-domain, and multi-source out-of-domain video object segmentation. The results of DGT show a single-source in-domain performance gain of 0.2% and 3.5% on the DAVIS16 and DAVIS17 datasets, respectively. When DGT is evaluated using in-domain multi-sources, the results show superior performance compared to state-of-the-art video object segmentation and other lifelong learning techniques, with an average increase in F-score of 6.9% and minimal catastrophic forgetting. Finally, in the out-of-domain experiment, the performance of DGT is 2.7% and 4% better than the state of the art in the 1-shot and 5-shot settings, respectively.

Automated Human Cell Classification in Sparse Datasets using Few-Shot Learning

Jul 27, 2021

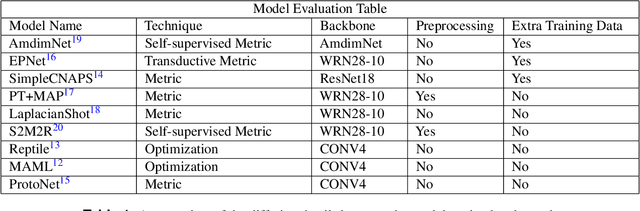

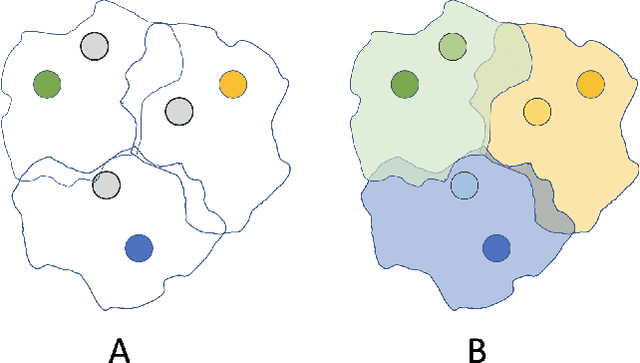

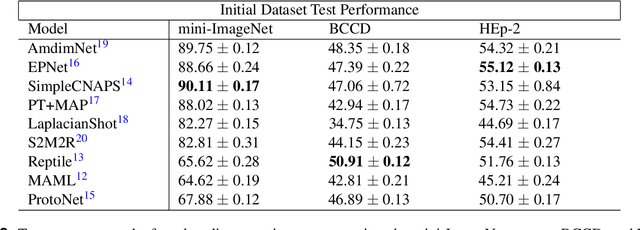

Abstract: Classifying and analyzing human cells is a lengthy procedure, often involving a trained professional. In an attempt to expedite this process, an active area of research involves automating cell classification through the use of deep learning-based techniques. In practice, a large amount of data is required to accurately train these deep learning models. However, due to the sparse human cell datasets currently available, the performance of these models is typically low. This study investigates the feasibility of using few-shot learning-based techniques to mitigate the data requirements for accurate training. The study comprises three parts. First, current state-of-the-art few-shot learning techniques are evaluated on human cell classification. The selected techniques are trained on a non-medical dataset and then tested on two out-of-domain human cell datasets. The results indicate that, overall, the test accuracy of state-of-the-art techniques decreased by at least 30% when transitioning from a non-medical dataset to a medical dataset. Second, this study evaluates the potential benefits, if any, of varying the backbone architecture and training schemes in current state-of-the-art few-shot learning techniques when used in human cell classification. Even with these variations, the overall test accuracy decreased from 88.66% on non-medical datasets to 44.13% at best on the medical datasets. Third, this study presents future directions for using few-shot learning in human cell classification. In general, few-shot learning in its current state performs poorly on human cell classification. The study proves that attempts to modify existing network architectures are not effective and concludes that future research effort should be focused on improving robustness towards out-of-domain testing using optimization-based or self-supervised few-shot learning techniques.

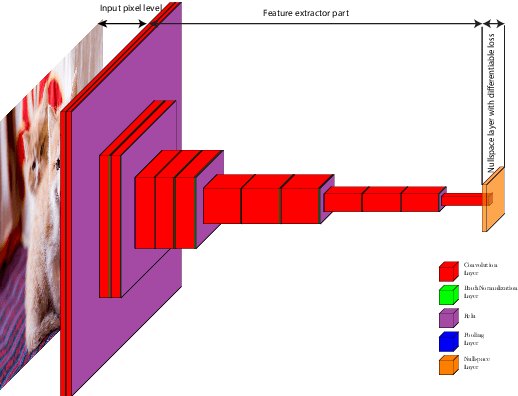

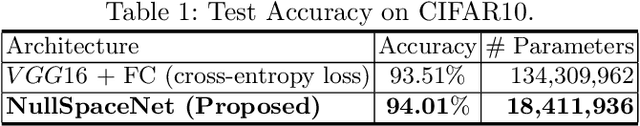

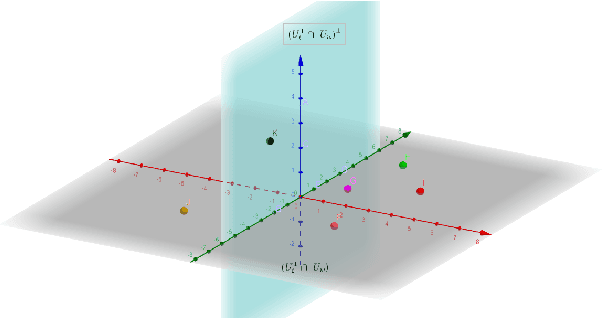

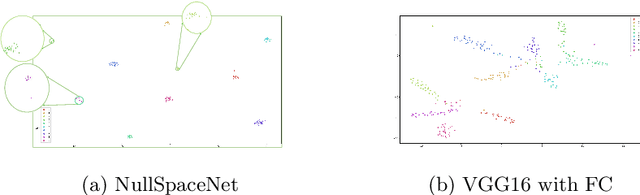

NullSpaceNet: Nullspace Convolutional Neural Network with Differentiable Loss Function

Apr 25, 2020

Abstract: We propose NullSpaceNet, a novel network that maps from the pixel-level input to a joint-nullspace (as opposed to the traditional feature space), where the newly learned joint-nullspace features have a clearer interpretation and are more separable. NullSpaceNet ensures that all inputs from the same class are collapsed into one point in this new joint-nullspace, and the different classes are collapsed into different points with high separation margins. Moreover, a novel differentiable loss function is proposed that has a closed-form solution with no free parameters. NullSpaceNet exhibits superior performance when tested against VGG16 with a fully connected layer on 4 different datasets, with an accuracy gain of up to 4.55%, a reduction in learnable parameters from 135M to 19M, and a reduction in inference time of 99% in favor of NullSpaceNet. This means that NullSpaceNet needs less than 1% of the time it takes a traditional CNN to classify a batch of images, with better accuracy.

DomainSiam: Domain-Aware Siamese Network for Visual Object Tracking

Aug 21, 2019

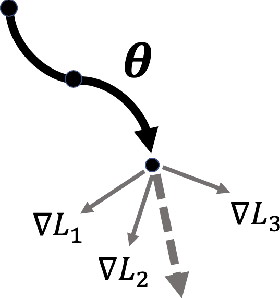

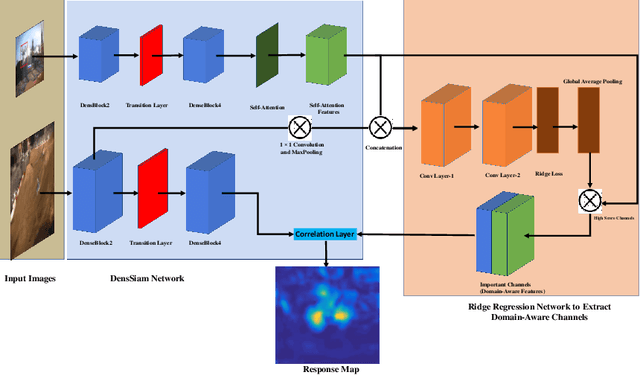

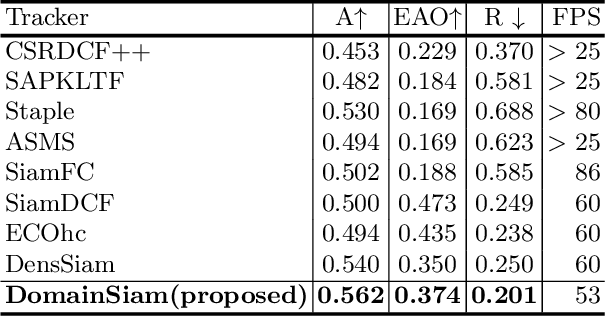

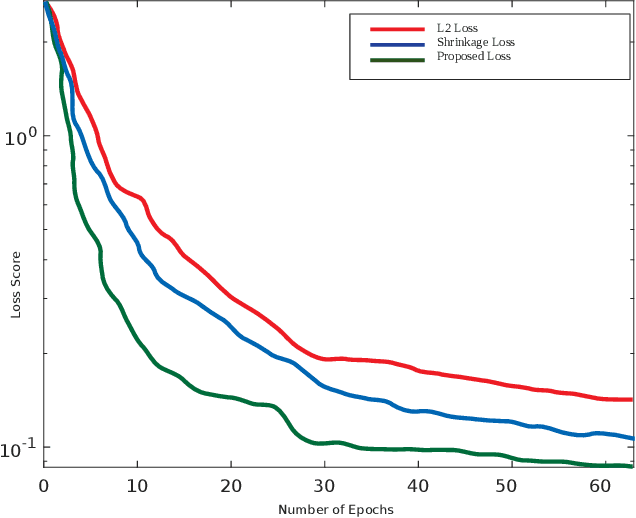

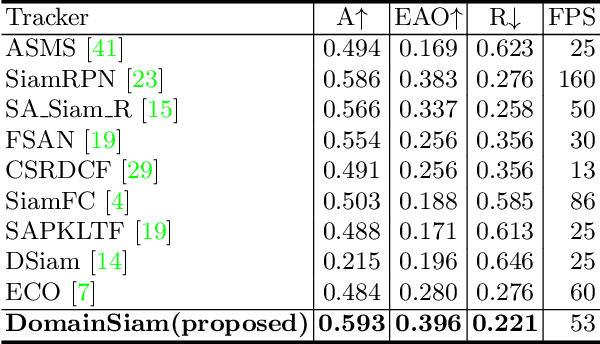

Abstract: Visual object tracking is a fundamental task in the field of computer vision. Recently, Siamese trackers have achieved state-of-the-art performance on recent benchmarks. However, Siamese trackers do not fully utilize semantic and objectness information from pre-trained networks that have been trained on the image classification task. Furthermore, the pre-trained Siamese architecture is sparsely activated by the category label, which leads to unnecessary calculations and overfitting. In this paper, we propose to learn a domain-aware representation that fully utilizes semantic and objectness information while remaining class-agnostic, using a ridge regression network. Moreover, to reduce the sparsity problem, we solve the ridge regression problem with a differentiable weighted-dynamic loss function. Our tracker, dubbed DomainSiam, improves feature learning in the training phase and the generalization capability to other domains. Extensive experiments are performed on five tracking benchmarks including OTB2013 and OTB2015 as a validation set, as well as VOT2017, VOT2018, LaSOT, TrackingNet, and GOT10k as a testing set. DomainSiam achieves state-of-the-art performance on these benchmarks while running at 53 FPS.

* 13 pages

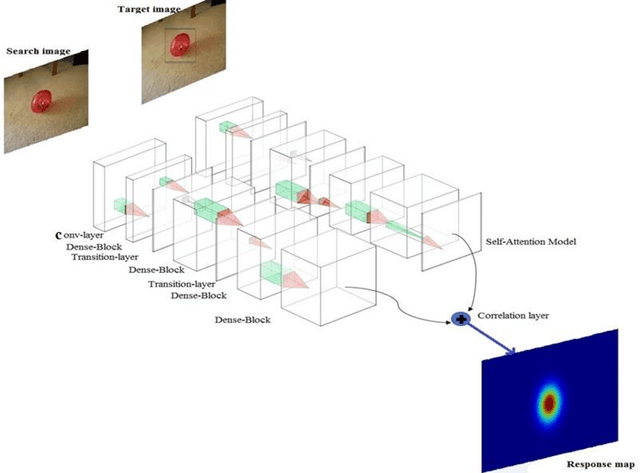

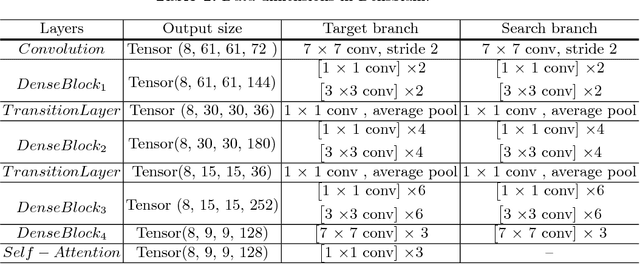

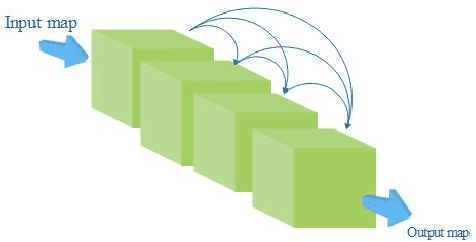

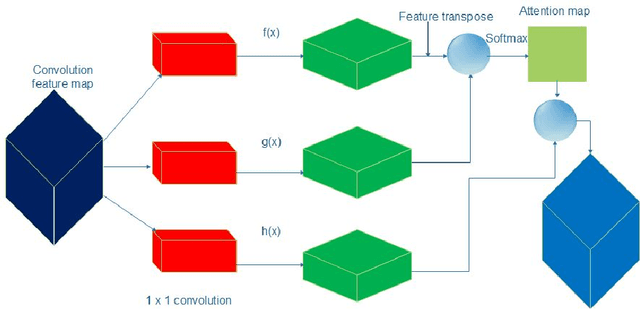

DensSiam: End-to-End Densely-Siamese Network with Self-Attention Model for Object Tracking

Sep 07, 2018

Abstract: Convolutional Siamese neural networks have recently been used to track objects using deep features. A Siamese architecture can achieve real-time speed; however, it is still difficult to find a Siamese architecture that maintains generalization capability, high accuracy, and speed while decreasing the number of shared parameters, especially when it is very deep. Furthermore, a conventional Siamese architecture usually processes one local neighborhood at a time, which makes the appearance model local and non-robust to appearance changes. To overcome these two problems, this paper proposes DensSiam, a novel convolutional Siamese architecture, which uses the concept of dense layers and connects each dense layer to all layers in a feed-forward fashion with a similarity-learning function. DensSiam also includes a Self-Attention mechanism to force the network to pay more attention to the non-local features during offline training. Extensive experiments are performed on four tracking benchmarks: OTB2013 and OTB2015 as the validation set, and VOT2015, VOT2016, and VOT2017 as the testing set. The obtained results show that DensSiam achieves superior results on these benchmarks compared to other current state-of-the-art methods.
