Miguel Saavedra-Ruiz

The Harmonic Exponential Filter for Nonparametric Estimation on Motion Groups

Aug 01, 2024

Abstract: Bayesian estimation is a vital tool in robotics as it allows systems to update the belief of the robot state using incomplete information from noisy sensors. To render the state estimation problem tractable, many systems assume that the motion and measurement noise, as well as the state distribution, are all unimodal and Gaussian. However, there are numerous scenarios and systems that do not comply with these assumptions. Existing non-parametric filters that are used to model multimodal distributions have drawbacks that limit their ability to represent a diverse set of distributions. In this paper, we introduce a novel approach to nonparametric Bayesian filtering to cope with multimodal distributions using harmonic exponential distributions. This approach leverages two key insights of harmonic exponential distributions: a) the product of two distributions can be expressed as the element-wise addition of their log-likelihood Fourier coefficients, and b) the convolution of two distributions can be efficiently computed as the tensor product of their Fourier coefficients. These observations enable the development of an efficient and exact solution to the Bayes filter up to the band limit of a Fourier transform. We demonstrate our filter's superior performance compared with established nonparametric filtering methods across a range of simulated and real-world localization tasks.
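The two insights in the abstract can be illustrated on the simplest possible case, the circle S^1. The following is a minimal sketch, not the paper's implementation. It assumes NumPy, a uniform grid of `N` samples, and von Mises noise models, and the helper names (`predict`, `update`, `von_mises`) are hypothetical. Because the circle is a commutative group, convolution reduces to an element-wise product of Fourier coefficients, which is a simplification of the motion-group setting the abstract addresses.

```python
import numpy as np

N = 128                                   # grid size; fixes the band limit
theta = np.linspace(0.0, 2.0 * np.pi, N, endpoint=False)
dtheta = 2.0 * np.pi / N

def normalize(p):
    """Scale a sampled density so it integrates to 1 over the circle."""
    return p / (p.sum() * dtheta)

def von_mises(mu, kappa):
    """Sampled von Mises density (illustrative noise model, an assumption)."""
    return normalize(np.exp(kappa * np.cos(theta - mu)))

def predict(belief, motion):
    """Prediction: circular convolution via a product of Fourier coefficients."""
    conv = np.fft.ifft(np.fft.fft(belief) * np.fft.fft(motion)).real * dtheta
    return normalize(np.clip(conv, 1e-300, None))  # guard against round-off

def update(belief, likelihood):
    """Update: add the Fourier coefficients of the two log-densities."""
    eta = np.fft.fft(np.log(belief)) + np.fft.fft(np.log(likelihood))
    log_post = np.fft.ifft(eta).real
    return normalize(np.exp(log_post - log_post.max()))

# Bimodal prior with modes at 1.0 and 4.0 rad.
prior = normalize(0.5 * von_mises(1.0, 20.0) + 0.5 * von_mises(4.0, 20.0))
# Motion of +0.5 rad with some noise shifts both modes.
predicted = predict(prior, von_mises(0.5, 50.0))
# A measurement near 4.5 rad selects the second mode.
posterior = update(predicted, von_mises(4.5, 10.0))
print("posterior mode (rad):", theta[np.argmax(posterior)])
```

In this sketch the belief stays multimodal through the prediction step and only collapses to one mode once the measurement disambiguates it, which is the behaviour a unimodal Gaussian filter cannot represent.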

One-4-All: Neural Potential Fields for Embodied Navigation

Mar 07, 2023

Abstract: A fundamental task in robotics is to navigate between two locations. In particular, real-world navigation can require long-horizon planning using high-dimensional RGB images, which poses a substantial challenge for end-to-end learning-based approaches. Current semi-parametric methods instead achieve long-horizon navigation by combining learned modules with a topological memory of the environment, often represented as a graph over previously collected images. However, using these graphs in practice typically involves tuning a number of pruning heuristics to avoid spurious edges, limit runtime memory usage and allow reasonably fast graph queries. In this work, we present One-4-All (O4A), a method leveraging self-supervised and manifold learning to obtain a graph-free, end-to-end navigation pipeline in which the goal is specified as an image. Navigation is achieved by greedily minimizing a potential function defined continuously over the O4A latent space. Our system is trained offline on non-expert exploration sequences of RGB data and controls, and does not require any depth or pose measurements. We show that O4A can reach long-range goals in 8 simulated Gibson indoor environments, and further demonstrate successful real-world navigation using a Jackal UGV platform.
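The idea of greedily minimizing a potential function can be sketched with a toy example. Everything below is an assumption made for illustration: the latent space is a 2D plane, the potential is the Euclidean distance to the goal, and the forward model simply adds a fixed displacement per action. None of these stand in for the learned O4A modules.

```python
import numpy as np

# Hypothetical discrete action set with toy latent displacements.
ACTIONS = {
    "right": np.array([1.0, 0.0]),
    "left": np.array([-1.0, 0.0]),
    "up": np.array([0.0, 1.0]),
    "down": np.array([0.0, -1.0]),
}

def potential(latent, goal):
    """Toy potential: Euclidean distance to the goal latent (an assumption)."""
    return float(np.linalg.norm(latent - goal))

def forward_model(latent, action):
    """Toy forward model: predicted next latent after taking an action."""
    return latent + ACTIONS[action]

def greedy_navigate(start, goal, max_steps=50, tol=0.5):
    """Repeatedly take the action whose predicted latent has the lowest potential."""
    path = [np.asarray(start, dtype=float)]
    goal = np.asarray(goal, dtype=float)
    for _ in range(max_steps):
        if potential(path[-1], goal) < tol:
            break  # close enough to the goal
        best = min(ACTIONS, key=lambda a: potential(forward_model(path[-1], a), goal))
        path.append(forward_model(path[-1], best))
    return path

path = greedy_navigate(start=[0.0, 0.0], goal=[3.0, 2.0])
print("steps taken:", len(path) - 1, "final latent:", path[-1])
```

No graph is built or queried at any point: each decision only needs the potential evaluated at a handful of predicted latents, which is the practical appeal of a graph-free pipeline.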

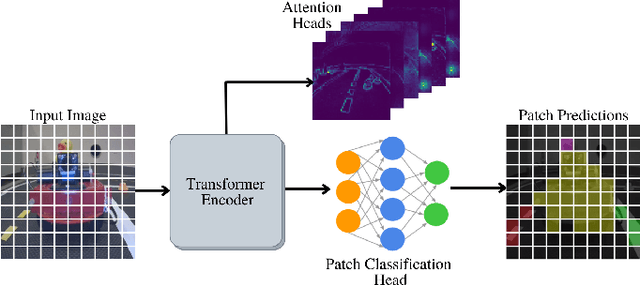

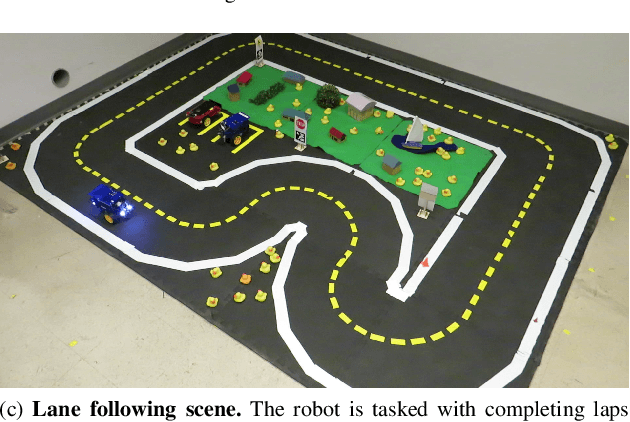

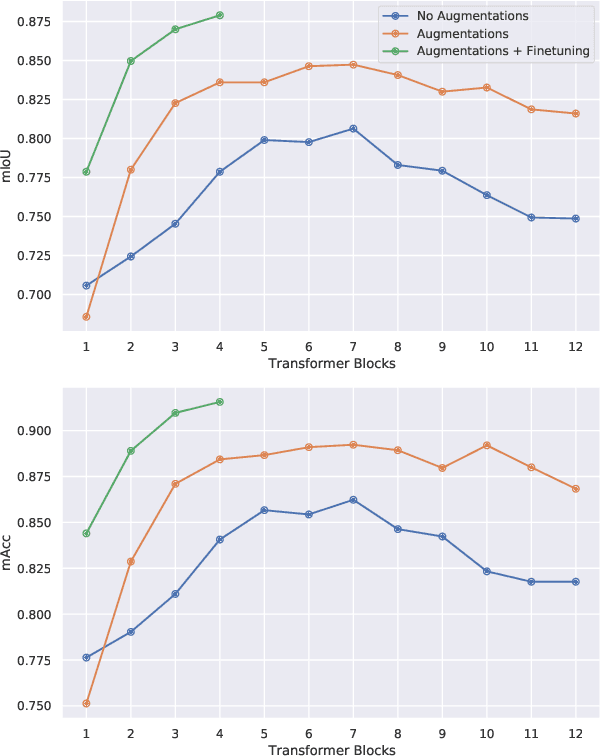

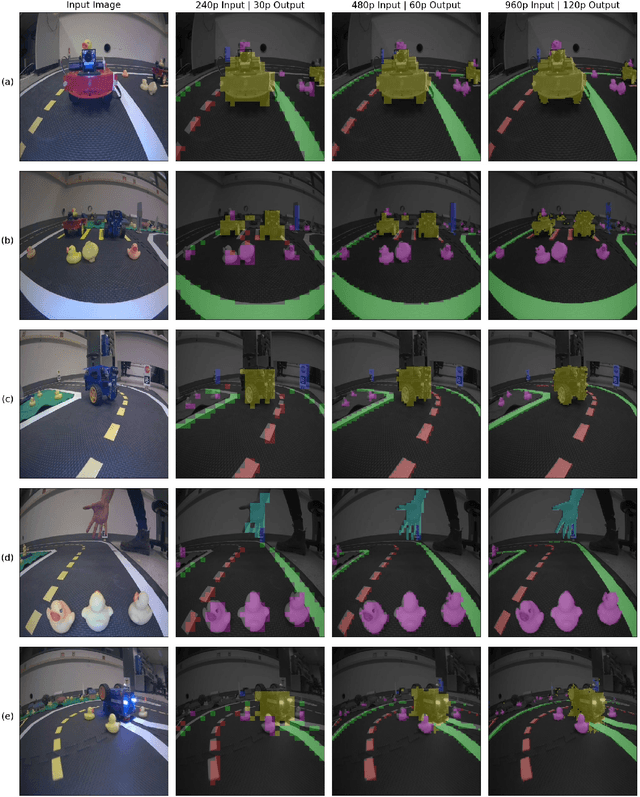

Monocular Robot Navigation with Self-Supervised Pretrained Vision Transformers

Mar 07, 2022

Abstract: In this work, we consider the problem of learning a perception model for monocular robot navigation using few annotated images. Using a Vision Transformer (ViT) pretrained with a label-free self-supervised method, we successfully train a coarse image segmentation model for the Duckietown environment using 70 training images. Our model performs coarse image segmentation at the 8x8 patch level, and the inference resolution can be adjusted to balance prediction granularity and real-time perception constraints. We study how best to adapt a ViT to our task and environment, and find that some lightweight architectures can yield good single-image segmentations at a usable frame rate, even on CPU. The resulting perception model is used as the backbone for a simple yet robust visual servoing agent, which we deploy on a differential drive mobile robot to perform two tasks: lane following and obstacle avoidance.
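The trade-off between inference resolution and prediction granularity follows from simple patch arithmetic, sketched below. The 8x8 patch size comes from the abstract; the image resolutions and the helper names `patch_grid_shape` and `upsample_patch_labels` are hypothetical and chosen only for illustration.

```python
import numpy as np

PATCH = 8  # patch side in pixels, as stated in the abstract

def patch_grid_shape(height, width, patch=PATCH):
    """Number of patch-level predictions along each axis of the image."""
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    return height // patch, width // patch

def upsample_patch_labels(labels, patch=PATCH):
    """Expand a coarse per-patch label map to a per-pixel mask."""
    return np.repeat(np.repeat(labels, patch, axis=0), patch, axis=1)

# Hypothetical resolutions: doubling each side quadruples the patch count.
for h, w in [(240, 320), (480, 640)]:
    rows, cols = patch_grid_shape(h, w)
    print(f"{h}x{w} image -> {rows}x{cols} grid, {rows * cols} patches")

coarse = np.zeros(patch_grid_shape(240, 320), dtype=int)
coarse[20:, :] = 1  # pretend the bottom rows of patches are labelled as road
mask = upsample_patch_labels(coarse)
```

Since the number of patches a ViT processes grows with the image area, lowering the inference resolution reduces compute at the cost of a coarser segmentation grid.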

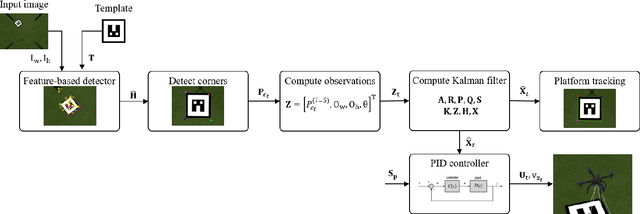

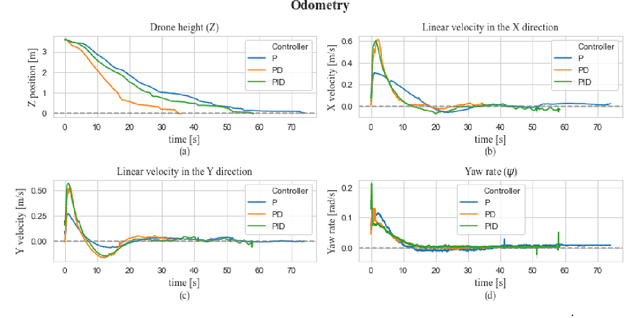

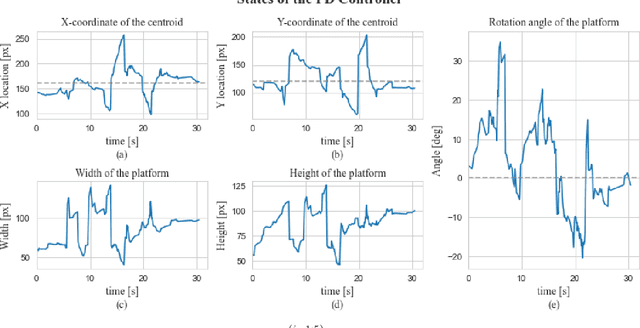

Monocular visual autonomous landing system for quadcopter drones using software in the loop

Aug 14, 2021

Abstract: Autonomous landing is a capability that is essential to achieve the full potential of multi-rotor drones in many social and industrial applications. The implementation and testing of this capability on physical platforms is risky and resource-intensive; hence, in order to ensure both a sound design process and a safe deployment, simulations are required before implementing a physical prototype. This paper presents the development of a monocular visual system, using a software-in-the-loop methodology, that autonomously and efficiently lands a quadcopter drone on a predefined landing pad, thus reducing the risks of the physical testing stage. In addition to ensuring that the autonomous landing system as a whole fulfils the design requirements using a Gazebo-based simulation, our approach provides a tool for safe parameter tuning and design testing prior to physical implementation. Finally, the proposed monocular vision-only approach to landing pad tracking made it possible to effectively implement the system in an F450 quadcopter drone with the standard computational capabilities of an Odroid XU4 embedded processor.
