Miguel Domingo

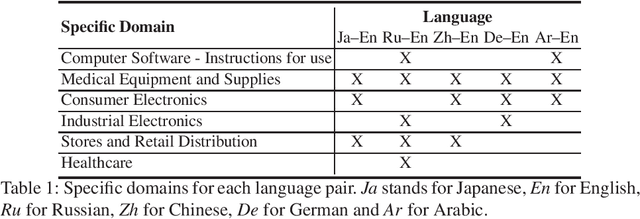

Findings of the Covid-19 MLIA Machine Translation Task

Nov 14, 2022

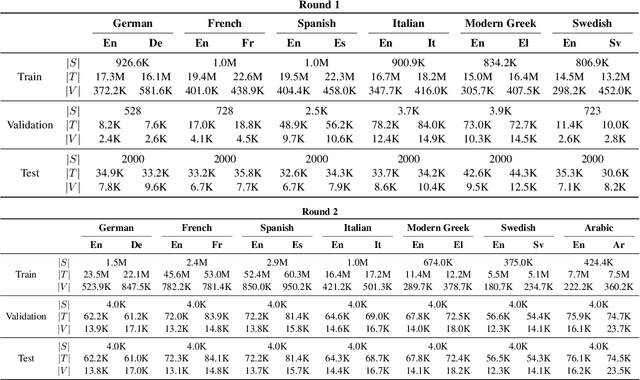

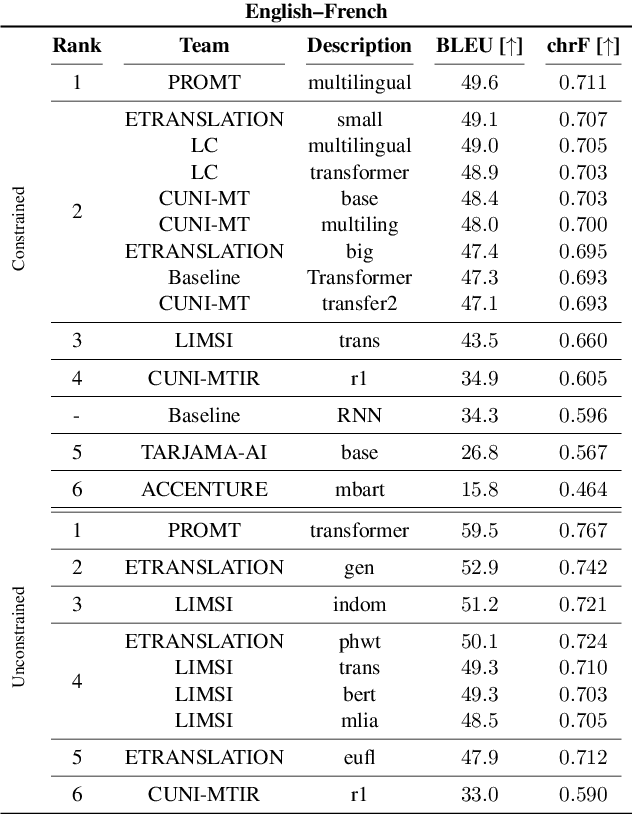

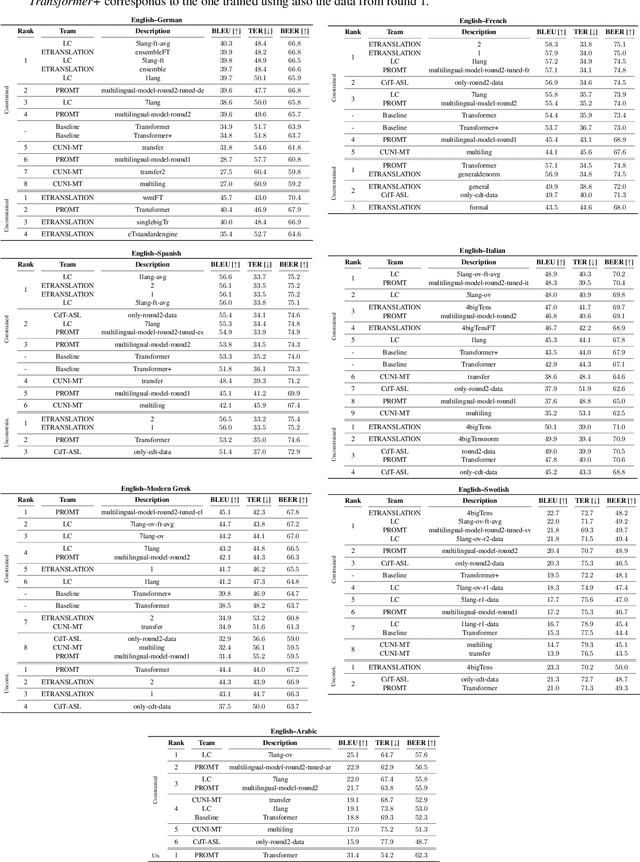

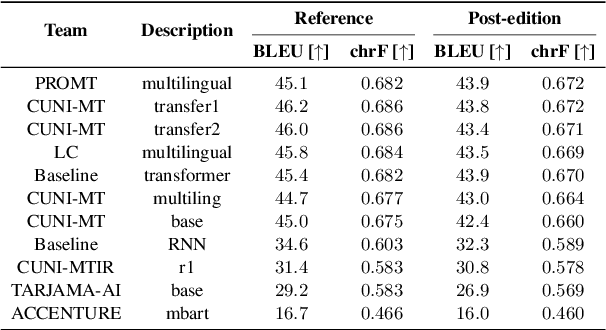

Abstract: This work presents the results of the machine translation (MT) task from the Covid-19 MLIA @ Eval initiative, a community effort to improve the generation of MT systems focused on the current Covid-19 crisis. Nine teams took part in this event, which was divided into two rounds and involved seven different language pairs. Two different scenarios were considered: one in which only the provided data was allowed, and a second one in which the use of external resources was allowed. Overall, the best approaches were based on multilingual models and transfer learning, with an emphasis on the importance of applying a cleaning process to the training data.

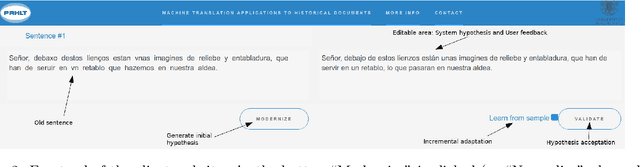

Two Demonstrations of the Machine Translation Applications to Historical Documents

Feb 02, 2021

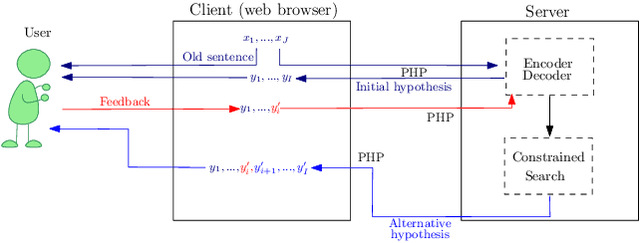

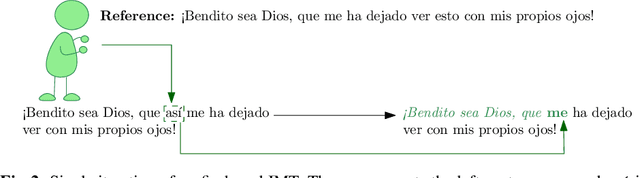

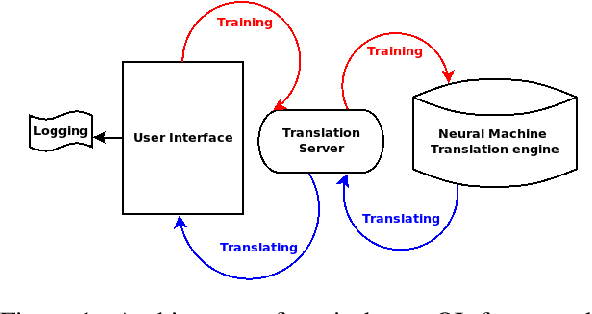

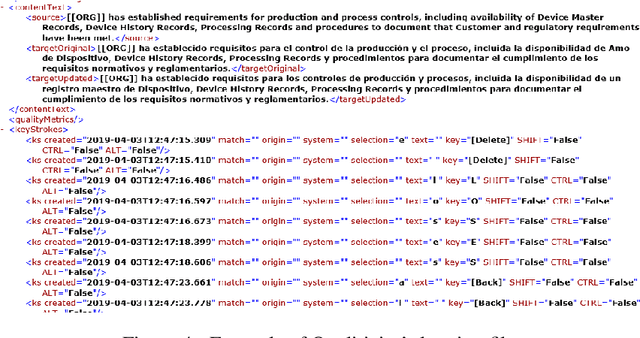

Abstract: We present our demonstration of two machine translation applications to historical documents. The first application consists of generating a new version of a historical document, written in the modern version of its original language. The second application is limited to a document's orthography. It adapts the document's spelling to modern standards in order to achieve orthographic consistency and account for the lack of spelling conventions. We followed an interactive, adaptive framework that allows the user to introduce corrections to the system's hypothesis. The system reacts to these corrections by generating a new hypothesis that takes them into account. Once the user is satisfied with the system's hypothesis and validates it, the system adapts its model following an online learning strategy. This system is implemented following a client-server architecture. We developed a website which communicates with the neural models. All code is open-source and publicly available. The demonstration is hosted at http://demosmt.prhlt.upv.es/mthd/.

An Interactive Machine Translation Framework for Modernizing Historical Documents

Oct 08, 2019

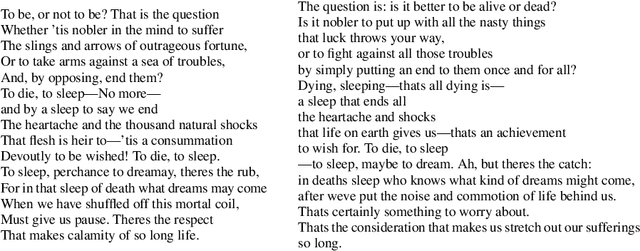

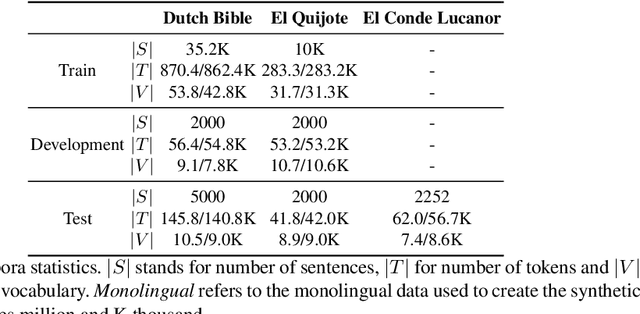

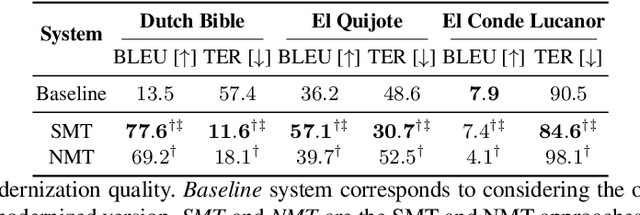

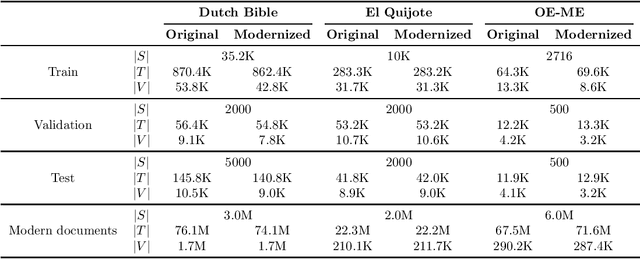

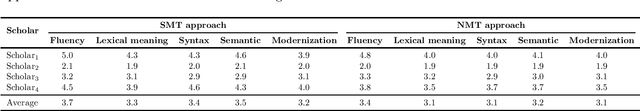

Abstract: Due to the nature of human language, historical documents are hard for contemporary people to comprehend. This limits their accessibility to scholars specialized in the time period in which the documents were written. Modernization aims at breaking this language barrier by generating a new version of a historical document, written in the modern version of the document's original language. However, while it is able to increase the document's comprehension, modernization is still far from producing an error-free version. In this work, we propose a collaborative framework in which a scholar can work together with the machine to generate the new version. We tested our approach in a simulated environment, achieving significant reductions in the human effort needed to produce the modernized version of the document.

Modernizing Historical Documents: a User Study

Jul 01, 2019

Abstract: Accessibility to historical documents is mostly limited to scholars. This is due to the language barrier inherent in human language and the linguistic properties of these documents. Given a historical document, modernization aims to generate a new version of it, written in the modern version of the document's language. Its goal is to tackle the language barrier, decreasing the comprehension difficulty and making historical documents accessible to a broader audience. In this work, we proposed a new neural machine translation approach that profits from modern documents to enrich its systems. We tested this approach with both automatic and human evaluation, and conducted a user study. Results showed that modernization is successfully reaching its goal, although it still has room for improvement.

Demonstration of a Neural Machine Translation System with Online Learning for Translators

Jun 21, 2019

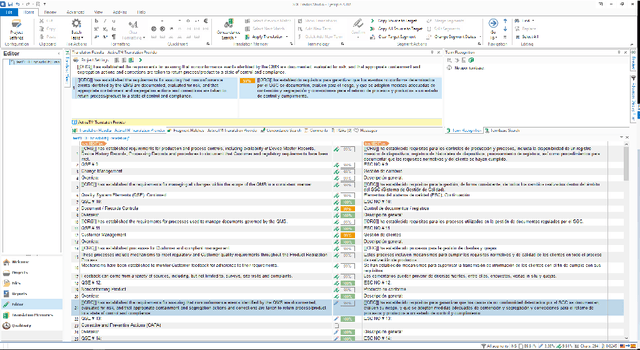

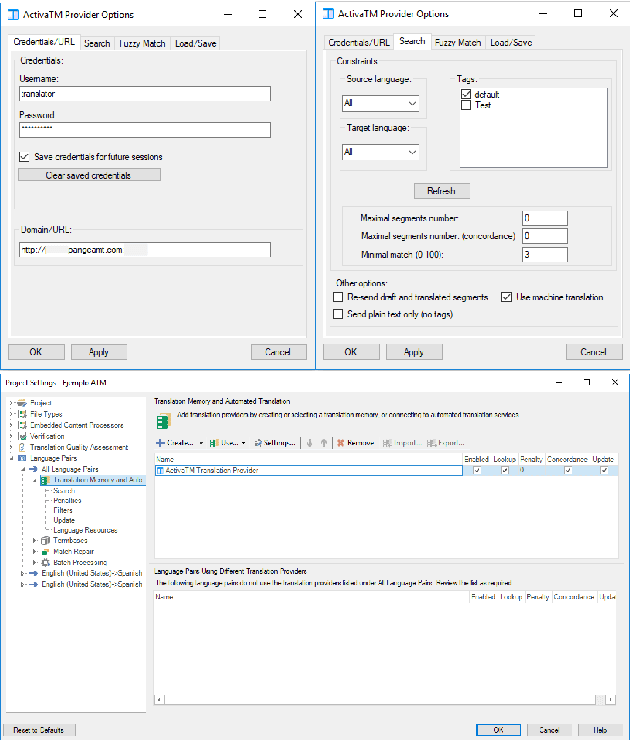

Abstract: We introduce a demonstration of our system, which implements online learning for neural machine translation in a production environment. These techniques allow the system to continuously learn from the corrections provided by the translators. We implemented an end-to-end platform integrating our machine translation servers into one of the most common user interfaces for professional translators: SDL Trados Studio. Our objective was to save post-editing effort as the machine is continuously learning from human choices and adapting the models to a specific domain or user style.

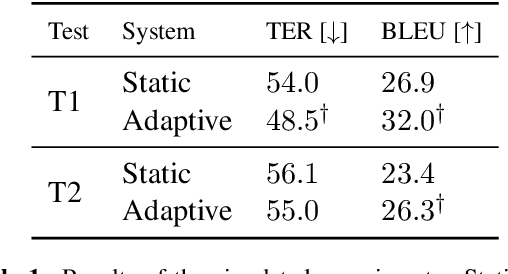

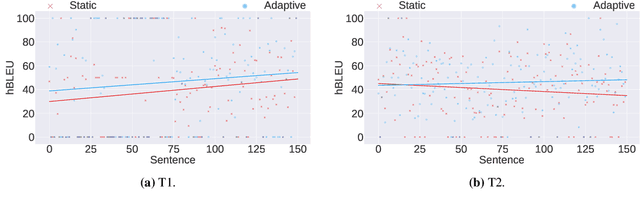

Incremental Adaptation of NMT for Professional Post-editors: A User Study

Jun 21, 2019

Abstract: A common use of machine translation in the industry is providing initial translation hypotheses, which are later supervised and post-edited by a human expert. During this revision process, new bilingual data are continuously generated. Machine translation systems can benefit from these new data, incrementally updating the underlying models under an online learning paradigm. We conducted a user study on this scenario, for a neural machine translation system. The experimentation was carried out by professional translators with vast experience in machine translation post-editing. The results showed a reduction in the human effort needed when post-editing the outputs of the system, improvements in the translation quality, and a positive perception of the adaptive system by the users.

How Much Does Tokenization Affect Neural Machine Translation?

Dec 27, 2018

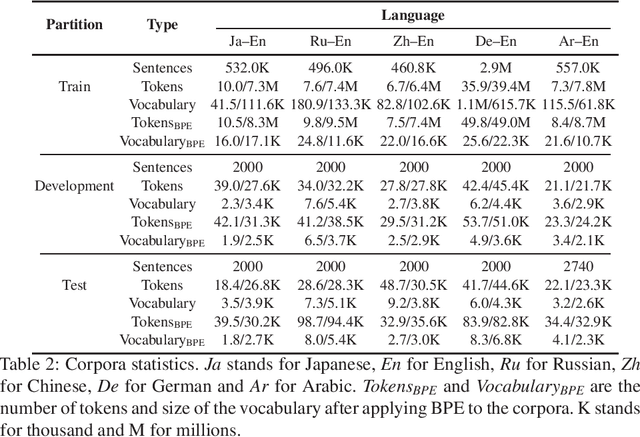

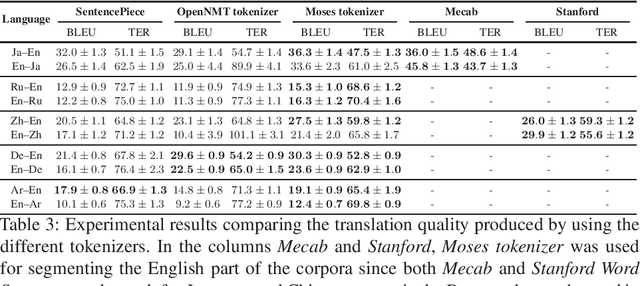

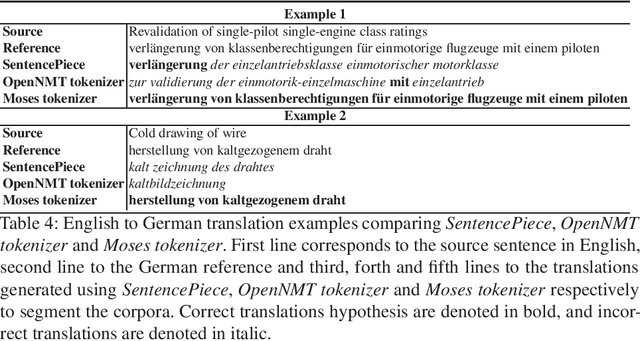

Abstract: Tokenization or segmentation is a broad concept that covers simple processes such as separating punctuation from words, or more sophisticated processes such as applying morphological knowledge. Neural Machine Translation (NMT) requires a limited-size vocabulary for computational cost and enough examples to estimate word embeddings. Separating punctuation and splitting tokens into words or subwords has proven to be helpful to reduce vocabulary and increase the number of examples of each word, improving the translation quality. Tokenization is more challenging when dealing with languages with no separator between words. In order to assess the impact of tokenization on the quality of the final translation in NMT, we experimented with five tokenizers over ten language pairs. We reached the conclusion that tokenization significantly affects the final translation quality and that the best tokenizer differs for different language pairs.
