Michael V. Boland

Identifying factors associated with fast visual field progression in patients with ocular hypertension based on unsupervised machine learning

Sep 26, 2023

Abstract: Purpose: To identify ocular hypertension (OHT) subtypes with different trends of visual field (VF) progression based on unsupervised machine learning and to discover factors associated with fast VF progression. Participants: A total of 3133 eyes of 1568 Ocular Hypertension Treatment Study (OHTS) participants with at least five follow-up VF tests were included in the study. Methods: We used a latent class mixed model (LCMM) to identify OHT subtypes using standard automated perimetry (SAP) mean deviation (MD) trajectories. We characterized the subtypes based on demographic, clinical, ocular, and VF factors at baseline. We then identified factors driving fast VF progression using generalized estimating equations (GEE) and justified the findings qualitatively and quantitatively. Results: The LCMM discovered four clusters (subtypes) of eyes with different trajectories of MD worsening. The clusters contained 794 (25%), 1675 (54%), 531 (17%), and 133 (4%) eyes. We labelled the clusters as Improvers, Stables, Slow progressors, and Fast progressors based on their mean rates of MD change, which were 0.08, -0.06, -0.21, and -0.45 dB/year, respectively. Eyes with fast VF progression had higher baseline age, intraocular pressure (IOP), pattern standard deviation (PSD), and refractive error (RE), but lower central corneal thickness (CCT). Fast progression was associated with calcium channel blockers, being male, heart disease history, diabetes history, African American race, stroke history, and migraine headaches.
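The MD rates quoted in this abstract are slopes in dB/year. As a rough illustration of what such a rate means for a single eye, the sketch below fits an ordinary least-squares line to MD values over follow-up time. This is an assumption made for illustration only: it is not the latent class mixed model used in the study, and the function name `md_slope` and the sample visits are hypothetical.

```python
def md_slope(years, md_values):
    """Ordinary least-squares slope of MD (dB) against time (years).

    Illustrative only: a per-eye linear fit, not the LCMM from the study.
    """
    n = len(years)
    if n < 2 or n != len(md_values):
        raise ValueError("need at least two paired observations")
    mean_t = sum(years) / n
    mean_md = sum(md_values) / n
    # Sum of squared deviations of the visit times.
    sxx = sum((t - mean_t) ** 2 for t in years)
    if sxx == 0:
        raise ValueError("all visits share the same time point")
    # Cross-deviation between visit time and MD.
    sxy = sum((t - mean_t) * (m - mean_md) for t, m in zip(years, md_values))
    return sxy / sxx


# Hypothetical eye: five yearly visits with MD falling 0.45 dB per year.
years = [0.0, 1.0, 2.0, 3.0, 4.0]
md = [-1.0, -1.45, -1.9, -2.35, -2.8]
slope = md_slope(years, md)
```

A negative slope indicates worsening MD over time; a positive slope, as in the Improvers cluster, indicates improvement.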

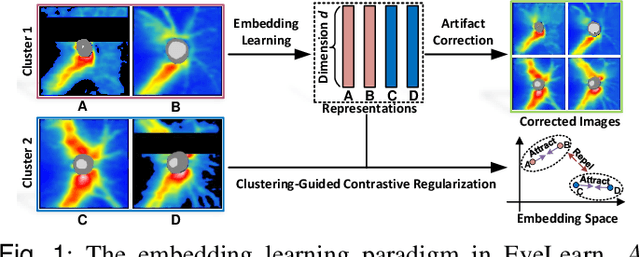

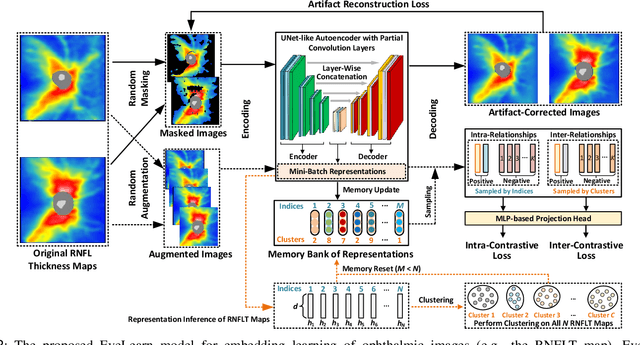

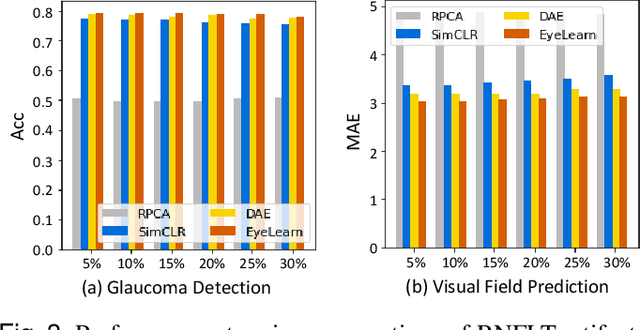

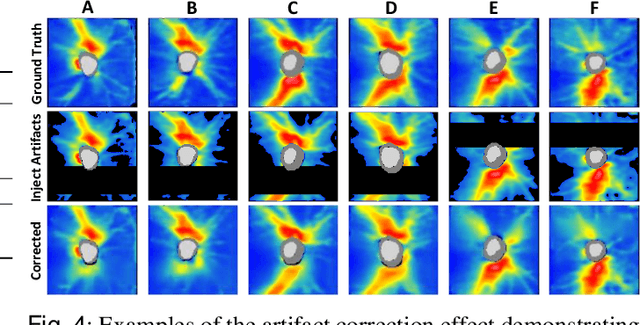

Artifact-Tolerant Clustering-Guided Contrastive Embedding Learning for Ophthalmic Images

Sep 02, 2022

Abstract: Ophthalmic images and derivatives such as the retinal nerve fiber layer (RNFL) thickness map are crucial for detecting and monitoring ophthalmic diseases (e.g., glaucoma). For computer-aided diagnosis of eye diseases, the key technique is to automatically extract meaningful features from ophthalmic images that can reveal the biomarkers (e.g., RNFL thinning patterns) linked to functional vision loss. However, representation learning from ophthalmic images that links structural retinal damage with human vision loss is non-trivial, mostly due to large anatomical variations between patients. The task becomes even more challenging in the presence of image artifacts, which are common due to issues with image acquisition and automated segmentation. In this paper, we propose an artifact-tolerant unsupervised learning framework termed EyeLearn for learning representations of ophthalmic images. EyeLearn has an artifact correction module to learn representations that can best predict artifact-free ophthalmic images. In addition, EyeLearn adopts a clustering-guided contrastive learning strategy to explicitly capture the intra- and inter-image affinities. During training, images are dynamically organized in clusters to form contrastive samples in which images in the same or different clusters are encouraged to learn similar or dissimilar representations, respectively. To evaluate EyeLearn, we use the learned representations for visual field prediction and glaucoma detection using a real-world ophthalmic image dataset of glaucoma patients. Extensive experiments and comparisons with state-of-the-art methods verified the effectiveness of EyeLearn for learning optimal feature representations from ophthalmic images.
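The idea of treating same-cluster images as positives and different-cluster images as negatives can be illustrated with a generic contrastive loss. The sketch below is an assumption for illustration, not EyeLearn's actual objective: it takes cluster assignments as a fixed input rather than updating them during training, and the function names, the temperature value, and the toy embeddings are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cluster_contrastive_loss(embeddings, cluster_ids, temperature=0.5):
    """Generic contrastive loss with cluster ids used as pseudo-labels.

    For each anchor, samples in the same cluster are positives and all
    other samples are negatives. Anchors with no positive are skipped.
    """
    n = len(embeddings)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n)
                     if j != i and cluster_ids[j] == cluster_ids[i]]
        if not positives:
            continue
        # Temperature-scaled similarities to every other sample.
        logits = {j: cosine(embeddings[i], embeddings[j]) / temperature
                  for j in range(n) if j != i}
        log_denom = math.log(sum(math.exp(v) for v in logits.values()))
        # Average negative log-probability of picking a positive.
        total += -sum(logits[j] - log_denom for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


# Toy embeddings: two visually separated groups (hypothetical data).
emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_matching = cluster_contrastive_loss(emb, [0, 0, 1, 1])
loss_mismatched = cluster_contrastive_loss(emb, [0, 1, 0, 1])
```

When the cluster assignment agrees with the geometry of the embeddings, the loss is lower than when it cuts across the two groups, which is the pressure that pulls same-cluster representations together.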
