Michael O'Connell

Hierarchical Meta-learning-based Adaptive Controller

Nov 23, 2023

Abstract:We study how to design learning-based adaptive controllers that enable fast and accurate online adaptation in changing environments. In these settings, learning is typically done during an initial (offline) design phase, where the vehicle is exposed to different environmental conditions and disturbances (e.g., a drone exposed to different winds) to collect training data. Our work is motivated by the observation that real-world disturbances fall into two categories: 1) those that can be directly monitored or controlled during training, which we call "manageable", and 2) those that cannot be directly measured or controlled (e.g., nominal model mismatch, air plate effects, and unpredictable wind), which we call "latent". Imprecise modeling of these effects can result in degraded control performance, particularly when latent disturbances continuously vary. This paper presents the Hierarchical Meta-learning-based Adaptive Controller (HMAC) to learn and adapt to such multi-source disturbances. Within HMAC, we develop two techniques: 1) Hierarchical Iterative Learning, which jointly trains representations to capture the various sources of disturbances, and 2) Smoothed Streaming Meta-Learning, which learns to capture the evolving structure of latent disturbances over time (in addition to standard meta-learning on the manageable disturbances). Experimental results demonstrate that HMAC exhibits more precise and rapid adaptation to multi-source disturbances than other adaptive controllers.

Neural-Fly Enables Rapid Learning for Agile Flight in Strong Winds

May 13, 2022

Abstract:Executing safe and precise flight maneuvers in dynamic high-speed winds is important for the ongoing commoditization of uninhabited aerial vehicles (UAVs). However, because the relationship between various wind conditions and their effect on aircraft maneuverability is not well understood, it is challenging to design effective robot controllers using traditional control design methods. We present Neural-Fly, a learning-based approach that allows rapid online adaptation by incorporating pretrained representations through deep learning. Neural-Fly builds on two key observations: that aerodynamics in different wind conditions share a common representation, and that the wind-specific part lies in a low-dimensional space. To that end, Neural-Fly uses a proposed learning algorithm, domain adversarially invariant meta-learning (DAIML), to learn the shared representation, using only 12 minutes of flight data. With the learned representation as a basis, Neural-Fly then uses a composite adaptation law to update a set of linear coefficients for mixing the basis elements. When evaluated under challenging wind conditions generated with the Caltech Real Weather Wind Tunnel, with wind speeds up to 43.6 kilometers/hour (12.1 meters/second), Neural-Fly achieves precise flight control with substantially smaller tracking error than state-of-the-art nonlinear and adaptive controllers. In addition to strong empirical performance, the exponential stability of Neural-Fly results in robustness guarantees. Last, our control design extrapolates to unseen wind conditions, is shown to be effective for outdoor flights with only onboard sensors, and can transfer across drones with minimal performance degradation.
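The idea of adapting only a small set of linear coefficients over a fixed basis can be illustrated with a toy sketch. This is not the paper's composite adaptation law: it swaps in a plain normalized-gradient update, and the `basis` function, the gain, and the sampled inputs are hypothetical stand-ins for the pretrained network outputs and flight data.

```python
import math

def basis(x):
    # Hypothetical fixed basis; in the abstract this role is played by
    # the outputs of a pretrained network, which are not reproduced here.
    return [1.0, math.sin(x), math.cos(x)]

def predict(coeffs, phi):
    # The disturbance estimate is a linear mix of the basis elements.
    return sum(c * p for c, p in zip(coeffs, phi))

def adapt_step(coeffs, phi, measured, gain=0.5):
    # Normalized-gradient update on the prediction error (an assumption
    # made for illustration, not the composite law named in the abstract).
    err = measured - predict(coeffs, phi)
    norm_sq = sum(p * p for p in phi) + 1e-12
    new_coeffs = [c + gain * err * p / norm_sq for c, p in zip(coeffs, phi)]
    return new_coeffs, err

def run(true_coeffs, steps=300):
    # Start from zero coefficients and adapt online, one sample per step.
    coeffs = [0.0] * len(true_coeffs)
    for k in range(steps):
        phi = basis(2.0 * k)  # widely spaced inputs keep the regressors varied
        measured = predict(true_coeffs, phi)  # noise-free synthetic "disturbance"
        coeffs, _ = adapt_step(coeffs, phi, measured)
    return coeffs

if __name__ == "__main__":
    print(run([0.5, -1.0, 2.0]))
```

Because only three coefficients are updated per step, each update costs a handful of multiplications, which is the kind of low-dimensional online adaptation the abstract describes; the basis itself stays frozen.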

Meta-Learning-Based Robust Adaptive Flight Control Under Uncertain Wind Conditions

Mar 04, 2021

Abstract:Realtime model learning proves challenging for complex dynamical systems, such as drones flying in variable wind conditions. Machine learning techniques such as deep neural networks have high representation power but are often too slow to update onboard. On the other hand, adaptive control, which relies on simple linear parameter models, can update as fast as the feedback control loop. We propose an online composite adaptation method that treats outputs from a deep neural network as a set of basis functions capable of representing different wind conditions. To help with training, meta-learning techniques are used to optimize the network output useful for adaptation. We validate our approach by flying a drone in an open air wind tunnel under varying wind conditions and along challenging trajectories. We compare the results with other adaptive controllers that use different basis function sets and show improvement in tracking and prediction errors.

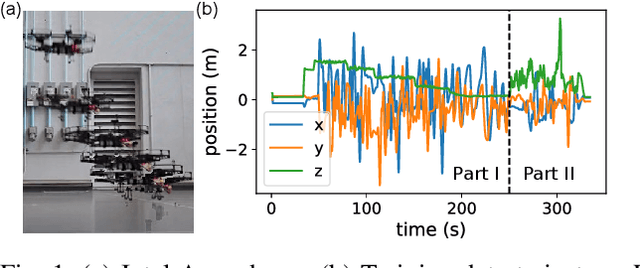

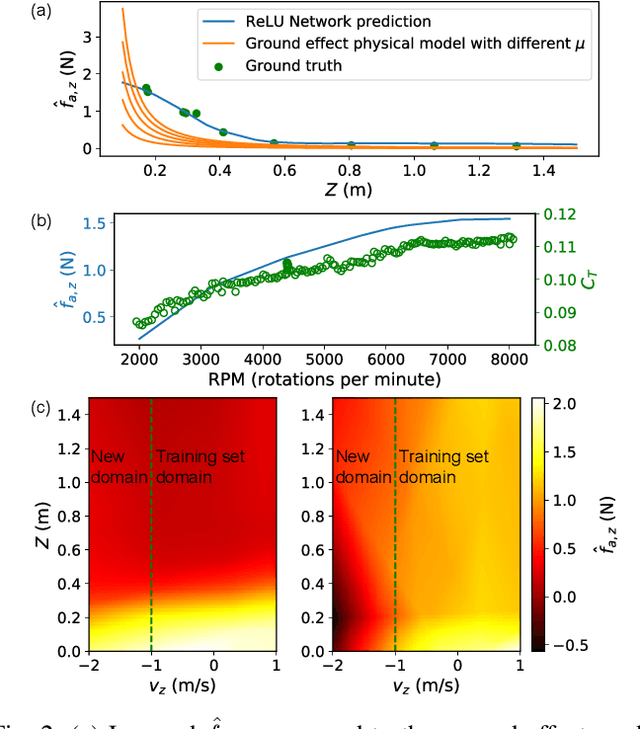

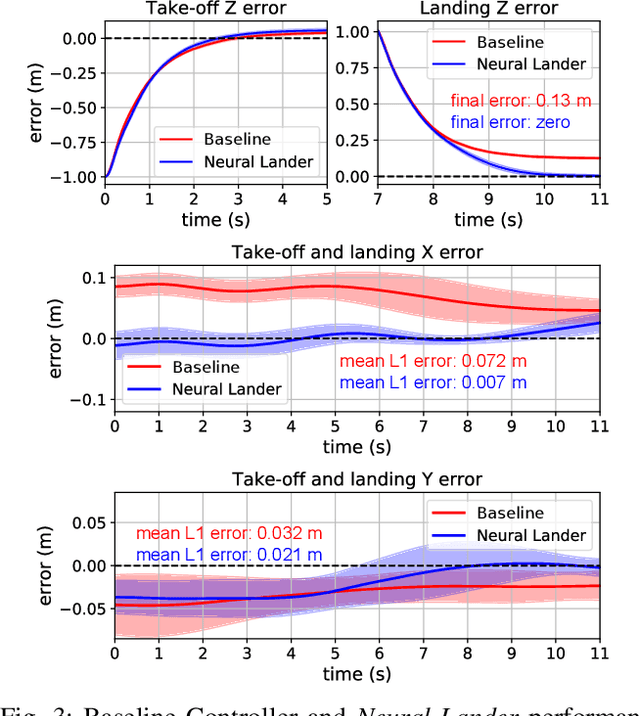

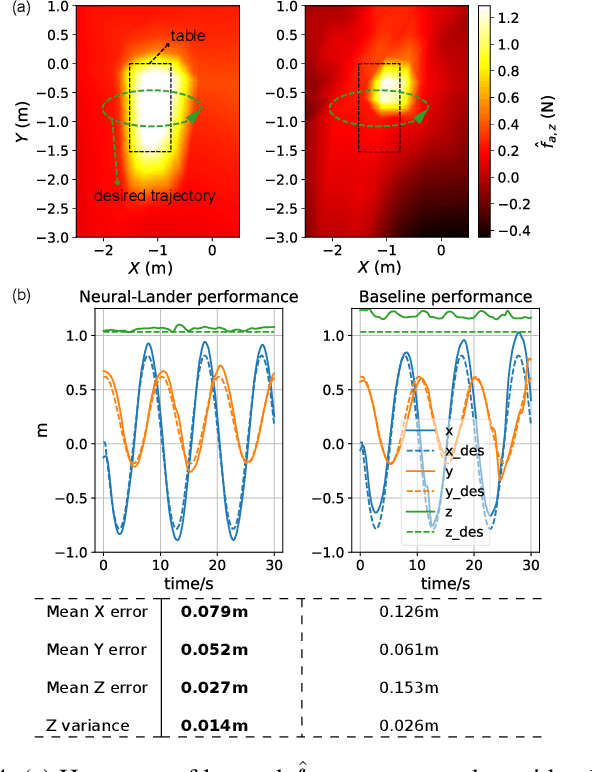

Neural Lander: Stable Drone Landing Control using Learned Dynamics

Mar 04, 2019

Abstract:Precise near-ground trajectory control is difficult for multi-rotor drones, due to the complex aerodynamic effects caused by interactions between multi-rotor airflow and the environment. Conventional control methods often fail to properly account for these complex effects and fall short in accomplishing smooth landing. In this paper, we present a novel deep-learning-based robust nonlinear controller (Neural Lander) that improves control performance of a quadrotor during landing. Our approach combines a nominal dynamics model with a Deep Neural Network (DNN) that learns high-order interactions. We apply spectral normalization (SN) to constrain the Lipschitz constant of the DNN. Leveraging this Lipschitz property, we design a nonlinear feedback linearization controller using the learned model and prove system stability with disturbance rejection. To the best of our knowledge, this is the first DNN-based nonlinear feedback controller with stability guarantees that can utilize arbitrarily large neural nets. Experimental results demonstrate that the proposed controller significantly outperforms a Baseline Nonlinear Tracking Controller in both landing and cross-table trajectory tracking cases. We also empirically show that the DNN generalizes well to unseen data outside the training domain.
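As a rough illustration of how spectral normalization constrains a layer's Lipschitz constant, the sketch below estimates the largest singular value of a weight matrix by power iteration and rescales the matrix when that value exceeds a bound. The function names and the `bound` parameter are hypothetical, and this is a minimal standalone example, not the training procedure used in the paper. With 1-Lipschitz activations such as ReLU, the product of the per-layer spectral norms upper-bounds the Lipschitz constant of the whole network.

```python
import math

def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def vec_norm(v):
    return math.sqrt(sum(x * x for x in v))

def spectral_norm(W, iters=100):
    # Power iteration on W^T W. The all-ones start vector is an arbitrary
    # choice; it fails only if it is orthogonal to the top singular vector.
    Wt = [list(col) for col in zip(*W)]
    v = [1.0] * len(W[0])
    for _ in range(iters):
        v = matvec(Wt, matvec(W, v))
        n = vec_norm(v)
        if n == 0.0:
            return 0.0
        v = [x / n for x in v]
    return vec_norm(matvec(W, v))

def spectral_normalize(W, bound=1.0):
    # Rescale W so its estimated largest singular value is at most `bound`.
    sigma = spectral_norm(W)
    if sigma <= bound:
        return [row[:] for row in W]
    scale = bound / sigma
    return [[w * scale for w in row] for row in W]

if __name__ == "__main__":
    W = [[3.0, 0.0], [0.0, 1.0]]
    print(spectral_norm(W))
    print(spectral_normalize(W))
```

Power iteration approaches the true spectral norm from below, so a practical implementation might leave a small safety margin under the bound.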
