Meng Fu

Approximately Invertible Neural Network for Learned Image Compression

Aug 30, 2024

Abstract: Learned image compression has attracted considerable interest in recent years. It typically comprises an analysis transform, a synthesis transform, quantization and an entropy coding model. The analysis transform encodes an image into a latent feature, and the synthesis transform decodes the quantized feature to reconstruct the image, so the two can be regarded as coupled transforms. However, existing methods design the analysis and synthesis transforms independently, which makes them unreliable in high-quality image compression. Inspired by invertible neural networks (INNs) in generative modeling, invertible modules are used to construct the coupled analysis and synthesis transforms. Because the noise introduced by feature quantization invalidates the invertible process, this paper proposes an Approximately Invertible Neural Network (A-INN) framework for learned image compression. It formulates the rate-distortion optimization in lossy image compression when an INN is used together with quantization, which differs from using an INN for generative modeling. In general, A-INN can serve as the theoretical foundation for any INN-based lossy compression method. Based on this formulation, A-INN with a progressive denoising module (PDM) is developed to effectively reduce the quantization noise during decoding. Moreover, a Cascaded Feature Recovery Module (CFRM) is designed to learn to recover high-dimensional features from low-dimensional ones, further reducing the noise in feature channel compression. In addition, a Frequency-enhanced Decomposition and Synthesis Module (FDSM) is developed that explicitly enhances the high-frequency components of an image, addressing the loss of high-frequency information inherent in neural-network-based image compression. Extensive experiments demonstrate that the proposed A-INN outperforms existing learned image compression methods.
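To make concrete why quantization "invalidates the invertible process", the sketch below uses an additive coupling layer, a standard INN building block from generative modeling. It is a minimal illustration under stated assumptions, not the A-INN architecture: the subnetwork `t`, its random weights, and the 8-channel toy input are all hypothetical, and the PDM, CFRM and FDSM modules are not reproduced. Without rounding, the inverse recovers the input exactly; after rounding the latent, it no longer does.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical subnetwork t(.): a fixed random linear map followed by tanh.
# The coupling layer stays invertible no matter what t computes.
W = rng.standard_normal((4, 4))


def t(x1):
    return np.tanh(x1 @ W)


def forward(x):
    """Additive coupling: keep the first half, shift the second half by t(first half)."""
    x1, x2 = x[:, :4], x[:, 4:]
    return np.concatenate([x1, x2 + t(x1)], axis=1)


def inverse(y):
    """Exact inverse: recompute t on the untouched half and subtract it."""
    y1, y2 = y[:, :4], y[:, 4:]
    return np.concatenate([y1, y2 - t(y1)], axis=1)


x = 3.0 * rng.standard_normal((2, 8))  # toy "image features": 2 samples, 8 channels
y = forward(x)                         # latent produced by the analysis transform

# Lossless path: the round trip is exact up to floating-point error.
x_rec = inverse(y)

# Lossy path: rounding the latent (quantization) breaks exact invertibility,
# so the synthesis transform only approximately inverts the analysis transform.
y_hat = np.round(y)
x_hat = inverse(y_hat)

print("max error without quantization:", np.max(np.abs(x - x_rec)))
print("max error with quantization:   ", np.max(np.abs(x - x_hat)))
```

The residual error in the quantized path is the kind of noise the abstract says A-INN's decoding-side modules are designed to reduce.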

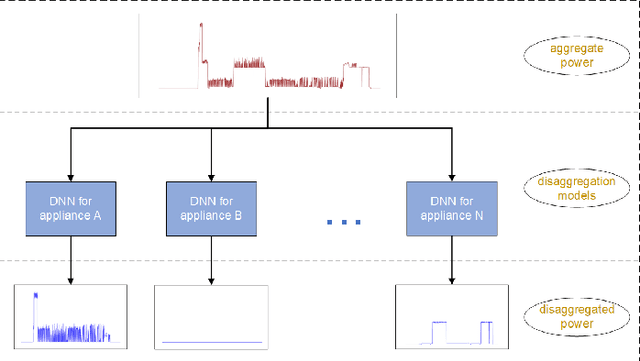

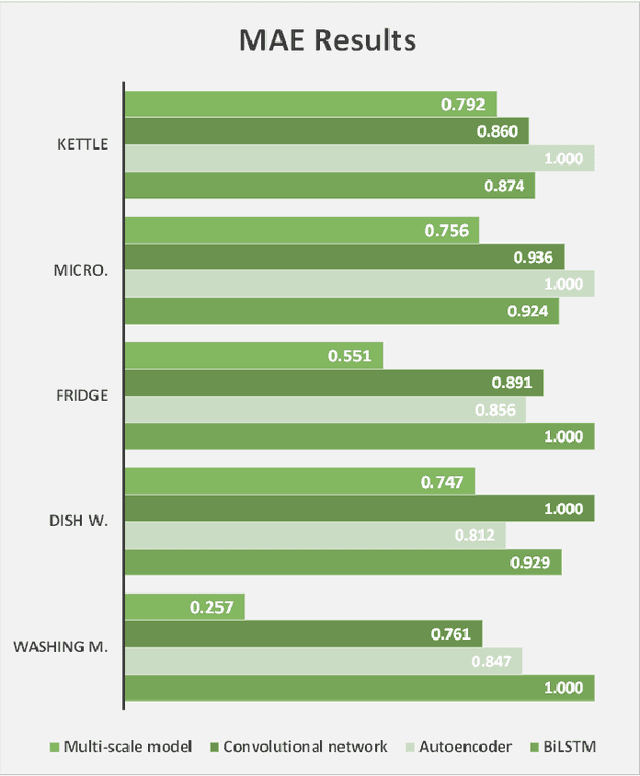

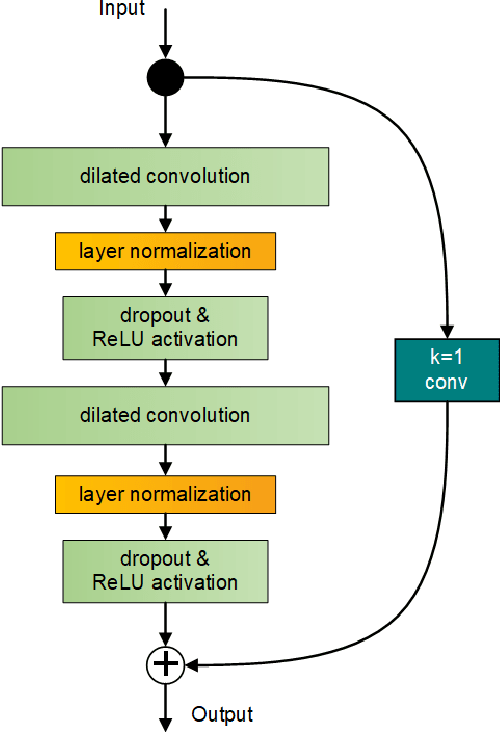

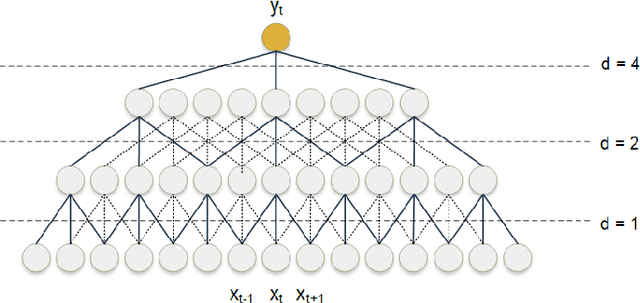

Sequence-to-Sequence Load Disaggregation Using Multi-Scale Residual Neural Network

Sep 25, 2020

Abstract: With increasing demand for economical and efficient measurement technology, Non-Intrusive Load Monitoring (NILM) has received growing attention as a cost-effective way to monitor electricity consumption and provide feedback to users. Deep neural networks have shown great potential in the field of load disaggregation. In this paper, first, a new convolutional model based on residual blocks is proposed to avoid the degradation problem that traditional networks suffer from to some degree when more layers are added to learn more complex features. Second, we propose using dilated convolution to curb the excessive number of model parameters and obtain a larger receptive field, together with a multi-scale structure to learn mixed data features in a more targeted way. Third, we describe how the training and test sets are generated under certain rules. Finally, the algorithm is tested on the real-house public dataset UK-DALE and compared with three existing neural networks. The results are compared and analysed; the proposed model shows improvements in F1 score, MAE and model complexity across different appliances.
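The claim that dilated convolution enlarges the receptive field without adding parameters can be checked with a little arithmetic. The sketch below is a single-channel NumPy illustration under stated assumptions: the residual block (`x + ReLU(conv(x))`), the kernel size of 3, and the doubling dilation schedule are hypothetical choices for demonstration, not the paper's exact multi-scale architecture. For stacked stride-1 convolutions with kernel size k and dilations d_i, the receptive field is 1 + Σ (k − 1)·d_i.

```python
import numpy as np


def dilated_conv1d(x, w, dilation):
    """'Same'-padded 1-D dilated convolution (cross-correlation), single channel.

    x: 1-D signal of length T; w: kernel of odd length k.
    """
    k = len(w)
    pad = (k - 1) // 2 * dilation
    xp = np.pad(x, pad)  # zero padding on both sides
    return np.array([
        sum(w[j] * xp[t + j * dilation] for j in range(k))
        for t in range(len(x))
    ])


def residual_block(x, w, dilation):
    """Hypothetical residual block: identity shortcut plus ReLU(dilated conv)."""
    return x + np.maximum(dilated_conv1d(x, w, dilation), 0.0)


def receptive_field(kernel_size, dilations):
    """Receptive field of stacked stride-1 convolutions: 1 + sum((k - 1) * d)."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# Four layers with kernel size 3 use 4 * 3 = 12 weights in both cases,
# but dilation greatly widens the window each output sample can "see".
print(receptive_field(3, [1, 1, 1, 1]))  # 9  (plain convolutions)
print(receptive_field(3, [1, 2, 4, 8]))  # 31 (dilation doubling per layer)

# An impulse response shows the gaps between taps for dilation = 2.
impulse = np.zeros(9)
impulse[4] = 1.0
print(np.nonzero(dilated_conv1d(impulse, np.ones(3), 2))[0])  # [2 4 6]
```

The identity shortcut in `residual_block` is what lets deeper stacks avoid the degradation problem mentioned in the abstract: each block only has to learn a correction to its input.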
