Matthias Hullin

Occlusion Fields: An Implicit Representation for Non-Line-of-Sight Surface Reconstruction

Mar 22, 2022

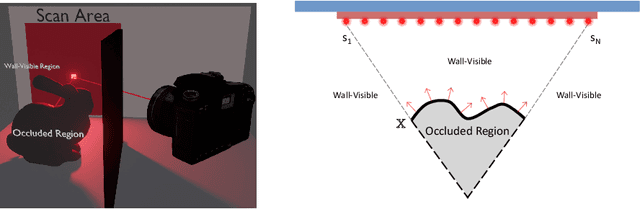

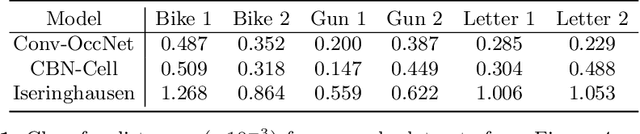

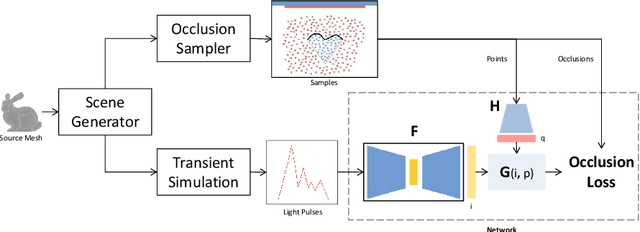

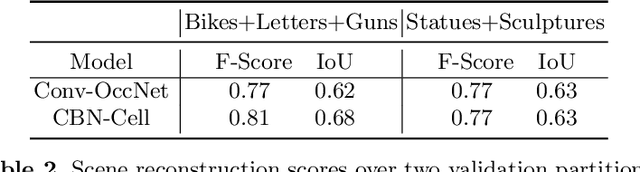

Abstract: Non-line-of-sight (NLoS) reconstruction is a novel indirect imaging modality that aims to recover objects or scene parts outside the field of view from measurements of light that is indirectly scattered off a directly visible, diffuse wall. Despite recent advances in acquisition and reconstruction techniques, the well-posedness of the problem at large, and the recoverability of objects and their shapes in particular, remains an open question. The commonly employed Fermat path criterion is rather conservative in this regard, as it classifies some surfaces as unrecoverable even though they contribute to the signal. In this paper, we use a simpler necessary criterion for an opaque surface patch to be recoverable: such a piece of surface must be directly visible from some point on the wall, and it must occlude the space behind itself. Inspired by recent advances in neural implicit representations, we devise a new representation and reconstruction technique for NLoS scenes that unifies the treatment of recoverability with the reconstruction itself. Our approach, which we validate on various synthetic and experimental datasets, exhibits interesting properties. Unlike memory-inefficient volumetric representations, ours allows adaptively tessellated surfaces to be inferred from time-of-flight measurements of moderate resolution. It can also recover features beyond the Fermat path criterion, and it is robust to significant amounts of self-occlusion. We believe that this is the first time that these properties have been achieved in one system that, as an additional benefit, is trainable and hence suited for data-driven approaches.
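The visibility half of the recoverability criterion stated in the abstract can be illustrated with a small geometric check. The sketch below is not the paper's method. It assumes a hypothetical toy scene (a wall sampled along a line in the plane z = 0, spherical occluders) and hypothetical helper names `segment_hits_sphere` and `visible_from_wall`. It only tests whether a patch has an unobstructed straight line to at least one wall sample.

```python
def segment_hits_sphere(p, q, center, radius):
    """Return True if the straight segment p -> q passes through the sphere.

    Assumes p != q (illustrative helper, not from the paper).
    """
    d = [q[i] - p[i] for i in range(3)]          # segment direction
    f = [p[i] - center[i] for i in range(3)]     # sphere center -> segment start
    a = sum(x * x for x in d)
    # Parameter of the point on the segment closest to the center, clamped to [0, 1].
    t = max(0.0, min(1.0, -sum(f[i] * d[i] for i in range(3)) / a))
    closest = [p[i] + t * d[i] for i in range(3)]
    dist2 = sum((closest[i] - center[i]) ** 2 for i in range(3))
    return dist2 < radius * radius


def visible_from_wall(patch, wall_points, occluders):
    """True if at least one wall sample sees the patch without obstruction."""
    return any(
        not any(segment_hits_sphere(w, patch, c, r) for c, r in occluders)
        for w in wall_points
    )


# Assumed toy scene: wall samples at x = -2.0 ... 2.0 in the plane z = 0,
# and one opaque sphere of radius 0.5 centered 1 unit in front of the wall.
wall = [(x * 0.5, 0.0, 0.0) for x in range(-4, 5)]
occluders = [((0.0, 0.0, 1.0), 0.5)]

far_patch = (0.0, 0.0, 3.0)      # far behind the sphere, reachable at oblique angles
near_patch = (0.0, 0.0, 1.55)    # tucked directly behind the sphere

print(visible_from_wall(far_patch, wall, occluders))
print(visible_from_wall(near_patch, wall, occluders))
```

In this toy scene the far patch is seen from the outer wall samples, while the patch directly behind the sphere is blocked from every sample and would therefore fail the necessary criterion.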

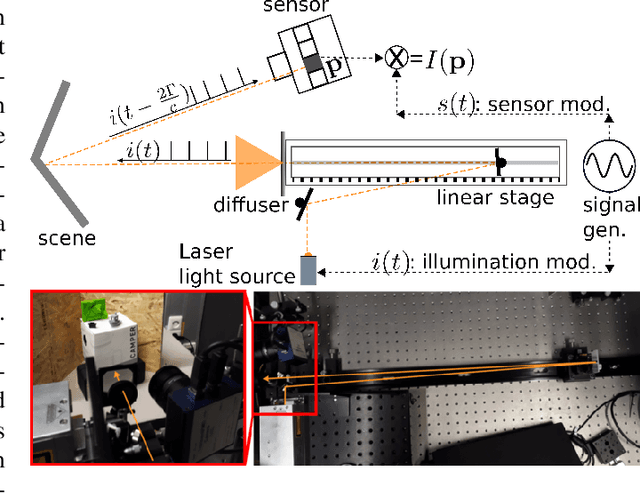

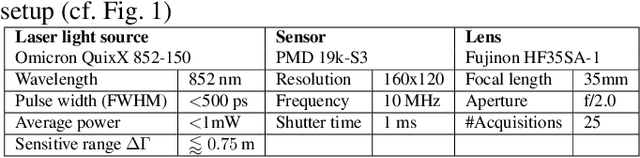

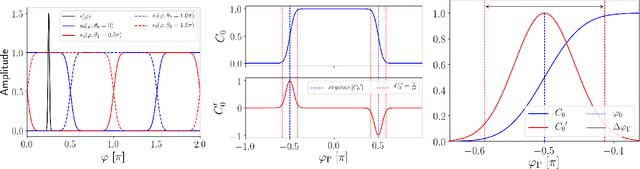

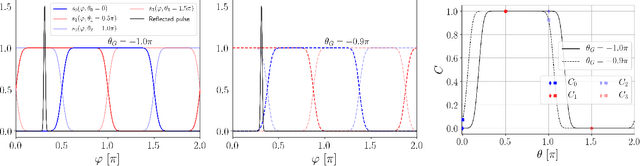

A new operation mode for depth-focused high-sensitivity ToF range finding

Sep 06, 2019

Abstract: We introduce pulsed correlation time-of-flight (PC-ToF) sensing, a new operation mode for correlation time-of-flight range sensors that combines a sub-nanosecond laser pulse source with a rectangular demodulation at the sensor side. In contrast to previous work, our proposed measurement scheme does not attempt to optimize depth accuracy over the full measurement range. Instead, with PC-ToF we trade the global sensitivity of a standard C-ToF setup for measurements with strongly localized high sensitivity: we greatly enhance the depth resolution for the acquisition of scene features around a desired depth of interest. Using real-world experiments, we show that our technique is capable of achieving depth resolutions down to 2 mm using a modulation frequency as low as 10 MHz and an optical power as low as 1 mW. This makes PC-ToF especially viable for low-power applications.
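To make the trade-off between global and localized sensitivity concrete, here is a toy numerical model, offered as a sketch rather than the authors' sensor model. It assumes an ideal rectangular light pulse of 0.5 ns (the abstract only says "sub-nanosecond"), an ideal ±1 rectangular reference at 10 MHz, and hypothetical function names `demod` and `correlation`. In this idealized setting the correlation value is constant over most depths and changes only where the returning pulse straddles an edge of the reference.

```python
C = 299_792_458.0          # speed of light in m/s

MOD_FREQ = 10e6            # modulation frequency from the abstract (10 MHz)
PERIOD = 1.0 / MOD_FREQ    # reference period: 100 ns
PULSE_WIDTH = 0.5e-9       # assumed pulse width; the source only says sub-nanosecond


def demod(t, period):
    """Ideal rectangular reference: +1 in the first half period, -1 in the second."""
    return 1.0 if (t % period) < period / 2 else -1.0


def correlation(depth_m, period=PERIOD, pulse_width=PULSE_WIDTH, steps=1000):
    """Normalized correlation of the reference with a pulse returning from depth_m.

    Midpoint-rule integration over the pulse duration; result lies in [-1, 1].
    """
    delay = 2.0 * depth_m / C                  # round-trip travel time
    dt = pulse_width / steps
    total = sum(demod(delay + (i + 0.5) * dt, period) for i in range(steps))
    return total / steps


# Unambiguous range of a correlation sensor at this frequency: c / (2 f), about 15 m.
print("unambiguous range [m]:", C / (2.0 * MOD_FREQ))

# Depth at which the pulse is centered on the falling edge of the reference.
edge_depth = C * (PERIOD / 2 - PULSE_WIDTH / 2) / 2.0

for depth in (3.0, 3.5, edge_depth - 0.02, edge_depth, edge_depth + 0.02, 10.0):
    print(f"depth {depth:8.4f} m -> correlation {correlation(depth):+.3f}")
```

Under these assumptions, depths of 3.0 m and 3.5 m produce the same correlation value, so the measurement carries no depth information there, whereas a shift of a few centimeters around the edge changes the value substantially. The width of that sensitive zone is set by the pulse duration (roughly c · 0.5 ns / 2 ≈ 7.5 cm here), not by the modulation frequency.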

Neural network identification of people hidden from view with a single-pixel, single-photon detector

Sep 21, 2017

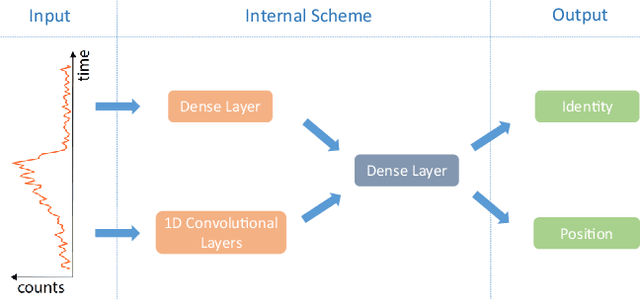

Abstract: Light scattered from multiple surfaces can be used to retrieve information about hidden environments. However, full three-dimensional retrieval of an object hidden from view by a wall has only been achieved with scanning systems and requires intensive computational processing of the retrieved data. Here we use a non-scanning, single-photon single-pixel detector in combination with an artificial neural network: this allows us to locate a hidden person and simultaneously determine their identity, chosen from a database of people (N=3). Artificial neural networks applied to specific computational imaging problems can therefore enable novel imaging capabilities with hugely simplified hardware and processing times.

Snapshot Difference Imaging using Time-of-Flight Sensors

May 19, 2017

Abstract: Computational photography encompasses a diversity of imaging techniques, but one of the core operations performed by many of them is to compute image differences. An intuitive approach to computing such differences is to capture several images sequentially and then process them jointly. Usually, this approach leads to artifacts when recording dynamic scenes. In this paper, we introduce a snapshot difference imaging approach that is directly implemented in the sensor hardware of emerging time-of-flight cameras. With a variety of examples, we demonstrate that the proposed snapshot difference imaging technique is useful for direct-global illumination separation, for direct imaging of spatial and temporal image gradients, for direct depth edge imaging, and more.
