Matthew McCallum

A Novel 1D State Space for Efficient Music Rhythmic Analysis

Nov 01, 2021

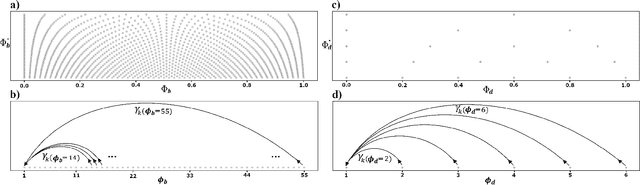

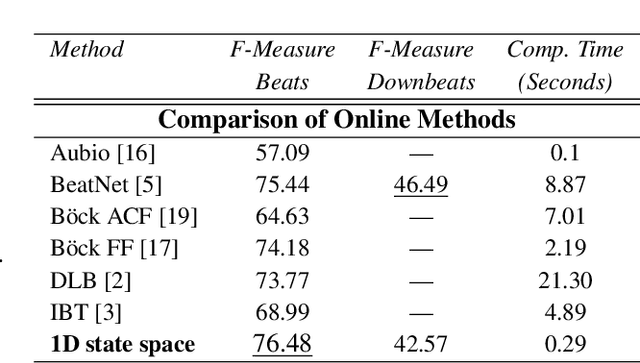

Abstract: Inferring music time structures has a broad range of applications in music production, processing and analysis. Scholars have proposed various methods to analyze different aspects of time structures, including beat, downbeat, tempo and meter. Many of the state-of-the-art methods, however, are computationally expensive. This makes them inapplicable in real-world industrial settings, where music collections can contain millions of tracks. This paper proposes a new state space approach for music time structure analysis. The proposed approach collapses the commonly used 2D state spaces into 1D through a jump-back reward strategy, which drastically reduces the state space size. We then apply the proposed method to causal, joint beat, downbeat, tempo, and meter tracking, and compare it against several previous beat and downbeat tracking methods. The proposed method delivers performance comparable to state-of-the-art joint causal models, with a much smaller state space and a speedup of more than 30 times.

Mood Classification Using Listening Data

Oct 22, 2020

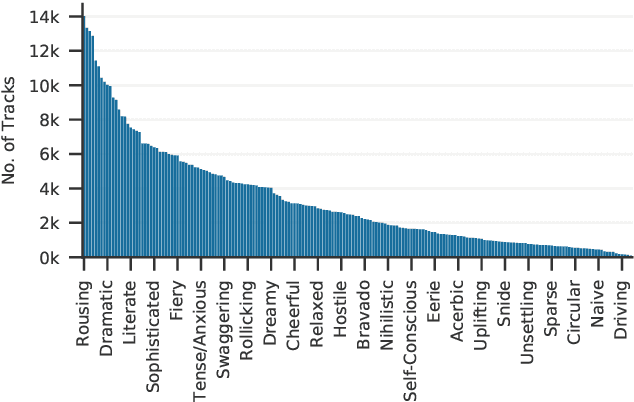

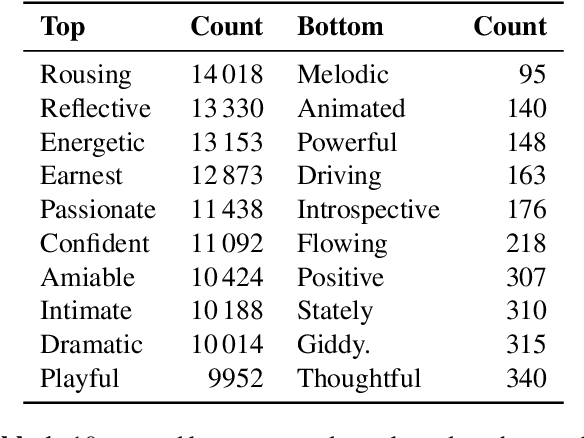

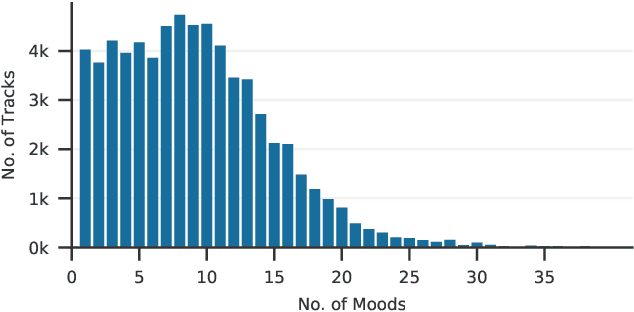

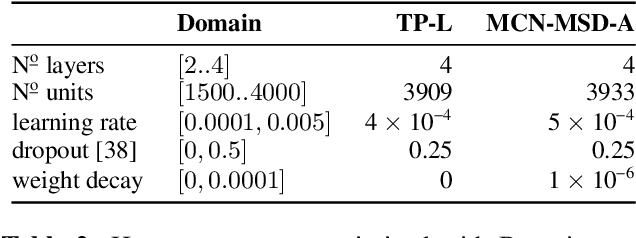

Abstract: The mood of a song is a highly relevant feature for exploration and recommendation in large collections of music. These collections tend to require automatic methods for predicting such moods. In this work, we show that listening-based features outperform content-based ones when classifying moods: embeddings obtained through matrix factorization of listening data appear to be more informative of a track's mood than embeddings based on its audio content. To demonstrate this, we compile a subset of the Million Song Dataset, totalling 67k tracks, with expert annotations of 188 different moods collected from AllMusic. Our results on this novel dataset not only expose the limitations of current audio-based models, but also aim to foster further reproducible research on this timely topic.
