A Novel 1D State Space for Efficient Music Rhythmic Analysis


Nov 01, 2021

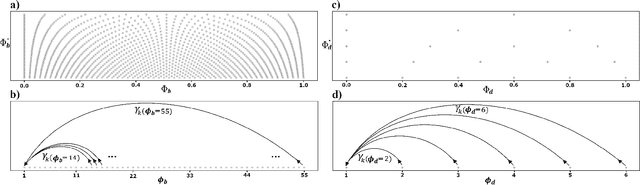

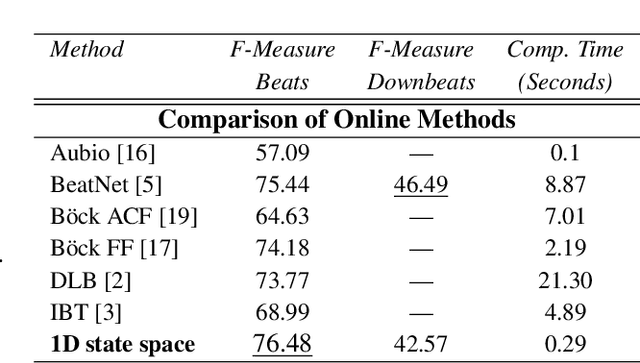

Inferring music time structures has a broad range of applications in music production, processing, and analysis. Scholars have proposed various methods to analyze different aspects of time structures, including beat, downbeat, tempo, and meter. Many state-of-the-art methods, however, are computationally expensive, which makes them impractical in real-world industrial settings where music collections can run into the millions. This paper proposes a new state space approach for music time structure analysis. The proposed approach collapses the commonly used 2D state spaces into a 1D state space through a jump-back reward strategy, which drastically reduces the state space size. We then apply the proposed method to causal, joint beat, downbeat, tempo, and meter tracking, and compare it against several previous beat and downbeat tracking methods. The proposed method delivers performance comparable to state-of-the-art joint causal models, with a much smaller state space and a speedup of more than 30 times.
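To give a rough sense of why collapsing a 2D state space into 1D shrinks it so much, the sketch below counts states under two simplified layouts. It is an illustration only, not the paper's model: the 2D layout assumes one row of phase positions per candidate beat interval (tempo), the 1D layout assumes a single phase axis as long as the slowest beat interval, and the function names, frame rate, and tempo range are all hypothetical choices made for the example.

```python
def beat_intervals(fps: int, min_bpm: int, max_bpm: int) -> range:
    """Candidate beat lengths in frames for the given tempo range (assumed layout)."""
    shortest = round(fps * 60 / max_bpm)  # fastest tempo -> shortest beat
    longest = round(fps * 60 / min_bpm)   # slowest tempo -> longest beat
    return range(shortest, longest + 1)


def size_2d(fps: int, min_bpm: int, max_bpm: int) -> int:
    """Assumed 2D layout: each beat interval of n frames contributes n phase states."""
    return sum(beat_intervals(fps, min_bpm, max_bpm))


def size_1d(fps: int, min_bpm: int, max_bpm: int) -> int:
    """Assumed 1D layout: one shared phase axis covering the longest beat interval."""
    return beat_intervals(fps, min_bpm, max_bpm)[-1]


if __name__ == "__main__":
    # Hypothetical settings: 50 frames per second, tempi between 60 and 200 BPM.
    fps, min_bpm, max_bpm = 50, 60, 200
    print("2D states:", size_2d(fps, min_bpm, max_bpm))
    print("1D states:", size_1d(fps, min_bpm, max_bpm))
```

Under these assumed settings the 2D count grows roughly with the square of the longest beat interval, while the 1D count grows linearly with it. The figures are illustrative and are not the state space sizes or the speedup reported in the paper.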
