Matthew Beveridge

Hierarchical Material Recognition from Local Appearance

May 28, 2025

Abstract:We introduce a taxonomy of materials for hierarchical recognition from local appearance. Our taxonomy is motivated by vision applications and is arranged according to the physical traits of materials. We contribute a diverse, in-the-wild dataset with images and depth maps of the taxonomy classes. Utilizing the taxonomy and dataset, we present a method for hierarchical material recognition based on graph attention networks. Our model leverages the taxonomic proximity between classes and achieves state-of-the-art performance. We demonstrate the model's potential to generalize to adverse, real-world imaging conditions, and that novel views rendered using the depth maps can enhance this capability. Finally, we show the model's capacity to rapidly learn new materials in a few-shot learning setting.

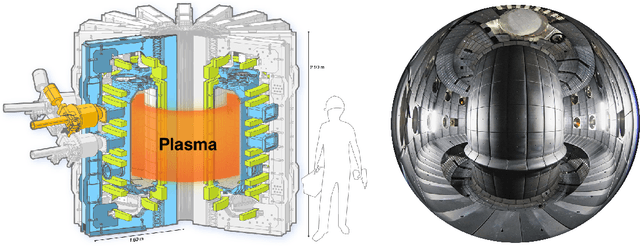

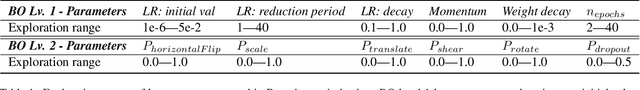

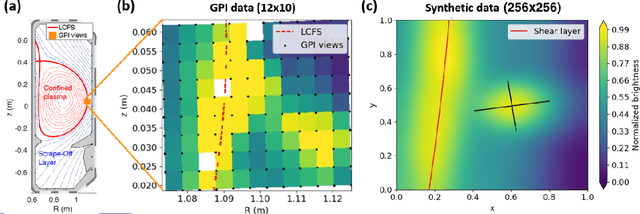

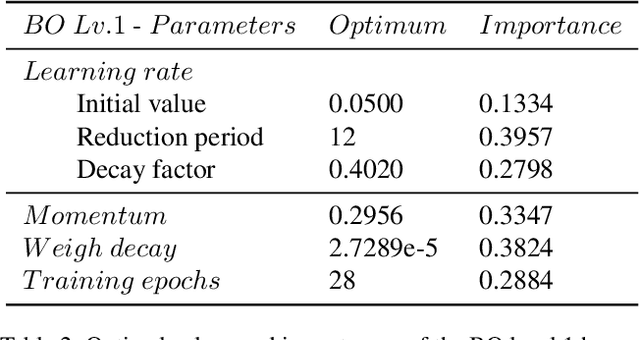

Tracking Blobs in the Turbulent Edge Plasma of Tokamak Fusion Reactors

Nov 16, 2021

Abstract:The analysis of turbulent flows is a significant area in fusion plasma physics. Current theoretical models quantify the degree of turbulence based on the evolution of certain plasma density structures, called blobs. In this work we track the shape and the position of these blobs in high frequency video data obtained from Gas Puff Imaging (GPI) diagnostics, by training a mask R-CNN model on synthetic data and testing on both synthetic and real data. As a result, our model effectively tracks blob structures on both synthetic and real experimental GPI data, showing its prospect as a powerful tool to estimate blob statistics linked with edge turbulence of the tokamak plasma.

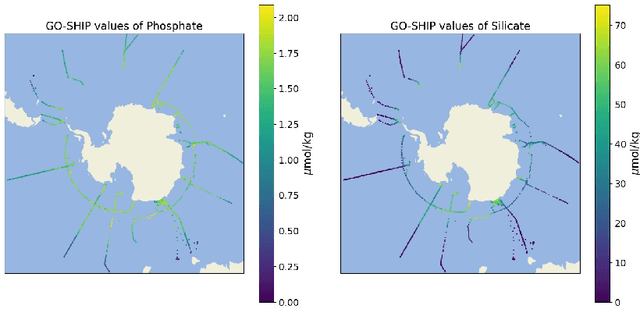

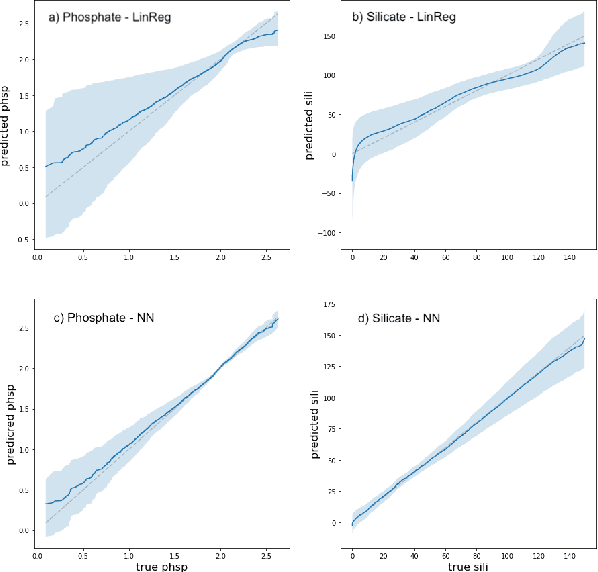

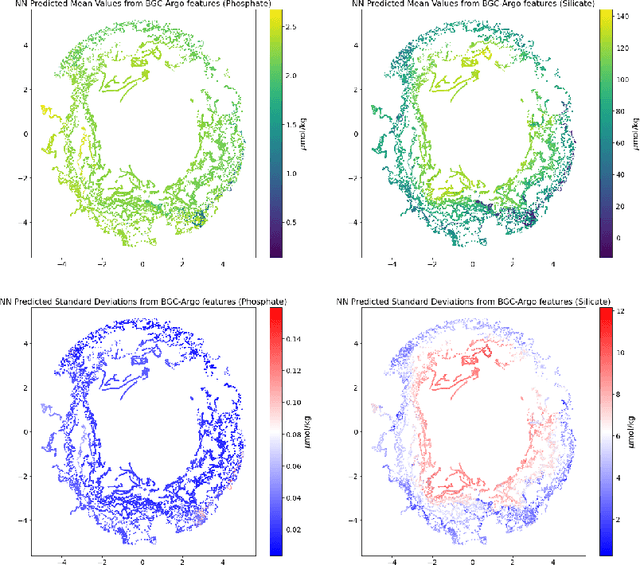

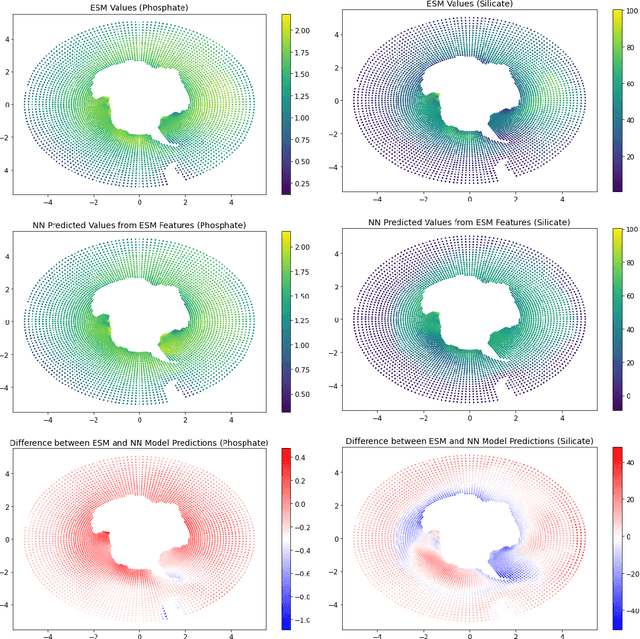

Predicting Critical Biogeochemistry of the Southern Ocean for Climate Monitoring

Oct 30, 2021

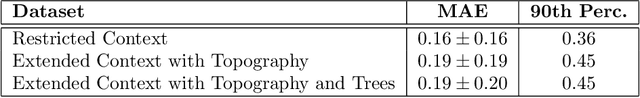

Abstract:The Biogeochemical-Argo (BGC-Argo) program is building a network of globally distributed, sensor-equipped robotic profiling floats, improving our understanding of the climate system and how it is changing. These floats, however, are limited in the number of variables measured. In this study, we train neural networks to predict silicate and phosphate values in the Southern Ocean from temperature, pressure, salinity, oxygen, nitrate, and location, and we apply these models to earth system model (ESM) and BGC-Argo data to expand the utility of this ocean observation network. We train our neural networks on observations from the Global Ocean Ship-Based Hydrographic Investigations Program (GO-SHIP) and use dropout regularization to provide uncertainty bounds around our predicted values. Our neural network significantly improves upon linear regression but shows variable levels of uncertainty across the ranges of predicted variables. We explore the generalization of our estimators to test data outside our training distribution from both ESM and BGC-Argo data. Our use of out-of-distribution test data to examine shifts in biogeochemical parameters and calculate uncertainty bounds around estimates advances the state of the art in oceanographic data and climate monitoring. We make our data and code publicly available.

* 6 pages, 4 figures

Predicting Atlantic Multidecadal Variability

Oct 29, 2021

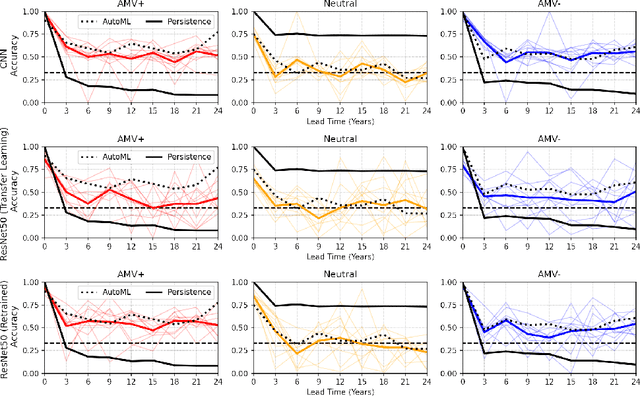

Abstract:Atlantic Multidecadal Variability (AMV) describes variations of North Atlantic sea surface temperature with a typical cycle of between 60 and 70 years. AMV strongly impacts local climate over North America and Europe; prediction of AMV, especially of its extreme values, is therefore of great societal utility for understanding and responding to regional climate change. This work tests multiple machine learning models to improve the state of AMV prediction from maps of sea surface temperature, salinity, and sea level pressure in the North Atlantic region. We use data from the Community Earth System Model 1 Large Ensemble Project, a state-of-the-art climate model with 3,440 years of data. Our results demonstrate that all of the models we use outperform the traditional persistence forecast baseline. Predicting the AMV is important for identifying future extreme temperatures and precipitation, as well as hurricane activity, in Europe and North America up to 25 years in advance.

* 7 pages, 3 figures
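The persistence forecast baseline mentioned in the abstract can be illustrated with a minimal sketch: the value predicted for a future time step is simply the most recently observed value. The function names, the toy index values, and the choice of RMSE as the error metric are assumptions made for illustration and are not taken from the paper.

```python
from math import sqrt


def persistence_forecast(series, lead):
    """Pair each value series[t] (the prediction) with series[t + lead] (the target)."""
    if lead < 1 or lead >= len(series):
        raise ValueError("lead must be between 1 and len(series) - 1")
    predictions = series[:-lead]  # value observed at time t
    targets = series[lead:]       # value that actually occurred at time t + lead
    return predictions, targets


def rmse(predictions, targets):
    """Root-mean-square error between two equally long sequences."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sqrt(sum(squared) / len(squared))


# Hypothetical toy index values, not data from the paper.
amv_index = [0.1, 0.3, 0.2, -0.1, -0.4, -0.2, 0.0, 0.3]
preds, targets = persistence_forecast(amv_index, lead=2)
baseline_error = rmse(preds, targets)
```

A learned model would be scored with the same metric on the same targets, and it outperforms the baseline when its error is lower than `baseline_error`.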

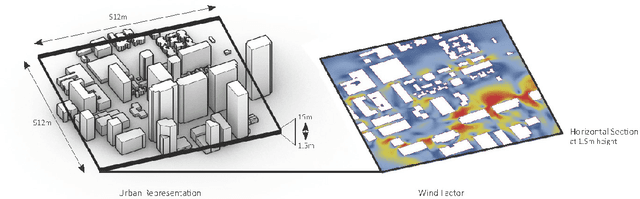

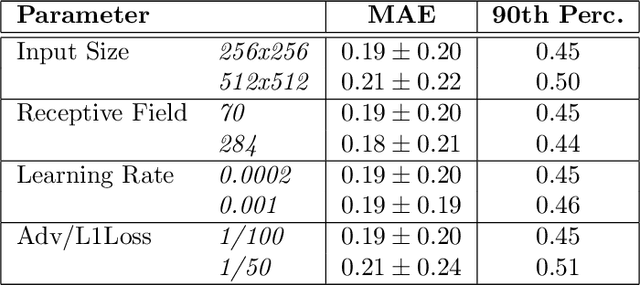

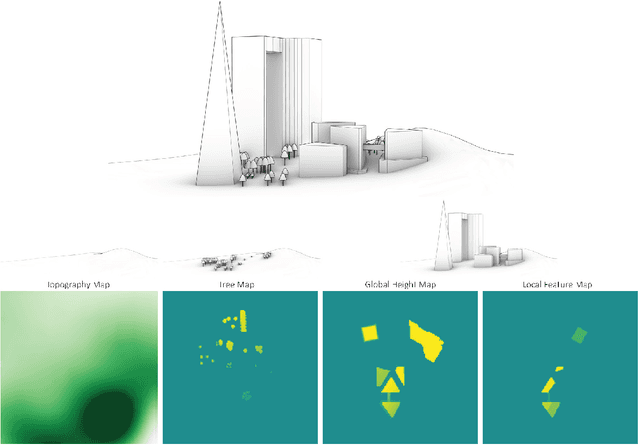

Pedestrian Wind Factor Estimation in Complex Urban Environments

Oct 06, 2021

Abstract:Urban planners and policy makers face the challenge of creating livable and enjoyable cities for larger populations in much denser urban conditions. While the urban microclimate holds a key role in defining the quality of urban spaces today and in the future, the integration of wind microclimate assessment in early urban design and planning processes remains a challenge due to the complexity and high computational expense of computational fluid dynamics (CFD) simulations. This work develops a data-driven workflow for real-time pedestrian wind comfort estimation in complex urban environments which may enable designers, policy makers and city residents to make informed decisions about mobility, health, and energy choices. We use a conditional generative adversarial network (cGAN) architecture to reduce the computational cost while maintaining high confidence levels and interpretability, adequate representation of urban complexity, and suitability for pedestrian comfort estimation. We demonstrate high quality wind field approximations while reducing computation time from days to seconds.

* 16 pages, 5 figures

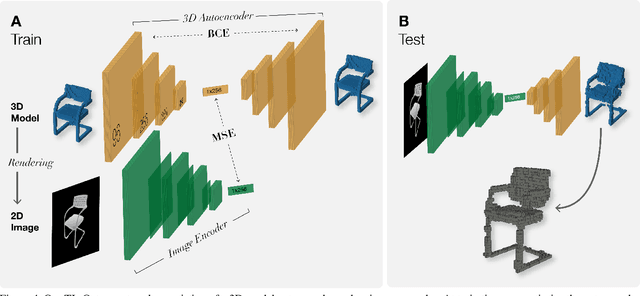

Image2Lego: Customized LEGO Set Generation from Images

Aug 19, 2021

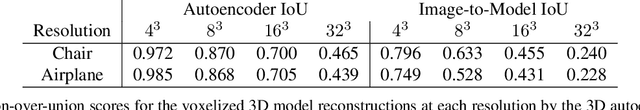

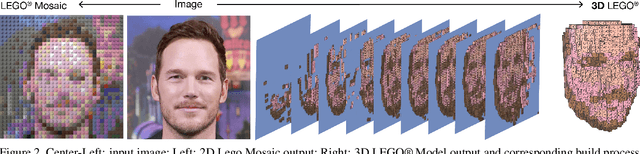

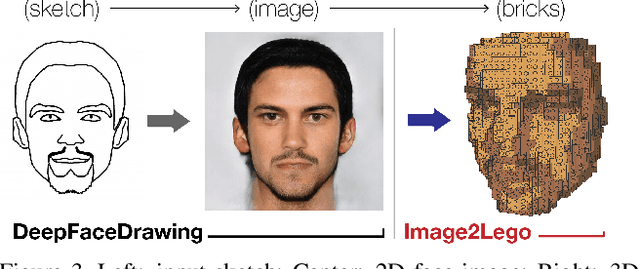

Abstract:Although LEGO sets have entertained generations of children and adults, the challenge of designing customized builds matching the complexity of real-world or imagined scenes remains too great for the average enthusiast. In order to make this feat possible, we implement a system that generates a LEGO brick model from 2D images. We design a novel solution to this problem that uses an octree-structured autoencoder trained on 3D voxelized models to obtain a feasible latent representation for model reconstruction, and a separate network trained to predict this latent representation from 2D images. LEGO models are obtained by algorithmic conversion of the 3D voxelized model to bricks. We demonstrate first-of-its-kind conversion of photographs to 3D LEGO models. An octree architecture enables the flexibility to produce multiple resolutions to best fit a user's creative vision or design needs. In order to demonstrate the broad applicability of our system, we generate step-by-step building instructions and animations for LEGO models of objects and human faces. Finally, we test these automatically generated LEGO sets by constructing physical builds using real LEGO bricks.

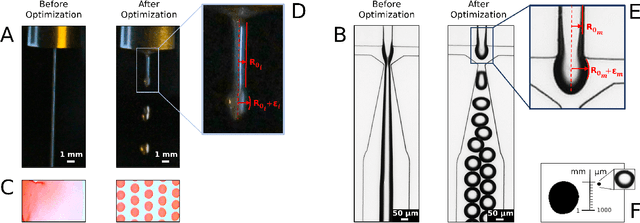

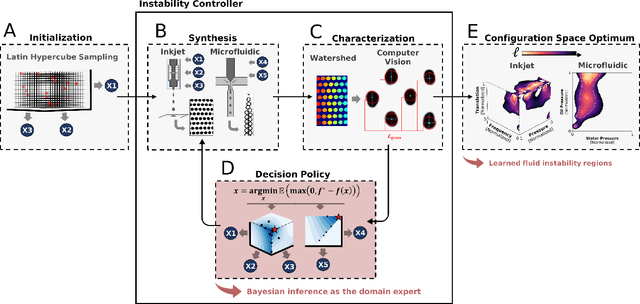

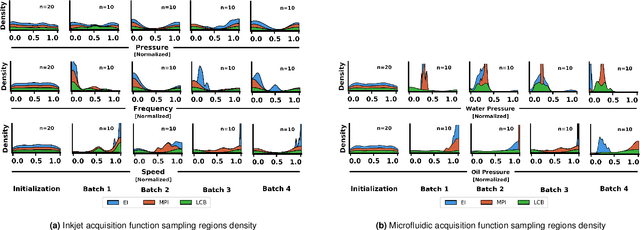

Autonomous Optimization of Fluid Systems at Varying Length Scales

Jun 10, 2021

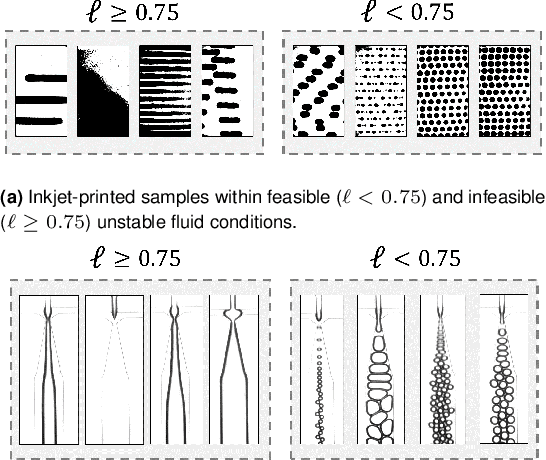

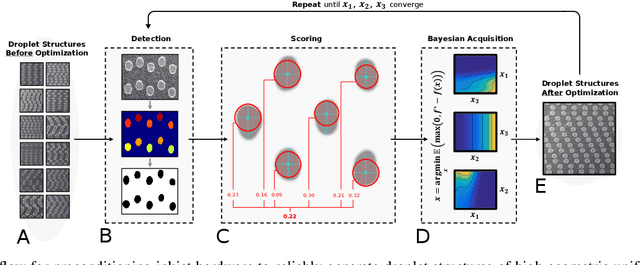

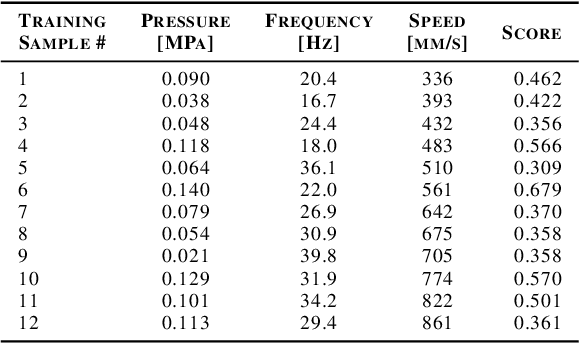

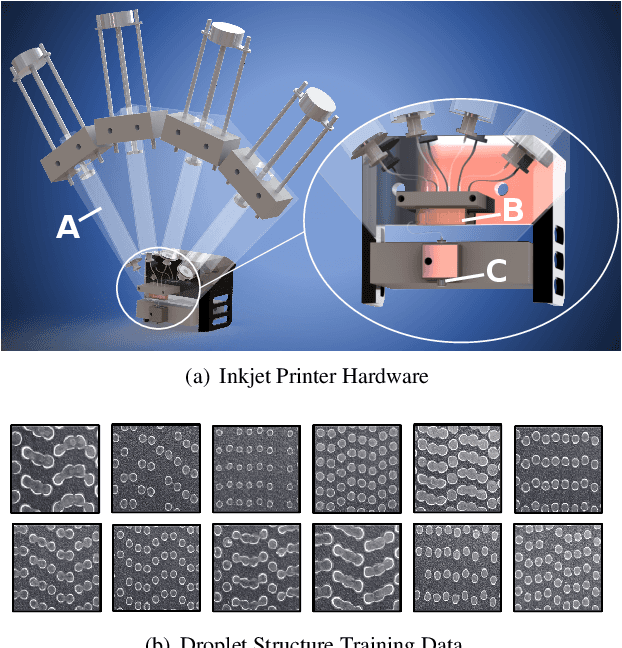

Abstract:Autonomous optimization is a process by which hardware conditions are discovered that generate an optimized experimental product without the guidance of a domain expert. We design an autonomous optimization framework to discover the experimental conditions within fluid systems that generate discrete and uniform droplet patterns. Generating discrete and uniform droplets requires high-precision control over the experimental conditions of a fluid system. Fluid stream instabilities, such as Rayleigh-Plateau instability and capillary instability, drive the separation of a flow into individual droplets. However, because this phenomenon leverages an instability, by nature the hardware must be precisely tuned to achieve uniform, repeatable droplets. Typically this requires a domain expert in the loop and constant re-tuning depending on the hardware configuration and liquid precursor selection. Herein, we propose a computer vision-driven Bayesian optimization framework to discover the precise hardware conditions that generate uniform, reproducible droplets with the desired features, leveraging flow instability without a domain expert in the loop. This framework is validated on two fluid systems, at the micrometer and millimeter length scales, using microfluidic and inkjet systems, respectively, indicating the application breadth of this approach.

Online Preconditioning of Experimental Inkjet Hardware by Bayesian Optimization in Loop

May 06, 2021

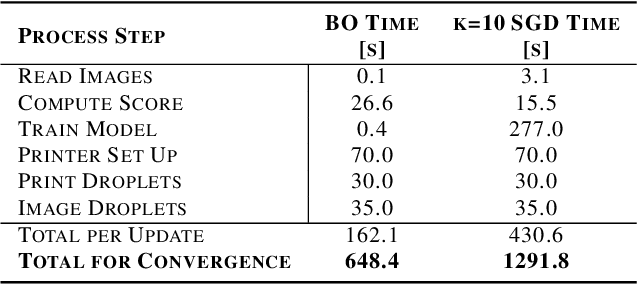

Abstract:High-performance semiconductor optoelectronics such as perovskites have high-dimensional and vast composition spaces that govern the performance properties of the material. To cost-effectively search these composition spaces, we utilize a high-throughput experimentation method of rapidly printing discrete droplets via inkjet deposition, in which each droplet is composed of a unique permutation of semiconductor materials. However, inkjet printer systems are not optimized to run high-throughput experimentation on semiconductor materials. Thus, in this work, we develop a computer vision-driven Bayesian optimization framework for optimizing the deposited droplet structures from an inkjet printer such that it is tuned to perform high-throughput experimentation on semiconductor materials. The goal of this framework is to tune the hardware conditions of the inkjet printer in the shortest amount of time using the fewest droplet samples, so that we minimize the time and resources spent on setting the system up for material discovery applications. We demonstrate convergence on optimum inkjet hardware conditions in 10 minutes using Bayesian optimization of computer vision-scored droplet structures. We compare our Bayesian optimization results with stochastic gradient descent.

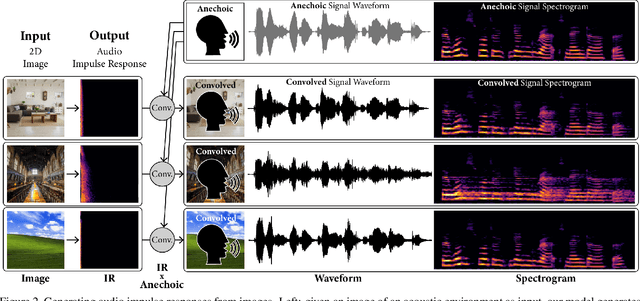

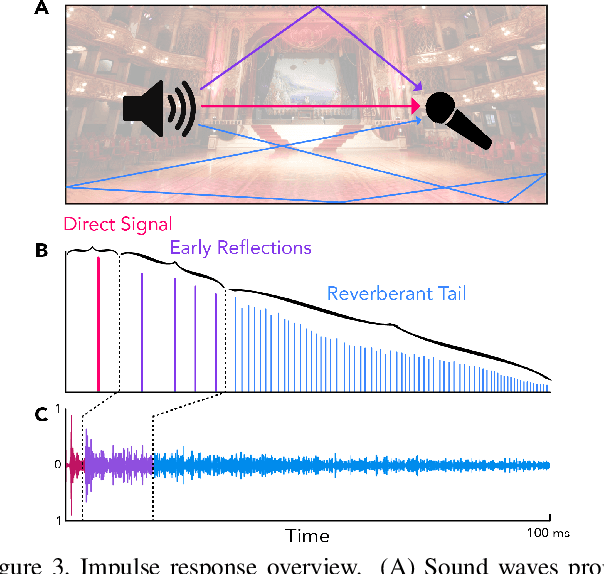

Image2Reverb: Cross-Modal Reverb Impulse Response Synthesis

Mar 26, 2021

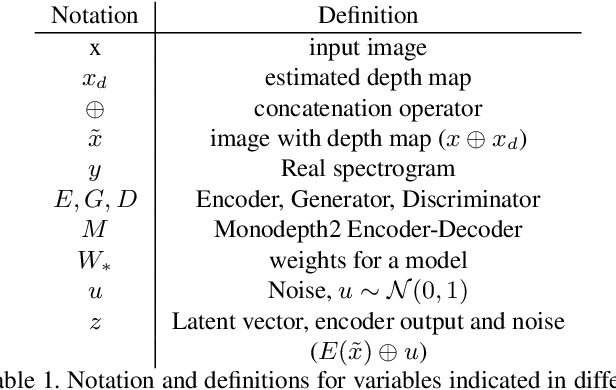

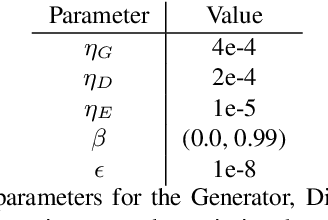

Abstract:Measuring the acoustic characteristics of a space is often done by capturing its impulse response (IR), a representation of how a full-range stimulus sound excites it. This is the first work that generates an IR from a single image, which we call Image2Reverb. This IR is then applied to other signals using convolution, simulating the reverberant characteristics of the space shown in the image. Recording these IRs is both time-intensive and expensive, and often infeasible for inaccessible locations. We use an end-to-end neural network architecture to generate plausible audio impulse responses from single images of acoustic environments. We evaluate our method both by comparisons to ground truth data and by human expert evaluation. We demonstrate our approach by generating plausible impulse responses from diverse settings and formats including well known places, musical halls, rooms in paintings, images from animations and computer games, synthetic environments generated from text, panoramic images, and video conference backgrounds.
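The step in which an impulse response is applied to another signal using convolution can be sketched as follows. This is a minimal direct (time-domain) convolution on plain Python lists; the function name and the toy sample values are hypothetical, and a practical implementation on real audio would more likely use an FFT-based routine from a numerical library.

```python
def convolve(signal, impulse_response):
    """Full discrete convolution: output length is len(signal) + len(ir) - 1."""
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            # Each input sample launches a scaled, delayed copy of the IR.
            out[i + j] += s * h
    return out


# Hypothetical toy data: a "dry" signal with two clicks and a short decaying IR.
dry = [1.0, 0.0, 0.0, 0.5]
ir = [1.0, 0.5, 0.25]
wet = convolve(dry, ir)
```

Each click in the dry signal is followed by its own decaying tail in `wet`, which is how the reverberant character encoded in the IR is transferred to the signal.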
