Matt Benatan

An adaptive approach to Bayesian Optimization with switching costs

May 14, 2024

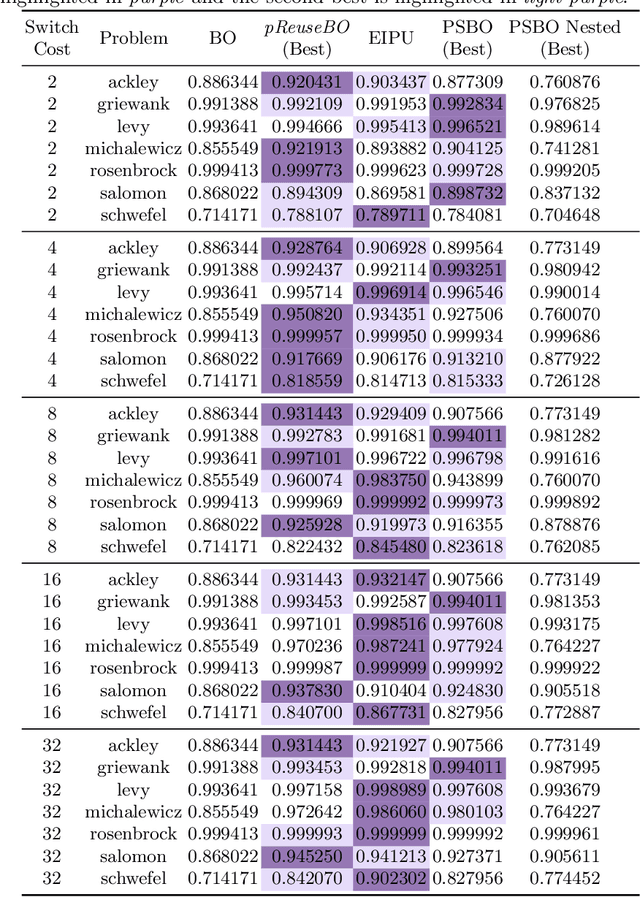

Abstract: We investigate modifications to Bayesian Optimization for a resource-constrained setting of sequential experimental design where changes to certain design variables of the search space incur a switching cost. This models the scenario where there is a trade-off between evaluating more while maintaining the same setup, or switching and restricting the number of possible evaluations due to the incurred cost. We adapt two process-constrained batch algorithms to this sequential problem formulation, and propose two new methods: one cost-aware and one cost-ignorant. We validate and compare the algorithms using a set of 7 scalable test functions in different dimensionalities and switching-cost settings for 30 total configurations. Our proposed cost-aware hyperparameter-free algorithm yields comparable results to tuned process-constrained algorithms in all settings we considered, suggesting some degree of robustness to varying landscape features and cost trade-offs. This method starts to outperform the other algorithms as the switching cost increases. Our work broadens out from other recent Bayesian Optimization studies in resource-constrained settings that consider a batch setting only. While the contributions of this work are relevant to the general class of resource-constrained problems, they are particularly relevant to problems where adaptability to varying resource availability is of high importance.

Model-agnostic variable importance for predictive uncertainty: an entropy-based approach

Oct 19, 2023

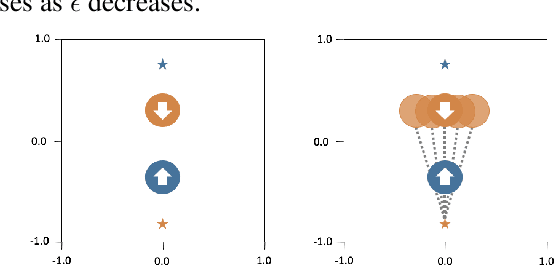

Abstract: In order to trust the predictions of a machine learning algorithm, it is necessary to understand the factors that contribute to those predictions. In the case of probabilistic and uncertainty-aware models, it is necessary to understand not only the reasons for the predictions themselves, but also the model's level of confidence in those predictions. In this paper, we show how existing methods in explainability can be extended to uncertainty-aware models and how such extensions can be used to understand the sources of uncertainty in a model's predictive distribution. In particular, by adapting permutation feature importance, partial dependence plots, and individual conditional expectation plots, we demonstrate that novel insights into model behaviour may be obtained and that these methods can be used to measure the impact of features on both the entropy of the predictive distribution and the log-likelihood of the ground truth labels under that distribution. With experiments using both synthetic and real-world data, we demonstrate the utility of these approaches in understanding both the sources of uncertainty and their impact on model performance.
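As a rough illustration of the idea of permutation feature importance applied to predictive entropy, the sketch below shuffles one feature at a time and records how much each sample's predictive entropy moves. It is not the paper's implementation: the toy classifier `toy_predict_proba`, the function names, and the choice of mean absolute per-sample entropy change as the score are all assumptions made for this example.

```python
import numpy as np


def predictive_entropy(probs):
    """Shannon entropy (in nats) of each row of class probabilities."""
    p = np.clip(probs, 1e-12, 1.0)
    return -np.sum(p * np.log(p), axis=1)


def toy_predict_proba(X):
    """Hypothetical binary classifier whose output depends only on feature 0."""
    p1 = 1.0 / (1.0 + np.exp(-3.0 * X[:, 0]))
    return np.column_stack([1.0 - p1, p1])


def entropy_permutation_importance(predict_proba, X, n_repeats=10, seed=0):
    """Mean absolute per-sample change in predictive entropy when a feature is shuffled.

    The scoring rule is an assumption for illustration, not the paper's definition.
    """
    rng = np.random.default_rng(seed)
    baseline = predictive_entropy(predict_proba(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        scores = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Break the link between feature j and the rest of each row.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            permuted = predictive_entropy(predict_proba(X_perm))
            scores.append(np.mean(np.abs(permuted - baseline)))
        importances[j] = np.mean(scores)
    return importances


data_rng = np.random.default_rng(42)
X = data_rng.normal(size=(500, 3))
importances = entropy_permutation_importance(toy_predict_proba, X)
print(importances)
```

In this toy setup only feature 0 influences the prediction, so it is the only feature whose shuffling changes the entropy; the other two score exactly zero. The same loop could score log-likelihood instead of entropy by swapping the per-sample quantity, given ground-truth labels.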

SonOpt: Sonifying Bi-objective Population-Based Optimization Algorithms

Feb 24, 2022

Abstract: We propose SonOpt, the first (open source) data sonification application for monitoring the progress of bi-objective population-based optimization algorithms during search, to facilitate algorithm understanding. SonOpt provides insights into convergence/stagnation of search, the evolution of the approximation set shape, location of recurring points in the approximation set, and population diversity. The benefits of data sonification have been shown for various non-optimization related monitoring tasks. However, very few attempts have been made in the context of optimization and their focus has been exclusively on single-objective problems. In comparison, SonOpt is designed for bi-objective optimization problems, relies on objective function values of non-dominated solutions only, and is designed with the user (listener) in mind, avoiding convolution of multiple sounds and prioritising ease of familiarization with the system. This is achieved using two sonification paths relying on the concepts of wavetable and additive synthesis. This paper motivates and describes the architecture of SonOpt, and then validates SonOpt for two popular multi-objective optimization algorithms (NSGA-II and MOEA/D). Experience SonOpt yourself via https://github.com/tasos-a/SonOpt-1.0 .

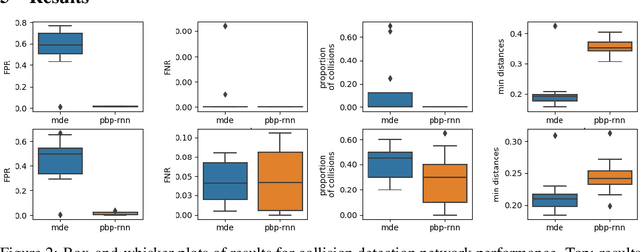

Fully Bayesian Recurrent Neural Networks for Safe Reinforcement Learning

Nov 26, 2019

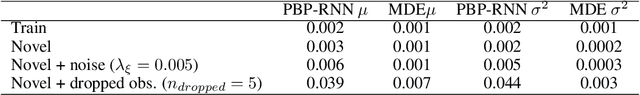

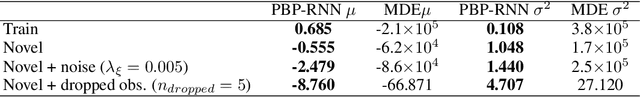

Abstract: Reinforcement Learning (RL) has demonstrated state-of-the-art results in a number of autonomous system applications; however, many of the underlying algorithms rely on black-box predictions. This results in poor explainability of the behaviour of these systems, raising concerns as to their use in safety-critical applications. Recent work has demonstrated that uncertainty-aware models exhibit more cautious behaviours through the incorporation of model uncertainty estimates. In this work, we build on Probabilistic Backpropagation to introduce a fully Bayesian Recurrent Neural Network architecture. We apply this within a Safe RL scenario, and demonstrate that the proposed method significantly outperforms a popular approach for obtaining model uncertainties in collision avoidance tasks. Furthermore, we demonstrate that the proposed approach requires less training and is far more efficient than the current leading method, both in terms of compute resource and memory footprint.