Matan Rusanovsky

Memories of Forgotten Concepts

Dec 01, 2024

Abstract: Diffusion models dominate the space of text-to-image generation, yet they may produce undesirable outputs, including explicit content or private data. To mitigate this, concept ablation techniques have been explored to limit the generation of certain concepts. In this paper, we reveal that the erased concept information persists in the model and that erased concept images can be generated using the right latent. Utilizing inversion methods, we show that there exist latent seeds capable of generating high-quality images of erased concepts. Moreover, we show that these latents have likelihoods that overlap with those of images outside the erased concept. We extend this to demonstrate that for every image from the erased concept set, we can generate many seeds that generate the erased concept. Given the vast space of latents capable of generating ablated concept images, our results suggest that fully erasing concept information may be intractable, highlighting possible vulnerabilities in current concept ablation techniques.

CapeX: Category-Agnostic Pose Estimation from Textual Point Explanation

Jun 01, 2024

Abstract: Conventional 2D pose estimation models are constrained by their design to specific object categories. This limits their applicability to predefined objects. To overcome these limitations, category-agnostic pose estimation (CAPE) emerged as a solution. CAPE aims to facilitate keypoint localization for diverse object categories using a unified model, which can generalize from minimal annotated support images. Recent CAPE works have produced object poses based on arbitrary keypoint definitions annotated on a user-provided support image. Our work departs from conventional CAPE methods, which require a support image, by adopting a text-based approach instead of the support image. Specifically, we use a pose-graph, where nodes represent keypoints that are described with text. This representation takes advantage of the abstraction of text descriptions and the structure imposed by the graph. Our approach effectively breaks symmetry, preserves structure, and improves occlusion handling. We validate our novel approach using the MP-100 benchmark, a comprehensive dataset spanning over 100 categories and 18,000 images. Under a 1-shot setting, our solution achieves a notable performance boost of 1.07%, establishing a new state-of-the-art for CAPE. Additionally, we enrich the dataset by providing text description annotations, further enhancing its utility for future research.

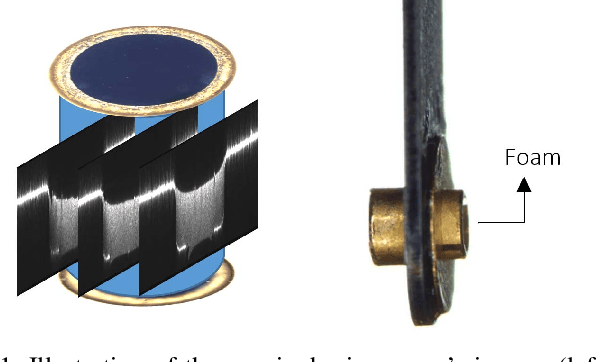

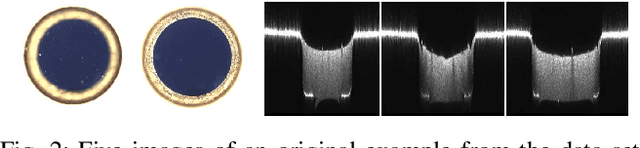

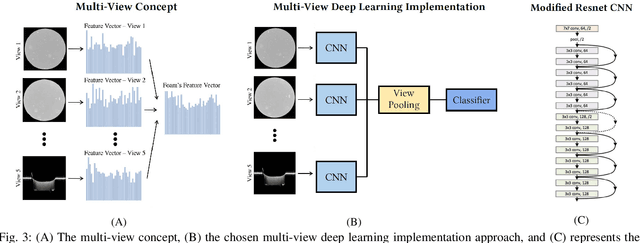

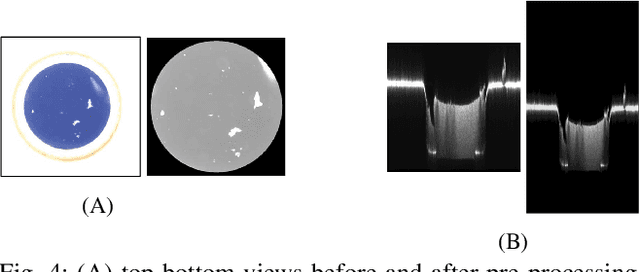

Determining HEDP Foams' Quality with Multi-View Deep Learning Classification

Aug 10, 2022

Abstract: High energy density physics (HEDP) experiments commonly involve a dynamic wave-front propagating inside a low-density foam. This propagation affects the foam's density and hence its transparency. A common problem in foam production is the creation of defective foams. Accurate information on their dimensions and homogeneity is required to classify the foams' quality. Therefore, those parameters are characterized using a 3D-measuring laser confocal microscope. For each foam, five images are taken: two 2D images representing the top and bottom surface foam planes and three images of side cross-sections from 3D scans. An expert has to do the complicated, harsh, and exhausting work of manually classifying the foam's quality through the image set and only then determine whether the foam can be used in experiments or not. Currently, quality has two binary levels of normal vs. defective. At the same time, experts are commonly required to classify a sub-class of normal-defective, i.e., foams that are defective but might be sufficient for the needed experiment. This sub-class is problematic due to inconclusive judgment that is primarily intuitive. In this work, we present a novel state-of-the-art multi-view deep learning classification model that mimics the physicist's perspective by automatically determining the foams' quality classification and thus aids the expert. Our model achieved 86% accuracy on upper and lower surface foam planes and 82% on the entire set, suggesting interesting heuristics to the problem. A significant added value in this work is the ability to regress the foam quality instead of binary deduction and even explain the decision visually. The source code used in this work, as well as other relevant sources, is available at: https://github.com/Scientific-Computing-Lab-NRCN/Multi-View-Foams.git

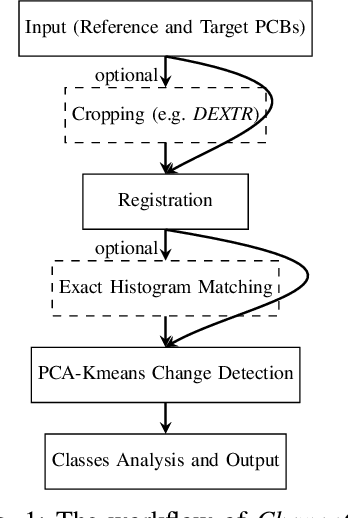

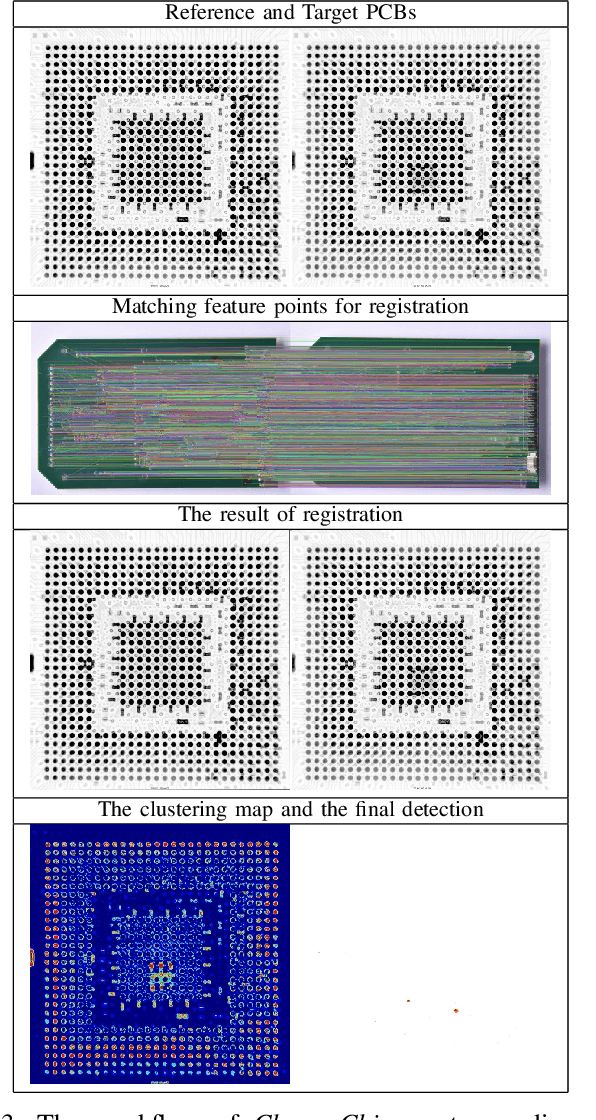

ChangeChip: A Reference-Based Unsupervised Change Detection for PCB Defect Detection

Sep 13, 2021

Abstract: The use of electronic devices is increasing and becoming predominant in most aspects of life. Surface Mount Technology (SMT) is the most common industrial method for manufacturing electronic devices, in which electrical components are mounted directly onto the surface of a Printed Circuit Board (PCB). Although the expansion of electronic devices affects our lives in a productive way, failures or defects in the manufacturing procedure of those devices might also be counterproductive and even harmful in some cases. It is therefore desired and sometimes crucial to ensure zero-defect quality in electronic devices and their production. While traditional Image Processing (IP) techniques are not sufficient to produce a complete solution, other promising methods like Deep Learning (DL) might also be challenging for PCB inspection, mainly because such methods require large, adequate datasets, which are missing, unavailable, or outdated in the rapidly growing field of PCBs. Thus, PCB inspection is conventionally performed manually by human experts. Unsupervised Learning (UL) methods may potentially be suitable for PCB inspection, having learning capabilities on the one hand, while not relying on large datasets on the other. In this paper, we introduce ChangeChip, an automated and integrated change detection system for defect detection in PCBs, from soldering defects to missing or misaligned electronic elements, based on Computer Vision (CV) and UL. We achieve good-quality defect detection by applying unsupervised change detection between images of a golden PCB (reference) and the inspected PCB under various settings. In this work, we also present CD-PCB, a synthesized labeled dataset of 20 pairs of PCB images for evaluation of defect detection algorithms.
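
The idea of comparing a golden reference image against an inspected one can be illustrated with a minimal sketch. This is not ChangeChip's actual algorithm, which the abstract does not specify. It assumes two already-aligned grayscale images given as equal-sized 2D lists, and the function name `change_mask` and the threshold rule (mean plus `k` standard deviations of the difference map) are hypothetical choices made only for illustration.

```python
from statistics import mean, pstdev


def change_mask(reference, inspected, k=3.0):
    """Flag pixels whose absolute difference from the reference is unusually large.

    Hypothetical helper, not the ChangeChip method: both images are assumed to be
    aligned, equal-sized 2D lists of grayscale values. The threshold
    mean + k * std of the difference map is an illustrative choice.
    """
    same_rows = len(reference) == len(inspected)
    if not same_rows or any(len(r) != len(i) for r, i in zip(reference, inspected)):
        raise ValueError("images must have the same shape")

    # Per-pixel absolute difference between the golden and the inspected image.
    diff = [
        [abs(a - b) for a, b in zip(ref_row, insp_row)]
        for ref_row, insp_row in zip(reference, inspected)
    ]

    # No labels are used: the threshold comes from the difference statistics alone.
    flat = [d for row in diff for d in row]
    threshold = mean(flat) + k * pstdev(flat)

    return [[d > threshold for d in row] for row in diff]


if __name__ == "__main__":
    golden = [[100] * 5 for _ in range(5)]
    board = [row[:] for row in golden]
    board[2][2] = 200  # simulate a single defective pixel
    for row in change_mask(golden, board):
        print("".join("#" if changed else "." for changed in row))
```

A real system would also need image registration and illumination normalization before differencing, which this sketch deliberately omits.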

An End-to-End Computer Vision Methodology for Quantitative Metallography

Apr 22, 2021

Abstract: Metallography is crucial for a proper assessment of a material's properties. It involves mainly the investigation of the spatial distribution of grains and the occurrence and characteristics of inclusions or precipitates. This work presents a holistic artificial intelligence model for Anomaly Detection that automatically quantifies the degree of anomaly of impurities in alloys. We suggest the following examination process: (1) Deep semantic segmentation is performed on the inclusions (based on a suitable metallographic database of alloys and corresponding tags of inclusions), producing inclusion masks that are saved into a separate database. (2) Deep image inpainting is performed to fill the removed inclusion parts, resulting in 'clean' metallographic images, which contain the background of grains. (3) Grains' boundaries are marked using deep semantic segmentation (based on another metallographic database of alloys), producing boundaries that are ready for further inspection of the distribution of grains' size. (4) Deep anomaly detection and pattern recognition is performed on the inclusion masks to determine spatial, shape and area anomaly detection of the inclusions. Finally, the system recommends areas of interest to an expert for further examination. The performance of the model is presented and analyzed based on a few representative cases. Although the models presented here were developed for metallography analysis, most of them can be generalized to a wider set of problems in which anomaly detection of geometrical objects is desired. All models, as well as the data-sets that were created for this work, are publicly available at https://github.com/Scientific-Computing-Lab-NRCN/MLography.

Complete CVDL Methodology for Investigating Hydrodynamic Instabilities

Apr 26, 2020

Abstract: In fluid dynamics, one of the most important research fields is hydrodynamic instabilities and their evolution in different flow regimes. The investigation of said instabilities is concerned with highly non-linear dynamics. Currently, three main methods are used for understanding such phenomena - namely analytical models, experiments and simulations - and all of them are primarily investigated and correlated using human expertise. In this work we claim and demonstrate that a major portion of this research effort could and should be analysed using recent breakthrough advancements in the field of Computer Vision with Deep Learning (CVDL, or Deep Computer-Vision). Specifically, we target and evaluate specific state-of-the-art techniques - such as Image Retrieval, Template Matching, Parameters Regression and Spatiotemporal Prediction - for the quantitative and qualitative benefits they provide. In order to do so we focus in this research on one of the most representative instabilities, the Rayleigh-Taylor one, simulate its behaviour and create an open-sourced state-of-the-art annotated database (RayleAI). Finally, we use adjusted experimental results and novel physical loss methodologies to validate the correspondence of the predicted results to actual physical reality to prove the models' efficiency. The techniques which were developed and proved in this work can serve as essential tools for physicists in the field of hydrodynamics for investigating a variety of physical systems, and could also be applied via Transfer Learning to research on other instabilities. Some of the techniques can be easily applied to existing simulation results. All models, as well as the data-set that was created for this work, are publicly available at: https://github.com/scientific-computing-nrcn/SimulAI.

MLography: An Automated Quantitative Metallography Model for Impurities Anomaly Detection using Novel Data Mining and Deep Learning Approach

Feb 27, 2020

Abstract: The micro-structure of most engineering alloys contains some inclusions and precipitates, which may affect their properties; it is therefore crucial to characterize them. In this work we focus on the development of a state-of-the-art artificial intelligence model for Anomaly Detection named MLography to automatically quantify the degree of anomaly of impurities in alloys. For this purpose, we introduce several anomaly detection measures: Spatial, Shape and Area anomaly, that successfully detect the most anomalous objects based on their objective, given that the impurities were already labeled. The first two measures quantify the degree of anomaly of each object by how distant and large it is compared to its neighborhood, and by the abnormality of its own shape, respectively. The last measure combines the former two and highlights the most anomalous regions among all input images, for later (physical) examination. The performance of the model is presented and analyzed based on a few representative cases. We stress that although the models presented here were developed for metallography analysis, most of them can be generalized to a wider set of problems in which anomaly detection of geometrical objects is desired. All models, as well as the data-set that was created for this work, are publicly available at: https://github.com/matanr/MLography.
