Masud Moshtaghi

Controlled Text Generation with Hidden Representation Transformations

May 31, 2023

Abstract: We propose CHRT (Control Hidden Representation Transformation) - a controlled language generation framework that steers large language models to generate text pertaining to certain attributes (such as toxicity). CHRT gains attribute control by modifying the hidden representation of the base model through learned transformations. We employ a contrastive-learning framework to learn these transformations that can be combined to gain multi-attribute control. The effectiveness of CHRT is experimentally shown by comparing it with seven baselines over three attributes. CHRT outperforms all the baselines in the task of detoxification, positive sentiment steering, and text simplification while minimizing the loss in linguistic qualities. Further, our approach has the lowest inference latency of only 0.01 seconds more than the base model, making it the most suitable for high-performance production environments. We open-source our code and release two novel datasets to further propel controlled language generation research.

Online Cluster Validity Indices for Streaming Data

Jan 08, 2018

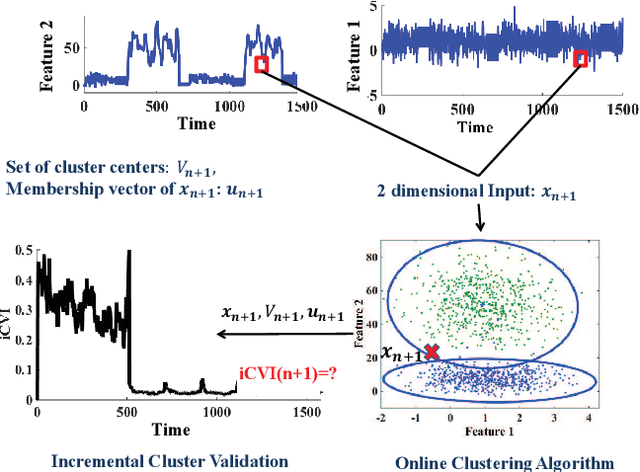

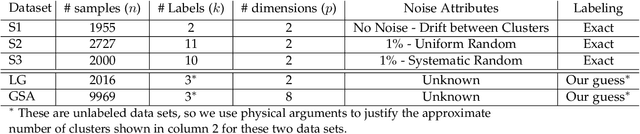

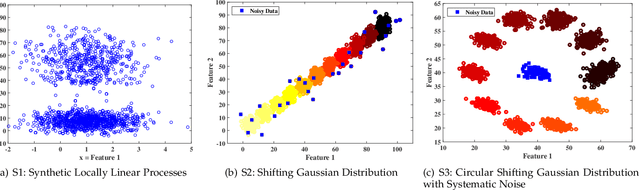

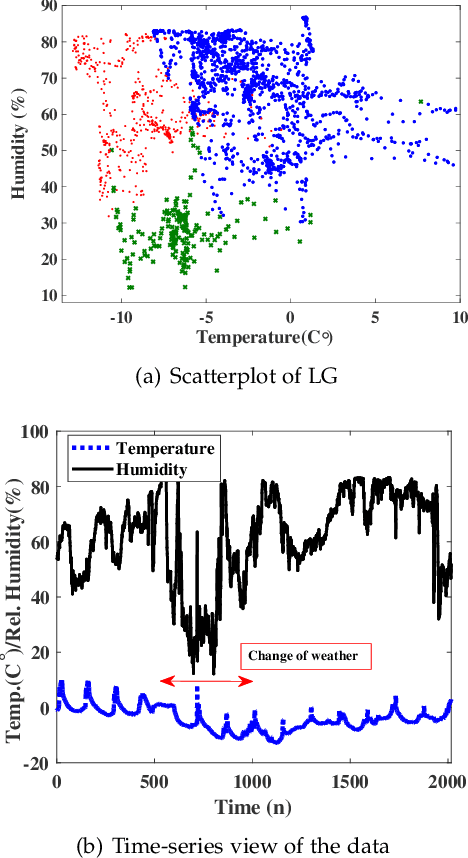

Abstract: Cluster analysis is used to explore structure in unlabeled data sets in a wide range of applications. An important part of cluster analysis is validating the quality of computationally obtained clusters. A large number of different internal indices have been developed for validation in the offline setting. However, this concept has not been extended to the online setting. A key challenge is to find an efficient incremental formulation of an index that can capture both cohesion and separation of the clusters over potentially infinite data streams. In this paper, we develop two online versions (with and without forgetting factors) of the Xie-Beni and Davies-Bouldin internal validity indices, and analyze their characteristics, using two streaming clustering algorithms (sk-means and online ellipsoidal clustering), and illustrate their use in monitoring evolving clusters in streaming data. We also show that incremental cluster validity indices are capable of sending a distress signal to online monitors when evolving clusters go awry. Our numerical examples indicate that the incremental Xie-Beni index with forgetting factor is superior to the other three indices tested.
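To make the idea of an incremental validity index concrete, the following is a minimal Python sketch of a crisp Xie-Beni-style ratio (cohesion divided by the smallest squared distance between centers) maintained over a stream. It is an illustration under stated assumptions, not the formulation from the paper: the class name `OnlineXieBeni`, the `forgetting` parameter, the sequential k-means center update, and the choice to start every cluster count at 1 are all hypothetical.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class OnlineXieBeni:
    """Illustrative streaming Xie-Beni-style index (assumed design, not the paper's)."""

    def __init__(self, centers, forgetting=1.0):
        # Assumption: initial centers are supplied and each counts as one point.
        self.centers = [list(c) for c in centers]
        self.counts = [1.0] * len(self.centers)
        self.forgetting = forgetting  # 1.0 means no forgetting
        self.compactness = 0.0        # discounted sum of squared distances
        self.weight = 0.0             # discounted number of points seen

    def update(self, x):
        # Assign the point to its nearest center.
        k = min(range(len(self.centers)),
                key=lambda i: sq_dist(x, self.centers[i]))
        # Sequential k-means step: move the winning center toward x.
        self.counts[k] += 1.0
        rate = 1.0 / self.counts[k]
        self.centers[k] = [c + rate * (xi - c)
                           for c, xi in zip(self.centers[k], x)]
        # Discount the history, then add the new point's contribution.
        d2 = sq_dist(x, self.centers[k])
        self.compactness = self.forgetting * self.compactness + d2
        self.weight = self.forgetting * self.weight + 1.0
        return self.value()

    def value(self):
        # Separation: smallest squared distance between any two centers.
        sep = min(sq_dist(a, b)
                  for i, a in enumerate(self.centers)
                  for b in self.centers[i + 1:])
        if self.weight == 0.0:
            return 0.0
        if sep == 0.0:
            return math.inf
        return self.compactness / (self.weight * sep)
```

Lower values indicate tighter, better separated clusters. In this sketch a `forgetting` value below 1 discounts older points geometrically, so a sudden rise in the index reflects recent data rather than the whole history.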
