Masaharu Munetomo

Introducing Competitive Mechanism to Differential Evolution for Numerical Optimization

Jun 08, 2024

Abstract: This paper introduces a novel competitive mechanism into differential evolution (DE), presenting an effective DE variant named competitive DE (CDE). CDE features a simple yet efficient mutation strategy: DE/winner-to-best/1. Essentially, the proposed DE/winner-to-best/1 strategy can be regarded as an integration of the existing mutation strategies DE/rand-to-best/1 and DE/cur-to-best/1. The incorporation of DE/winner-to-best/1 and the competitive mechanism provides new avenues for advancing DE techniques. Moreover, in CDE, the scaling factor $F$ and crossover rate $Cr$ are drawn from a normal distribution, as suggested by previous research. To investigate the performance of the proposed CDE, comprehensive numerical experiments are conducted on CEC2017 and engineering simulation optimization tasks, with CMA-ES, JADE, and other state-of-the-art optimizers and DE variants employed as competitor algorithms. The experimental results and statistical analyses highlight the promising potential of CDE as an alternative optimizer for addressing diverse optimization challenges.
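The abstract names the two standard strategies that DE/winner-to-best/1 combines but does not give its formula. The sketch below writes out DE/rand-to-best/1 and DE/cur-to-best/1 in their usual textbook form and then shows one plausible reading of the new strategy, in which the base vector is the winner of a pairwise competition between two random individuals. That reading, the function names, and the `mu`/`sigma` defaults in `sample_f_cr` are assumptions for illustration, not the paper's definitions.

```python
import random


def rand_to_best_1(pop, best, F, rng):
    """Standard DE/rand-to-best/1: the base vector is a random individual."""
    r1, r2, r3 = rng.sample(range(len(pop)), 3)
    return [pop[r1][d] + F * (best[d] - pop[r1][d]) + F * (pop[r2][d] - pop[r3][d])
            for d in range(len(best))]


def cur_to_best_1(pop, i, best, F, rng):
    """Standard DE/cur-to-best/1: the base vector is the current individual i."""
    r1, r2 = rng.sample([k for k in range(len(pop)) if k != i], 2)
    return [pop[i][d] + F * (best[d] - pop[i][d]) + F * (pop[r1][d] - pop[r2][d])
            for d in range(len(best))]


def winner_to_best_1(pop, fitness, best, F, rng):
    """ASSUMED form of DE/winner-to-best/1 (not taken from the paper).

    Two random individuals compete; the one with lower fitness (minimization)
    becomes the base vector, which is then pulled toward the best individual.
    """
    a, b, r1, r2 = rng.sample(range(len(pop)), 4)
    w = a if fitness[a] <= fitness[b] else b
    return [pop[w][d] + F * (best[d] - pop[w][d]) + F * (pop[r1][d] - pop[r2][d])
            for d in range(len(best))]


def sample_f_cr(rng, mu=0.5, sigma=0.3):
    """Draw F and Cr from a normal distribution; mu and sigma are placeholders."""
    F = rng.gauss(mu, sigma)
    Cr = min(1.0, max(0.0, rng.gauss(mu, sigma)))  # Cr is a probability, so clip it
    return F, Cr
```

With `F = 0` the assumed strategy returns the competition winner unchanged, which makes the role of the base vector easy to check in isolation.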

Large Language Model Assisted Adversarial Robustness Neural Architecture Search

Jun 08, 2024

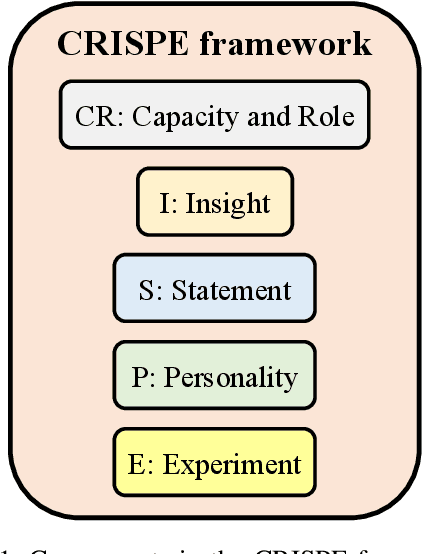

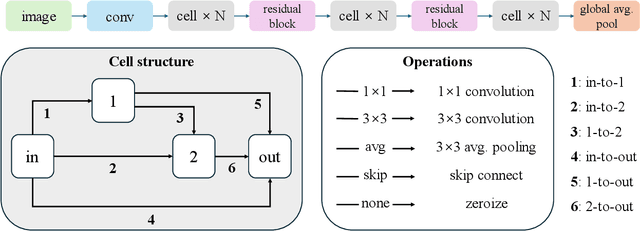

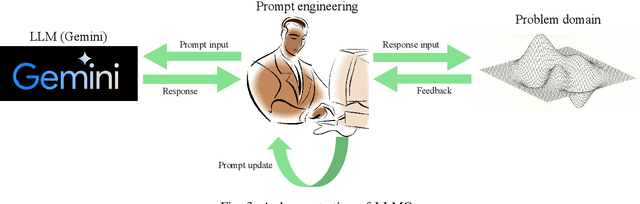

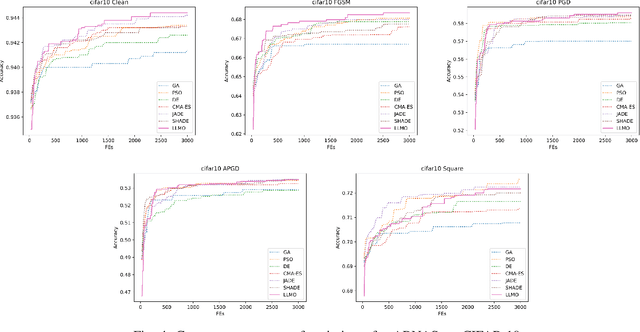

Abstract: Motivated by the potential of large language models (LLMs) as optimizers for solving combinatorial optimization problems, this paper proposes a novel LLM-assisted optimizer (LLMO) to address adversarial robustness neural architecture search (ARNAS), a specific application of combinatorial optimization. We design the prompt using the standard CRISPE framework (i.e., Capacity and Role, Insight, Statement, Personality, and Experiment). In this study, we employ Gemini, a powerful LLM developed by Google. We iteratively refine the prompt, and the responses from Gemini are adapted as solutions to ARNAS instances. Numerical experiments are conducted on NAS-Bench-201-based ARNAS tasks with CIFAR-10 and CIFAR-100 datasets. Six well-known meta-heuristic algorithms (MHAs) including genetic algorithm (GA), particle swarm optimization (PSO), differential evolution (DE), and its variants serve as baselines. The experimental results confirm the competitiveness of the proposed LLMO and highlight the potential of LLMs as effective combinatorial optimizers. The source code of this research can be downloaded from https://github.com/RuiZhong961230/LLMO.

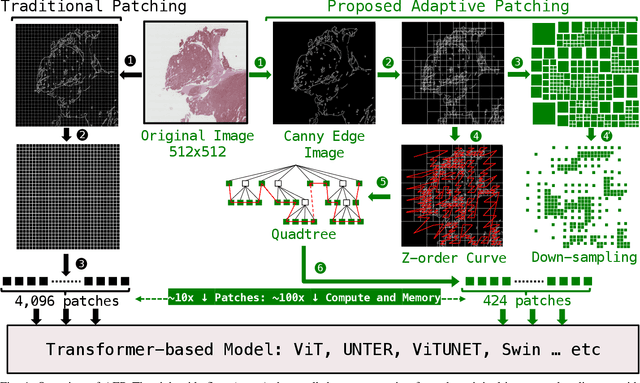

Adaptive Patching for High-resolution Image Segmentation with Transformers

Apr 15, 2024

Abstract: Attention-based models are proliferating in the space of image analytics, including segmentation. The standard method of feeding images to transformer encoders is to divide the images into patches and then feed the patches to the model as a linear sequence of tokens. For high-resolution images, e.g., microscopic pathology images, the quadratic compute and memory cost prohibits the use of an attention-based model if we are to use the smaller patch sizes that are favorable in segmentation. Existing solutions either use custom, complex multi-resolution models or approximate attention schemes. We take inspiration from Adaptive Mesh Refinement (AMR) methods in HPC and adaptively patch the images, as a pre-processing step, based on the image details, reducing the number of patches fed to the model by orders of magnitude. This method has negligible overhead and works seamlessly with any attention-based model, i.e., it is a pre-processing step that can be adopted by any attention-based model without friction. We demonstrate superior segmentation quality over SoTA segmentation models for real-world pathology datasets while gaining a geomean speedup of $6.9\times$ for resolutions up to $64K^2$, on up to $2,048$ GPUs.
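The idea of patching by image detail can be illustrated with a quadtree, a common structure in AMR-style refinement. The sketch below is a minimal illustration that assumes a square grayscale image with a power-of-two side, stored as a list of rows. The detail score (intensity range), the `threshold`, and the function names are hypothetical choices; the abstract does not say which criterion the paper uses.

```python
def detail(img, r, c, size):
    """Detail score of a square region: max minus min intensity (assumed criterion)."""
    vals = [img[i][j] for i in range(r, r + size) for j in range(c, c + size)]
    return max(vals) - min(vals)


def adaptive_patches(img, r=0, c=0, size=None, min_size=2, threshold=10):
    """Return (row, col, size) patches: fine where detail is high, coarse elsewhere."""
    if size is None:
        size = len(img)
    # Stop splitting when the region is small enough or nearly uniform.
    if size <= min_size or detail(img, r, c, size) <= threshold:
        return [(r, c, size)]
    half = size // 2
    patches = []
    for dr in (0, half):
        for dc in (0, half):
            patches += adaptive_patches(img, r + dr, c + dc, half, min_size, threshold)
    return patches


if __name__ == "__main__":
    image = [[0] * 8 for _ in range(8)]
    image[0][0] = 255  # a single detailed corner
    print(adaptive_patches(image))
```

An 8x8 image tiled uniformly with 2x2 patches yields 16 tokens. Here only the detailed corner is refined, so far fewer patches reach the model. Resampling the patches to a common token size is presumably still required and is omitted here.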

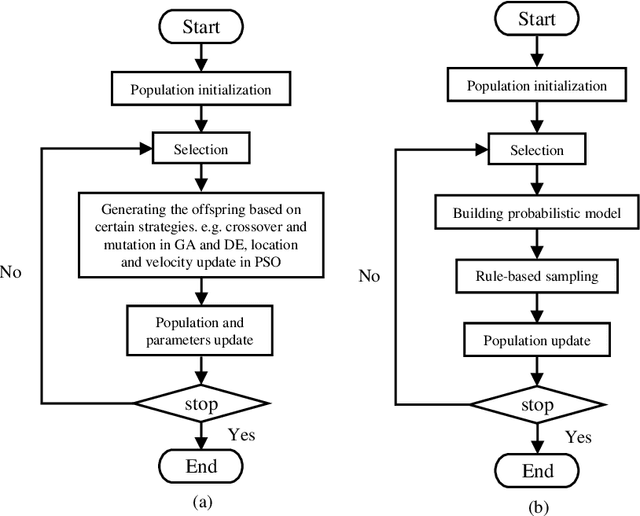

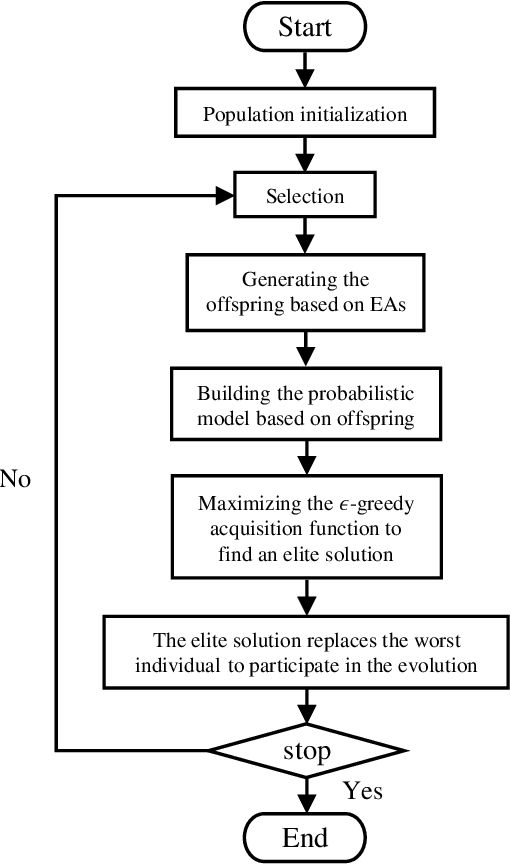

Accelerating the Evolutionary Algorithms by Gaussian Process Regression with $ε$-greedy acquisition function

Oct 13, 2022

Abstract: In this paper, we propose a novel method to estimate the elite individual to accelerate the convergence of optimization. Inspired by the Bayesian Optimization Algorithm (BOA), Gaussian Process Regression (GPR) is applied to approximate the fitness landscape of the original problem in every generation of optimization. A simple but efficient $\epsilon$-greedy acquisition function is then employed to find a promising solution in the surrogate model. The Proximity Optimal Principle (POP) states that well-performing solutions have a similar structure, and that there is a high probability of better solutions existing around the elite individual. Based on this hypothesis, in each generation of optimization, we replace the worst individual in Evolutionary Algorithms (EAs) with the elite individual to participate in the evolution process. To illustrate the scalability of our proposal, we combine it with the Genetic Algorithm (GA), Differential Evolution (DE), and CMA-ES. Experimental results on the CEC2013 benchmark functions show that our proposal has broad prospects for estimating the elite individual and accelerating the convergence of optimization.
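The two mechanical steps described here, an $\epsilon$-greedy choice over surrogate predictions and the replacement of the worst individual by the chosen elite, can be sketched as follows. The sketch assumes minimization and takes the surrogate as any callable that returns a predicted fitness. In the paper that role is played by a fitted GPR model, which is not reproduced here. All function names are hypothetical.

```python
import random


def epsilon_greedy_pick(candidates, surrogate, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit the surrogate's minimum."""
    if rng.random() < epsilon:
        return rng.choice(candidates)      # exploration: any candidate
    return min(candidates, key=surrogate)  # exploitation: best predicted fitness


def inject_elite(pop, fitness, elite, elite_fitness):
    """Replace the worst individual (highest fitness, minimization) with the elite."""
    worst = max(range(len(pop)), key=lambda k: fitness[k])
    pop[worst] = elite
    fitness[worst] = elite_fitness
    return worst


if __name__ == "__main__":
    rng = random.Random(0)
    sphere = lambda x: sum(v * v for v in x)  # stand-in for a GPR prediction
    cands = [[rng.uniform(-5, 5) for _ in range(2)] for _ in range(20)]
    print(epsilon_greedy_pick(cands, sphere, epsilon=0.1, rng=rng))
```

Setting `epsilon` to 0 makes the pick purely greedy, while a small positive value occasionally injects a random candidate and so guards against an inaccurate surrogate.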

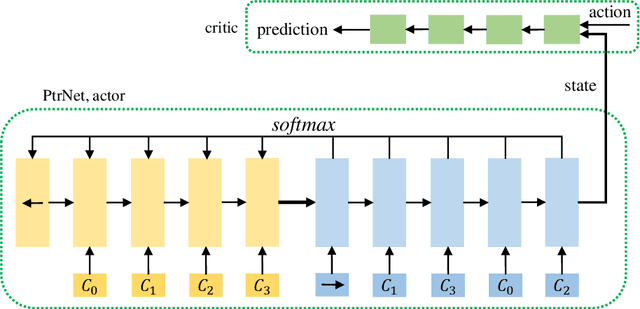

Accelerating the Genetic Algorithm for Large-scale Traveling Salesman Problems by Cooperative Coevolutionary Pointer Network with Reinforcement Learning

Sep 27, 2022

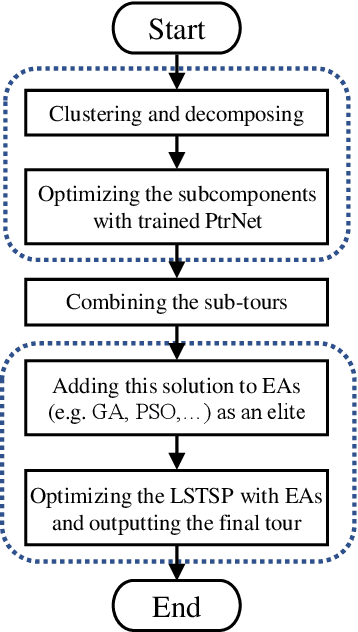

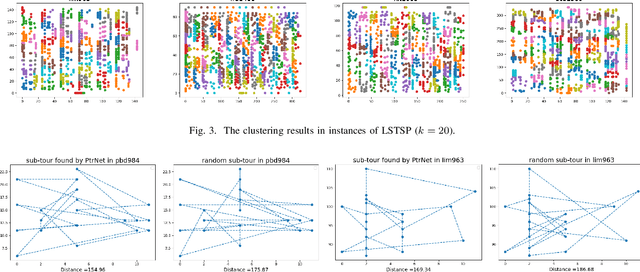

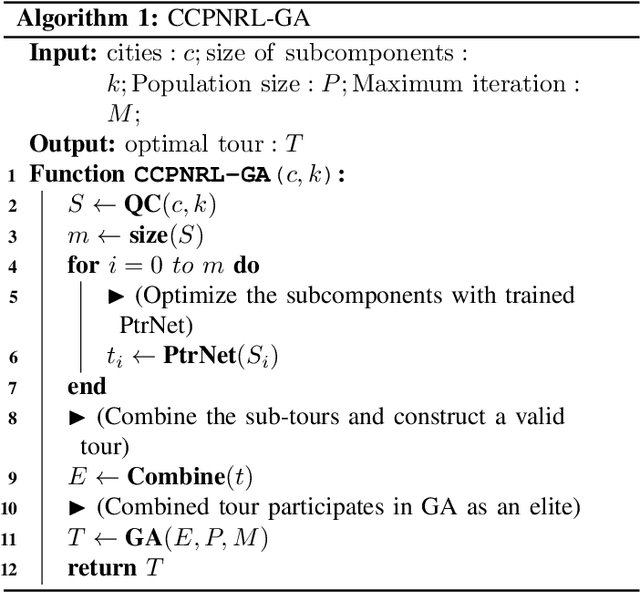

Abstract: In this paper, we propose a two-stage optimization strategy for solving Large-scale Traveling Salesman Problems (LSTSPs), named CCPNRL-GA. First, we hypothesize that the participation of a well-performing individual as an elite can accelerate the convergence of optimization. Based on this hypothesis, in the first stage, we cluster the cities and decompose the LSTSP into multiple subcomponents, and each subcomponent is optimized with a reusable Pointer Network (PtrNet). After the subcomponents are optimized, we combine all sub-tours to form a valid solution, which then joins the second stage of optimization with a GA. We validate the performance of our proposal on 10 LSTSPs and compare it with traditional EAs. Experimental results show that the participation of an elite individual can greatly accelerate the optimization of LSTSPs, and that our proposal has broad prospects for dealing with LSTSPs.
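The first stage (cluster, solve each cluster, concatenate sub-tours into one valid tour) can be sketched without a neural network. In the sketch below a nearest-neighbor heuristic stands in for the Pointer Network, and a fixed grid over the unit square stands in for the clustering step. Both are swapped-in simplifications, and every name is hypothetical.

```python
import math


def grid_clusters(cities, cells=2):
    """Group city indices by the cell of a cells x cells grid (cities in [0, 1)^2)."""
    clusters = {}
    for idx, (x, y) in enumerate(cities):
        key = (min(int(x * cells), cells - 1), min(int(y * cells), cells - 1))
        clusters.setdefault(key, []).append(idx)
    return [clusters[k] for k in sorted(clusters)]


def nearest_neighbor_subtour(cities, members):
    """Greedy sub-tour over one cluster (stand-in for the PtrNet solver)."""
    tour = [members[0]]
    remaining = set(members[1:])
    while remaining:
        last = cities[tour[-1]]
        nxt = min(remaining, key=lambda k: math.dist(last, cities[k]))
        tour.append(nxt)
        remaining.remove(nxt)
    return tour


def elite_tour(cities, cells=2):
    """Concatenate the sub-tours into one permutation of all cities."""
    tour = []
    for members in grid_clusters(cities, cells):
        tour += nearest_neighbor_subtour(cities, members)
    return tour


def tour_length(cities, tour):
    """Length of the closed tour, including the edge back to the start."""
    return sum(math.dist(cities[tour[k]], cities[tour[(k + 1) % len(tour)]])
               for k in range(len(tour)))
```

The resulting permutation would be placed in the GA's initial population as the elite individual, alongside randomly generated tours.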

Cooperative coevolutionary Modified Differential Evolution with Distance-based Selection for Large-Scale Optimization Problems in noisy environments through an automatic Random Grouping

Sep 06, 2022

Abstract: Many optimization problems suffer from noise, and nonlinearity check-based decomposition methods (e.g., Differential Grouping) completely fail to detect the interactions between variables in multiplicative noisy environments. It is therefore difficult to decompose large-scale optimization problems (LSOPs) in noisy environments. In this paper, we propose an automatic Random Grouping (aRG), which does not need any explicit hyperparameter specified by users. Simulation experiments and mathematical analysis show that aRG can detect the interactions between variables without knowledge of the fitness landscape, and that the sub-problems decomposed by aRG have smaller scales, which makes them easier for EAs to optimize. Based on the cooperative coevolution (CC) framework, we introduce an advanced optimizer named Modified Differential Evolution with Distance-based Selection (MDE-DS) to enhance the search ability in noisy environments. Compared with canonical DE, the parameter self-adaptation, the balance between diversification and intensification, and the distance-based probability selection endow MDE-DS with stronger exploration and exploitation abilities. To evaluate the performance of our proposal, we design $500$-D and $1000$-D problems with various degrees of separability in noisy environments based on the CEC2013 LSGO Suite. Numerical experiments show that our proposal has broad prospects for solving LSOPs in noisy environments and can be easily extended to higher-dimensional problems.
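For context, the sketch below shows classical random grouping, which shuffles the variables and cuts them into fixed-size groups, together with a simple multiplicative noise wrapper. Classical random grouping takes an explicit `group_size`, which is exactly the kind of user-specified hyperparameter that aRG is said to avoid. How aRG chooses its groups is not described in the abstract and is not modeled here. The noise form $f(x)\,(1 + \mathcal{N}(0, \sigma))$ and all names are assumptions.

```python
import random


def random_grouping(n_vars, group_size, rng):
    """Classical random grouping: shuffle variable indices, then slice into groups."""
    idx = list(range(n_vars))
    rng.shuffle(idx)
    return [idx[k:k + group_size] for k in range(0, n_vars, group_size)]


def with_multiplicative_noise(f, sigma, rng):
    """Wrap f so each evaluation is scaled by (1 + Gaussian noise); assumed noise model."""
    def noisy(x):
        return f(x) * (1.0 + rng.gauss(0.0, sigma))
    return noisy


if __name__ == "__main__":
    rng = random.Random(0)
    print(random_grouping(10, 3, rng))
    sphere = lambda x: sum(v * v for v in x)
    noisy_sphere = with_multiplicative_noise(sphere, 0.01, rng)
    # Two evaluations at the same point differ, which is what breaks
    # decomposition methods that compare fitness differences exactly.
    print(noisy_sphere([1.0, 2.0]), noisy_sphere([1.0, 2.0]))
```

In a cooperative coevolution loop, each group would be optimized in turn while the remaining variables are held at their current best values.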

Accelerating differential evolution algorithm with Gaussian sampling based on estimating the convergence points

Aug 31, 2022

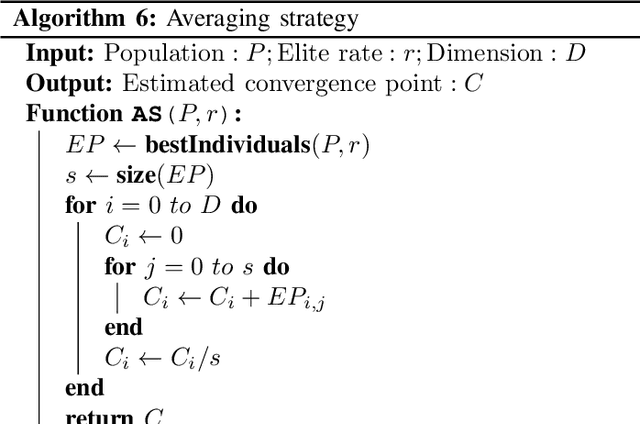

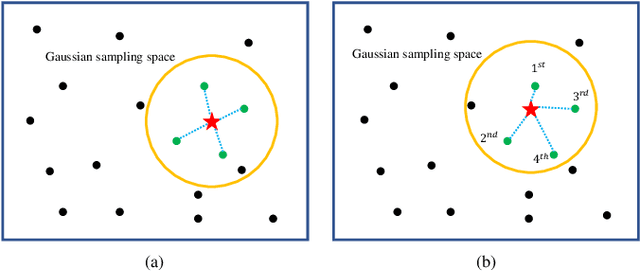

Abstract: In this paper, we propose a simple strategy for approximately estimating the convergence point by averaging the elite sub-population. Based on this idea, we derive two methods: an ordinary averaging strategy and a weighted averaging strategy. We also design a Gaussian sampling operator whose mean is the estimated convergence point and whose standard deviation is fixed in advance. This operator is combined with the traditional differential evolution algorithm (DE) to accelerate convergence. Numerical experiments show that our proposal can accelerate DE on most of the 28 low-dimensional test functions in the CEC2013 Suite, and that it can easily be combined with other population-based evolutionary algorithms with a simple modification.
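A minimal sketch of the two averaging strategies and the Gaussian sampling operator follows, assuming minimization. The abstract does not specify the weights used in the weighted strategy, so the rank-based weights here are an assumption, as are the function names and the `n_elite` and `sigma` parameters.

```python
import random


def estimate_convergence_point(pop, fitness, n_elite, weighted=False):
    """Average the n_elite best individuals, optionally with rank-based weights."""
    order = sorted(range(len(pop)), key=lambda k: fitness[k])[:n_elite]
    n = len(order)
    if weighted:
        # ASSUMED weights: the best elite gets n, the last elite gets 1.
        weights = [float(n - rank) for rank in range(n)]
    else:
        weights = [1.0] * n
    total = sum(weights)
    dim = len(pop[0])
    return [sum(w * pop[k][d] for w, k in zip(weights, order)) / total
            for d in range(dim)]


def gaussian_sample(center, sigma, rng):
    """Draw one candidate from N(center, sigma^2) independently per dimension."""
    return [rng.gauss(c, sigma) for c in center]


if __name__ == "__main__":
    rng = random.Random(0)
    sphere = lambda x: sum(v * v for v in x)
    pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(20)]
    fit = [sphere(ind) for ind in pop]
    center = estimate_convergence_point(pop, fit, n_elite=5, weighted=True)
    print(center, gaussian_sample(center, 0.1, rng))
```

A sampled candidate would be evaluated and kept only if it improves on an existing individual, so the operator can be added to DE or another population-based algorithm without altering its main loop.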

Cooperative coevolutionary hybrid NSGA-II with Linkage Measurement Minimization for Large-scale Multi-objective optimization

Aug 29, 2022

Abstract: In this paper, we propose a variable grouping method based on cooperative coevolution for large-scale multi-objective problems (LSMOPs), named Linkage Measurement Minimization (LMM). For the sub-problem optimization stage, a hybrid NSGA-II with a Gaussian sampling operator based on an estimated convergence point is proposed. In the variable grouping stage, following our previous research, we treat the variable grouping problem as a combinatorial optimization problem, and the linkage measurement function is designed based on linkage identification by the nonlinearity check on real code (LINC-R). We extend this variable grouping method to LSMOPs. In the sub-problem optimization stage, we hypothesize that better solutions are more likely to exist around the Pareto Front (PF). Based on this hypothesis, we estimate a convergence point at every generation of optimization and perform Gaussian sampling around the convergence point. The samples with good objective values participate in the optimization as elites. Numerical experiments show that our variable grouping method is better than some popular variable grouping methods, and that the hybrid NSGA-II has broad prospects for multi-objective problem optimization.
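The nonlinearity check behind LINC-R tests whether perturbing two variables together changes the objective by the sum of their individual effects. A minimal single-objective sketch, with `delta` and `eps` as illustrative parameters, is shown below. How LMM aggregates such checks into a linkage measurement function, and how the check is extended to multiple objectives, is not described in the abstract and is not attempted here.

```python
def interacts(f, x, i, j, delta=1.0, eps=1e-9):
    """Return True if variables i and j are linked under the nonlinearity check.

    Compares the joint change f(x + d_i + d_j) - f(x) with the sum of the
    separate changes; a mismatch larger than eps indicates an interaction.
    """
    def shifted(*dims):
        y = list(x)
        for d in dims:
            y[d] += delta
        return f(y)

    base = f(x)
    d_i = shifted(i) - base
    d_j = shifted(j) - base
    d_ij = shifted(i, j) - base
    return abs(d_ij - (d_i + d_j)) > eps


if __name__ == "__main__":
    # x0 and x1 are coupled through a product; x2 is separable.
    f = lambda x: x[0] * x[1] + x[2] ** 2
    point = [1.0, 2.0, 3.0]
    print(interacts(f, point, 0, 1), interacts(f, point, 0, 2))
```

The check uses four function evaluations per pair, so applying it to every pair of variables costs on the order of $n^2$ evaluations for $n$ variables.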

GTOPX Space Mission Benchmarks

Nov 05, 2020

Abstract: This contribution introduces the GTOPX space mission benchmark collection, which is an extension of the GTOP database published by the European Space Agency (ESA). GTOPX consists of ten individual benchmark instances representing real-world interplanetary space trajectory design problems. Compared with the original GTOP collection, GTOPX includes three new problem instances featuring mixed-integer and multi-objective properties. GTOPX provides simplified user handling, a unified benchmark function call, and some minor bug corrections to the original GTOP implementation. Furthermore, GTOPX is linked from its original C++ source code to Python and Matlab based on dynamic link libraries, assuring computationally fast and accurate reproduction of the benchmark results in all three programming languages. Space mission trajectory design problems such as those represented in GTOPX are known to be highly non-linear and difficult to solve. The GTOPX collection, therefore, aims particularly at researchers wishing to put advanced (meta)heuristic and hybrid optimization algorithms to the test. The goal of this paper is to provide researchers with a manual and reference to the newly available GTOPX benchmark software.