Maryam Hashemzadeh

Sub-goal Distillation: A Method to Improve Small Language Agents

May 04, 2024

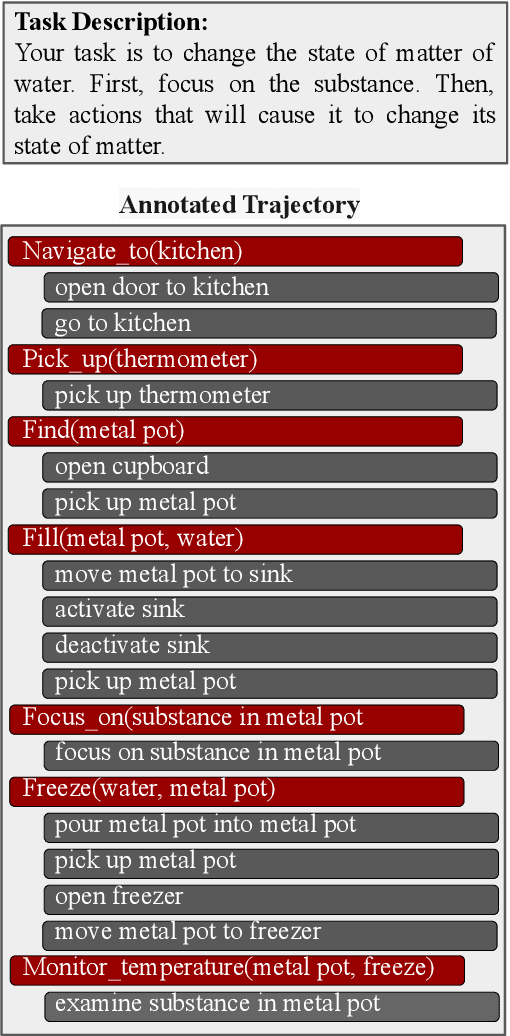

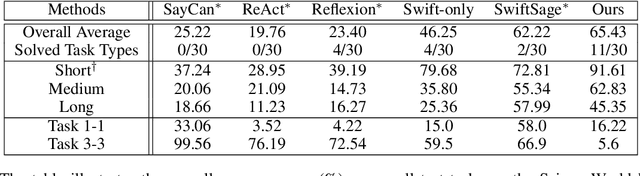

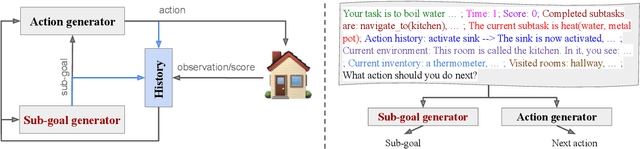

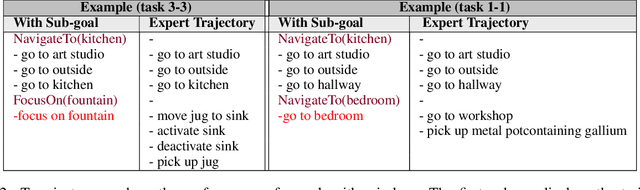

Abstract:While Large Language Models (LLMs) have demonstrated significant promise as agents in interactive tasks, their substantial computational requirements and restricted number of calls constrain their practical utility, especially in long-horizon interactive tasks such as decision-making or in scenarios involving continuous ongoing tasks. To address these constraints, we propose a method for transferring the performance of an LLM with billions of parameters to a much smaller language model (770M parameters). Our approach involves constructing a hierarchical agent comprising a planning module, which learns through Knowledge Distillation from an LLM to generate sub-goals, and an execution module, which learns to accomplish these sub-goals using elementary actions. In detail, we leverage an LLM to annotate an oracle path with a sequence of sub-goals towards completing a goal. Subsequently, we utilize this annotated data to fine-tune both the planning and execution modules. Importantly, neither module relies on real-time access to an LLM during inference, significantly reducing the overall cost associated with LLM interactions to a fixed cost. In ScienceWorld, a challenging and multi-task interactive text environment, our method surpasses standard imitation learning based solely on elementary actions by 16.7% (absolute). Our analysis highlights the efficiency of our approach compared to other LLM-based methods. Our code and annotated data for distillation can be found on GitHub.

From Language to Language-ish: How Brain-Like is an LSTM's Representation of Nonsensical Language Stimuli?

Oct 14, 2020

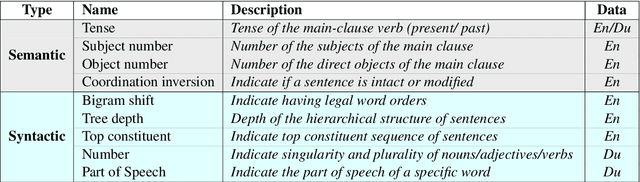

Abstract:The representations generated by many models of language (word embeddings, recurrent neural networks and transformers) correlate to brain activity recorded while people read. However, these decoding results are usually based on the brain's reaction to syntactically and semantically sound language stimuli. In this study, we asked: how does an LSTM (long short term memory) language model, trained (by and large) on semantically and syntactically intact language, represent a language sample with degraded semantic or syntactic information? Does the LSTM representation still resemble the brain's reaction? We found that, even for some kinds of nonsensical language, there is a statistically significant relationship between the brain's activity and the representations of an LSTM. This indicates that, at least in some instances, LSTMs and the human brain handle nonsensical data similarly.

Exploiting generalization in the subspaces for faster model-based learning

Oct 25, 2017

Abstract:Due to the lack of enough generalization in the state-space, common methods in Reinforcement Learning (RL) suffer from slow learning speed especially in the early learning trials. This paper introduces a model-based method in discrete state-spaces for increasing learning speed in terms of required experience (but not required computational time) by exploiting generalization in the experiences of the subspaces. A subspace is formed by choosing a subset of features in the original state representation (full-space). Generalization and faster learning in a subspace are due to many-to-one mapping of experiences from the full-space to each state in the subspace. Nevertheless, due to inherent perceptual aliasing in the subspaces, the policy suggested by each subspace does not generally converge to the optimal policy. Our approach, called Model Based Learning with Subspaces (MoBLeS), calculates confidence intervals of the estimated Q-values in the full-space and in the subspaces. These confidence intervals are used in the decision making, such that the agent benefits the most from the possible generalization while avoiding from detriment of the perceptual aliasing in the subspaces. Convergence of MoBLeS to the optimal policy is theoretically investigated. Additionally, we show through several experiments that MoBLeS improves the learning speed in the early trials.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge