Martin Servin

Safety filtering of robotic manipulation under environment uncertainty: a computational approach

Sep 16, 2025

Abstract:Robotic manipulation in dynamic and unstructured environments requires safety mechanisms that exploit what is known and what is uncertain about the world. Existing safety filters often assume full observability, limiting their applicability in real-world tasks. We propose a physics-based safety filtering scheme that leverages high-fidelity simulation to assess control policies under uncertainty in world parameters. The method combines dense rollout with nominal parameters and parallelizable sparse re-evaluation at critical state-transitions, quantified through generalized factors of safety for stable grasping and actuator limits, and targeted uncertainty reduction through probing actions. We demonstrate the approach in a simulated bimanual manipulation task with uncertain object mass and friction, showing that unsafe trajectories can be identified and filtered efficiently. Our results highlight physics-based sparse safety evaluation as a scalable strategy for safe robotic manipulation under uncertainty.
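The idea of sparse re-evaluation with a factor of safety can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the two-contact pinch-grasp formula, the function names `grasp_factor_of_safety` and `is_safe`, the parameter ranges, and the threshold of 1.0. A real implementation would re-run a physics simulation at each critical transition instead of evaluating a closed-form expression.

```python
import random

def grasp_factor_of_safety(mass, friction, grip_force, accel, g=9.81):
    """Hypothetical factor of safety: friction capacity over required holding force."""
    required = mass * (g + accel)            # force needed to hold the object
    capacity = 2.0 * friction * grip_force   # assumes two frictional contacts
    return capacity / required

def is_safe(critical_transitions, param_samples, threshold=1.0):
    """Re-evaluate only the critical transitions for each sampled (mass, friction)."""
    worst = min(
        grasp_factor_of_safety(m, mu, t["grip_force"], t["accel"])
        for t in critical_transitions
        for (m, mu) in param_samples
    )
    return worst >= threshold, worst

# Sample the uncertain world parameters (ranges are illustrative assumptions).
rng = random.Random(0)
samples = [(rng.uniform(0.8, 1.2), rng.uniform(0.3, 0.6)) for _ in range(100)]

# Critical transitions, assumed to come from a dense nominal rollout.
transitions = [{"grip_force": 40.0, "accel": 2.0},
               {"grip_force": 40.0, "accel": 5.0}]

safe, worst = is_safe(transitions, samples)
print(f"safe={safe}, worst factor of safety={worst:.2f}")
```

Each (transition, sample) pair is independent of the others, which is what makes this kind of re-evaluation straightforward to parallelize.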

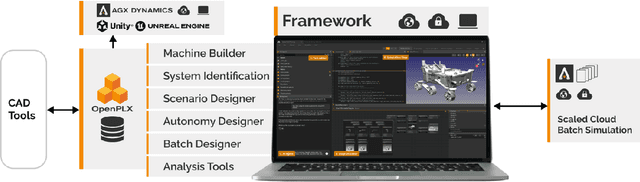

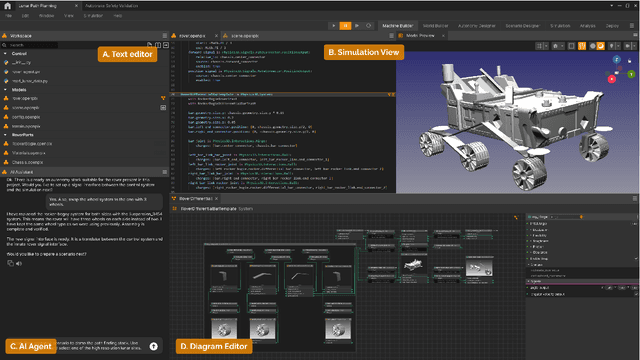

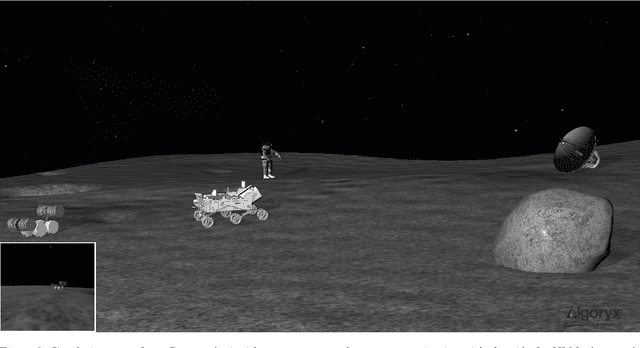

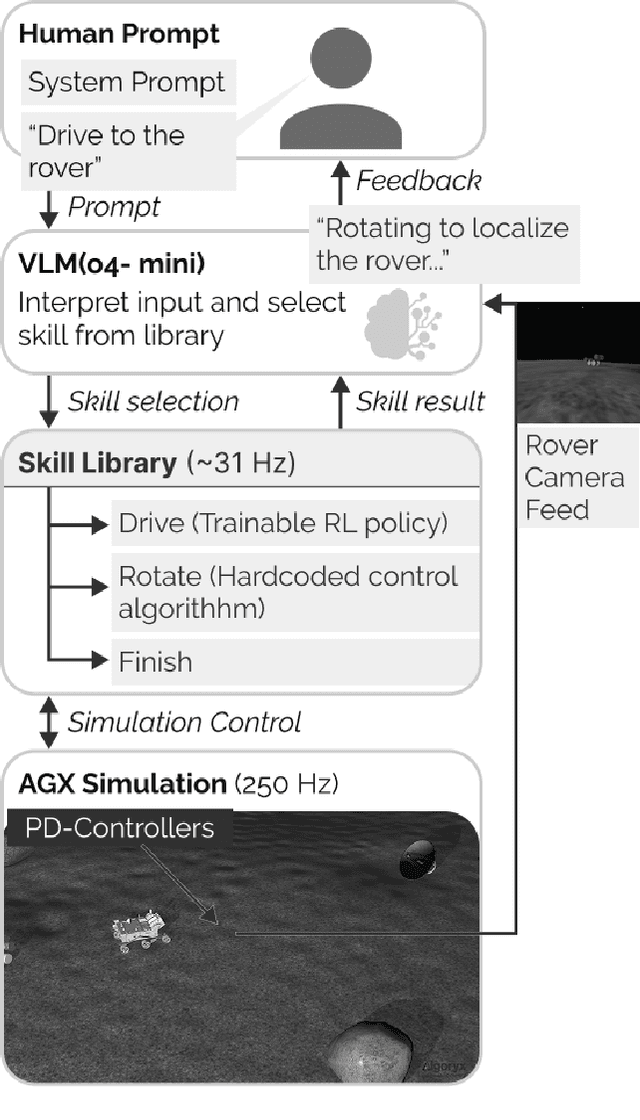

An integrated process for design and control of lunar robotics using AI and simulation

Sep 15, 2025

Abstract:We envision an integrated process for developing lunar construction equipment, where physical design and control are explored in parallel. In this paper, we describe a technical framework that supports this process. It relies on OpenPLX, a readable/writable declarative language that links CAD models and autonomous systems to high-fidelity, real-time 3D simulations of contacting multibody dynamics, machine-regolith interaction forces, and non-ideal sensors. To demonstrate its capabilities, we present two case studies, including an autonomous lunar rover that combines a vision-language model for navigation with a reinforcement learning-based control policy for locomotion.

Developing a Calibrated Physics-Based Digital Twin for Construction Vehicles

Aug 12, 2025

Abstract:This paper presents the development of a calibrated digital twin of a wheel loader. A calibrated digital twin integrates a construction vehicle with a high-fidelity digital model, allowing for automated diagnostics and optimization of operations as well as pre-planning simulations that enhance automation capabilities. The high-fidelity digital model is a virtual twin of the physical wheel loader. It uses a physics-based multibody dynamic model of the wheel loader in the software AGX Dynamics. Interactions of the wheel loader's bucket during construction work can be simulated in the virtual model. Calibration gives the simulation high fidelity, which can make planning for automation of construction operations more realistic. In this work, a wheel loader was instrumented with several sensors used to calibrate the digital model. The calibrated digital twin was able to estimate the magnitude of the forces on the bucket base with high accuracy, providing a high-fidelity simulation.

A simulation framework for autonomous lunar construction work

May 28, 2025

Abstract:We present a simulation framework for lunar construction work involving multiple autonomous machines. The framework supports modelling of construction scenarios and autonomy solutions, execution of the scenarios in simulation, and analysis of work time and energy consumption throughout the construction project. The simulations are based on physics-based models for contacting multibody dynamics and deformable terrain, including vehicle-soil interaction forces and soil flow in real time. A behaviour tree manages the operational logic and error handling, which enables the representation of complex behaviours through a discrete set of simpler tasks in a modular hierarchical structure. High-level decision-making is separated from lower-level control algorithms, with the two connected via ROS2. Excavation movements are controlled through inverse kinematics and tracking controllers. The framework is tested and demonstrated on two different lunar construction scenarios.
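How a behaviour tree composes simple tasks with error handling can be shown in a minimal sketch. This is a generic illustration and not the framework's implementation: the node classes, the task names (`drive`, `dig`, `recover`), and the `stuck` flag are all hypothetical, and the usual `RUNNING` status is left out for brevity.

```python
SUCCESS, FAILURE = "success", "failure"

class Action:
    """Leaf node wrapping a task function that returns True on success."""
    def __init__(self, fn):
        self.fn = fn
    def tick(self, state):
        return SUCCESS if self.fn(state) else FAILURE

class Sequence:
    """Succeeds only if every child succeeds, in order."""
    def __init__(self, *children):
        self.children = children
    def tick(self, state):
        for child in self.children:
            if child.tick(state) == FAILURE:
                return FAILURE
        return SUCCESS

class Fallback:
    """Tries children in order until one succeeds; used for error handling."""
    def __init__(self, *children):
        self.children = children
    def tick(self, state):
        for child in self.children:
            if child.tick(state) == SUCCESS:
                return SUCCESS
        return FAILURE

# Hypothetical tasks operating on a shared state dictionary.
def drive(state):
    state["log"].append("drive")
    return True

def dig(state):
    state["log"].append("dig")
    return not state["stuck"]

def recover(state):
    state["log"].append("recover")
    state["stuck"] = False
    return True

tree = Sequence(
    Action(drive),
    Fallback(Action(dig), Sequence(Action(recover), Action(dig))),
)

state = {"stuck": True, "log": []}
result = tree.tick(state)
print(result, state["log"])
```

The first digging attempt fails because the machine is stuck, so the fallback node runs the recovery branch and retries, without the top-level sequence needing to know about the error.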

Synthesizing multi-log grasp poses

Mar 18, 2024

Abstract:Multi-object grasping is a challenging task. It is important for energy- and cost-efficient operation of industrial crane manipulators, such as those used to collect tree logs off the forest floor and onto forest machines. In this work, we used synthetic data from physics simulations to explore how data-driven modeling can be used to infer multi-object grasp poses from images. We showed that convolutional neural networks can be trained specifically for synthesizing multi-object grasps. Using RGB-Depth images and instance segmentation masks as input, a U-Net model outputs grasp maps with corresponding grapple orientation and opening width. Given an observation of a pile of logs, the model can be used to synthesize and rate the possible grasp poses and select the most suitable one, with the possibility to respect changing operational constraints such as lift capacity and reach. When tested on previously unseen data, the proposed model found successful grasp poses with an accuracy of 95%.
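Selecting a rated grasp under changing operational constraints can be sketched as a filter followed by an argmax. The candidate fields (`score`, `load`, `reach`), their values, and the function `select_grasp` are hypothetical; in the paper the candidates come from the grasp maps predicted by the U-Net, which this sketch does not model.

```python
def select_grasp(candidates, max_load, max_reach):
    """Return the highest-rated candidate that respects the current constraints."""
    feasible = [
        c for c in candidates
        if c["load"] <= max_load and c["reach"] <= max_reach
    ]
    # default=None covers the case where no candidate is feasible.
    return max(feasible, key=lambda c: c["score"], default=None)

# Hypothetical grasp candidates (load in kg, reach in m).
candidates = [
    {"id": "a", "score": 0.95, "load": 900.0, "reach": 7.5},
    {"id": "b", "score": 0.80, "load": 500.0, "reach": 5.0},
    {"id": "c", "score": 0.60, "load": 300.0, "reach": 4.0},
]

best = select_grasp(candidates, max_load=600.0, max_reach=6.0)
print(best["id"] if best else "no feasible grasp")
```

Because the constraints are applied after rating, they can change between grasps without retraining the model that produces the ratings.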

Examining the simulation-to-reality gap of a wheel loader digging in deformable terrain

Oct 10, 2023

Abstract:We investigate how well a physics-based simulator can replicate a real wheel loader performing bucket filling in a pile of soil. The comparison is made using field test time series of the vehicle motion and actuation forces, loaded mass, and total work. The vehicle was modeled as a rigid multibody system with frictional contacts, driveline, and linear actuators. For the soil, we tested discrete element models of different resolutions, with and without multiscale acceleration. The spatial resolution ranged from 50 to 400 mm and the temporal resolution from 2 to 500 ms, and the computational speed ranged from 1/10,000 of real-time to 5 times faster than real-time. The simulation-to-reality gap was found to be around 10% and exhibited a weak dependence on the level of fidelity, e.g., compatible with real-time simulation. Furthermore, the sensitivity of an optimized force feedback controller under transfer between different simulation domains was investigated. The domain bias was observed to cause a performance reduction of 5% despite the domain gap being about 15%.

Predictor models for high-performance wheel loading

Sep 22, 2023

Abstract:Autonomous wheel loading involves selecting actions that maximize the total performance over many repetitions. The actions should be well adapted to the current state of the pile and its future states. Selecting the best actions is difficult since the pile states are consequences of previous actions and thus are highly uncertain. To aid the selection of actions, this paper investigates data-driven models to predict the loaded mass, time, work, and resulting pile state of a loading action given the initial pile state. Deep neural networks were trained on data from over 10,000 simulations to an accuracy of 91-97%, with the pile state represented either by a heightmap or by its slope and curvature. The net outcome of sequential loading actions is predicted by repeating the model inference at five milliseconds per loading. As errors accumulate during the inferences, long-horizon predictions need to be combined with a physics-based model.
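The effect of repeating a model inference, and why errors accumulate over a long horizon, can be seen in a toy sketch. The two predictors below are invented stand-ins: `reference` plays the role of the ground truth, `learned` is the same rule with a small assumed bias, and the pile is reduced to a single scalar amount. None of these numbers come from the paper.

```python
def rollout(predict, pile_amount, n_loadings):
    """Chain n_loadings predictions, feeding each predicted pile state forward."""
    total_loaded = 0.0
    for _ in range(n_loadings):
        loaded, pile_amount = predict(pile_amount)
        total_loaded += loaded
    return total_loaded, pile_amount

def reference(pile_amount):
    """Toy ground truth: each loading removes 10% of the pile (assumption)."""
    loaded = 0.100 * pile_amount
    return loaded, pile_amount - loaded

def learned(pile_amount):
    """Toy learned model with a small bias relative to the reference (assumption)."""
    loaded = 0.103 * pile_amount
    return loaded, pile_amount - loaded

def relative_state_error(n_loadings, initial_amount=100.0):
    """Relative error of the predicted pile state after n_loadings."""
    _, ref_state = rollout(reference, initial_amount, n_loadings)
    _, model_state = rollout(learned, initial_amount, n_loadings)
    return abs(model_state - ref_state) / ref_state

for n in (1, 5, 20):
    print(n, round(relative_state_error(n), 4))
```

At five milliseconds per loading, a sequence of 1,000 chained predictions takes about 5 seconds of inference, so long rollouts are cheap to compute; the sketch shows that their accuracy, not their cost, is the limiting factor.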

Multi-log grasping using reinforcement learning and virtual visual servoing

Sep 06, 2023

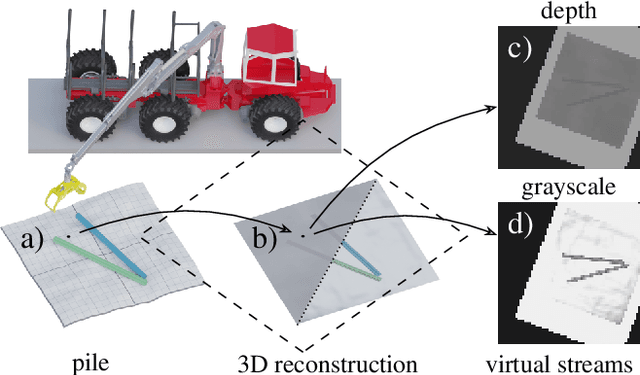

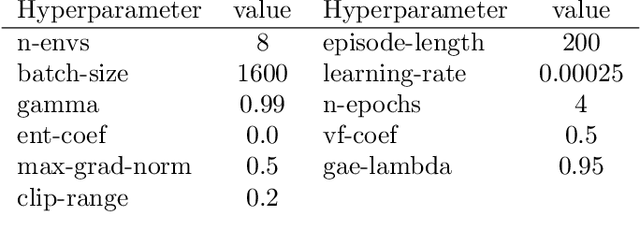

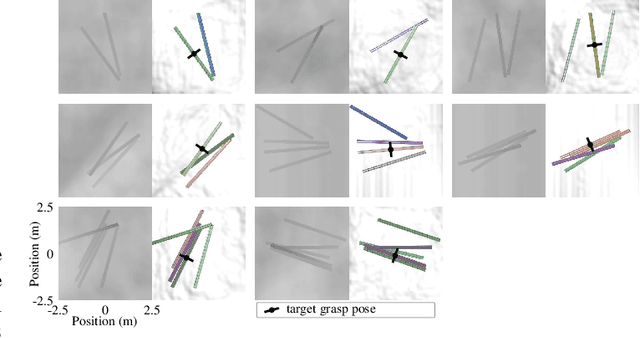

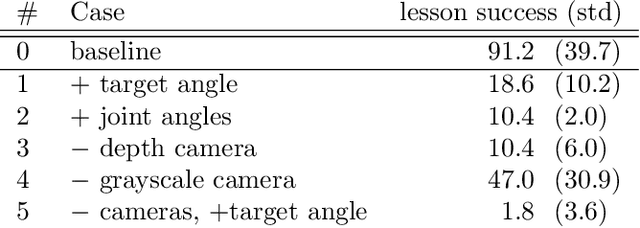

Abstract:We explore multi-log grasping using reinforcement learning and virtual visual servoing for automated forwarding. Automation of forest processes is a major challenge, and many techniques regarding robot control pose different challenges due to the unstructured and harsh outdoor environment. Grasping multiple logs involves problems of dynamics and path planning, where the interaction between the grapple, logs, terrain, and obstacles requires visual information. To address these challenges, we separate image segmentation from crane control and utilize a virtual camera to provide an image stream from 3D reconstructed data. We use Cartesian control to simplify domain transfer. Since log piles are static, visual servoing using a 3D reconstruction of the pile and its surroundings is equivalent to using real camera data until the point of grasping. This relaxes the limit on computational resources and time for the challenge of image segmentation, and allows for collecting data in situations where the log piles are not occluded. The disadvantage is the lack of information during grasping. We demonstrate that this problem is manageable and present an agent that is 95% successful in picking one or several logs from challenging piles of 2-5 logs.

Sim-to-real transfer of active suspension control using deep reinforcement learning

Jun 21, 2023

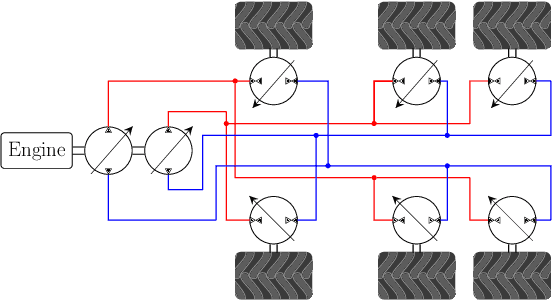

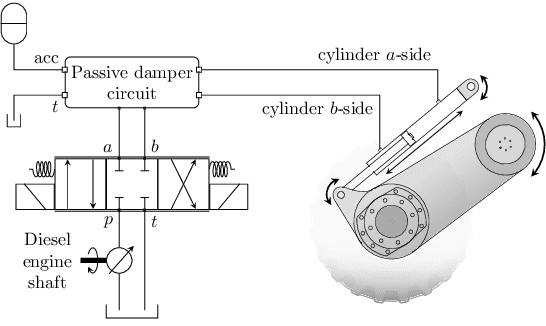

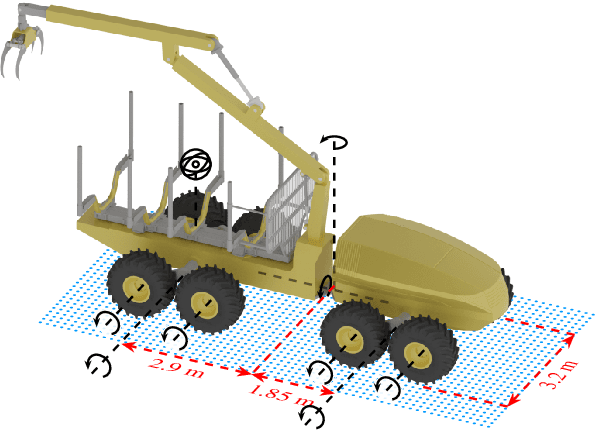

Abstract:We explore sim-to-real transfer of deep reinforcement learning controllers for a heavy vehicle with active suspensions designed for traversing rough terrain. While related research primarily focuses on lightweight robots with electric motors and fast actuation, this study uses a forestry vehicle with a complex hydraulic driveline and slow actuation. We simulate the vehicle using multibody dynamics and apply system identification to find an appropriate set of simulation parameters. We then train policies in simulation using various techniques to mitigate the sim-to-real gap, including domain randomization, action delays, and a reward penalty to encourage smooth control. In reality, the policies trained with action delays and a penalty for erratic actions perform at nearly the same level as in simulation. In experiments on level ground, the motion trajectories closely overlap when turning to either side, as well as in a route tracking scenario. When faced with a ramp that requires active use of the suspensions, the simulated and real motions are in close alignment. This shows that the actuator model together with system identification yields a sufficiently accurate model of the actuators. We observe that policies trained without the additional action penalty exhibit fast switching or bang-bang control. These present smooth motions and high performance in simulation but transfer poorly to reality. We find that policies make marginal use of the local height map for perception, showing no indications of look-ahead planning. However, the strong transfer capabilities entail that further development concerning perception and performance can be largely confined to simulation.
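Two of the techniques named above, action delays and a penalty for erratic actions, can be sketched in a few lines. This is a generic illustration and not the authors' training setup: the class `DelayedActions`, the function `smoothness_penalty`, the two-step delay, the quadratic penalty form, and the weight of 0.1 are all assumptions.

```python
from collections import deque

class DelayedActions:
    """Applies each commanded action a fixed number of steps after it is issued."""
    def __init__(self, delay_steps, initial_action=0.0):
        # Pre-fill the queue so the first outputs are the initial action.
        self.buffer = deque([initial_action] * delay_steps)

    def step(self, action):
        self.buffer.append(action)
        return self.buffer.popleft()

def smoothness_penalty(action, previous_action, weight=0.1):
    """Negative reward term that grows with the change between consecutive actions."""
    return -weight * (action - previous_action) ** 2

delay = DelayedActions(delay_steps=2)
commanded = [1.0, 2.0, 3.0]
applied = [delay.step(a) for a in commanded]
print(applied)

# Switching between extremes (bang-bang) is penalized more than a small adjustment.
print(smoothness_penalty(1.0, -1.0), smoothness_penalty(0.1, 0.0))
```

Training against the delayed actions exposes the policy to slow actuation, and the penalty term discourages the fast switching that the abstract reports as transferring poorly to the real vehicle.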

Learning multiobjective rough terrain traversability

Apr 13, 2022

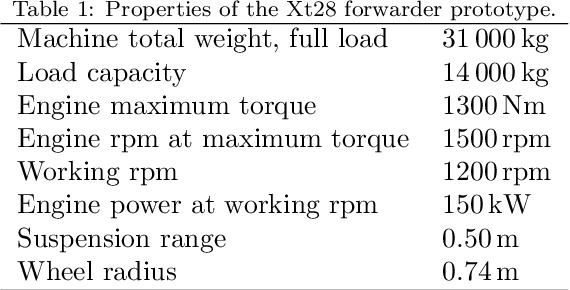

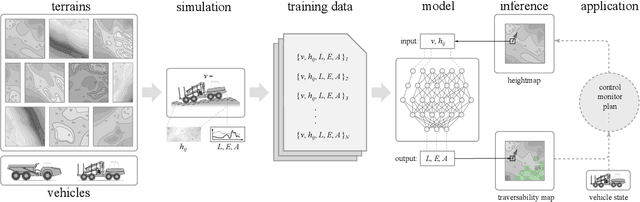

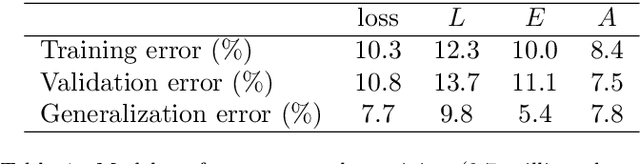

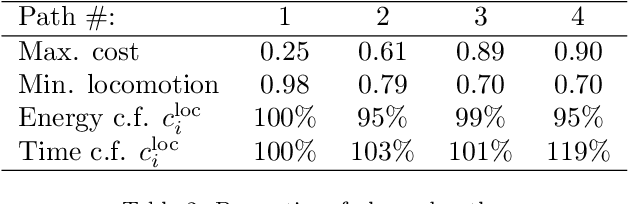

Abstract:We present a method that uses high-resolution topography data of rough terrain, and ground vehicle simulation, to predict traversability. Traversability is expressed as three independent measures: the ability to traverse the terrain at a target speed, energy consumption, and acceleration. The measures are continuous and reflect different objectives for planning that go beyond binary classification. A deep neural network is trained to predict the traversability measures from the local heightmap and target speed. To produce training data, we use an articulated vehicle with wheeled bogie suspensions and procedurally generated terrains. We evaluate the model on laser-scanned forest terrains, previously unseen by the model. The model predicts traversability with an accuracy of 90%. Predictions rely on features from the high-dimensional terrain data that surpass local roughness and slope relative to the heading. Correlations show that the three traversability measures are complementary to each other. With an inference speed 3000 times faster than the ground truth simulation and trivially parallelizable, the model is well suited for traversability analysis and optimal path planning over large areas.
