Daniel Lindmark

Safety filtering of robotic manipulation under environment uncertainty: a computational approach

Sep 16, 2025

Abstract:Robotic manipulation in dynamic and unstructured environments requires safety mechanisms that exploit what is known and what is uncertain about the world. Existing safety filters often assume full observability, limiting their applicability in real-world tasks. We propose a physics-based safety filtering scheme that leverages high-fidelity simulation to assess control policies under uncertainty in world parameters. The method combines dense rollout with nominal parameters and parallelizable sparse re-evaluation at critical state-transitions, quantified through generalized factors of safety for stable grasping and actuator limits, and targeted uncertainty reduction through probing actions. We demonstrate the approach in a simulated bimanual manipulation task with uncertain object mass and friction, showing that unsafe trajectories can be identified and filtered efficiently. Our results highlight physics-based sparse safety evaluation as a scalable strategy for safe robotic manipulation under uncertainty.
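As a rough illustration of the sparse re-evaluation idea in this abstract, the sketch below computes a simple friction-based factor of safety for a grasp at one critical state, repeats the calculation over a grid of uncertain mass and friction values, and rejects the action if the worst case falls below a threshold. The Coulomb grasp model, the function names, the parameter ranges, and the threshold of 1.5 are all assumptions made for illustration; the paper's simulation-based evaluation is not reproduced here.

```python
import itertools

G = 9.81  # gravitational acceleration, m/s^2


def grasp_factor_of_safety(mass, friction, normal_force, n_contacts=2):
    """Ratio of available friction force to the load the grasp must hold.

    Hypothetical model: n_contacts pads each press with normal_force [N]
    and hold a mass [kg] against gravity by Coulomb friction only.
    """
    capacity = n_contacts * friction * normal_force
    demand = mass * G
    return capacity / demand


def worst_case_factor(masses, frictions, normal_force):
    """Re-evaluate one critical state over a grid of uncertain parameters."""
    return min(
        grasp_factor_of_safety(m, mu, normal_force)
        for m, mu in itertools.product(masses, frictions)
    )


def is_safe(masses, frictions, normal_force, threshold=1.5):
    """Accept the action only if the worst-case factor clears the threshold."""
    return worst_case_factor(masses, frictions, normal_force) >= threshold


if __name__ == "__main__":
    masses = [0.8, 1.0, 1.2]     # assumed uncertainty range for mass, kg
    frictions = [0.3, 0.5, 0.7]  # assumed uncertainty range for friction
    for force in (20.0, 40.0):
        fos = worst_case_factor(masses, frictions, force)
        verdict = is_safe(masses, frictions, force)
        print(f"normal force {force:.0f} N: worst-case FoS {fos:.2f}, safe={verdict}")
```

Each parameter combination is evaluated independently, so the grid can be evaluated in parallel, which is the property the abstract points to when it calls the re-evaluation parallelizable.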

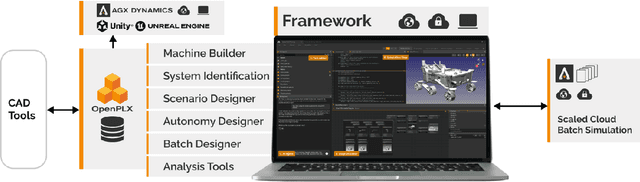

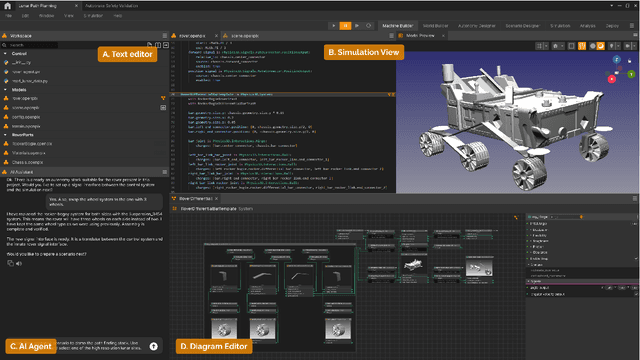

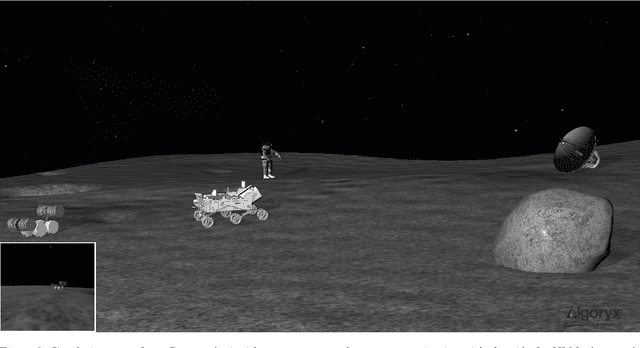

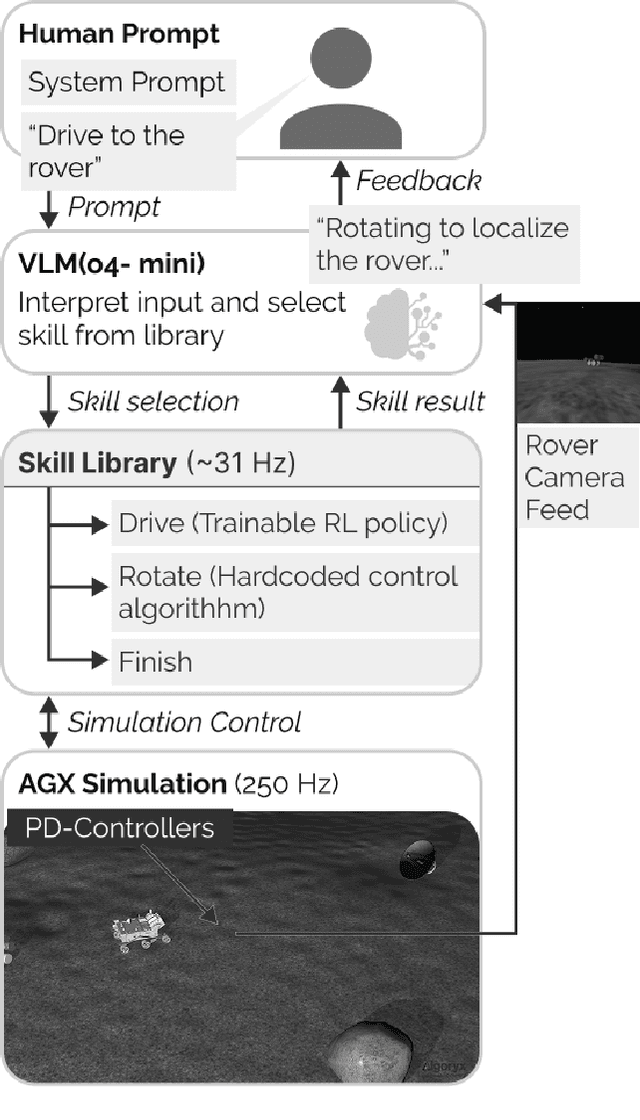

An integrated process for design and control of lunar robotics using AI and simulation

Sep 15, 2025

Abstract:We envision an integrated process for developing lunar construction equipment, where physical design and control are explored in parallel. In this paper, we describe a technical framework that supports this process. It relies on OpenPLX, a readable/writable declarative language that links CAD-models and autonomous systems to high-fidelity, real-time 3D simulations of contacting multibody dynamics, machine regolith interaction forces, and non-ideal sensors. To demonstrate its capabilities, we present two case studies, including an autonomous lunar rover that combines a vision-language model for navigation with a reinforcement learning-based control policy for locomotion.

Developing a Calibrated Physics-Based Digital Twin for Construction Vehicles

Aug 12, 2025

Abstract:This paper presents the development of a calibrated digital twin of a wheel loader. A calibrated digital twin integrates a construction vehicle with a high-fidelity digital model, allowing for automated diagnostics and optimization of operations, as well as pre-planning simulations that enhance automation capabilities. The high-fidelity digital model is a virtual twin of the physical wheel loader. It uses a physics-based multibody dynamic model of the wheel loader in the software AGX Dynamics. Interactions of the wheel loader's bucket during construction work can be simulated in the virtual model. Calibration raises the fidelity of this simulation, which supports realistic planning for the automation of construction operations. In this work, a wheel loader was instrumented with several sensors used to calibrate the digital model. The calibrated digital twin was able to estimate the magnitude of the forces on the bucket base with high accuracy, providing a high-fidelity simulation.

A simulation framework for autonomous lunar construction work

May 28, 2025

Abstract:We present a simulation framework for lunar construction work involving multiple autonomous machines. The framework supports modelling of construction scenarios and autonomy solutions, execution of the scenarios in simulation, and analysis of work time and energy consumption throughout the construction project. The simulations are based on physics-based models for contacting multibody dynamics and deformable terrain, including vehicle-soil interaction forces and soil flow in real time. A behaviour tree manages the operational logic and error handling, which enables the representation of complex behaviours through a discrete set of simpler tasks in a modular hierarchical structure. High-level decision-making is separated from lower-level control algorithms, with the two connected via ROS2. Excavation movements are controlled through inverse kinematics and tracking controllers. The framework is tested and demonstrated on two different lunar construction scenarios.
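To make the behaviour-tree idea in this abstract concrete, here is a minimal sketch of how sequence and fallback nodes can compose simple tasks and handle an error. The node classes, the task names (`drive_to_site`, `dig`, `reposition_and_retry`, `dump_load`), and the `build_excavation_tree` helper are hypothetical and are not taken from the framework itself.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Action:
    """Leaf node wrapping a callable that returns a Status."""

    def __init__(self, name, fn):
        self.name = name
        self.fn = fn

    def tick(self):
        return self.fn()


class Sequence:
    """Ticks children in order; stops at the first child that is not SUCCESS."""

    def __init__(self, children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order; stops at the first child that is not FAILURE."""

    def __init__(self, children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def build_excavation_tree(log, dig_succeeds):
    """Assemble a small tree from placeholder tasks that record their names in log."""

    def task(name, status):
        def run():
            log.append(name)
            return status
        return Action(name, run)

    dig = task("dig", Status.SUCCESS if dig_succeeds else Status.FAILURE)
    recover = task("reposition_and_retry", Status.SUCCESS)
    return Sequence([
        task("drive_to_site", Status.SUCCESS),
        Fallback([dig, recover]),  # error handling: run recovery only if digging fails
        task("dump_load", Status.SUCCESS),
    ])


if __name__ == "__main__":
    log = []
    result = build_excavation_tree(log, dig_succeeds=False).tick()
    print(result, log)
```

In a setup like the one described, each leaf would forward a goal to a lower-level controller instead of returning a fixed status, which keeps the decision logic separate from the control algorithms.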

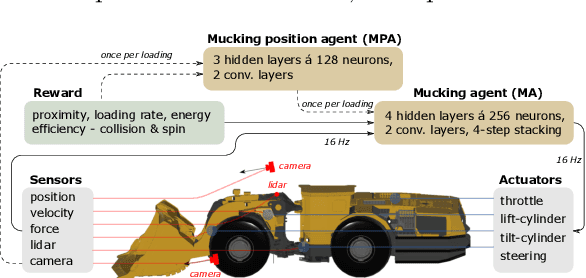

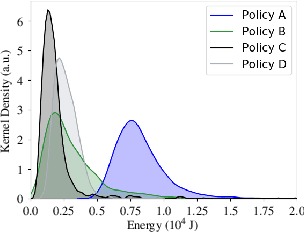

Continuous control of an underground loader using deep reinforcement learning

Mar 23, 2021

Abstract:Reinforcement learning control of an underground loader is investigated in a simulated environment, using a multi-agent deep neural network approach. At the start of each loading cycle, one agent selects the dig position from a depth camera image of the pile of fragmented rock. A second agent is responsible for continuous control of the vehicle, with the goal of filling the bucket at the selected loading point while avoiding collisions, getting stuck, or losing ground traction. It relies on motion and force sensors, as well as on camera and lidar. Using a soft actor-critic algorithm, the agents learn policies for efficient bucket filling over many subsequent loading cycles, with a clear ability to adapt to the changing environment. The best results, on average 75% of the max capacity, are obtained when including a penalty for energy usage in the reward.

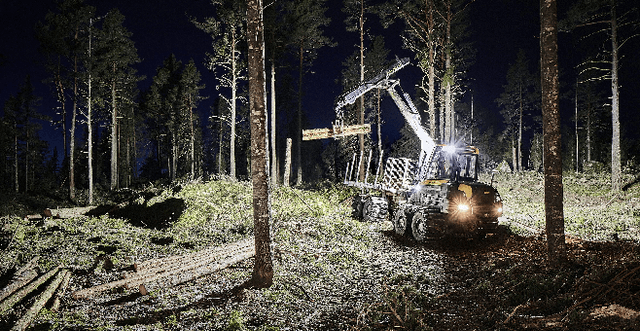

Reinforcement Learning Control of a Forestry Crane Manipulator

Mar 03, 2021

Abstract:Forestry machines are heavy vehicles performing complex manipulation tasks in unstructured production forest environments. Together with the complex dynamics of the on-board hydraulically actuated cranes, the rough forest terrains have posed a particular challenge in forestry automation. In this study, the feasibility of applying reinforcement learning control to forestry crane manipulators is investigated in a simulated environment. Our results show that it is possible to learn successful actuator-space control policies for energy-efficient log grasping by invoking a simple curriculum in a deep reinforcement learning setup. Given the pose of the selected logs, our best control policy reaches a grasping success rate of 97%. When an energy-optimization goal is included in the reward function, the energy consumption is significantly reduced compared to control policies learned without an incentive for energy optimization, while the increase in cycle time is marginal. The energy-optimization effects can be observed in the overall smoother motion and acceleration profiles during crane manipulation.
