Martin Giese

Generating Realistic Arm Movements in Reinforcement Learning: A Quantitative Comparison of Reward Terms and Task Requirements

Feb 21, 2024

Abstract: Mimicking human-like arm movement characteristics requires considering three factors during control policy synthesis: (a) the chosen task requirements, (b) the inclusion of noise during movement execution, and (c) the chosen optimality principles. Previous studies showed that, when these factors (a-c) are considered individually, it is possible to synthesize arm movements that either kinematically match experimental data or reproduce the stereotypical triphasic muscle activation pattern. However, to date no quantitative comparison has been made of how realistic the arm movements generated by each factor are, or of whether a partial or total combination of all factors results in arm movements with both human-like kinematic characteristics and a triphasic muscle pattern. To investigate this, we used reinforcement learning to learn a control policy for a musculoskeletal arm model, aiming to discern which combination of factors (a-c) results in realistic arm movements according to four frequently reported stereotypical characteristics. Our findings indicate that incorporating velocity and acceleration requirements into the reaching task, employing reward terms that encourage the minimization of mechanical work, hand jerk, and control effort, and including noise during movement execution lead to the emergence of realistic human arm movements in reinforcement learning. We expect that these insights will help to better predict desired arm movements and corrective forces in wearable assistive devices.
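The three penalty-style reward terms named in this abstract (mechanical work, hand jerk, control effort) can be illustrated with a small sketch. This is not the authors' reward function: the discretization (absolute mechanical work as |torque times joint velocity| per joint, jerk from a third-order finite difference of the hand position, effort as squared muscle excitations), the function names, and the default weights are all hypothetical choices made for illustration. The task-related terms (reaching accuracy, velocity and acceleration requirements) are omitted.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # planar hand position (x, y); a hypothetical simplification


def mechanical_work(torques: Sequence[Sequence[float]],
                    joint_velocities: Sequence[Sequence[float]],
                    dt: float) -> float:
    """Absolute mechanical work: sum over steps and joints of |tau * omega| * dt."""
    return sum(
        abs(tau * omega) * dt
        for step_tau, step_omega in zip(torques, joint_velocities)
        for tau, omega in zip(step_tau, step_omega)
    )


def squared_hand_jerk(hand_xy: Sequence[Point], dt: float) -> float:
    """Integrated squared hand jerk via a third-order forward finite difference."""
    total = 0.0
    for i in range(len(hand_xy) - 3):
        for d in range(2):  # x and y components
            jerk = (hand_xy[i + 3][d] - 3 * hand_xy[i + 2][d]
                    + 3 * hand_xy[i + 1][d] - hand_xy[i][d]) / dt ** 3
            total += jerk * jerk * dt
    return total


def control_effort(excitations: Sequence[Sequence[float]], dt: float) -> float:
    """Integrated sum of squared muscle excitations."""
    return sum(u * u * dt for step in excitations for u in step)


def movement_reward(hand_xy, torques, joint_velocities, excitations, dt,
                    w_work=1.0, w_jerk=1e-6, w_effort=1.0) -> float:
    # Hypothetical weights (not from the paper). Costs are negated so that
    # less work, smoother hand paths, and less effort yield a higher reward.
    return -(w_work * mechanical_work(torques, joint_velocities, dt)
             + w_jerk * squared_hand_jerk(hand_xy, dt)
             + w_effort * control_effort(excitations, dt))
```

The weights matter in practice because finite-difference jerk values can be orders of magnitude larger than the other terms; the small default `w_jerk` reflects that concern but is an assumption, not a tuned value.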

Multi-Domain Norm-referenced Encoding Enables Data Efficient Transfer Learning of Facial Expression Recognition

Apr 05, 2023

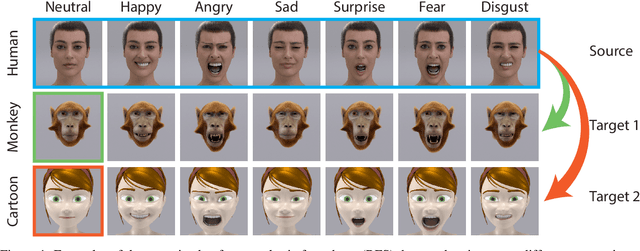

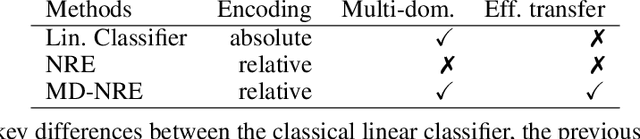

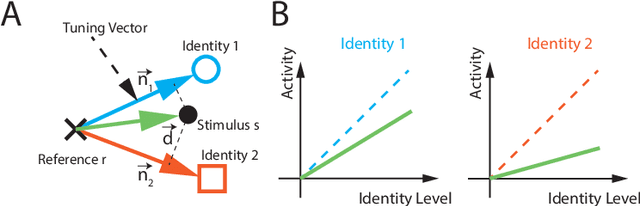

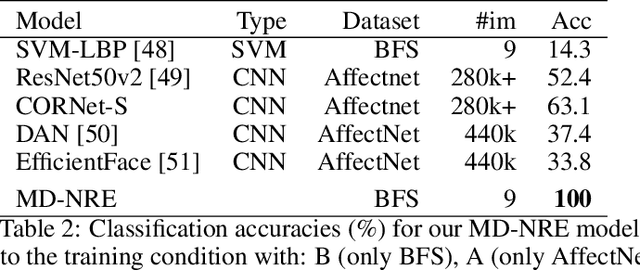

Abstract: People can innately recognize human facial expressions in unnatural forms, such as when they are depicted on the unusual faces drawn in cartoons or applied to an animal's features. However, current machine learning algorithms struggle with out-of-domain transfer in facial expression recognition (FER). We propose a biologically inspired mechanism for such transfer learning based on norm-referenced encoding, in which patterns are encoded as difference vectors relative to a domain-specific reference vector. By incorporating domain-specific reference frames, we demonstrate high data efficiency in transfer learning across multiple domains. Our proposed architecture offers an explanation for how the human brain might innately recognize facial expressions on varying head shapes (humans, monkeys, and cartoon avatars) without extensive training. Norm-referenced encoding also allows the intensity of an expression to be read out directly from neural unit activity, similar to face-selective neurons in the brain. Our model achieves a classification accuracy of 92.15% on the FERG dataset with extreme data efficiency: we train the proposed mechanism with only 12 images, including a single image of each class (facial expression) and one image per domain (avatar). In comparison, the authors of the FERG dataset achieved a classification accuracy of 89.02% with their FaceExpr model, which was trained on 43,000 images.
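The core idea of norm-referenced encoding (encode a pattern as its difference vector from a domain-specific reference, classify by direction, read intensity from the length) can be shown with a toy sketch. It is not the authors' architecture: it assumes feature vectors have already been extracted, uses one stored unit direction per expression, and all class and method names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def _sub(a: Vector, b: Vector) -> Vector:
    return [x - y for x, y in zip(a, b)]


def _norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


class NormReferencedEncoder:
    """Hypothetical toy norm-referenced classifier over precomputed feature vectors."""

    def __init__(self) -> None:
        self.references: Dict[str, Vector] = {}  # domain -> reference (e.g. neutral face)
        self.directions: Dict[str, Vector] = {}  # expression -> unit difference vector

    def set_reference(self, domain: str, features: Vector) -> None:
        self.references[domain] = list(features)

    def add_expression(self, label: str, domain: str, features: Vector) -> None:
        # One example per class: store the normalized offset from that domain's reference.
        diff = _sub(features, self.references[domain])
        length = _norm(diff)
        if length == 0:
            raise ValueError("expression example equals the domain reference")
        self.directions[label] = [x / length for x in diff]

    def encode(self, domain: str, features: Vector) -> Tuple[str, float]:
        diff = _sub(features, self.references[domain])
        intensity = _norm(diff)  # distance from the reference serves as expression intensity
        if intensity == 0:
            return "neutral", 0.0
        # The argmax of the dot product with unit directions equals the argmax of
        # cosine similarity, because the length of diff is the same for every class.
        label = max(self.directions,
                    key=lambda k: sum(a * b for a, b in zip(diff, self.directions[k])))
        return label, intensity
```

In this toy setting, transfer to a new domain needs only that domain's reference vector: after `set_reference("human", [0.0, 0.0, 0.0])`, `add_expression("happy", "human", [1.0, 0.0, 0.0])`, `add_expression("sad", "human", [0.0, 1.0, 0.0])`, and `set_reference("cartoon", [5.0, 5.0, 5.0])`, calling `encode("cartoon", [5.0, 7.0, 5.0])` returns `("sad", 2.0)`.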

GeoFault: A well-founded fault ontology for interoperability in geological modeling

Feb 14, 2023

Abstract: Geological modeling currently relies on various computer-based applications. Data harmonization at the semantic level by means of ontologies is essential for making these applications interoperable. Since geo-modeling is currently part of multidisciplinary projects, semantic harmonization is required not only to model geological knowledge but also to integrate knowledge from other domains at a general level. For this reason, the domain ontologies used to describe geological knowledge must rest on a sound ontological foundation to ensure that the described geological knowledge can be integrated. This paper presents a domain ontology, GeoFault, resting on the Basic Formal Ontology BFO (Arp et al., 2015) and the GeoCore ontology (Garcia et al., 2020). It models the knowledge related to geological faults. Faults are essential to various industries but are complex to model. They can be described as thin deformed rock volumes or as spatial arrangements resulting from the different displacements of geological blocks. At a broader scale, faults are currently described as mere surfaces, which are the components of complex fault arrays. Grounding the ontology in BFO and GeoCore allows these various fault elements to be assigned to defined ontology classes and logically linked within a consistent ontology framework. The GeoFault ontology covers the core knowledge of faults 'stricto sensu,' excluding ductile shear deformations. The vocabulary considered is essentially descriptive and relates to regional to outcrop scales, excluding microscopic, orogenic, and tectonic plate structures. The ontology is modeled in OWL 2, validated by competency questions with two use cases, and tested using an in-house ontology-driven data entry application. GeoFault provides a solid framework for disambiguating fault knowledge and a foundation for fault data integration for applications and users.

Generating Sparse Counterfactual Explanations For Multivariate Time Series

Jun 02, 2022

Abstract: As neural networks play an increasingly important role in critical sectors, explaining their predictions has become a key research topic. Counterfactual explanations can help to understand why classifier models arrive at particular class assignments and, moreover, how the respective input samples would have to be modified for the class prediction to change. Previous approaches mainly focus on image and tabular data. In this work, we propose SPARCE, a generative adversarial network (GAN) architecture that generates SPARse Counterfactual Explanations for multivariate time series. Our approach provides a custom sparsity layer and regularizes the counterfactual loss function in terms of similarity, sparsity, and smoothness of trajectories. We evaluate our approach on real-world human motion datasets as well as a synthetic time series interpretability benchmark. Although we make significantly sparser modifications than other approaches, we achieve comparable or better performance on all metrics. Moreover, we demonstrate that our approach predominantly modifies salient time steps and features, leaving non-salient inputs untouched.
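The three regularization criteria named in this abstract (similarity, sparsity, smoothness of trajectories) can be made concrete with a small sketch that scores a perturbation `delta`, where the counterfactual is the original series plus `delta`. This is not the SPARCE loss: the specific formulas (L1 size for similarity, a count of modified entries for sparsity, squared step-to-step differences for smoothness), the threshold `eps`, and the weights are hypothetical, and the classification and adversarial terms of the GAN objective are omitted.

```python
from typing import Dict, List

Series = List[List[float]]  # time steps x features


def counterfactual_regularizer(delta: Series, w_sim: float = 1.0, w_sparse: float = 1.0,
                               w_smooth: float = 1.0, eps: float = 1e-6) -> Dict[str, float]:
    """Hypothetical penalty terms for a perturbation, where counterfactual = original + delta."""
    # Similarity: L1 size of the change, keeping the counterfactual close to the input.
    similarity = sum(abs(v) for step in delta for v in step)
    # Sparsity: number of (time step, feature) entries that were actually modified.
    sparsity = float(sum(1 for step in delta for v in step if abs(v) > eps))
    # Smoothness: squared change of delta between consecutive time steps, per feature.
    smoothness = sum(
        (nxt - cur) ** 2
        for prev_step, next_step in zip(delta, delta[1:])
        for cur, nxt in zip(prev_step, next_step)
    )
    total = w_sim * similarity + w_sparse * sparsity + w_smooth * smoothness
    return {"similarity": similarity, "sparsity": sparsity,
            "smoothness": smoothness, "total": total}
```

The count-based sparsity term is not differentiable, so gradient-based training would need a surrogate or a dedicated mechanism; the abstract mentions a custom sparsity layer for this purpose, whose details are not reproduced here.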
