Marsel Faizullin

Best Axes Composition Extended: Multiple Gyroscopes and Accelerometers Data Fusion to Reduce Systematic Error

Sep 19, 2022

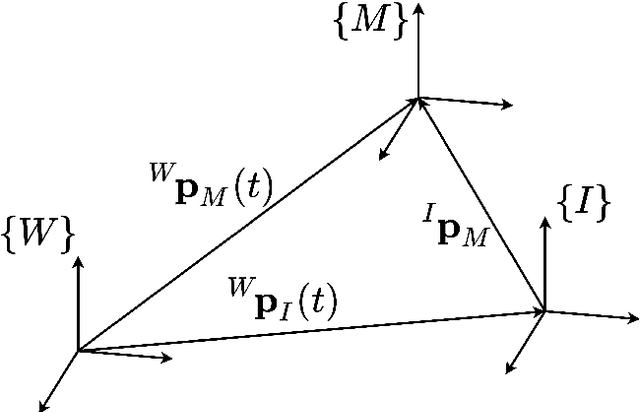

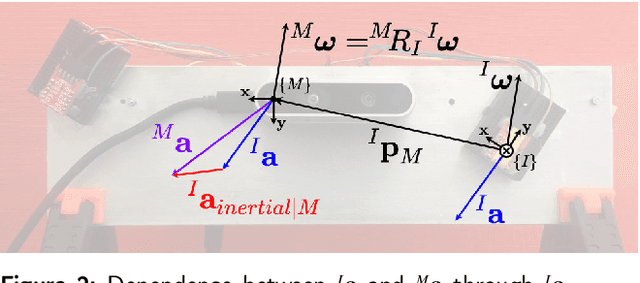

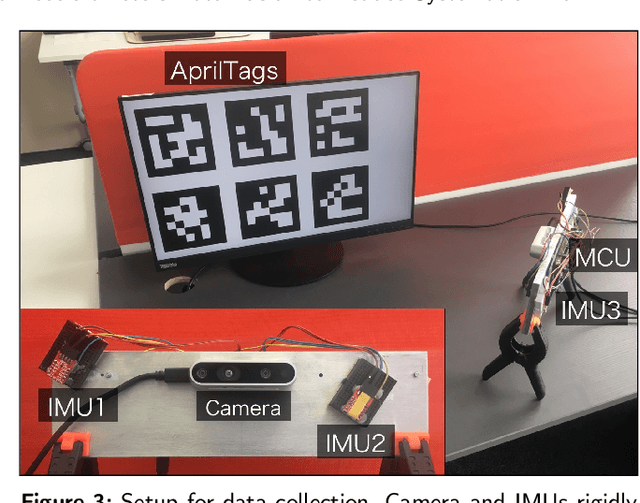

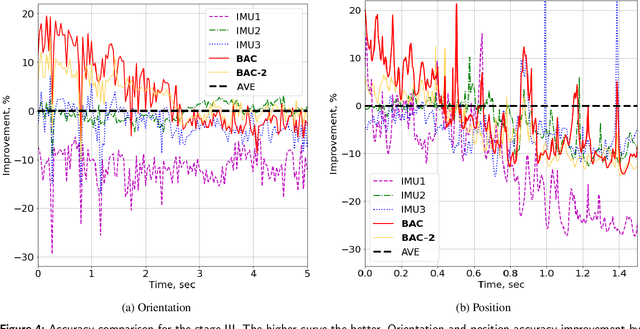

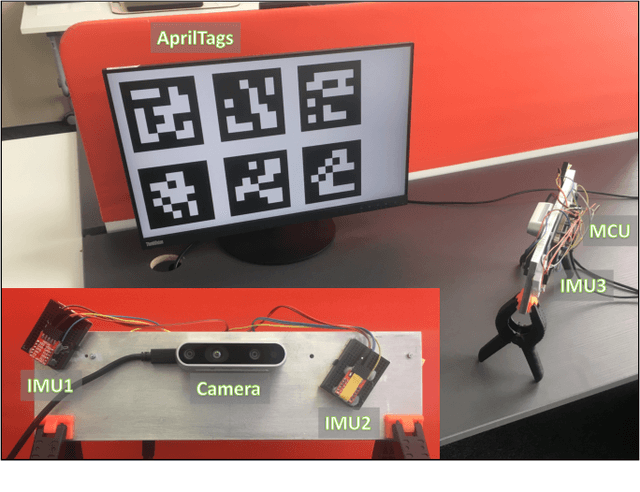

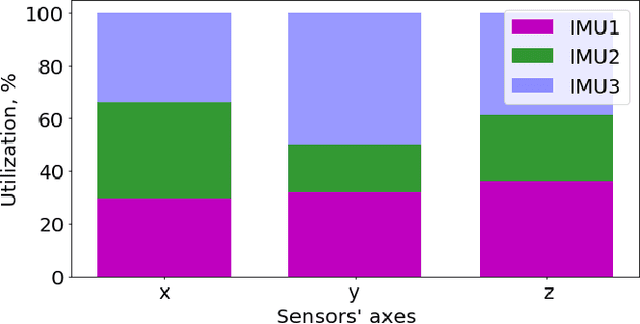

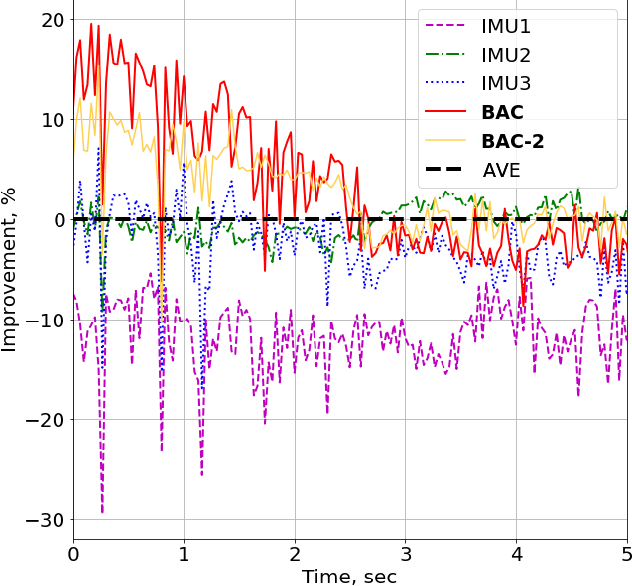

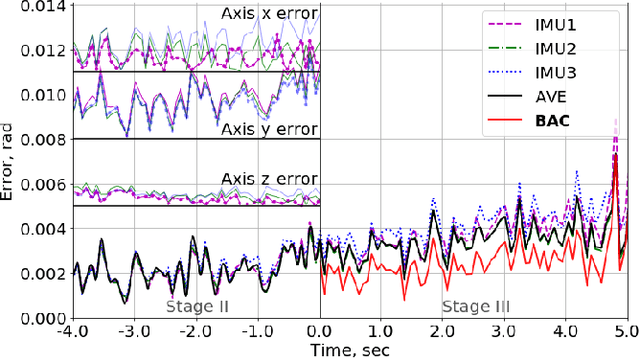

Abstract: Multiple rigidly attached Inertial Measurement Unit (IMU) sensors provide a richer flow of data than a single IMU. State-of-the-art methods follow a probabilistic model of IMU measurements based on the random nature of errors, combined under a Bayesian framework. However, affordable low-grade IMUs additionally suffer from systematic errors caused by imperfections that their probabilistic model does not cover. In this paper, we propose the Best Axes Composition (BAC), a method of combining Multiple IMU (MIMU) sensor data for accurate 3D-pose estimation that takes into account both random and systematic errors by dynamically choosing the best IMU axes from the set of all available axes. We evaluate our approach on our MIMU visual-inertial sensor and compare its performance with a purely probabilistic state-of-the-art approach to MIMU data fusion. We show that BAC outperforms the latter and achieves up to 20% accuracy improvement for both orientation and position estimation in open loop, but needs proper treatment to keep the obtained gain.

SmartPortraits: Depth Powered Handheld Smartphone Dataset of Human Portraits for State Estimation, Reconstruction and Synthesis

Apr 21, 2022

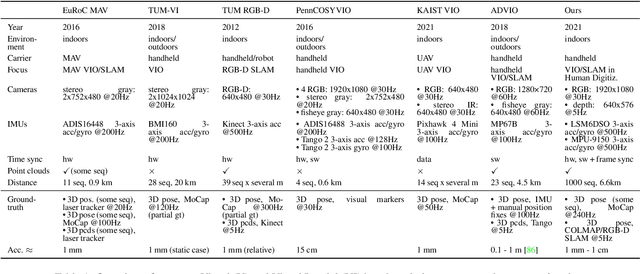

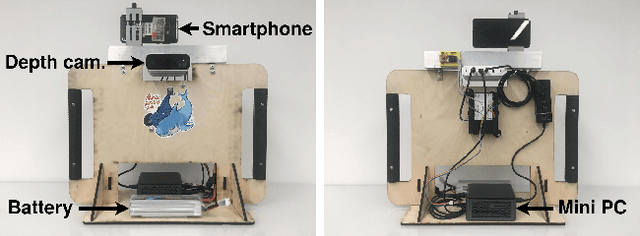

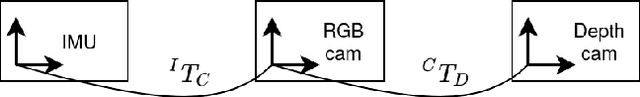

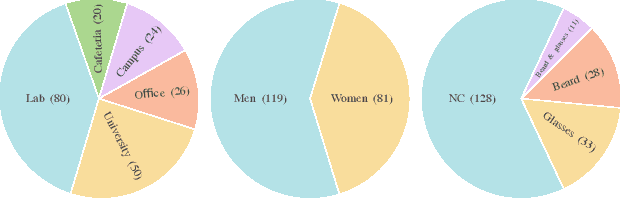

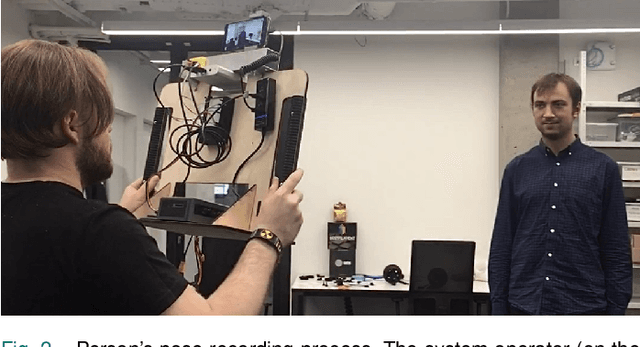

Abstract: We present a dataset of 1000 video sequences of human portraits recorded in real and uncontrolled conditions using a handheld smartphone accompanied by an external high-quality depth camera. The collected dataset contains 200 people captured in different poses and locations, and its main purpose is to bridge the gap between raw measurements obtained from a smartphone and downstream applications, such as state estimation, 3D reconstruction, view synthesis, etc. The sensors employed in data collection are the smartphone's camera and Inertial Measurement Unit (IMU), and an external Azure Kinect DK depth camera software-synchronized with sub-millisecond precision to the smartphone system. During the recording, the smartphone flash is used to provide a periodic secondary source of lighting. We provide an accurate mask of the foremost person and report its impact on camera alignment accuracy. For evaluation purposes, we compare multiple state-of-the-art camera alignment methods by using a Motion Capture system. We provide a smartphone visual-inertial benchmark for portrait capturing, where we report results for multiple methods and motivate further use of the trajectories available in the dataset in view synthesis and 3D reconstruction tasks.

Synchronized Smartphone Video Recording System of Depth and RGB Image Frames with Sub-millisecond Precision

Nov 05, 2021

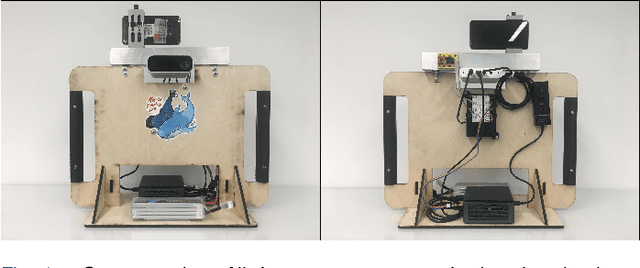

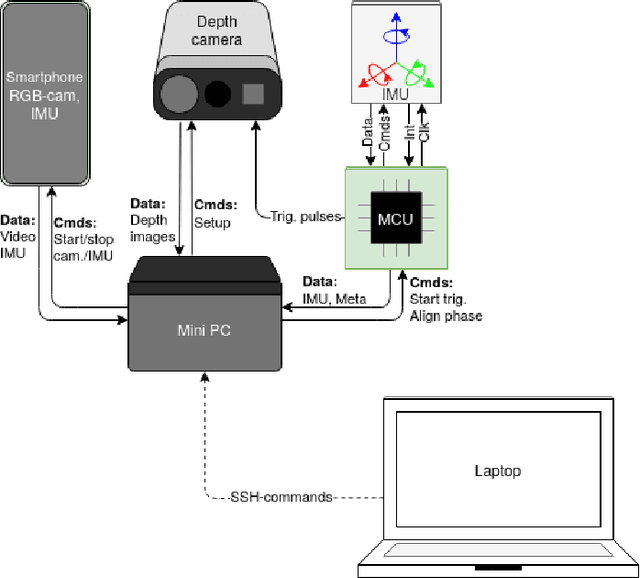

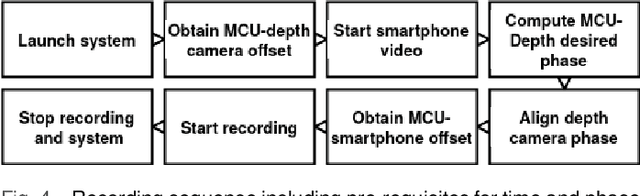

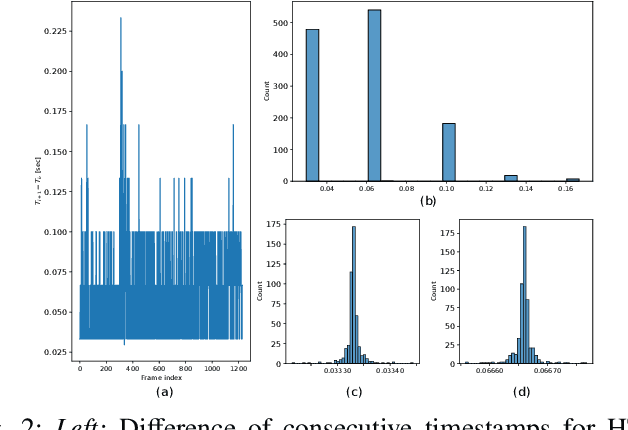

Abstract: In this paper, we propose a recording system with high time synchronization (sync) precision, which consists of heterogeneous sensors such as a smartphone, a depth camera, an IMU, etc. Due to the general interest in and mass adoption of smartphones, we include at least one such device in our system. This heterogeneous system requires a hybrid synchronization for the two different time authorities, smartphone and MCU, where we combine a hardware wire-based trigger sync with software sync. We evaluate our sync results on a custom and novel system mixing an active infrared depth camera with an RGB camera. Our system achieves sub-millisecond precision of time sync. Moreover, our system exposes every RGB-depth image pair at the same time with this precision. We showcase one particular configuration, but the general principles behind our system could be replicated by other projects.

Best Axes Composition: Multiple Gyroscopes IMU Sensor Fusion to Reduce Systematic Error

Jul 22, 2021

Abstract: In this paper, we propose an algorithm to combine multiple cheap Inertial Measurement Unit (IMU) sensors to calculate 3D-orientations accurately. Our approach takes into account the inherent and non-negligible systematic error in the gyroscope model and provides a solution based on the error observed during previous instants of time. Our algorithm, the Best Axes Composition (BAC), dynamically chooses the best-fitting axes among the IMUs to improve the estimation performance. We compare our approach with a probabilistic Multiple IMU (MIMU) approach, and we validate our algorithm on our collected dataset. As a result, it takes as few as 2 IMUs to significantly improve accuracy, while other MIMU approaches need a higher number of sensors to achieve the same results.

Open-Source LiDAR Time Synchronization System by Mimicking GPS-clock

Jul 06, 2021

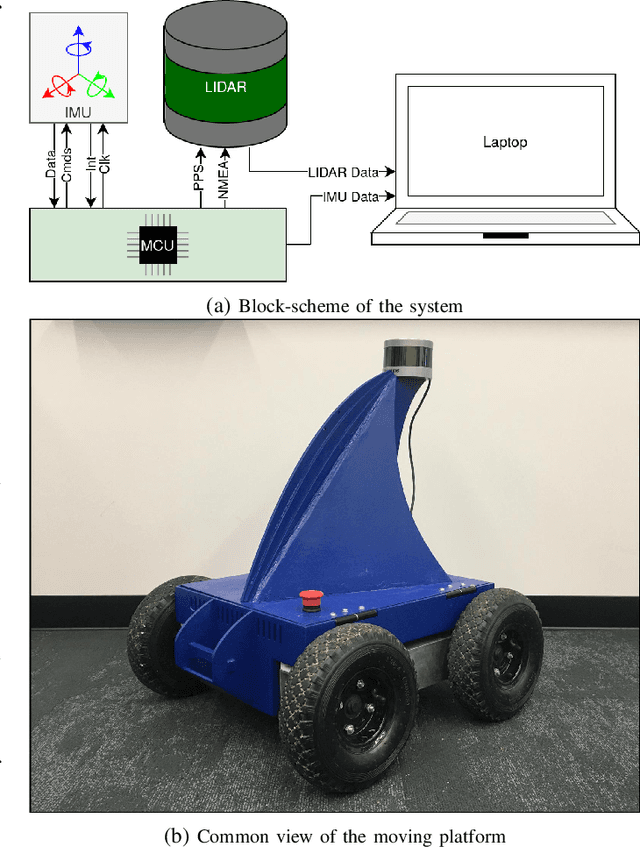

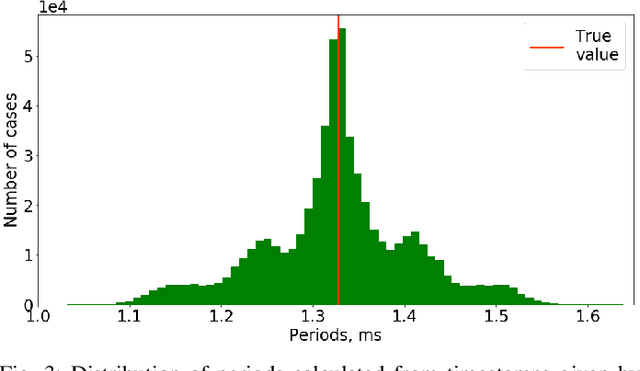

Abstract: Time synchronization of multiple sensors is one of the main issues when building sensor networks. Data fusion algorithms and their applications, such as LiDAR-IMU Odometry (LIO), rely on precise timestamping. We introduce an open-source LiDAR to inertial measurement unit (IMU) hardware time synchronization system, which could be generalized to multiple sensors such as cameras, encoders, other LiDARs, etc. The system mimics a GPS-supplied clock interface using a microcontroller-powered platform and provides 1 microsecond synchronization precision. In addition, we evaluate the system precision compared to other synchronization methods, including timestamping provided by ROS software and the LiDAR inner clock, showing clear advantages over both baseline methods.

Sub-millisecond Video Synchronization of Multiple Android Smartphones

Jul 02, 2021

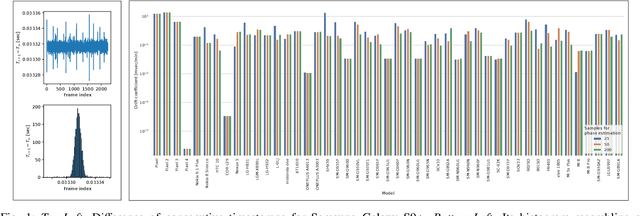

Abstract: This paper addresses the problem of building an affordable, easy-to-setup synchronized multi-view camera system, which is in demand for many Computer Vision and Robotics applications in high-dynamic environments. In our work, we propose a solution to this problem: a publicly available Android application for synchronized video recording on multiple smartphones with sub-millisecond accuracy. We present a generalized mathematical model of timestamping for Android smartphones and prove its applicability on 47 different physical devices. We also estimate the time drift parameter for those smartphones, which is less than 1.2 milliseconds per minute for most of the considered devices, which makes a smartphone camera system a worthy analog of professional multi-view systems. Finally, we demonstrate the Android app's performance on a camera system built from Android smartphones, both quantitatively, showing less than 300 microseconds of synchronization error, and qualitatively, on a panorama stitching task.
