Best Axes Composition Extended: Multiple Gyroscopes and Accelerometers Data Fusion to Reduce Systematic Error


Sep 19, 2022

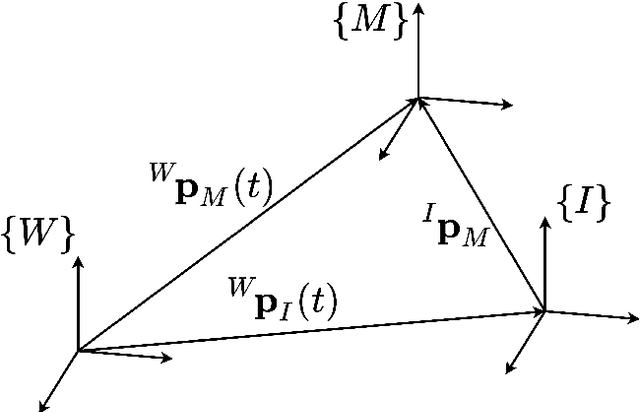

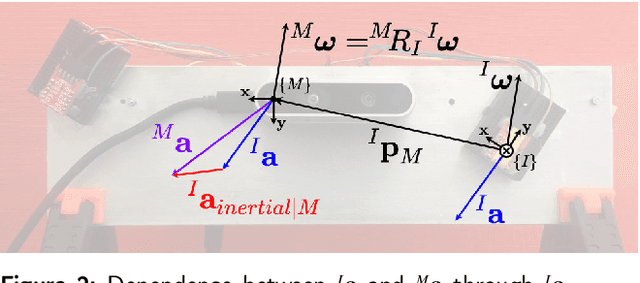

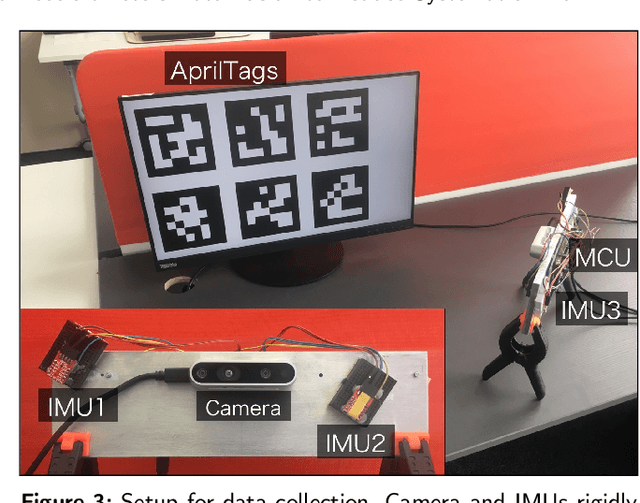

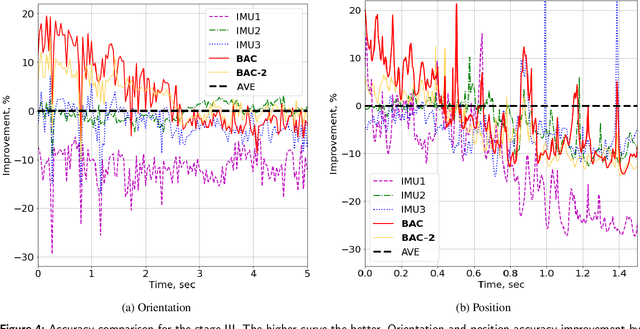

Multiple rigidly attached Inertial Measurement Unit (IMU) sensors provide a richer stream of data than a single IMU. State-of-the-art methods model IMU measurements probabilistically, treating the errors as random and combining the measurements under a Bayesian framework. However, affordable low-grade IMUs also suffer from systematic errors caused by sensor imperfections that the corresponding probabilistic model does not cover. In this paper, we propose Best Axes Composition (BAC), a method for combining data from Multiple IMU (MIMU) sensors for accurate 3D-pose estimation. BAC takes both random and systematic errors into account by dynamically choosing the best IMU axes from the set of all available axes. We evaluate our approach on our MIMU visual-inertial sensor and compare it with a purely probabilistic state-of-the-art approach to MIMU data fusion. We show that BAC outperforms the latter, achieving up to 20% accuracy improvement for both orientation and position estimation in open loop, but it needs proper treatment to keep the obtained gain.
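To make the distinction between random and systematic error concrete, here is a toy sketch in Python. It is not the BAC algorithm from the paper: the abstract does not state the selection criterion, so the scoring rule (mean absolute error against a known reference, such as a stationary interval), the function names, and all the numbers are illustrative assumptions. It only shows why averaging every redundant axis cannot remove a bias on one of them, while leaving that axis out can.

```python
import random
import statistics


def simulate_axis(true_rate, bias, noise_std, n, rng):
    """Simulate n readings of one sensor axis: truth + constant bias + Gaussian noise."""
    return [true_rate + bias + rng.gauss(0.0, noise_std) for _ in range(n)]


def fuse_mean(axes):
    """Fuse redundant axes by taking the per-sample mean across them."""
    return [statistics.fmean(list(sample)) for sample in zip(*axes)]


def pick_best_axes(axes, reference, keep):
    """Hypothetical selection rule (an assumption, not the paper's criterion):
    score each axis by mean absolute error against a reference signal
    and keep the `keep` lowest-scoring axes."""
    scores = [
        statistics.fmean([abs(x - r) for x, r in zip(axis, reference)])
        for axis in axes
    ]
    ranked = sorted(range(len(axes)), key=lambda i: scores[i])
    return sorted(ranked[:keep])


def demo(seed=0, n=2000):
    """Compare fusing all axes with fusing only the selected axes."""
    rng = random.Random(seed)
    true_rate = 0.0                # assumed stationary sensor
    biases = [0.0, 0.01, 0.5]      # third axis carries a large systematic error
    axes = [simulate_axis(true_rate, b, 0.05, n, rng) for b in biases]
    reference = [true_rate] * n

    chosen = pick_best_axes(axes, reference, keep=2)
    err_all = abs(statistics.fmean(fuse_mean(axes)) - true_rate)
    err_sel = abs(statistics.fmean(fuse_mean([axes[i] for i in chosen])) - true_rate)
    return chosen, err_all, err_sel


if __name__ == "__main__":
    chosen, err_all, err_sel = demo()
    print("axes kept:", chosen)
    print("error, all axes averaged:", err_all)
    print("error, selected axes only:", err_sel)
```

In this toy setup the averaged estimate inherits a third of the biased axis's offset no matter how many samples are collected, whereas the random noise shrinks with the number of samples. A real system has no ground-truth reference during motion, so the selection would have to rely on some other quality measure.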
