Marko Car

Vision-based system for a real-time detection and following of UAV

Apr 29, 2022

Abstract: In this paper, a vision-based system for detection, motion tracking and following of an Unmanned Aerial Vehicle (UAV) by another UAV (the follower) is presented. For detection of an airborne UAV we apply a YOLO convolutional neural network trained on a collected and processed dataset of 10,000 images. The trained network is capable of detecting various multirotor UAVs in indoor, outdoor and simulation environments. Furthermore, detection results are improved with a Kalman filter, which ensures steady and reliable information about the position and velocity of the target UAV. Keeping the target UAV in the field of view (FOV) and at the required distance is accomplished by a simple nonlinear controller based on a visual servoing strategy. The proposed system achieves real-time performance on the Neural Compute Stick 2, detecting a UAV at 20 frames per second (FPS). The justification and efficiency of the developed vision-based system are confirmed in a Gazebo simulation experiment where the target UAV executes a 3D figure-eight trajectory.
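The role of the Kalman filter in smoothing detections can be illustrated with a minimal sketch. It assumes a one-dimensional constant-velocity motion model and position-only measurements; the function name `kalman_cv_1d` and the values of `dt`, `q` and `r` are illustrative assumptions and are not taken from the paper, whose filter may be structured differently.

```python
def kalman_cv_1d(measurements, dt=0.05, q=1.0, r=4.0):
    """Estimate position and velocity from noisy position measurements.

    Hypothetical parameters: dt is the frame period (0.05 s = 20 FPS),
    q the acceleration noise intensity, r the measurement variance.
    """
    # State [position, velocity], started at the first measurement.
    p, v = measurements[0], 0.0
    # 2x2 covariance, stored as four scalars; velocity is initially unknown.
    P00, P01, P10, P11 = r, 0.0, 0.0, 100.0
    estimates = []
    for z in measurements[1:]:
        # Predict with F = [[1, dt], [0, 1]]: x = F x, P = F P F^T + Q.
        p = p + dt * v
        n00 = P00 + dt * (P10 + P01) + dt * dt * P11 + q * dt**4 / 4
        n01 = P01 + dt * P11 + q * dt**3 / 2
        n10 = P10 + dt * P11 + q * dt**3 / 2
        n11 = P11 + q * dt**2
        # Update with H = [1, 0]: only the position is measured.
        S = n00 + r                 # innovation variance
        K0, K1 = n00 / S, n10 / S   # Kalman gain
        y = z - p                   # innovation
        p += K0 * y
        v += K1 * y
        # P = (I - K H) P
        P00, P01 = (1 - K0) * n00, (1 - K0) * n01
        P10, P11 = n10 - K1 * n00, n11 - K1 * n01
        estimates.append((p, v))
    return estimates
```

Fed with one position sample per frame, the filter returns a smoothed position together with a velocity estimate, which is the kind of information a following controller needs even though the detector itself only reports positions.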

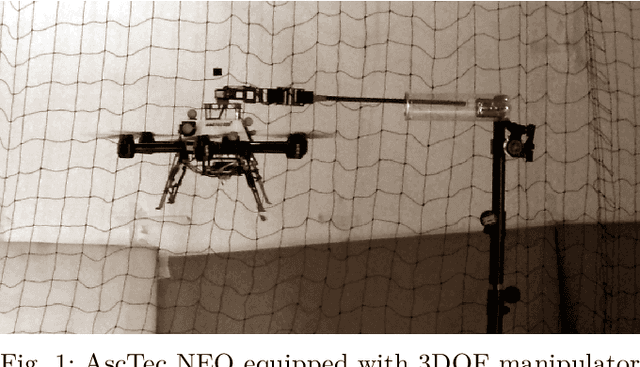

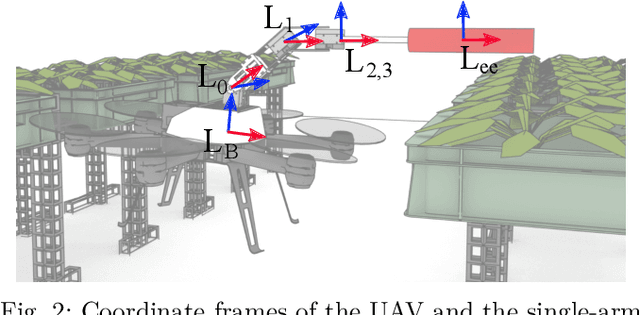

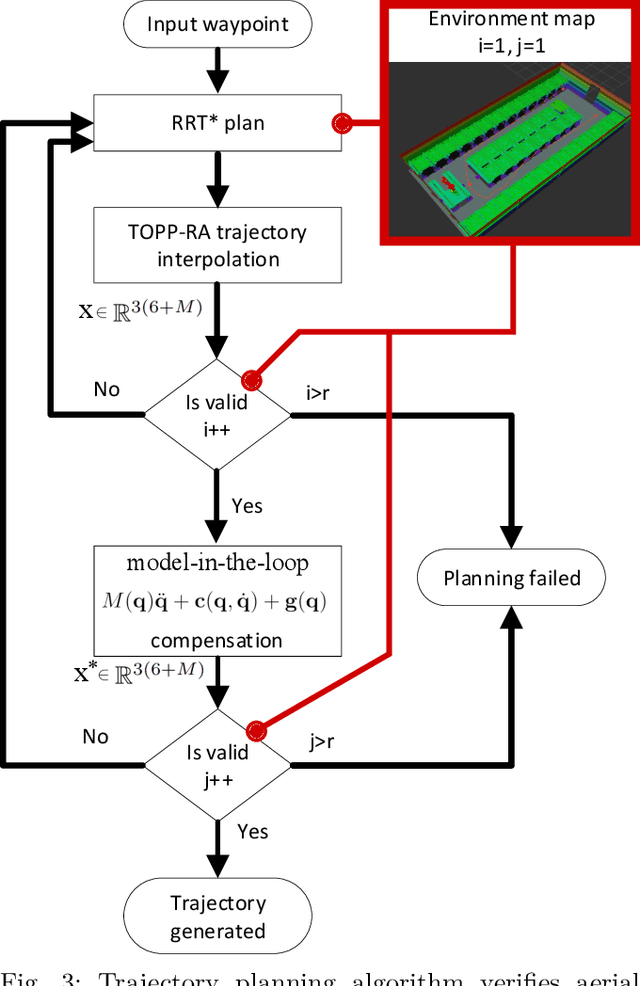

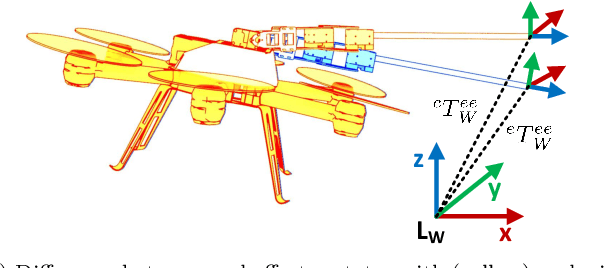

Exploiting Null Space in Aerial Manipulation through Model-In-The-Loop Motion Planning

Apr 28, 2022

Abstract: This paper presents a method for aerial manipulator end-effector trajectory tracking that incorporates the dynamics of the Unmanned Aerial Vehicle (UAV) and the null space of the manipulator attached to it into the motion planning procedure. The proposed method runs in phases. Trajectory planning starts by not accounting for the roll and pitch angles of the underactuated UAV system. Next, we propose simulating the dynamics on such a trajectory and obtaining the UAV attitude through the model. The full aerial manipulator state obtained in this manner is then used to account for discrepancies between the planned and simulated end-effector states. The end-effector pose is then corrected through the null space of the manipulator to match the desired end-effector pose obtained in trajectory planning. Furthermore, we have applied the TOPP-RA approach to the UAV by invoking the differential flatness principle. Finally, we conducted experimental tests to verify the effectiveness of the planning framework.
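As background on the term "null space", the sketch below shows the generic textbook projection for a redundant manipulator: joint motion that lies in the null space of the task Jacobian does not change the task variable. It is not the planner described in the abstract. It assumes a scalar task with a single-row Jacobian, and the function name `null_space_step` and its arguments are hypothetical.

```python
def null_space_step(J, xdot, qdot0):
    """Joint velocities that achieve a scalar task rate xdot.

    J     : row Jacobian of the scalar task (list of n floats)
    xdot  : desired task-space rate
    qdot0 : secondary joint-velocity preference (list of n floats)
    """
    jj = sum(j * j for j in J)  # J J^T, a scalar for a single-row Jacobian
    if jj == 0.0:
        raise ValueError("Jacobian is zero; the task is not controllable")
    # Minimum-norm solution: J^+ xdot, with J^+ = J^T / (J J^T).
    primary = [j * xdot / jj for j in J]
    # Null-space projection: (I - J^+ J) qdot0.
    along_task = sum(j * q for j, q in zip(J, qdot0)) / jj
    secondary = [q - j * along_task for j, q in zip(J, qdot0)]
    return [a + b for a, b in zip(primary, secondary)]
```

Whatever `qdot0` is chosen, the product of `J` with the returned velocities equals `xdot` up to floating-point error, so the secondary motion can be used to adjust the arm configuration without disturbing the task.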

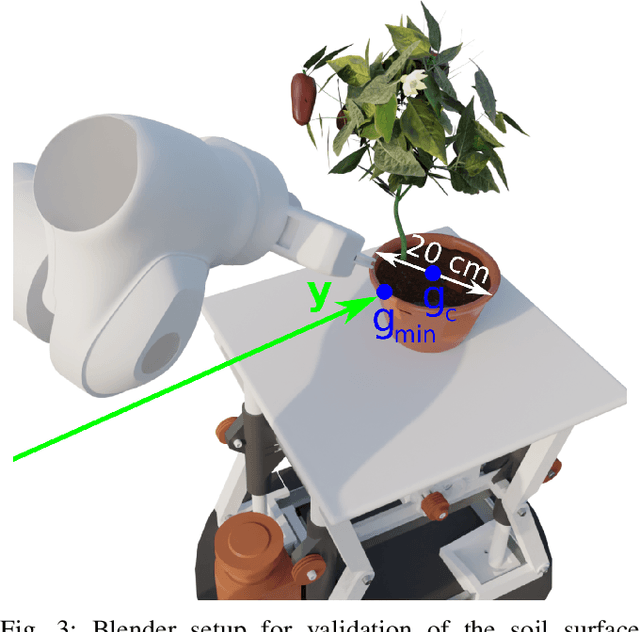

Robotic Irrigation Water Management: Estimating Soil Moisture Content by Feel and Appearance

Jan 19, 2022

Abstract: In this paper we propose a robotic system for Irrigation Water Management (IWM) in a structured robotic greenhouse environment. A commercially available robotic manipulator is equipped with an RGB-D camera and a soil moisture sensor. The two are used to automate the procedure known as the "feel and appearance method", which is a way of monitoring soil moisture to determine when to irrigate and how much water to apply. We develop a compliant force control framework that enables the robot to insert the soil moisture sensor into the sensitive plant root zone of the soil without harming the plant. The RGB-D camera is used to roughly estimate the soil surface in order to plan the soil sampling approach. Used together with the developed adaptive force control algorithm, the camera enables the robot to sample the soil without knowing the exact soil stiffness a priori. Finally, we postulate a deep-learning-based approach that uses the camera to visually assess soil health and moisture content.

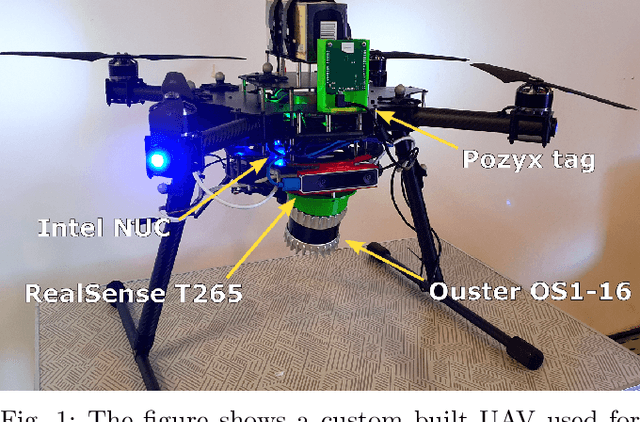

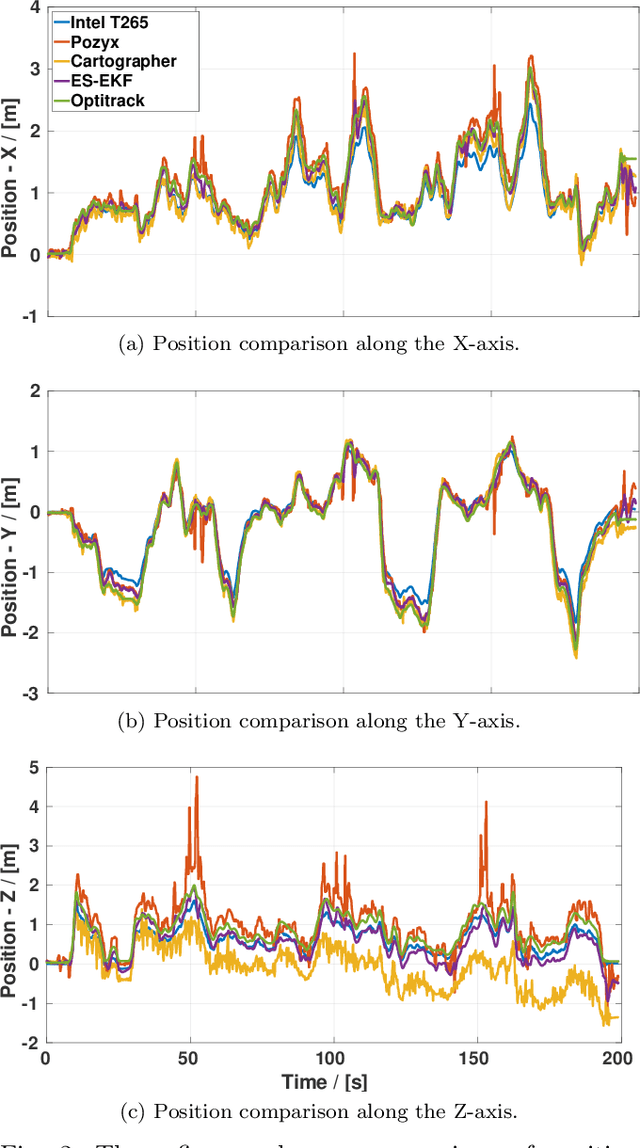

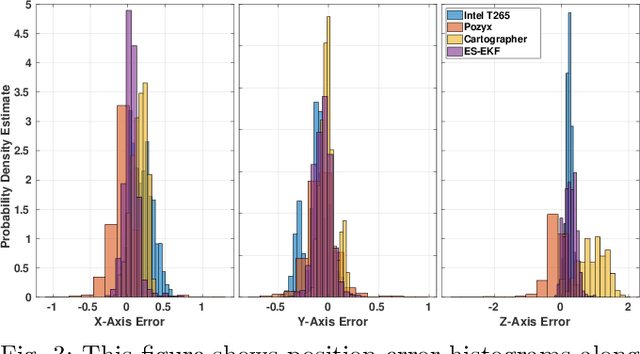

Error State Extended Kalman Filter Multi-Sensor Fusion for Unmanned Aerial Vehicle Localization in GPS and Magnetometer Denied Indoor Environments

Sep 10, 2021

Abstract: This paper addresses the issues of unmanned aerial vehicle (UAV) indoor navigation, specifically in areas where GPS and magnetometer sensor measurements are unavailable or unreliable. The proposed solution is to use an error state extended Kalman filter (ES-EKF) in the context of multi-sensor fusion. Its implementation is adapted to fuse measurements from multiple sensor sources, and the state model is extended to account for sensor drift and possible calibration inaccuracies. Experimental validation is performed by fusing IMU data obtained from the PixHawk 2.1 flight controller with pose measurements from LiDAR Cartographer SLAM, visual odometry provided by the Intel T265 camera and position measurements from the Pozyx UWB indoor positioning system. The estimated odometry from the ES-EKF is validated against ground truth data from the Optitrack motion capture system, and its use in a position control loop to stabilize the UAV is demonstrated.
